Security Now 987 transcript

Please be advised this transcript is AI-generated and may not be word for word. Time codes refer to the approximate times in the ad-supported version of the show.

0:00:00 - Leo Laporte

It's time for Security Now. Steve Gibson is here. I am here in my new attic studio, and we have lots to talk about, including a new attack called Sitting Ducks. Are you a sitting duck? Why? Almost all AMD chips have a critical microcode bug. That is about as bad as you can get. Microsoft decides not to fix a simple Windows bug that can cause a blue screen of death, or worse. And then we'll talk about certificate revocation. It turns out it's a hard computer science problem, and for some reason Let's Encrypt has decided to do it a different way. Stay tuned. Security Now is coming up next.

Podcasts you love, from people you trust. This is TWiT. This is Security Now with Steve Gibson, episode 987, recorded August 13th, 2024: Rethinking Revocation. It's time for Security Now, the show where we cover your security, your privacy, your safety, your online habitation, at all times, with this man right here, Steve Gibson of GRC.com. Hi, Steve. Hello.

0:01:17 - Steve Gibson

Leo, great to be with you again for, now wait for it, the last episode of our 19th year. Holy camoly.

0:01:30 - Leo Laporte

So you're saying that as of next week, which will be the 20th, we'll be in our 20th year.

0:01:36 - Steve Gibson

Our birthday will have been the previous day, Monday, August 19th, of 2005. A day that will live in infamy. Episode number one: Honey Monkeys. Or was that number two? I think that was all of a 15-minute podcast.

0:01:58 - Leo Laporte

Oh yes. It's gotten longer ever since.

0:02:01 - Steve Gibson

Fortunately, we don't have four ads in a 15-minute podcast, or I'd be like, "Uh, Leo, wait, wait, let me get a word in."

0:02:11 - Leo Laporte

No. Well, if you're interested, this is the episode. It's still online. It's 18 minutes and 10 seconds, and it's Security Now episode 1, August 18th.

0:02:20 - Steve Gibson

It says... Oh, wait a minute. Okay, two days. Yes, two days ahead, we will have been that.

0:02:27 - Leo Laporte

Do you want to hear how young we sounded back in the day? First of all, we had the worst music. Can you hear that? I can hear it, but I don't... Oh, it's coming out of the wrong hole. Sorry about that. That's what happens when you get older.

0:02:46 - Steve Gibson

Leo, how many times have I heard that?

0:02:50 - Leo Laporte

Well, anyway, we'll figure that out. Actually, we'll leave it as an exercise for the viewer.

0:02:57 - Steve Gibson

Perfect. It is there if anyone's really dying to know what it was like 20 years ago, or 19.

0:03:03 - Leo Laporte

Wow. It was us kind of staring into the headlights, actually. Yeah, what are we going to do now?

0:03:07 - Steve Gibson

What is this? A whatcast?

0:03:09 - Leo Laporte

What the hell is this?

0:03:10 - Steve Gibson

When you first said, "How would you like to do a weekly podcast?" I said, "A whatcast?"

0:03:15 - Leo Laporte

Well, if you want to go directly there, it's twit.tv/sn1. Wow. It's there for now and forever.

0:03:23 - Steve Gibson

The first Security Now is, for now and forever, the first Security Now. Yes. We'll see how long you leave this online, Leo.

0:03:31 - Leo Laporte

Uh, that remains to be... Oh, you mean like after we retire and all that? Exactly. Yeah, you know, it is an interesting question that people talk about all the time: what happens to your digital life when you pass away? I don't know. Wikipedia pages...

0:03:46 - Steve Gibson

They stay around. You know, yeah, and a lot of what we do is on archive.org, so maybe it'll just live on at archive.org. Well, today's episode is 987, and I like the digits.

We're never going to get to 9876; I think that's asking too much. But 987, that we got. And no one is worried about 999, because we know that we're going to get past it. I will have to spend a little time fixing my software to deal with four digits. That was always true, but, you know, I've got time. I'll make time. Okay, so, a bunch of fun stuff to talk about. It turns out that an estimated million domains are vulnerable to something known as the Sitting Ducks attack.

0:04:38 - Leo Laporte

What is this?

0:04:38 - Steve Gibson

That's not good. You do not want to be a sitting duck. Is it new? Why does it happen, and who needs to worry about it? Also, where the name came from is kind of fun. We'll get to that.

Also, believe it or not, we've got another 9.8 CVSS remote code execution vulnerability, discovered in the Windows Remote Desktop Licensing Service. Unfortunately, some 170,000 of those are publicly exposed. The good news is that it was patched in last month's Patch Tuesday. Today is August's Patch Tuesday.

So if you're a corporation, an enterprise of any kind, that's been delaying updates, and you have the Remote Desktop Licensing Service publicly exposed, at least patch that. You can get the incremental patches from Microsoft if you want. Either turn off the service or patch it. Anyway, we'll talk more about that.

I realize I forgot that I was doing a summary here. This is just the short part. Yes. Also, all of AMD's chips have a critical microcode bug that allows boot-time security to be compromised. It's probably patchable, if they choose to do it, but apparently they're saying they're not really sure they're going to bother. Also, Microsoft apparently decides not to fix a simple Windows bug which will allow anyone to easily crash Windows with a blue screen of death anytime they want. You don't want that in your startup folder. GRC's IsBootSecure freeware is updated; it's now a GUI, a regular Windows app, and almost finished. I'll mention that briefly. And, believe it or not, today's podcast, 987, is titled Rethinking Revocation, because the entire certificate revocation system that the industry has just spent the past 10 years getting to work is about to be scrapped in favor of what never worked before.

0:07:01 - Leo Laporte

Well, at least it never worked before.

0:07:03 - Steve Gibson

Yeah, I think maybe we're just never happy with what we have, and it's like, well, okay, even though that didn't work before, maybe we can make it work now, because what we have today has problems that we don't seem able to fix. Anyway, we're going to talk about that, because everything's about to change.

0:07:57 - Leo Laporte

And, of course, once again, we always have the best sponsors. Our show this hour is brought to you by DeleteMe. Oh, here's a question for you: have you ever searched for your name online? Don't. Do not, I beg of you. It is kind of an eye-opener. You will not like how much personal information is just out there floating around on the public internet. And I should point out that maintaining your privacy is not just a personal issue. If you are a manager in a company, it's a cybersecurity issue for your company. We learned that at TWiT; that's why we have DeleteMe for our CEO. It's also a family affair. Your family members may not be as sophisticated as you, but they're just as exposed, and often relationships between people are also exposed. That's why DeleteMe has family plans, which means you can ensure everyone in the family can feel safe online.

DeleteMe helps reduce the risk from all of the hazards that all that public information poses: identity theft, cybersecurity threats, harassment, and more. I just saw in the news that another data broker got breached, and these are all American customers. This is the amazing thing: something like 2.7 billion data records were leaked from this data broker. So it's not merely that the data broker will sell your information to anybody; they're not security experts, obviously. Hackers leaked 2.7 billion data records of American citizens, including Social Security numbers and all known physical addresses. All of this stuff is online. Now, it impacts your cybersecurity, because bad guys can use this information, things like your cell phone number, your direct reports, your company org chart and all that, to send you very credible spear-phishing attacks. So there's that too.

DeleteMe is the best way to get that stuff off the public net. They will find and remove your information from hundreds of data brokers. These are real human experts in finding this stuff and getting rid of it. And if you get the family plan, it's great: you can assign a unique data sheet to each family member, tailored to what they want online and what they don't want online, right? It doesn't have to be a blanket deletion. The controls are easy; account owners can manage privacy settings for the whole family. Whether it's family, individual, or corporate, DeleteMe will continue to scan and remove that information regularly, and that's important, because as soon as this stuff is deleted... I'm sure this National Public Data, the company that allegedly lost 2.7 billion records, I'm sure that, you know, they...

If you say "delete me," they'll delete you that day, but they continue collecting. How else would you get 2.7 billion records? There are fewer than a billion people in the US. That gives you some idea. They're constantly building up these dossiers on every single person. I'm talking addresses, photos, emails, your relatives (that's why you want to have the family plan), your phone numbers, your social media, your property value, and a whole lot more. Protect yourself and reclaim your privacy. Do what we did; we're glad we did, too. It really works. Go to joindeleteme.com/twit and use the offer code TWIT, and you'll get 20% off. That's a pretty good deal. Thank you, DeleteMe. Now let's get back to Steve and our picture of the week. I'm looking at it, and I don't...

0:11:52 - Steve Gibson

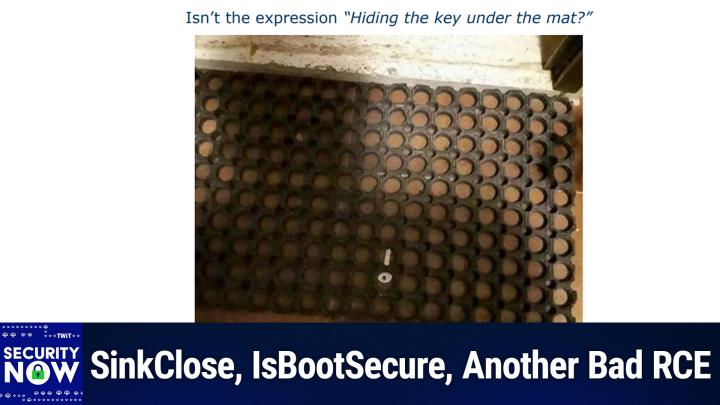

Oh, so, you know, like the welcome mat that you have in front of your front door: it says "Welcome" on it, right? But to do that, you've got to be able to print the word. Now, what we have here looks like a front door mat, and I've seen these before. You might have them in an area that gets a lot of snow, where you'd like to be able to scrape your feet off. So this mat is a grid of holes, which could be useful for scraping stuff off the bottom of your shoes. The problem with this particular instance, however, is that someone thought they'd hide a key under the mat. But, you know, the operative word in the phrase "hiding the key under the mat" is "hiding," and this mat, which is all holes, is a really bad hiding place. It's pretty obvious.

0:13:00 - Leo Laporte

There's a key there. Yeah, it's really obvious. That's great. So, anyway, I hid the key under the mat. Well, I didn't hide it, but it is under the mat.

0:13:12 - Steve Gibson

Another one of those weird what-were-they-thinking pictures that we entertain ourselves with every week here on this crazy podcast. Okay. Every few years, a security researcher rediscovers that many sites' DNS services are not properly configured and authenticated, to no one's surprise until it happens to them. This leads to those misconfigured domains being taken over, meaning that the IP addresses resolved from a domain name are pointed to malicious servers elsewhere. People going to those sites are often none the wiser. The domain name is right, and it looks like the right site; little do they know that they're actually talking to a server in Russia. This generally doesn't happen to major sites, because the problem would be seen and resolved almost immediately. But many large organizations register every typo variation of their primary domain name that they can think of, in order to keep the so-called typosquatters from registering those almost-typed-correctly domains and thus catching people who mistype the actual domain name. That's just one example of where you could have lots of domains where, if something had been broken in the DNS, it wouldn't immediately come to anyone's attention, but someone could still get up to some mischief with it.

As I said, every few years someone rediscovers this. The most recent round of this DNS hijacking surfaced when two security firms, Eclypsium and Infoblox, co-published their discovery. Now, as I said, it really should be considered a rediscovery, which is not to take anything away from them; we know that independent invention happens. And this issue does deserve as much attention as it can get, if for no other reason than that, as an industry, we're obviously still having trouble getting this right. So great, let's run the headlines again. Eclypsium's write-up said that multiple threat actors have been exploiting this attack vector, which they're calling Sitting Ducks, since at least 2019 (actually since 2016, as we'll see in a second, but they wrote 2019) to perform malware delivery, phishing, brand impersonation, and data exfiltration. As of the time of writing, which I think was yesterday, they wrote: "Numerous DNS providers enable this through weak or non-existent verification of domain ownership for a given account." Well, that can't be good. "There are an estimated 1 million exploitable domains, and we have confirmed more than 30,000 hijacked domains since 2019." I did see 35,000 elsewhere. "Researchers at Infoblox and Eclypsium who discovered this issue," okay, rediscovered it, "have been coordinating with law enforcement and national CERTs since discovery in June 2024."

Okay, now, as I said, I'm glad this resurfaced, but we talked about this same issue eight years ago when, on December 5th, 2016, security researcher Matthew Bryant posted his discovery under the title, quote, "120,000 domains via a DNS vulnerability in AWS, Google Cloud, Rackspace and DigitalOcean." I should clarify that those weren't actually taken over; they were susceptible to being taken over. That posting was the second of Matthew's two postings about this problem, and in it he said: "Recently I found that DigitalOcean suffered from a security vulnerability in their domain import system which allowed for the takeover of 20,000 domain names." DigitalOcean, you know, was a major cloud hosting provider early on. He continued, "If you haven't given that post a read," meaning his first one, "I recommend doing so before going through this write-up."

"Originally, I had assumed that this issue was specific to DigitalOcean, but as I've now learned, this could not be further from the truth. It turns out this vulnerability affects just about every popular managed DNS provider on the web. If you run a managed DNS service, it likely affects you too. This vulnerability is present when a managed DNS provider allows someone to add a domain to their account without any verification of ownership of the domain name itself." So, for example, a bad guy adding someone else's domain to their bad-guy account, which, again, why would anybody allow? But okay. "This is actually an incredibly common flow and is used in cloud services such as AWS, Google Cloud, Rackspace and, of course, DigitalOcean."

"The issue occurs when a domain name is used with one of these cloud services and the zone is later deleted without also changing the domain's name servers. This means that the domain is still fully set up for use in the cloud service, but has no account with a zone file to control it." (A set of DNS records is called a zone file.) "In many cloud providers, this means that anyone can create a DNS zone," meaning a set of DNS records, "for that domain and take full control over the domain." Now, I'll just note: this sounds like that recent CNAME vulnerability we talked about, where Microsoft lost control of a CNAME record because they deleted their service from Azure but left DNS pointing to it.

Yes, it's very much the same. What we're seeing is that this rush to do everything in the cloud is having some downstream consequences, because the system really wasn't built for that. But why would that stop us? So Matthew says this allows an attacker to take full control over the domain: to set up a website, issue SSL and TLS certificates, host email, etc. "Worse yet, after combining the results from the various providers affected by this problem, over 120,000 domains were vulnerable," he says, "likely many more." Okay. In the recent reporting, it turns out that Russian hackers have been doing this for years, quietly, behind the scenes, just getting up to what they get up to. This time around, Eclypsium and Infoblox have brought this to everyone's attention again and given it a catchy name: Sitting Ducks. Now, I particularly like this name, since it nicely and accurately captures the essence, which is that a surprising number of domains are, in fact, as we say, sitting ducks. The naming was a bit of a stretch, but it's fun.

The initials DNS (okay, Domain Name System) are repurposed as "Ducks Now Sitting." Right? Thus, Sitting Ducks. That's what DNS stands for, kids. Matthew also responsibly disclosed his discovery to a bunch of cloud providers, the ones he enumerated, where, due to the nature of delegating DNS to them, the trouble is, or was, rampant. Google Cloud DNS at the time had around 2,500 domains affected, and they did what they could. Amazon Web Services' Route 53 DNS (they named it Route 53 because port 53 is the port DNS runs on) had around 54,000 domains affected, and they performed multiple mitigations for the problem after it was brought to their attention. DigitalOcean had the roughly 20,000 domains we talked about earlier at risk, and they worked to fix what they could. And, interestingly, Rackspace felt differently than the others about the problem. They had around 44,000 domains affected, and they said this was not their problem.

Matthew explains how simple it is to take over an abandoned Rackspace domain. He wrote: "Rackspace offers a Cloud DNS service which is included free with every Rackspace account." Oh, well then, let's use the free one, right? "Unlike Google Cloud and AWS Route 53, there are only two name servers, dns1.stabletransit.com and dns2.stabletransit.com, for their Cloud DNS offering, so no complicated zone creation or deletion process is needed. All that needs to be done is to enumerate the vulnerable domains and add them to your account. The steps for this are the following. First, under the Cloud DNS panel, click the Create Domain button and specify the vulnerable domain, a contact email, and a time to live for the domain records. Second, simply create whatever DNS records you'd like for the taken-over domain, and you're done."
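The takeover Matthew describes can be modeled in a few lines. The sketch below is a toy, not any provider's real system: it assumes a hypothetical managed-DNS service whose customers all share one fixed pair of name servers and which, like the flawed flow described above, lets any account create a zone for any domain without proving ownership. The class and function names, the domain `example-typo.com`, the name-server hostnames, and the addresses (taken from the documentation ranges) are all made up for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

# Hypothetical shared name-server pair: every customer's zone is served here.
SHARED_NS: Tuple[str, str] = ("dns1.provider.example", "dns2.provider.example")


@dataclass
class SharedDnsProvider:
    """Toy managed-DNS service. domain -> (owning account, {name: address})."""
    zones: Dict[str, Tuple[str, Dict[str, str]]] = field(default_factory=dict)

    def create_zone(self, account: str, domain: str) -> None:
        # The flaw under discussion: no proof that `account` owns `domain`.
        if domain in self.zones:
            raise ValueError(f"{domain} is already hosted here")
        self.zones[domain] = (account, {})

    def delete_zone(self, account: str, domain: str) -> None:
        if self.zones[domain][0] != account:
            raise PermissionError("not your zone")
        del self.zones[domain]

    def set_record(self, account: str, domain: str, name: str, addr: str) -> None:
        owner, records = self.zones[domain]
        if owner != account:
            raise PermissionError("not your zone")
        records[name] = addr

    def answer(self, domain: str, name: str) -> Optional[str]:
        zone = self.zones.get(domain)
        return None if zone is None else zone[1].get(name)


def resolve(registrar_ns: Dict[str, Tuple[str, str]],
            provider: SharedDnsProvider, domain: str, name: str) -> Optional[str]:
    # Resolvers simply follow the registrar's delegation to the provider;
    # nothing here checks who actually registered the domain.
    if registrar_ns.get(domain) == SHARED_NS:
        return provider.answer(domain, name)
    return None


def sitting_duck_demo() -> Tuple[Optional[str], Optional[str]]:
    provider = SharedDnsProvider()
    registrar_ns = {"example-typo.com": SHARED_NS}  # set once, then forgotten

    provider.create_zone("victim", "example-typo.com")
    provider.set_record("victim", "example-typo.com", "www", "192.0.2.10")
    before = resolve(registrar_ns, provider, "example-typo.com", "www")

    # The victim deletes the zone but never repoints the registration...
    provider.delete_zone("victim", "example-typo.com")
    # ...so any other customer of the same provider can claim the domain.
    provider.create_zone("attacker", "example-typo.com")
    provider.set_record("attacker", "example-typo.com", "www", "203.0.113.66")
    after = resolve(registrar_ns, provider, "example-typo.com", "www")
    return before, after


print(sitting_duck_demo())  # ('192.0.2.10', '203.0.113.66')
```

Note that the provider's own permission checks work fine; the hole is that `create_zone` trusts the first account to ask, while the registrar's delegation, which lives outside the provider, is what actually sends traffic there.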
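Matthew's "enumerate the vulnerable domains" step works just as well for a defender auditing their own portfolio. This is a minimal sketch under stated assumptions: `find_sitting_ducks` and its inputs are hypothetical, the delegation data would in practice come from looking up each domain's NS records, and "has a zone" would really be tested by asking the shared name servers whether they answer authoritatively for the domain. Here both are plain Python data so the logic stays visible.

```python
from typing import Dict, Iterable, List, Set

# The two shared Cloud DNS name servers Matthew named for Rackspace.
SHARED_NS: Set[str] = {"dns1.stabletransit.com", "dns2.stabletransit.com"}


def normalize(ns: str) -> str:
    # DNS names are case-insensitive and may carry a trailing root dot.
    return ns.strip().lower().rstrip(".")


def find_sitting_ducks(delegations: Dict[str, Iterable[str]],
                       zones_with_owner: Set[str]) -> List[str]:
    """Return domains delegated only to the shared name servers but for which
    no account currently holds a zone; anyone could claim these."""
    ducks = []
    for domain, servers in delegations.items():
        ns = {normalize(s) for s in servers}
        if ns and ns <= SHARED_NS and domain not in zones_with_owner:
            ducks.append(domain)
    return sorted(ducks)


# Hypothetical portfolio: one live zone, one abandoned one, one hosted elsewhere.
portfolio = {
    "alpha.example": ["dns1.stabletransit.com", "dns2.stabletransit.com"],
    "beta.example": ["DNS1.stabletransit.com.", "dns2.stabletransit.com."],
    "gamma.example": ["ns1.other.example", "ns2.other.example"],
}
print(find_sitting_ducks(portfolio, zones_with_owner={"alpha.example"}))
# ['beta.example']
```

This only flags domains delegated exclusively to the shared pair. A mixed delegation that lists one shared server alongside others is also risky, since resolvers may query any of the listed servers.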

0:24:07 - Steve Gibson

That's like, ouch.

0:24:08 - Leo Laporte

Yeah, press the easy button on that one.

0:24:10 - Steve Gibson

That's right. "This could be done," he writes, "for any of the 44,000 domain names to take them over. Rackspace does not appear to be interested in patching this (see below). So if you are a Rackspace customer, please ensure you're properly removing Rackspace's name servers from your domain after you move elsewhere." That means from your domain registration, wherever that may be, and I'll explain all that in a second. Matthew's blog posting provides the entire timeline of his interactions with the various providers. In the case of Rackspace, he initially notified them of this problem on September 9th, 2016. They responded on the 12th, so that was good. There was some back and forth for a few months until finally, on December 5th, after he notified Rackspace that he would be disclosing in 30 days, Rackspace replied, quote:

"Thank you again for your work to raise public awareness of this DNS issue. We've been aware of the issue for quite a while. It can affect customers who don't take standard security precautions when they migrate their domains. Whether those customers are hosted at Rackspace or another cloud provider, he or she needs to accomplish that migration before pointing a domain registrar at that provider's name servers. Otherwise, any other customer of that provider who has malicious intentions could conceivably add that domain to his or her account. That could allow the bad actor to effectively impersonate the domain owner, with power to set up a website and receive email for the hijacked domain. We appreciate your raising public awareness of this issue and are always glad to work with you."

In other words: we're not going to do anything about it. Exactly. Good luck to you. So, yes, it's entirely possible for someone to set up their DNS incorrectly, and if they do so, others who share the same DNS name servers, as generally happens within cloud providers, could register and take over their domain. Have a nice day. Now, as I said, if this seems vaguely familiar, it's probably because we recently talked about that somewhat similar DNS record problem with Microsoft and Azure, and that CNAME record pointing somewhere. During today's round of rediscovery of this existing problem, which has been exacerbated by the move to the cloud, Brian Krebs interviewed a guy named Steve Job. (You know, he's still alive, and there's no S on his name, so just Steve Job.) He is the founder and senior vice president of the firm DNS Made Easy, and the interview was conducted by email. Steve Job told Brian Krebs that the problem isn't really his company's to solve. He understands the issue, noting that DNS providers who are not also the domain's registrar have no real way of verifying that a customer actually owns the domain being claimed. He said, "It's my belief that the onus needs to be on the domain registrants themselves. If you're going to buy something and point it somewhere you have no control over, we can't prevent that." So, okay, here's the bottom-line takeaway from all of this, despite all the press it has received, largely because they gave it a great name this time. Every domain's registration, held at its registrar, points to the domain's DNS name servers, which provide the records for that domain.

My registrar, Hover, manages the domain registration for GRC.com and points it to GRC's name servers, since I run my own DNS. Anyone who does that is safe from any of these problems. The other way to be safe is to have one's domain registrar, like Hover, also provide the domain's DNS servers and services. That's the optimal solution for most people. It has the advantage of keeping both the registration pointers and the servers to which they are pointing all in the family.

The danger that Matthew discovered and first raised back in 2016, and which Eclypsium and Infoblox have highlighted again, occurs when a domain's registration points to DNS servers that are shared among tens of thousands of other domains.

If an account in that shared cloud environment is discontinued while the external domain registration is still pointing to the cloud's name servers, anyone else can register that domain in that cloud and start receiving all of its traffic. As I noted before, this is unlikely to be happening with anyone's primary domains, since those tend to be well watched. But low-priority domains, such as the hundreds of excess typosquatting-prevention domains, which might be served from the cloud just to bounce incoming traffic over to the correct domain, could be, dare I say, sitting ducks. So it's just something to be aware of. If your domain is registered in one place and your DNS is elsewhere, you want to be very careful about how you handle repointing your registration. The only safe way to do it is to set up DNS where you're moving to first, and only then change your registration records to point to the new DNS. At no time do you want your registration's name servers pointed somewhere you do not have DNS.
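Steve's ordering rule can be stated as an invariant: after every step, the provider your registration points to must hold a zone for you. The sketch below checks a migration plan against that invariant. The plan format, the action names, and `plan_is_safe` are hypothetical, and the model ignores DNS caching; in practice resolvers may keep using the old name servers until the old delegation's TTL expires, so it is common to keep the old zone running for a while after repointing.

```python
from typing import List, Set, Tuple

# Hypothetical plan format: (action, provider), with actions
# "create_zone", "delete_zone", and "repoint" (change the registrar's NS).
Step = Tuple[str, str]


def plan_is_safe(plan: List[Step], start_provider: str) -> bool:
    """Return False if, after any step, the registration points at a provider
    where we hold no zone: the window in which a domain is a sitting duck."""
    delegated_to = start_provider
    our_zones: Set[str] = {start_provider}
    for action, provider in plan:
        if action == "create_zone":
            our_zones.add(provider)
        elif action == "delete_zone":
            our_zones.discard(provider)
        elif action == "repoint":
            delegated_to = provider
        else:
            raise ValueError(f"unknown action: {action!r}")
        if delegated_to not in our_zones:
            return False
    return True


good = [("create_zone", "new"), ("repoint", "new"), ("delete_zone", "old")]
bad = [("delete_zone", "old"), ("create_zone", "new"), ("repoint", "new")]
print(plan_is_safe(good, "old"), plan_is_safe(bad, "old"))  # True False
```

The `bad` plan is exactly the scenario Rackspace described: closing the old zone first leaves the registration pointing at shared servers with nobody's zone behind it, even if only briefly.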

0:31:31 - Leo Laporte

So I'm confused, because I do this all the time, and when they say "migration," I don't understand what that means. My domain name company is Hover, right, like yours. But I often want the DNS to be hosted by FastMail. So what I do is go into the DNS settings at Hover and make sure the DNS servers it lists are FastMail's servers.

0:32:02 - Steve Gibson

So you have an MX record in Hover?

0:32:05 - Leo Laporte

It's everything. With Hover, you can go into the full DNS, or you can just say, well, who's your DNS provider? And you say, "My DNS provider is FastMail," at which point none of the other DNS settings apply. FastMail has the CNAME, has the MX, has the A record, everything, right? Right. So where's the risk? That's what I'm worried about, because I do that all the time.

0:32:31 - Steve Gibson

So what that would mean is that your Hover domain registration has, at the root of it, two name servers, right, which are something, and presumably it would be something.fastmail.com.

0:32:58 - Leo Laporte

Yeah. dns1.fastmail.com, dns2.fastmail.com.

0:33:01 - Steve Gibson

Right, okay. So the danger would be if your account at FastMail ever went away. Oh, I see. You'd moved it somewhere else, right, but without repointing the registration. Yes, exactly. So your registration would still be pointing to FastMail, even though you no longer had DNS service from FastMail, and that would allow someone else to swoop in, register an account, and say, "You know, this is my domain."

0:33:40 - Leo Laporte

Because there's no authentication when I'm pointing over to FastMail?

0:33:45 - Steve Gibson

Right. FastMail relies on you to point to them. They're not responsible for verifying the ownership of the domain that they're hosting. They assume that you must own it because you pointed it at them; they assume your ownership because you were able to point it to them.

0:34:04 - Leo Laporte

Exactly. So if you point it and forget, and move your account on, then you're in trouble. Okay, then you would be a sitting duck. Well, and I do this with Cloudflare as well, because, again, Hover's my registrar, but sometimes I want things hosted on Cloudflare. So I will point the servers. Now, the nice thing about Cloudflare, and I'm sure they do this right: FastMail really only has two servers, one and two. With Cloudflare, it's a different server every time that you point to. They have many, many DNS servers, right? So does that help?

0:34:36 - Steve Gibson

That helps... it makes taking it over... Well, it's a good question, because Matthew specifically said that Rackspace only had two specific name servers.

0:34:49 - Leo Laporte

So that's what raised my interest, because that's the same as FastMail.

0:34:53 - Steve Gibson

Yeah, but however Cloudflare has things set up, normally your domain registration is just a pair of name servers.

0:35:03 - Leo Laporte

It is, but it's a different.

0:35:04 - Steve Gibson

Different, but it's like a serialized... Ah, then that would probably be good, because that suggests it's unguessable, that they're not all clustered under one destination server somewhere. Right, that makes sense. So it sounds like it has your account name, you know, built into the name server name. I would expect Cloudflare to do it right. Yeah, yeah.

Speaking of doing it right, Leo, we're 30 minutes in. Let's take a pause, and then, believe it or not, we have another 9.8 remote code execution vulnerability, courtesy of Microsoft. Oh no.
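Leo's question about whether many name servers help can be made concrete under one assumed scheme: a provider gives each newly created zone its own random subset of a large name-server pool and answers for that zone only on those servers. An attacker who creates a zone for an abandoned domain then gets a fresh random set that almost certainly won't match the servers the registration still names. This is an illustrative model, not a description of how Cloudflare, Route 53, or any other provider actually assigns servers; `assign_ns`, `match_probability`, the pool size, and the subset size are all hypothetical.

```python
import math
import random
from typing import FrozenSet, List


def assign_ns(pool: List[str], k: int, rng: random.Random) -> FrozenSet[str]:
    """Give a newly created zone its own random k-subset of the server pool
    (a hypothetical assignment scheme)."""
    return frozenset(rng.sample(pool, k))


def match_probability(pool_size: int, k: int) -> float:
    """Chance that one new zone lands on exactly the k servers a dangling
    registration still points to: 1 / C(pool_size, k)."""
    return 1 / math.comb(pool_size, k)


# Two fixed shared servers (the Rackspace case): every new zone "matches".
print(match_probability(2, 2))  # 1.0
# 200 servers, 4 per zone: about 1 chance in 64.7 million per attempt.
print(match_probability(200, 4))

pool = [f"ns{i}.provider.example" for i in range(200)]
rng = random.Random(2024)
print(sorted(assign_ns(pool, 4, rng)))
```

In this model it's per-zone uniqueness, not the raw number of servers, that helps: a provider with thousands of servers that handed every customer the same pair would be no safer than the two-server case.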

0:35:44 - Leo Laporte

Oh yes. Oh no. All right, thank you, Steve. They did patch it, but you've got to update. Wow. All right.

Well, this is why, if you're wondering, we have so many great security sponsors on this show: it's a scary world out there. This episode of Security Now is brought to you by BigID. They are the leading DSPM solution. DSPM is Data Security Posture Management, but BigID does it a little bit differently. DSPM centers around risk management and how organizations need to assess, understand, identify, and remediate data security risks across their data, right? And this is part of the problem: nowadays your data might be on-prem, in the cloud, multi-homed, in all sorts of locations. BigID solves this. They seamlessly integrate with your existing tech stack, whatever it may be, and let you coordinate security and remediation workflows across that entire stack.

You can use BigID to uncover dark data, data you didn't even know you had, something sitting on some server somewhere, right? Identify and manage risk everywhere, remediate however you choose to (it's important to have that flexibility), and scale your data security strategy. You can take action on data risks by annotating, deleting, quarantining, and more, based on the data, and, very important, all while maintaining an audit trail. That's a big deal for compliance, right? So many people use BigID. It's been the not-so-well-kept secret weapon of ServiceNow and Palo Alto Networks. Microsoft uses BigID, Google uses BigID, AWS; those are all partners. With BigID's advanced AI models, you can reduce risk, accelerate time to insight, and gain visibility and control over all your data.

Here's a great testimonial: BigID equipped the United States Army. Now, imagine the US Army: they've got databases everywhere, they've got data everywhere. Thanks to BigID, they were able to illuminate dark data, accelerate cloud migration, minimize redundancy, and automate data retention. This is the quote from US Army Training and Doctrine Command: "The first wow moment with BigID came with just being able to have that single interface that inventories all the varieties of data holdings, structured and unstructured data, across emails, zip files, SharePoint, databases, and more. To see that mass of data, to be able to correlate across all those different endpoints, is completely novel. I've never seen a capability that brings us together like BigID does." Not bad.

CNBC recognized BigID as one of the top 25 startups for the enterprise. BigID was named to the Inc. 5000, and BigID has been in the Deloitte 500 two years in a row. They're the leading modern data security vendor in the market today. I'll give you one more quote, from the publisher of Cyber Defense Magazine:

"BigID embodies three major features we judges look for to become winners: understanding tomorrow's threats today, providing a cost-effective solution, and innovating in unexpected ways that can help mitigate cyber risk and get one step ahead of the next breach." You need this. Start protecting your sensitive data wherever your data lives. At bigid.com/securitynow you can get a free demo to see how BigID can help your organization reduce data risk and accelerate the adoption of generative AI.

That's a big part of this picture too, right? Because you want to train your AI on your data, but not all your data. You want to know what it's looking at, and you want to be able to set rules for that. BigID does that too. That's bigid.com/securitynow, B-I-G-I-D dot com slash security now. When you get there, you'll also find a bunch of white papers that can really help you understand this very fast-changing landscape. There's a new report that gives you some valuable insights and key trends on AI adoption challenges and the overall impact of generative AI across organizations, but that's just one of many. Again, bigid.com/securitynow. We thank them so much for their support of Steve and the good work he does here on Security Now. Steve?

0:40:26 - Steve Gibson

Did I mention that this zero-day exploit has a proof of concept released publicly?

0:40:37 - Leo Laporte

I may have missed that. That makes it really dangerous, right? Yeah?

0:40:42 - Steve Gibson

When you're a Microsoft shop, you see headlines which read, "Exploitable proof of concept released for a zero-click remote code execution..."

0:40:53 - Leo Laporte

Oh, it's zero-click. You didn't mention that either.

0:40:55 - Steve Gibson

Zero-click, yes, because we wouldn't want to put anyone out by asking them to click their mouse to be taken over.

It says, yeah, "zero-click RCE that threatens all Windows servers." Now, okay, my heart skipped a beat, because I am a Microsoft shop, and it's like, from Windows 2000 all the way through 2025, all of them. But the good news is I'm not exposing anything, so then I went, oh, okay, I'm okay. If your organization is publicly exposing the Windows Remote Desktop Licensing Service, RDL, then it's hopefully not too late for you to do something about it. Researchers have detailed and published proof-of-concept exploit code for a critical CVSS 9.8 vulnerability being tracked as CVE-2024-38077. It's referred to as MadLicense. This 9.8 vulnerability impacts all iterations of Windows Server, from 2000 through 2025. And it's not even 2025 yet, it's 2024. In other words, all of them.

And if your service is publicly exposed, that's as bad as it gets. It's a pre-authentication remote code execution vulnerability that allows attackers to seize complete control of a targeted server without any form of user interaction. So it's remote and zero-click and affects all Windows servers which are exposing the Windows Remote Desktop Licensing Service. This RDL service is responsible for managing licenses for Remote Desktop Services, as its name suggests, and it's consequently deployed across many organizations. Security researchers have identified a minimum of 170,000 RDL services directly exposed to the Internet, which renders them all readily susceptible to exploitation. And I, you know, at some point I'll get tired of saying that it just is not safe to expose any Microsoft service except web, because there you have no choice. But, you know, put it behind some sort of protection: a VPN, some extra filtering of some sort, port knocking, anything. But don't just have a Microsoft service on the public Internet. One after the other, they are found to be vulnerable, and people are being hurt. Anyway, the good news is... well, actually it's not the good news, but in terms of limiting the total number, 170,000, not good. But the nature of this is that it's going to be larger enterprises which are exposed, and unfortunately, those are the tasty targets. Okay, so this so-called MadLicense vulnerability arises from a simple heap overflow. By manipulating user-controlled input, attackers can trigger a buffer overflow which leads to arbitrary code execution.
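To make the bug class concrete: a heap overflow of this kind typically comes from copying as many bytes as an attacker-supplied length field claims into a buffer that can't hold them. Below is a minimal Python sketch of the defensive pattern, assuming a hypothetical 4-byte little-endian length prefix. It is not the RDL service's actual wire format, and the function name is made up for illustration. Python won't actually overflow memory, so the point is the two checks, which is what C or C++ code handling the same input would need.

```python
import struct


def read_length_prefixed(data: bytes, capacity: int) -> bytes:
    """Copy a length-prefixed payload into a fixed-size buffer, safely.

    Hypothetical format: a 4-byte little-endian length, then the payload.
    """
    if len(data) < 4:
        raise ValueError("truncated header")
    (declared_len,) = struct.unpack_from("<I", data, 0)

    # The overflow bug class: trusting declared_len. Check it against
    # the destination buffer AND the bytes actually present.
    if declared_len > capacity:
        raise ValueError(f"declared length {declared_len} exceeds capacity {capacity}")
    if declared_len > len(data) - 4:
        raise ValueError("declared length exceeds available data")

    buf = bytearray(capacity)  # stands in for the fixed-size heap buffer
    buf[:declared_len] = data[4:4 + declared_len]
    return bytes(buf[:declared_len])
```

In vulnerable native code, the first check is the one that's missing: the copy simply runs past the end of the allocation.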

That code runs within the context of the RDL service. Researchers have successfully demonstrated a proof-of-concept exploit on Windows Server 2025, achieving a near 100% takeover success rate. The exploit effectively circumvents all contemporary mitigations. There was some detail that I didn't want to drag everyone through about the so-called advanced protection mitigations the latest Server 2025 has. This just ignores them. So, while the proof of concept demonstrated the vulnerability's exploitation on Windows Server 2025, the researchers emphasized that the bug could be exploited more quickly and efficiently on older versions of Windows Server, and of course there are lots of those, and those have fewer mitigations in place. The proof of concept was designed to load a remote DLL. Wow. But the researchers noted that, with slight modifications, it could execute arbitrary shellcode provided by the attacker within the RDL process, making the attack even stealthier, meaning that the infected system would not be reaching out onto the Internet to obtain and download that malicious DLL, which might give it away. So just, you know, provide your own shellcode to do what you need.

The researchers did responsibly disclose the vulnerability to Microsoft a month before going public, and while Microsoft patched the flaw in last month's Patch Tuesday, meaning July's Patch Tuesday, the fact that it was initially marked (Lord knows why) as "exploitation less likely" highlights the potential for underestimating such threats. You know, Microsoft would like to say, oh, don't worry about that. But, wow, they should be protecting their customers. It doesn't seem like they are in this instance. So anyone who's not actively using and depending upon this Remote Desktop Licensing Service, but does have it publicly exposed, should definitely shut it down immediately. And if, for any reason, you're a month behind on updates, you should seriously consider at least applying the patch for this one. As I mentioned at the top of the show, today is August's Patch Tuesday, so now there are two months' worth of patches, both of which fix this. There is a proof of concept posted on GitHub, so we know it's not going to be long before the bad guys are attacking any unpatched network, and we know there will be some, for reasons which defy belief or understanding.

Okay, this next bit of news would have been today's main topic if I didn't think it was also important and very interesting to catch up on what's been happening in the important and still-troubled world of certificate revocation, following last week's DigiCert mass revocation event and my own revoked.grc.com server event, which we'll catch up on. It turns out that, in researching this further, I found that everything we've been doing for certificate revocation over the past 10 years is about to change again. Okay, so we'll get to that. For now, let's talk about the big runner-up this week, which was dubbed Sinkclose by the two security researchers who discovered it. We've just been talking about Secure Boot and how AMI's never-meant-to-be-shipped sample platform key was indeed shipped in as many as 850 different makes and models of PCs.

0:48:34 - Leo Laporte

You mean the one that said don't trust this key.

0:48:36 - Steve Gibson

Yes. Do not ship, do not trust. And if you look at the certificate for it, it says that right there on the front page. It's like, okay, well, I guess no one looked at that. Another thing that's not good is when two researchers discover a fundamental vulnerability in AMD's processors (and by that I mean nearly all of AMD's processors) which allows for the subversion of this same Secure Boot process regardless of what key its platform is using. Well, that makes it easier. And AMD is a popular processor, as we know. I have one right over here. Uh-huh.

Okay, so Wired covered this discovery and gave it the headline "Sinkclose flaw in hundreds of millions of AMD chips allows deep, virtually unfixable infections." Now, that's a bit of an exaggeration, but in fairness, we seem to be encountering similar sky-is-falling headlines these days, almost weekly. So what's going on? Their subhead was not any more encouraging. It read, "Researchers warn that a bug in AMD's chips would allow attackers to root into some of the most privileged portions of a computer, and that it has persisted in the company's processors for decades."

Okay, so I'm going to first share a somewhat trimmed-down version of what Wired wrote, because we have a more sophisticated audience, but then we'll see where we are. So Wired explained: "Security flaws in firmware have long been a target for hackers looking for a stealthy foothold. But only rarely does that kind of vulnerability appear not in the firmware of any particular computer maker, but in the chips found across hundreds of millions of PCs and servers. Now security researchers have found one such flaw that has persisted in AMD processors for decades, and that would allow malware to burrow deep enough into a computer's memory that, in many cases, it may be easier to discard a machine than to disinfect it." I'm not discarding my game machine.

Hold on there, buddy. At the DEF CON hacker conference, which is underway right now, researchers from the security firm IOActive, which we've spoken of many times in the past, plan to present a vulnerability in AMD chips they're calling Sinkclose. The flaw allows hackers to run their own code in one of the most privileged modes of an AMD processor, known as System Management Mode. That's even below ring zero. That's like the code that keeps the lights on in the motherboard even when nothing else is running. Wired wrote that it's designed to be reserved only for a specific protected portion of its firmware. IOActive's researchers warn that it affects virtually all AMD chips dating back to 2006, or possibly earlier. The researchers, Nissim and... oh, I've practiced pronouncing this before, but it's like, it's O-K-U-P-S-K-I.

0:52:28 - Leo Laporte

Oh sure, that's Okupski. Okupski, Okupski.

0:52:33 - Steve Gibson

Reminds me of Shaboopi. But no, Okupski.

0:52:37 - Leo Laporte

The key on all of these is, if you say it in a Boris Badenov accent, it works. You can get away with anything.

0:52:56 - Steve Gibson

You say Okupski. So Nissim and Okupski note that exploiting the bug would require hackers to have already obtained relatively deep access to an AMD-based PC or server, but that the Sinkclose flaw would then allow them to plant their malicious code far deeper still. In fact, for any machine with one of the vulnerable AMD chips (and again, essentially everybody who has AMD chips is vulnerable), the IOActive researchers warn that an attacker could infect a computer with malware known as a bootkit that evades antivirus tools and is potentially invisible even to the operating system, while offering a hacker full access to tamper with the machine and surveil its activity. For systems with certain faulty configurations in how a computer maker implemented Platform Secure Boot, which the researchers warn encompasses a large majority of the systems they tested, a malware infection installed via Sinkclose could be harder yet to detect or remediate, they say, surviving even a reinstallation of the operating system. Right, we talked about this. It's down in the firmware that boots the system. It is a bootkit. Okupski said, quote, "Imagine nation-state hackers, or whoever wants to persist in your system. Even if you wipe your drive clean, it's still going to be there. It's going to be nearly undetectable and nearly unpatchable." Okupski said only opening a computer's case, physically connecting directly to a certain portion of its memory chips with a hardware-based programming tool known as an SPI flash programmer, and then meticulously scouring the memory would allow the malware to be removed. And of course, you'd have to know what was expected there, because normally no motherboard provider is going to let you have what should be in that flash chip.

Nissim sums up that worst-case scenario in more practical terms, quote, "You basically have to throw your computer away." Unquote. In a statement to Wired, AMD acknowledged IOActive's findings, thanked the researchers (silently cursing them), and noted that it has, quote, "released mitigation options for its AMD EPYC data center products and AMD Ryzen PC products, with mitigations for AMD embedded products coming soon." And Wired said the term "embedded" in this case refers to AMD chips found in systems such as industrial devices and autos. For its EPYC processors designed for use in data center servers specifically, the company noted that it released patches earlier this year, because the researchers gave them plenty of time.

AMD declined to answer questions in advance about how it intends to fix the Sinkclose vulnerability, or for exactly which devices and when, but it pointed to a full list of affected products that can be found on its website's security bulletin page, and I do have a link to that in the show notes. In a background statement to Wired, AMD emphasized the difficulty of exploiting Sinkclose. That is, you know, they're trying to keep everyone from hyperventilating. They said that to take advantage of the vulnerability, a hacker has to already possess access to a computer's kernel, the core of its operating system. AMD compares the Sinkclose technique to a method for accessing a bank's safe deposit boxes after already bypassing its alarms, the guards, and the vault door. On the other hand, Ocean's Eleven. Nissim and Okupski respond that while exploiting Sinkclose does require kernel-level access, attackers already have ways of exploiting those vulnerabilities, known or unknown. Nissim said, quote, "People have kernel exploits right now for all these systems. They exist and they're available for attackers. This is the next step." In other words, this is what they've been waiting for.

Nissim and Okupski's Sinkclose technique works by exploiting an obscure feature of AMD chips known as TClose. The Sinkclose name comes from combining the TClose term with Sinkhole, the name of an earlier System Management Mode exploit found in Intel chips back in 2015. In AMD-based machines, a safeguard known as TSEG prevents the computer's operating system from writing to a protected part of memory meant to be reserved for System Management Mode, known as System Management Random Access Memory, or SMRAM. AMD's TClose feature, however, is designed to allow computers to remain compatible with older devices that use the same memory addresses as SMRAM, remapping other memory to those SMRAM addresses when it's enabled. In other words, when you do that, you're no longer able to access the underlying memory, because you've mapped other memory on top of it, and so that's what you now access. But there's a problem with that. Nissim and Okupski found that with only the operating system's level of privileges, they could use that TClose remapping feature to trick the System Management Mode code into fetching data they've tampered with, in a way that allows them to redirect the processor and cause it to execute their own code at the same highly privileged System Management Mode level. Okupski said, quote, "I think it's the most complex bug I've ever exploited." And speaking of complex, I've avoided his first name because it doesn't have any vowels and I can't even begin to pronounce it.
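The TClose problem is easier to see in a toy model. The Python sketch below is a deliberately simplified illustration of the remapping idea as described above, not AMD's actual hardware: the address range, the single `tclose` flag, and the `smm_dispatch` helper are all hypothetical. With the remap off, a TSEG-style check keeps the OS away from SMRAM; with it on, the same addresses resolve to ordinary memory the OS controls, so SMM code that trusts a pointer "in SMRAM" reads attacker data instead. In the real attack, turning the remap on is the step that requires kernel privileges.

```python
from __future__ import annotations


class ToyMemory:
    """Toy model of SMRAM protection plus a TClose-style remap.

    Hypothetical throughout: not AMD's real address map or register layout.
    """

    SMRAM = range(0x1000, 0x2000)  # hypothetical protected range
    HANDLER_PTR = 0x1800           # where SMM keeps a value it trusts

    def __init__(self) -> None:
        self.smram: dict[int, str] = {}  # only SMM should reach this
        self.dram: dict[int, str] = {}   # ordinary memory the OS controls
        self.tclose = False              # the remap switch

    def _backing(self, addr: int, in_smm: bool) -> dict[int, str]:
        if addr in self.SMRAM and not self.tclose:
            if not in_smm:
                # TSEG-style check: the OS may not touch SMRAM
                raise PermissionError(f"OS access to SMRAM address {addr:#x}")
            return self.smram
        # Outside SMRAM, or remap enabled: ordinary memory answers instead
        return self.dram

    def write(self, addr: int, value: str, in_smm: bool = False) -> None:
        self._backing(addr, in_smm)[addr] = value

    def read(self, addr: int, in_smm: bool = False) -> str | None:
        return self._backing(addr, in_smm).get(addr)

    def smm_dispatch(self) -> str | None:
        # SMM code assumes this address still means its private SMRAM
        return self.read(self.HANDLER_PTR, in_smm=True)
```

Usage: write a legitimate value from "SMM", confirm the OS is blocked, then set `tclose = True` and write from the OS side; `smm_dispatch()` now returns the OS-written value, which is the essence of the redirection the researchers describe.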

Nissim and Okupski, both of whom specialize in the security of low-level code like processor firmware, said they first decided to investigate AMD's architecture two years ago simply because they felt it had not gotten enough scrutiny compared to Intel (which, of course, we've over-scrutinized, if anything), even as its market share has risen. They found the critical TClose edge case that enabled Sinkclose, they said (get this), just by reading and rereading AMD's documentation. Nissim said, quote, "I think I read the page where the vulnerability was about a thousand times, and then on the 1,001st, I noticed it." They alerted AMD to the flaw back in October of last year, but have waited nearly 10 months to give AMD ample time to prepare a fix, because AMD needs to change the microcode in all of the affected chips in order to fix this flaw in TClose. So, for users seeking to protect themselves, Nissim and Okupski say that for Windows machines, likely the vast majority of affected systems, they expect patches for Sinkclose to be integrated into updates shared by computer makers with Microsoft, who will roll them into future operating system updates. Patches for servers, embedded systems, and Linux machines may be more piecemeal and manual. For Linux machines, it will depend in part on the distribution of Linux a computer has installed.

Nissim and Okupski say they agreed with AMD not to publish any proof-of-concept code for their Sinkclose exploit for several additional months, now that this has everyone's attention, in order to provide more time for the problem to be fixed. But they argue that any attempt by AMD or others to downplay Sinkclose as too difficult to exploit (well, everyone listening to this podcast knows better than that) should not prevent users from patching as soon as possible. Sophisticated hackers may already have discovered their technique. Right? I mean, we don't know that somebody else didn't study those pages and go, wow, there's a problem here. Or they could figure out how after Nissim and Okupski present their findings at DEF CON. Again, it's now public; now they know there's a problem there. Even if Sinkclose requires relatively deep access, the IOActive researchers warn, the far deeper level of control it offers means that potential targets should not wait to implement any fix available.

Nissim finished: "If the foundation is broken, then the security for the whole system is broken." So, as I said, I've got a link in the show notes. It's like all the chips that AMD makes. As we know from when we covered this relative to the need for Intel to patch their microcode, Windows is able to, and fortunately does, patch the underlying processor microcode on the fly at boot time. It's somewhat annoying that there isn't anything more definitive from AMD about what's patched and what isn't, and when what isn't will be, since this really is a rather big and important vulnerability. It allows anything that's able to get into the kernel to drill itself right down into the firmware and live there permanently, period. But we may need to wait a few months until the IOActive guys are able to disclose more, and hopefully they'll produce a definitive test for the presence of the patch. Or someone will create a benign test for the vulnerability, which would be very useful. So, wow.
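Until such a test exists, one rough data point on Linux is the `microcode` field in `/proc/cpuinfo`, which reports the revision each logical CPU is actually running after any load at boot. The sketch below parses that text and compares it against a minimum revision. The minimum is a placeholder you would have to take from AMD's security bulletin for your exact CPU family and model, and some platforms receive their fix as a firmware (BIOS) update rather than a microcode load, so passing this check is not proof of mitigation.

```python
import re

# Matches lines like "microcode\t: 0x..." in Linux's /proc/cpuinfo on x86.
_MICROCODE_RE = re.compile(r"^microcode\s*:\s*(0x[0-9a-fA-F]+)\s*$", re.MULTILINE)


def microcode_revisions(cpuinfo_text: str) -> set:
    """Return the distinct microcode revisions reported across logical CPUs."""
    return {int(m.group(1), 16) for m in _MICROCODE_RE.finditer(cpuinfo_text)}


def meets_minimum(cpuinfo_text: str, min_revision: int) -> bool:
    """True only if every logical CPU reports at least min_revision."""
    revs = microcode_revisions(cpuinfo_text)
    if not revs:
        raise ValueError("no 'microcode' field found (not Linux on x86?)")
    return min(revs) >= min_revision
```

Usage would be along the lines of `meets_minimum(open("/proc/cpuinfo").read(), MIN_REVISION)`, where `MIN_REVISION` is a hypothetical constant you fill in from the bulletin.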

1:04:28 - Leo Laporte

Another bad, bad biggie.

1:04:32 - Steve Gibson

And Leo, we're at one hour, so let's take a break. And then we're going to look at, okay, the most bizarre thing imaginable: a trivial-to-reproduce blue screen of death, allowing anyone who wants to crash Windows to do so, about which Microsoft said, well, it didn't happen to us, so what are you talking about? And they're not fixing it.

1:05:01 - Leo Laporte

Okay. Yeah, your mileage may vary, I guess. I'm glad you're here. We're glad you're watching Security Now with Steve Gibson. We do it every Tuesday. It's kind of the day of the week that most security professionals wait for all week long, to find out what's going on in the world of security, and today's show is no exception. Wow, holy cow. Our show today is brought to you by Vanta. I have to say, we have the best sponsors on this show.

Whether you're starting or scaling your company's security program, it's become very clear that demonstrating top-notch security practices and establishing trust with your customers is more important than ever, and that's why you need Vanta. Vanta automates compliance for SOC 2, for ISO 27001, and more, saving you time, saving you money, helping you build customer trust. Plus, you can streamline security reviews by automating questionnaires and demonstrating your security posture with a customer-facing trust center. Really nice looking, too, all powered by Vanta AI. I guess that kind of explains why over 7,000 global companies like Atlassian, Flo Health, and Quora use Vanta to manage risk and prove security in real time. Get $1,000 off Vanta right now when you go to vanta.com/securitynow. That's V-A-N-T-A, vanta.com/securitynow, for $1,000 off. We thank Vanta so much for supporting the Security Now program and the good work Steve is doing to save us all from ourselves and the bad guys on the Internet. This one's wild.

1:06:52 - Steve Gibson

Okay. So we have yet another example (we've unfortunately had a few) of Microsoft apparently deliberately choosing not to fix what appears to be a somewhat significant problem. This allows anyone to maliciously crash any recent and fully patched Windows system, and you know, that would seem to be significant. Yet Microsoft doesn't appear to think so, or they can't be bothered. This all came to light just yesterday, and the Dark Reading site has a good take on it.

They wrote that a simple bug in the Common Log File System driver (that's CLFS, so it's CLFS.sys) can instantly trigger the infamous blue screen of death across any recent version of Windows. CLFS, they write, is a user- and kernel-mode logging service (which is why any user can do it) that helps applications record and manage logs. It's also a popular target for hacking, and if you Google CLFS.sys, you'll see it's had a troubled history. So you'd think Microsoft would go, oops, again. Apparently not. They write: while experimenting with its driver last year, a Fortra researcher discovered an improper validation of specified quantities of input data which allowed him to trigger system crashes at will. His proof-of-concept exploit worked across all versions of Windows tested, including 10, 11, and Windows Server 2022, even on the most up-to-date systems. The associate director of security R&D at Fortra said, "It's very simple. Run a binary, call a function, and that function causes the system to crash." He added, "I probably shouldn't admit to this, but in dragging and dropping it from system to system today, I accidentally double-clicked it and crashed my server."

That sounds like something I'd do. CVE-2024-6768 concerns base log files (BLFs), a type of CLFS file that contains metadata used for managing logs. Ah, that's an interpreter. The CLFS.sys driver, it seems, does not adequately validate the size of data within a particular field in the BLF. Okay, so right. So this is a metadata file which is used to control the CLFS, and it's assuming the metadata is correct, because why wouldn't it be? Who would ever tamper with that? Right. Any attacker with access to a Windows system can craft a file with incorrect metadata size information, which will confuse the driver. Then, unable to resolve the confusion, it triggers KeBugCheckEx, the function that triggers the blue screen of death.
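This is a classic case of trusting a size field inside a file. As an illustration of the kind of check that's missing, here's a Python sketch that loads a record from a hypothetical metadata header (a magic value, an offset, and a size; this is not the real BLF layout) and validates every field against the actual file before using it. Python wouldn't crash on an out-of-range slice anyway, but a kernel driver that performed the equivalent checks could reject a malformed file with an error instead of calling KeBugCheckEx.

```python
import struct
from dataclasses import dataclass

# Hypothetical header layout (NOT the real BLF format): magic, offset, size.
HEADER = struct.Struct("<4sII")
MAX_RECORD = 64 * 1024  # hypothetical sanity limit


@dataclass
class Record:
    offset: int
    size: int
    payload: bytes


def load_record(blob: bytes) -> Record:
    """Parse one record, validating every metadata field against the file."""
    if len(blob) < HEADER.size:
        raise ValueError("file shorter than its header")
    magic, offset, size = HEADER.unpack_from(blob, 0)
    if magic != b"TOYL":
        raise ValueError("bad magic")
    # Don't assume the metadata is honest; check it against reality.
    if offset < HEADER.size:
        raise ValueError("record overlaps the header")
    if size > MAX_RECORD:
        raise ValueError(f"record size {size} exceeds limit {MAX_RECORD}")
    if offset + size > len(blob):
        raise ValueError("record extends past end of file")
    return Record(offset, size, blob[offset:offset + size])
```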

Crash. This CVE is of medium badness, right? It's a 6.8 out of 10 on the CVSS scale. It doesn't affect the integrity or confidentiality of data, nor cause any kind of unauthorized system control (although it probably could ground Delta Airlines, when you think about it). It does, however, allow for wanton crashes that can disrupt business operations or potentially cause data loss. Or, as Fortra's associate director of security R&D explains, quote, "It could be paired with other exploits for greater effect. It's a good way for an attacker to maybe cover their tracks or take down a service where they otherwise shouldn't be able to, and I think that's where the real risk comes in." He says, "These systems reboot unexpectedly. You ignore the crash because it came right back up, and oh look, it's fine now. But that might have been somebody hiding their activity, hiding the fact that they wanted the system to reboot so that it could load some new settings or have them take effect."

Fortra first reported (get this) its findings last December 20th, five days before Christmas. Here's a present for you, Microsoft. After months of back and forth, Fortra said that Microsoft closed their investigation without acknowledging it as a vulnerability or applying a fix. Thus, as of this writing yesterday, it persists in Windows systems, no matter how updated they are. In recent weeks, Windows Defender has been identifying Fortra's proof of concept, which apparently they provided to Microsoft, as malware. Oh, so don't do that, but let's not fix the problem. But besides running Windows Defender and trying to avoid running any binary that exploits it, there's nothing organizations can do about CVE-2024-6768 until Microsoft releases a patch. Dark Reading has reached out to Microsoft for its input, but has heard nothing back. Okay, so get a load of this timeline, which Fortra published in their vulnerability disclosure report. December 20th, 2023: reported to Microsoft with a proof-of-concept exploit. So, hey, Microsoft, I know it's close to Christmas, but here's some code that crashes everything. Just thought you should know.

January 8th: Microsoft responded that their engineers could not reproduce the vulnerability. I wonder what version of Windows they're using? January 12th, four days later: Fortra provided a screenshot showing a version of Windows running the January Patch Tuesday updates, meaning, okay, we patched in January; here's the memory dump after it crashes. February 21st: Microsoft replied that they still could not reproduce the issue and they were closing the case. A week later, February 28th: Fortra reproduced the issue again with the February Patch Tuesday updates installed and provided additional evidence, including a video of the crash condition. Maybe they should just put it on YouTube. Okay, so that's February 28th.

Now we fast-forward to June 19th, 2024. Fortra followed up to say that they intended to pursue a CVE and publish their research. Almost a month goes by. July 16th: Fortra shared that it had reserved CVE-2024-6768 and would be publishing soon. August 8th: reproduced on the latest updates (that's the July Patch Tuesday, last month's Patch Tuesday) of Windows 11 and Server 2022, to produce screenshots to share with the media. August 12th, yesterday, is the CVE publication date. This is the same company, meaning Microsoft, that wants to continuously record everything every Windows PC user does while using Windows, while assuring us that our entire recorded history will be... No, thank you. Oh, and I did see on GitHub that, with this flaw, what was a Windows crash has now evolved into an elevation of privilege. See, isn't that what happens?

1:15:32 - Leo Laporte

That's why you can never say, oh, it's just a crash. Yep. Because the next step after the crash is, well, now we can control a system.

1:15:41 - Steve Gibson

Now we have an unprivileged user... Any apparently unprivileged user is able to elevate themselves to full root.

1:15:50 - Leo Laporte

And that's how it always progresses. Yep. That's why you take that stuff seriously. Yep. Crazy. Okay.

1:15:58 - Steve Gibson

So, um, as we know, two weeks ago we covered the news from Binarly that an estimated 10% of PCs had shipped with, and were still currently using and relying upon, AMI's sample demonstration UEFI platform keys, which had never been intended to be shipped into production. Blah, blah, blah, Secure Boot isn't secure. Binarly named it PKfail. The following Friday, I saw that it was not easy for anyone to determine whether they had these keys.

By last Tuesday I had a Windows console app finished and published, and I said I would have the graphical version this week, and I do. There's a problem with foreign-language installs of Windows. I know how to fix it; I just didn't have time before the podcast to do so, so I'm going to after the podcast today. So the first release is there. It works for everybody running the English version of Windows. It kind of works for everybody else in the world, but not the way I want it to. So there's a cosmetic problem that I'm going to fix.

So IsBootSecure is available for download from GRC. You're able to run it, and it tells you immediately what your situation is: are you BIOS or UEFI? If you're UEFI, is Secure Boot enabled or not? And if it's enabled, it'll show you your cert. Many BIOSes will show you your cert even if Secure Boot is not enabled. Not that it matters, but still, it's nice to know. Some BIOSes don't. So it tells you that either way, and if it is able to get the cert, it'll show it to you and allow you to browse around and look at the information in it. And if, for some reason, you want to write it to a file, you're able to export the cert from your firmware using IsBootSecure as well. So, anyway, a cute little freebie, and case closed. We'll see how this evolves over time.
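The heart of the PKfail question is simple text inspection of the platform key certificate. Assuming you've already pulled the PK certificate's subject and issuer out of firmware (IsBootSecure can export the cert, as described above), here's a minimal Python sketch of that check. The marker strings come from the "do not trust, do not ship" wording discussed on the show, and the function name is made up for illustration; Binarly's own detection may look at more than this, so treat it as a first-pass heuristic.

```python
# Marker strings from the "do not trust / do not ship" wording in AMI's sample key.
UNTRUSTED_MARKERS = ("DO NOT TRUST", "DO NOT SHIP")


def looks_like_sample_pk(subject: str, issuer: str = "") -> bool:
    """Heuristic: flag a platform key whose subject/issuer says not to trust or ship it."""
    # Normalize case and collapse whitespace so "Do  not trust" still matches.
    text = " ".join(f"{subject} {issuer}".upper().split())
    return any(marker in text for marker in UNTRUSTED_MARKERS)
```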

1:18:11 - Leo Laporte

So is it a real graphical interface, or is it just text drawing?

1:18:17 - Steve Gibson

No, no, it's a dialog. Yeah, in fact, if you go to IsBootSecure.htm, you'll see two sample dialogs, and you can bring it up on our screen while we're talking about it: grc.com/isbootsecure. And it ought to... Do I have to type the .htm, or? No, you don't, because I put that on for you.

1:18:40 - Leo Laporte

How does he do that? There it is.

1:18:44 - Steve Gibson

Yeah, yeah. And so it shows you whether or not it's enabled (nice), and whether or not you've got good keys or keys that say do not trust, do not ship.

1:18:58 - Leo Laporte

Yeah, and what is it? Yeah?

1:19:01 - Steve Gibson

Is it 3,300 downloads so far? Wow.

1:19:04 - Leo Laporte

Yeah, it's taken off nicely, and it's only just begun.

1:19:08 - Steve Gibson

Yeah, and what's really cool is that I did the mailing to Security Now listeners this morning. 8,145 of our listeners received the email hours ago, and I've already had feedback from them.

1:19:33 - Leo Laporte

So even before the podcast is happening, we're getting feedback on the podcast. You've got a good group, I have to say. Yeah, we really do. It's really impressive. If you're not on the email list, go to grc.com/email, and it's actually got two benefits. You can sign up for emails, but you can also validate your own address so that, from then on, you can email Steve. So yes, exactly. And boy, did I get feedback when what I'm about to tell you happened. Oh yeah, I can't wait.

Well, let's talk about revocation the new way, the way the cool kids are doing it, in just a second with Steve Gibson and Security Now. Our show today is brought to you by 1Password. We had such good news a few months ago when our regular longtime sponsor, Kolide, announced, hey, we just got acquired by 1Password, and now you've got the two together in a very nice match. They call it Extended Access Management. It takes the password management, the security of 1Password (that's for validation that you are who you say you are), and then marries it with the amazing tools that Kolide provides to make sure that every user is running secure software on a secure device. See, here's the problem. Your end users don't always work on company-owned devices, right? They're not only using IT-approved apps, right? In fact, very often it's shadow IT. They're bringing their own stuff. So now the question is, how do you keep your company's data safe when it's sitting on all those unmanaged apps and devices? And that's where Extended Access Management from 1Password comes in. 1Password Extended Access Management is the first security solution that brings all those unmanaged devices, apps, and identities under your control in a way that users prefer, that doesn't impinge on their productivity. They actually like it.

1Password's Extended Access Management ensures every user credential is strong and protected, every device is known and healthy, and every app is visible. It solves problems that traditional IAM and MDM can't touch. They like to use this analogy; I like it too. If you've ever been on a college campus, you've seen the beautiful quadrangle with the manicured lawn and the brick buildings and those lovely brick paths from building to building. That's your company's security, right? The company-owned devices, the IT-approved apps, the managed employee identities: everything's clean, everything's secure. Then there are the paths people actually use, the BYOD devices, the shadow IT, the shortcuts that go from point A to point B. This is actually the shortest distance. People aren't dumb. Unfortunately, those unmanaged devices, shadow IT apps, and non-employee identities like contractors are where the problems almost always lie. Most security tools only work on the happy little brick paths, but you get so many more security problems on the shortcuts. So you need 1Password Extended Access Management.

1Password Extended Access Management. It's security for the way people actually work, and it's a new world. Every one of our employees is working at home now; I bet you have a lot of remote employees as well. 1Password Extended Access Management is available now to companies with Okta, and it's coming later this year to Google Workspace and Microsoft Entra. That's really good news. Check it out at 1Password.com/securitynow. That's the number 1, P-A-S-S-W-O-R-D dot com slash security now. We thank them so much for supporting Steve Gibson and Security Now. Kolide's been a great sponsor forever, and we're glad to have 1Password there too. It's a fantastic team. Thank you, 1Password and Kolide.

1:23:22 - Steve Gibson

1Password.com/securitynow. I like that logo, the circle with the little keyhole.

1:23:30 - Leo Laporte

Yeah, I think that's been theirs for a long time, but I may be wrong.

1:23:49 - Steve Gibson

But yeah, I like it too. All right, let's hear about this revocation revolt.

… underscore onto the front of randomly generated 32-character subdomain names, which are being used in DNS CNAME records to create proof of control over those domains. So you would say to DigiCert, hey, I want a certificate for Jimmy's Tire Works. And they'd say, okay, here's a blob, a random string; add this to Jimmy's Tire Works' DNS, and when you have done that, let us know. We will query for that record with that random string, and if we find it, that means you actually have control of Jimmy's Tire Works' DNS, and we're going to give you a certificate. So that's proof of control over a domain.
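The DNS proof-of-control step described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not DigiCert's actual procedure: a dictionary stands in for the DNS zone the CA would query, and the CNAME target `dcv.ca.example`, the lowercase-alphanumeric token alphabet, and the function names are all hypothetical. Only the underscore prefix and the 32-character random label come from the discussion.

```python
import secrets
import string

# Hypothetical CNAME target the CA asks the applicant to point at.
# The real value and record format are CA-specific; this is illustrative only.
CA_CNAME_TARGET = "dcv.ca.example"

# Assumed alphabet for the random token (DNS labels are case-insensitive).
TOKEN_ALPHABET = string.ascii_lowercase + string.digits


def make_challenge_name(domain: str) -> str:
    """Build the record name the applicant must create:
    an underscore, a random 32-character token, then the domain."""
    token = "".join(secrets.choice(TOKEN_ALPHABET) for _ in range(32))
    return f"_{token}.{domain}"


def domain_control_proven(zone: dict[str, str], challenge_name: str) -> bool:
    """Stand-in for the CA's DNS lookup: `zone` maps CNAME owner names to
    their targets. Control is shown only if the challenge name exists and
    points where the CA asked."""
    return zone.get(challenge_name) == CA_CNAME_TARGET


if __name__ == "__main__":
    name = make_challenge_name("jimmystireworks.example")
    zone: dict[str, str] = {}
    print(domain_control_proven(zone, name))  # False: record not added yet
    zone[name] = CA_CNAME_TARGET              # the applicant edits their DNS
    print(domain_control_proven(zone, name))  # True: the CA finds the record
```

The randomness is what makes this a proof: only someone who can edit the domain's DNS can publish a name nobody could have guessed in advance.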

At the end of that discussion last week, we talked about the historical mess of certificate revocation, and I reminded everybody about GRC's revoked.grc.com test website, which I originally created back in 2014, 10 years ago, when this podcast covered this topic in depth.

To bring the revoked.grc.com site back to life for last week's podcast, the previous week, on Thursday, August 1st, I had created and then immediately revoked a brand-new certificate for the revoked.grc.com web server. And, as we all know, five days later, on August 6th, one week ago today, during last week's podcast, Leo and I and all of our online listeners at the time were verifying that, sure enough, that site, which was serving a certificate that had been revoked five days earlier (Ivan Ristić's SSL Labs site verified it was revoked; Leo, you brought that up while we were on the air), was still being happily displayed by everyone's browsers of every ilk, on every platform, even those that were set to use OCSP, the Online Certificate Status Protocol. As luck would have it, the next day I began receiving email from our listeners informing me that none of their various web browsers would any longer display the revoked.grc.com site.

1:26:44 - Leo Laporte

Yeah, I just tried it on Arc on Mac. What do you know? And this is what you want to see, right?

1:26:52 - Steve Gibson

That's a bad certificate, buddy. It is revoked. And it even says there NET::ERR_CERT_REVOKED. So for everybody, it was showing as an untrusted site with a revoked certificate. Okay, what happened? Yeah, one day, overnight. What happened is that five days before the podcast, when I was bringing this up, with DigiCert's help I created a valid certificate for the domain revoked.grc.com and installed it on its server. Then I surfed over to that site with my browser to verify that everything was good: the server was up, the page came up, and everything was fine. GRC's server examined its own certificate and found DigiCert's Online Certificate Status Protocol server's URL, which is http://ocsp.digicert.com. And note that the URL actually is HTTP, because the service behind this URL has no need for any protection from spoofing, since its responses are signed by the certificate authority, or for privacy. It's http://ocsp.digicert.com.

Upon finding that URL, five days before the podcast, GRC's revoked server queried DigiCert's OCSP server for the current status of this brand-new certificate. And because I was testing the server to make sure the certificate was installed and working properly, I had not yet revoked it. So DigiCert's OCSP response was to return a short-lived, seven-day, time-stamped certificate attesting to the fact that this bright and shiny new certificate was as valid as the day it was born. GRC's revoked server stored that brand-new OCSP certificate in a local cache, and from then on, every time anyone's web browser attempted to connect to revoked.grc.com, GRC's server would not only send out its own revoked.grc.com certificate, but stapled to that certificate was DigiCert's OCSP certificate, having a valid expiration date of August 8th, one week from the date it was first asked to produce an OCSP certificate for the revoked.grc.com site. So having seen that everything looked good and was working as I expected, I returned to DigiCert and immediately manually revoked the brand-new certificate. I checked DigiCert's own site status page for the revoked.grc.com domain, and it confirmed revocation. I went over to Ivan Ristić's excellent ssllabs.com facility and verified that it was very unhappy with the revoked.grc.com site. So everything looked fine. But GRC's server was still stapling to its revoked certificate the original, not-yet-expired, pre-revocation OCSP certificate it had received from DigiCert the first time it was asked.

Whoops. And just as today's web servers are now stapling OCSP certificates to their own certificates, today's web browsers are now relying upon those stapled OCSP certificate assertions, since it means that they do not need to look any further. They don't need to bother making their own independent queries to the certificate authority to check on the current status of the certificate, because there's a freshly stapled, short-life certificate from that certificate authority saying yes, the certificate is good, except when it's not. As a consequence, last Tuesday everyone's browser going to the revoked.grc.com website received not only the recently minted revoked.grc.com certificate that was valid for the next 13 months, but also a valid and unexpired OCSP assertion stapled to that certificate, stating that, as of the date of the OCSP certificate, the revoked.grc.com certificate was valid and in good standing. So no one's normally configured web browser would or did look any further. Here was a recent, fresh, unexpired assertion signed by DigiCert themselves stating that all's good with the cert.
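The client-side shortcut just described, trusting a fresh staple without asking the certificate authority, can be modeled as a small Python sketch. It is a simplified, hypothetical policy, not any browser's actual code: `StapledResponse` and `browser_accepts` are invented names, and the `this_update`/`next_update` fields only loosely mirror the freshness window an OCSP response carries. The dates reproduce the revoked.grc.com timeline.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class StapledResponse:
    """Simplified stand-in for an OCSP response stapled to a server's certificate."""
    status: str            # "good" or "revoked", as of this_update
    this_update: datetime  # when the CA produced this status
    next_update: datetime  # after this moment the response is stale


def browser_accepts(staple: Optional[StapledResponse], now: datetime) -> str:
    """Hypothetical client policy: a fresh staple is taken at its word,
    with no independent query to the certificate authority."""
    if staple is not None and staple.this_update <= now < staple.next_update:
        return "accept" if staple.status == "good" else "reject"
    return "no usable staple: fall back to another check"


if __name__ == "__main__":
    utc = timezone.utc
    # The staple GRC's server obtained before the certificate was revoked.
    pre_revocation_staple = StapledResponse("good",
                                            datetime(2024, 8, 1, tzinfo=utc),
                                            datetime(2024, 8, 8, tzinfo=utc))
    # On podcast day the staple is unexpired, so the revoked cert is accepted.
    print(browser_accepts(pre_revocation_staple, datetime(2024, 8, 6, tzinfo=utc)))
```

The staple says nothing about what happened after `this_update`, which is exactly the window in which the revoked certificate kept working.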

A day before its cached copy of that original OCSP certificate was due to expire, GRC's revoked server reached out to DigiCert to update its cached OCSP staple. Web servers that do OCSP stapling typically begin asking for an OCSP status update well before their existing OCSP certificate is due to expire, so that they're never caught flat-footed without one and are certain to obtain a replacement from their certificate authority well before the current one expires. That typically happens a day before, which in this case was six days after the certificate's creation. Last Wednesday, GRC's revoked server learned that the certificate it was serving had been revoked, so it got out its stapler and replaced the soon-to-expire original OCSP certificate with the new one, carrying the news to everyone who was asking from that point on. So what we've all just witnessed is a perfect example of a pretty good compromise. Yeah, it worked. Well, it worked, and it wasn't immediate, but it was, you know, six days. Yeah, yeah. Okay.
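The server side of that six-day lag can be reproduced with a toy stapling cache in Python. This is a sketch, not GRC's server or any real web server's code: `StaplingServer`, `simulated_ca`, and the exact hours are hypothetical. The seven-day response lifetime and the refresh about a day before expiry come from the description above; real servers choose their own refresh policies.

```python
from datetime import datetime, timedelta, timezone
from typing import Callable, Optional, Tuple

VALIDITY = timedelta(days=7)        # OCSP responses in this story were good for seven days
REFRESH_MARGIN = timedelta(days=1)  # refresh about a day before expiry, as described

Staple = Tuple[str, datetime, datetime]  # (status, this_update, next_update)


class StaplingServer:
    """Toy model of a web server's OCSP stapling cache; not any real server's code."""

    def __init__(self, ca_status_at: Callable[[datetime], str]) -> None:
        # ca_status_at(t) returns what the CA's OCSP responder would say at time t.
        self.ca_status_at = ca_status_at
        self.cached: Optional[Staple] = None

    def staple_for(self, now: datetime) -> Staple:
        """Return the staple sent alongside the certificate, refreshing the cache
        only when it is missing or within REFRESH_MARGIN of its next_update."""
        if self.cached is None or now >= self.cached[2] - REFRESH_MARGIN:
            self.cached = (self.ca_status_at(now), now, now + VALIDITY)
        return self.cached


if __name__ == "__main__":
    utc = timezone.utc
    created = datetime(2024, 8, 1, 10, tzinfo=utc)  # assumed time of first OCSP fetch
    revoked_at = created + timedelta(hours=1)       # assumed time of manual revocation

    def simulated_ca(t: datetime) -> str:
        return "revoked" if t >= revoked_at else "good"

    server = StaplingServer(simulated_ca)
    for day in range(7):
        now = created + timedelta(days=day)
        print(now.date(), server.staple_for(now)[0])
    # Aug 1 through Aug 6 print "good"; Aug 7 prints "revoked"
```

Because the first fetch happened before the revocation, every visitor until the day-six refresh was handed the cached "good" answer.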

So to see what I mean by that, let's turn the clock back 10 years to see how we got here. We first looked at all this 10 years ago, in 2014, on this podcast. At that time, the concept of OCSP stapling existed, but it was not yet widely deployed. I recall talking about it wistfully, since it really did seem like an ideal solution, but it still wasn't widely supported. Our longtime listeners will recall that OCSP services themselves did exist 10 years ago, but they were not used by default. As we know, OCSP stands for Online Certificate Status Protocol, and the idea behind it is elegantly simple. Any certificate authority that's issuing certificates runs servers at a public URL that's listed in every certificate they sign, so anyone can find it from within the certificate and query the certificate authority's OCSP service right then and there to obtain up-to-the-moment information about any specific domain's certificate. And I'll just note that if browsers were doing that today, instead of relying on the stapled and potentially up-to-a-week-old OCSP certificate, everyone's browser would have complained immediately after I revoked the site's certificate. But browsers aren't doing that now. They're relying on stapling when it exists. So what the OCSP service returns is a time-stamped, short-lived, signed-by-the-certificate-authority attestation of the current status of the queried certificate. It creates, as its name says, an online certificate status protocol.
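What a real-time query looks like on the wire can be sketched with Python's standard library. RFC 6960 (Appendix A.1) allows an OCSP request to be sent as an HTTP GET to the responder URL followed by the URL-encoded base64 of the DER-encoded request. The function name `ocsp_get_url` is hypothetical, and the bytes below are a placeholder, not a valid OCSPRequest; a real request is built by an X.509 library and identifies the certificate by its issuer hashes and serial number. Note that this whole URL travels over plain HTTP.

```python
import base64
from urllib.parse import quote


def ocsp_get_url(responder_url: str, der_request: bytes) -> str:
    """Build an OCSP GET URL in the form RFC 6960 (Appendix A.1) describes:
    the responder URL, a slash, then the URL-encoded base64 of the
    DER-encoded OCSPRequest."""
    b64 = base64.b64encode(der_request).decode("ascii")
    # base64 can contain '+', '/' and '=', so percent-encode everything.
    return responder_url.rstrip("/") + "/" + quote(b64, safe="")


if __name__ == "__main__":
    # Placeholder bytes only: not a real OCSPRequest.
    placeholder_der = bytes([0x30, 0x03, 0x02, 0x01, 0x00])
    print(ocsp_get_url("http://ocsp.digicert.com", placeholder_der))
    # http://ocsp.digicert.com/MAMCAQA%3D
```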

At the time, 10 years ago, we played with Firefox's settings, which could cause Firefox to query certificate authorities' OCSP servers in real time. But there were a couple of problems with this. One problem was that OCSP services back then were still not very reliable and could be slow to respond. So a browser visits the website the visitor wants to go to, receives the site's TLS (back then SSL) certificate, examines that certificate to obtain its issuing certificate authority's OCSP server URL, and queries that URL for a real-time verification of the certificate's validity. Even if the OCSP server responds quickly, a cautious web browser should wait before doing anything with the website's page, since the presumption is that the certificate it was served might be bogus. Making sure is, after all, the reason for the OCSP double-check. So this could introduce a significant delay in the user's experience that no one wants.

And what if no one is home at the OCSP service? What if the CA is having a brief outage, or they're rebooting their service or updating it, or who knows what? Does the web browser just refuse to connect to a site that it cannot positively verify? The goal, of course, is to never trust an untrustworthy site, but given that nearly all checked certificates will be good, and that only the very rare certificate will have been revoked before it expires on its own, it seems wrong to refuse to connect to any site whose certificate authority's OCSP service doesn't respond or is slow.

10 years ago, even when a web browser had been configured to check OCSP services, unless it was forced to do otherwise, it would generally fail open, meaning it would default to trusting any site that it was unable to determine should definitely not be trusted. So one clear problem was the trouble that real-time certificate verification created. Recall that back then Google was on a tear; they were frantic about the performance of their web browser, Chrome, which was still kind of new. The last thing they were going to do was anything that might slow it down in any way. So they created these bogus CRLSets, and I called them out on it because they were saying, oh yeah, this works, and, as I said, it doesn't.
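The fail-open versus fail-closed dilemma can be made concrete with a small Python model. This is a hypothetical decision function, not any browser's actual logic: the responder is modeled as a callable that returns `(seconds_to_answer, status)` or raises `ConnectionError` when no one is home, and the timeout value is an arbitrary assumption. An answer slower than the timeout is treated as no answer, which is what a browser that refuses to wait effectively does.

```python
from typing import Callable, Tuple

# Hypothetical responder model: returns (seconds_to_answer, status) or
# raises ConnectionError when the OCSP service is unreachable.
Responder = Callable[[], Tuple[float, str]]


def revocation_decision(responder: Responder, timeout_s: float, fail_open: bool) -> str:
    """Decide whether to load a site after a real-time OCSP check.
    Returns 'load' or 'block'."""
    try:
        latency, status = responder()
    except ConnectionError:
        status = None  # no one is home at the OCSP service
    else:
        if latency > timeout_s:
            status = None  # too slow: the browser stopped waiting
    if status == "good":
        return "load"
    if status == "revoked":
        return "block"
    # No usable answer: soft-fail trusts the site, hard-fail refuses it.
    return "load" if fail_open else "block"


if __name__ == "__main__":
    def slow_but_honest() -> Tuple[float, str]:
        return 5.0, "revoked"  # the truth arrives, but too late

    print(revocation_decision(slow_but_honest, timeout_s=2.0, fail_open=True))   # load
    print(revocation_decision(slow_but_honest, timeout_s=2.0, fail_open=False))  # block
```

The first call shows why failing open undermines the check: a revoked certificate still loads whenever the responder is slow or unreachable.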

1:39:38 - Leo Laporte

Yeah, we talked about this. Oh yeah, we really want that.

1:39:43 - Steve Gibson

Yeah, it was a number of podcasts, and it came back a few times. OK, but another problem was the privacy implications this brought to the web browser's user. If every site someone visits causes their browser to reach out to remote, certificate-authority-operated OCSP servers, then anyone monitoring OCSP traffic, which, as I mentioned, has always been unencrypted HTTP and still is today, could track the sites users were visiting. You'd get pretty much the complete list. Yeah.