Security Now 985 transcript

Please be advised this transcript is AI-generated and may not be word for word. Time codes refer to the approximate times in the ad-supported version of the show

0:00:00 - Leo Laporte

It's time for Security Now. Steve Gibson is here. The post-mortem on the CrowdStrike flaw. Actually, CrowdStrike explained how it happened. I think you'll enjoy Steve's reaction to that. Firefox is apparently not doing what it says it's doing when it comes to tracking cookies. We'll talk about that. And then a flaw that makes nearly 850 different PC makes and models insecure. It's all coming up next on Security Now. Podcasts you love, from people you trust. This is TWiT. This is Security Now with Steve Gibson, episode 985, recorded Tuesday, July 30th, 2024: Platform Key Disclosure. It's time for Security Now, the show where we cover the latest news on the security front. And boy, there's always a lot of news on the security front. With this guy right here. He is our security weatherman, Mr. Steve Gibson. Hi, Steve.

0:01:11 - Steve Gibson

And the outlook is cloudy.

0:01:14 - Leo Laporte

Chance of disaster.

0:01:16 - Steve Gibson

Yes, yes. Remember duck and cover? Well, anyway, we did not have a new disaster since 10 days ago, when we had one that pretty much made the record books. But we do have a really interesting discovery, and what's even more worrisome is that it's a rediscovery. Today's podcast is titled Platform Key Disclosure, for Security Now number 985, this glorious last podcast of July and the second to the last podcast, the penultimate podcast, where you are in the studio, Leo, rather than in your new attic bunker.

0:02:08 - Leo Laporte

Can an attic be a bunker, though, really? Oh, that's a good point. I think I'm actually more exposed.

0:02:16 - Steve Gibson

You'll be the first to go. But really sometimes you think maybe that's the best right.

0:02:22 - Leo Laporte

Oh, I always think that maybe that's the best, right? Yeah. I know the worst thing is a prolonged, slow, agonizing, suffering death, what we know as life, or life as it's known. Yes, that's the worst thing.

0:02:37 - Steve Gibson

Especially when you're a CIO and you're, well, anyway. So we've got a bunch of stuff to talk about. We've got, of course, the obligatory follow-up on the massive CrowdStrike event. How do CrowdStrike users feel? Are they switching or staying? How does CrowdStrike explain now what happened, and does that make any sense? How much blame should they receive? We've also got an update on how Entrust will be attempting to retain its customers and keep them from wandering off to other certificate authorities. With Firefox, no one understands exactly what's going on, but it appears not to be blocking third-party tracking cookies when it claims to be. Also, we're going to look at how hiring remote workers can come back to bite you in the you-know-what.

0:03:29 - Leo Laporte

Oh, don't tell me that. That's all remote workers now. Yeah, yeah, yeah, yeah.

0:03:34 - Steve Gibson

A security company got a rude awakening, and we learned something about just how determined North Korea is to get into our britches. Also, did Google really want to kill off third-party cookies? Or are they maybe actually happy about what happened? And is there any hope of ending abusive tracking? Auto-updating anything is obviously fraught with danger. We just survived some. Why do we do it, and is there no better solution? And what serious mistake did a security firm discover that compromises the security of nearly 850 PC makes and models? And I'll be further driving home the point of why, despite Microsoft's best intentions (assuming that we agree they have only the best of intentions; I know that opinions differ widely there), they can't keep Recall data safe. Because it's not necessarily their fault. It's just not a safe ecosystem that they're trying to do this in. So anyway, we have a fun picture of the week and a great podcast ahead.

0:04:51 - Leo Laporte

Actually, that's a good topic we could talk about. Could any computing system make Recall a safe thing? Probably not, right? It's just the nature of computing systems.

0:05:05 - Steve Gibson

Yep, we have not come up with it yet.

0:05:07 - Leo Laporte

Yeah, there's no such thing as a secure, perfectly secure operating system. You could try. That's where our sponsors come in. They are there to help you.

Like Lookout. Today, every company is a data company, right? I mean, it's like you're all running Recall all the time. Every company is at risk as a result. Cyber threats, breaches, leaks: this is the new norm. If you listen to the show, I don't have to tell you that. Cybercriminals are becoming more sophisticated every minute now, using AI and all sorts of things. I mean, it's terrifying. And it's worse because we live in a time when boundaries for your data no longer exist. What it means for your data to be secure has fundamentally changed because it's everywhere.

Enter Lookout. There is a solution. From the first phishing text to the final data grab, Lookout stops modern breaches as swiftly as they unfold, whether on a device, in the cloud, across networks, or hanging out working remotely at a local coffee shop. Lookout gives you clear visibility into all your data, in motion and at rest, wherever it is, wherever it's going. You'll monitor, assess and protect, without sacrificing productivity and employee happiness for security. With a single, unified cloud platform, Lookout simplifies and strengthens, reimagining security for the world that will be today. Visit Lookout.com today to learn how to safeguard data, secure hybrid work and reduce IT complexity. That's Lookout.com. We thank them so much for supporting the good work Steve's doing here at Security Now. Lookout.com. Do we have a... I didn't even look. Do we have a picture of the week this week? We do indeed.

0:07:00 - Steve Gibson

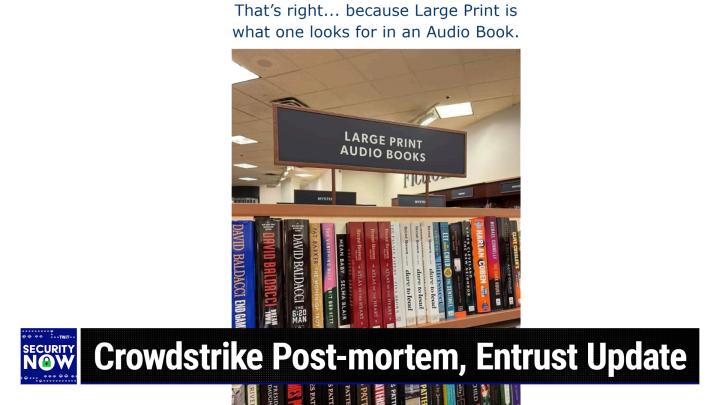

This one was just sort of irresistible. I know what they meant, but it's just sort of fun what actually transpired.

0:07:11 - Leo Laporte

I just saw it.

0:07:12 - Steve Gibson

Yeah, now I think, and you'll probably recognize this too, the signage. I think it's a Barnes & Noble.

0:07:19 - Leo Laporte

Yeah.

0:07:32 - Steve Gibson

It's sadly been quite a while since I've walked into an actual bookstore and filled my lungs with that beautiful scent of paper pulp Used to love it.

0:07:37 - Leo Laporte

I grew up in the San Mateo Public Library. They just would sort of nod to me as I would walk in. Hello Steve.

0:07:40 - Steve Gibson

Enjoy your time in the stacks. Anyway, so what we have here is a sign over a rack of books. The sign reads "large print audio books" and of course, I gave that the caption: That's right, because large print is what one looks for in an audio book. Now, many of our clever listeners who received this already via email a couple hours ago actually responded with what my first caption was, which was variations of: Do you think they actually meant loud audio books?

0:08:23 - Leo Laporte

Wow, okay, that could be.

0:08:26 - Steve Gibson

And somebody took it more seriously and said, well, you know, Steve, somebody who's visually impaired might need large print on the instructions for how to play the audio book.

0:08:38 - Leo Laporte

That makes sense.

0:08:40 - Steve Gibson

That's a point.

Actually, what we know is the case is that large print books are on the upper few shelves and the audio books are down below, and they put them both on the same sign, creating this little bit of fun for us and our audience. So thank you to whoever sent that to me. It's much appreciated, and I do have many more goodies to come. Okay, so I want to share the note today that I mentioned last week. This was somebody who was forced to DM me, I don't remember why, but he wrote the whole thing. Then I think he created a Twitter account in order to send it to me.

Now, I'll take this moment to mention I was just forced to turn off incoming DMs from unknown senders, meaning Twitter people who I don't follow, and, of course, I famously follow no one. The reason is, when I went there today, I had a hard time finding any actual new direct messages to me from among the crap. There were so many people wanting to date me, and I don't think any of them actually do. You know, and I am wearing a ring.

I'm proud of that. That, and foreign language DMs that I can't read, and I finally thought, you know, why? So I just turned it off. So I'm sorry, but I will still post the show notes from @SGgrc on Twitter. I've had a lot of people who thanked me for that, even though I now have email up and running and this system is working beautifully. 7,500 of our listeners received the show notes and a summary of the podcast and a thumbnail of the picture of the week and the ability to get the full sizes and everything several hours ago. But it just no longer works. I can't do open DMs, and I don't know why, because it's been great for so long, Leo. I mean, I haven't had any problem, but the only thing I can figure is that the spam level on Twitter is just going way up.

0:11:15 - Leo Laporte

You've just been lucky. Really, I think I've just been lucky.

0:11:18 - Steve Gibson

Maybe I've just been sort of off the radar because I don't do much on Twitter except send out the little weekly tweet. So anyway, this is what someone wrote, a really good piece that I wanted to share. He said: Hi, Steve, I'm writing to you from New South Wales, Australia. I don't really use Twitter, but here I am sending you a DM. Without a doubt you'll be mentioning the CrowdStrike outage in your Security Now this week. I thought to give you an industry perspective here. And just to explain, I didn't get this from him until, as I do every Tuesday, I went over to Twitter to collect DMs and found this. So it didn't make it into last week's podcast, though I wish it had, so I'm sharing it today.

He said: I work in cybersecurity at a large enterprise organization with the CrowdStrike Falcon agent deployed across the environment. Think approximately 20,000 endpoints. He said around 2 to 3 p.m. Sydney time, the BSOD wave hit Australia. The impact cannot be overstated. All Windows systems in our network started BSODing at once. This is beyond a horrible outage. CrowdStrike will need to be held accountable and show they can improve their software stability, rather than spruiking AI mumbo-jumbo, if they want to remain a preferred provider. Okay, he says, in defense of CrowdStrike: their Falcon EDR tool is nothing short of amazing. The monitoring features of Falcon are at the top. It monitors file creation, network processes, registry edits, DNS requests, process execution scripts, HTTPS requests, logons, logoffs, failed logons and so much more. The agent also allows for containing infected hosts, blocking indicators of compromise, opening a command shell on the host, collecting forensic data, submitting files to a malware sandbox, automation of workflows, an API, and PowerShell, Python and Go libraries. It integrates with threat intel feeds and more and more. Most importantly, he says, CrowdStrike has excellent customer support. Their team is helpful, knowledgeable and responsive to questions or feature requests. Despite this disastrous outage, we are a more secure company for using CrowdStrike. They have saved our butts numerous times. I'm sure other enterprises feel the same.

Full disclosure: I do not work for CrowdStrike, but I do have one of their T-shirts, he says. Why am I telling you this? Because the second in-line competitor to CrowdStrike Falcon is Microsoft Defender for Endpoint, MDE. He says MDE is not even close to offering CrowdStrike Falcon's level of protection. Even worse, Microsoft's customer support is atrocious to the point of being absurd. I've interacted with Microsoft security support numerous times. They were unable to answer even the most basic questions about how their own product operated and often placed me in an endless loop of escalating my problem to another support staff, forcing me to re-explain my problem to the point where I gave up asking for their help. I even caught one of their support users using ChatGPT to respond to my emails, and this is with an enterprise-level support plan, he says. As the dust starts to settle after this event, I imagine Microsoft will begin a campaign of aggressively selling Defender for Endpoint. Falcon is often more expensive than MDE, since Microsoft provides significant discounts depending on the other Microsoft services a customer consumes. Sadly, I imagine many executive leadership teams will be dumping CrowdStrike after this outage and signing on with MDE. Relying on Microsoft for endpoint security will further inflate the single point of failure balloon that is Microsoft, leaving us all less secure in the long run. And then he signs off.

Finally, I'm a big fan of the show. I started listening around 2015. As a result of listening to your show, I switched to a career in cybersecurity. Thank you, Leo and Steve. So I wanted to share that because that's some of the best feedback I've had from somebody who really has some perspective here, and it all rings 100% true to me. This is the correct way for a company like CrowdStrike to survive and to thrive, that is, by really offering value. They're offering dramatically more value and functionality than Microsoft, so the income they're earning is actually deserved.

One key bit of information we're missing is whether all of these Windows systems, servers and networks that are constantly being overrun with viruses and ransomware and costing their enterprises tens of millions of dollars to restore and recover, which we're normally talking about here every week, are protected with CrowdStrike, or whether CrowdStrike is saving those systems that would otherwise fall. You know, if CrowdStrike is actually successfully blocking enterprise-wide catastrophe, hour by hour and day by day, then that significantly factors into the risk-reward calculation. Our CrowdStrike user wrote, they have saved our butts numerous times, and he said, I'm sure other enterprises feel the same. Well, that is a crucially important fact that is easily missed. It may well be that right now, corporate CIOs are meeting with their other C-suite executives and boards and reminding them that, yes, what happened 10 days ago was bad, but even so it's worth it, because the same system had previously prevented, say, I don't know, for example, 14 separate known instances of internal and external network breach, any of which may have resulted in all servers and workstations being irreversibly encrypted, public humiliation for the company and demands for illegal ransom payments to Russia. So if that hasn't happened because, as our listener wrote, CrowdStrike saved our butts numerous times, then the very rational decision might well be to stick with this proven solution, in the knowledge that CrowdStrike will have definitely learned a very important and valuable lesson and will be arranging to never allow anything like this to happen again. This superior solution is the obvious win, but if it ever should happen again, then in retrospect, remaining with them will have been difficult to justify, and you could imagine not being surprised if people were fired over their decision not to leave CrowdStrike after this first major incident. But even so, if CrowdStrike's customers are able to point to the very real benefits they have been receiving on an ongoing basis for years from their use of this somewhat, okay, can-be-dangerous system, then even so, it might be worth remaining with it.

Before we talk about CrowdStrike's response, I want to share another interesting and important piece of feedback from a listener, and also something that I found on Reddit. So our listener, Dan Mutal, wrote: I work at a company that uses CrowdStrike and I thought you would appreciate some insight. Thankfully, we were only minimally affected, as many of our Windows users were at a team-building event with their laptops powered down and not working, and our servers primarily run Linux, so only a handful of workstations were affected.

However, the recovery was hampered by the common knowledge, which turned out to be false, that the BitLocker recovery key would be needed to boot into safe mode. When you try to boot into safe mode in Windows, you are asked for the BitLocker recovery key. Most knowledge base articles, even from Microsoft, state that you need to enter the BitLocker key at this point, he says, but this is not required. It's just not obvious and not well known how to bypass this. He says here's what we discovered over the weekend: cycle through the blue screen error while the host continues to crash until you get to the recovery screen.

Perform the following steps. First, navigate to Troubleshoot, Advanced Options, Startup Settings, and press Restart. Skip the first BitLocker recovery key prompt by pressing Escape. Skip the second BitLocker recovery key prompt by selecting Skip This Drive at the bottom right. Navigate to Troubleshoot, Advanced Options, Command Prompt. Then enter bcdedit /set {default} safeboot minimal and press Enter. Close the Command Prompt window by clicking the X in the top right. This will return you to the blue screen, which is the Windows RE main menu. Select Continue. Your PC will now reboot. It may cycle two to three times, but then your PC should boot into safe mode.
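For reference, the command the listener describes, written out as it would be typed, is `bcdedit /set {default} safeboot minimal`, where `{default}` is the identifier of the default boot entry. The short Python sketch below only builds that command line as an argument list; it does not run anything. The helper name `safe_mode_commands` is made up for illustration, and the second command, which removes the safeboot setting so the machine returns to a normal boot, is not part of the listener's note but is the standard way to undo it.

```python
def safe_mode_commands(identifier="{default}"):
    """Build (but do not run) the bcdedit commands for forcing and clearing Safe Mode.

    identifier: the boot entry to modify; "{default}" is the default entry.
    Returns a pair of argument lists: (enable, disable).
    """
    # Forces the next boots of this entry into minimal Safe Mode.
    enable = ["bcdedit", "/set", identifier, "safeboot", "minimal"]
    # Removes the safeboot value again so Windows boots normally (not in the listener's note).
    disable = ["bcdedit", "/deletevalue", identifier, "safeboot"]
    return enable, disable


enable, disable = safe_mode_commands()
print(" ".join(enable))   # bcdedit /set {default} safeboot minimal
print(" ".join(disable))  # bcdedit /deletevalue {default} safeboot
```

Forgetting the second command after the repair would leave the machine booting into safe mode every time, which is why it is worth keeping the pair together.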

He said: I confirmed this worked and allowed us to recover a few systems where we did not have the BitLocker keys available. He says: I think Microsoft deserves a lot of blame for the poor recovery process. When safe mode is needed, they should not be asking for BitLocker keys if they're not needed. At the bare minimum, they need to make this knowledge much more well-known, so system admins who don't have BitLocker keys handy can still boot into safe mode when disaster strikes. I also want to send a shout-out to CrowdStrike's technical support team. I'm sure they were swamped by support requests on Friday, but despite that we were talking to them after waiting on hold for only 10 minutes, and they were very helpful. Most vendors aren't that quick or useful on a good day, let alone on a day when they are the cause of a massive outage. CrowdStrike is an expensive product, but it is clear that a large chunk of that expense pays for a large and well-trained support staff. So that was from a listener of ours.

0:23:40 - Leo Laporte

Those are really excellent points, I mean aren't they yes? Yeah, I mean. So much worse to get bit by ransomware than a temporary boot. You know, flaw.

0:23:51 - Steve Gibson

Yes, exactly. So for our listeners to say, yes, you know, this was not good. You know, that first listener had 20,000 endpoints, but he said still, it has saved our butts. Yeah, well, "saved our butts" must mean that he has evidence that something bad would have happened to them had CrowdStrike not blocked it. So what's that worth? You know, it's worth a lot.

Okay, so on Reddit I found a posting. Oh, and by the way, Leo, I just should mention I don't normally have our monitor screen up so that I know if you're there or not. So just a note to your control room.

0:24:37 - Leo Laporte

I'm here, by the way, nice, to hear your voice.

0:24:41 - Steve Gibson

So this post on Reddit said: just exited a meeting with CrowdStrike. You can remediate all of your endpoints from the cloud. There's news. He said, if you're thinking that's impossible, how? He says this was also the first question I asked, and they gave a reasonable answer. To be effective, CrowdStrike services are loaded very early in the boot process, which, of course, is what we talked about last week, and they communicate directly with CrowdStrike. This communication is used to tell CrowdStrike to quarantine Windows\System32\drivers\CrowdStrike and then the infamous, you know, 291*.sys file. So he said, to do this, you must first opt in to this cloud-based recovery by submitting a request via the support portal, providing your customer IDs and requesting to be included in cloud remediation. At the time of the meeting, he says, the average wait time for inclusion was less than one hour. Once you receive email indicating that you have been included, you simply have your users reboot their computers. In other words, it's a self-repair of this problem. He said CrowdStrike noted that sometimes the boot process completes too quickly for the client to get the update and a second or third try is needed, but it is working for nearly all of their affected users. At the time of the meeting, they had remediated more than 500,000 endpoints this way. He says it was suggested to use a wired connection when possible, since Wi-Fi connected users have the most frequent trouble with this approach, probably because Wi-Fi connectivity becomes available later in the boot process, after a crash will have occurred. He says this also works with home and remote users, since all they need is an internet connection, any internet connection. The point is they do not need to be, and should not be, VPNed into the corporate network. So, anyway, I thought that was really interesting, since essentially we have another one of those race conditions. We've talked about those recently, right? 
In this case it's one where we're hoping that the CrowdStrike network-based update succeeds before the crash can occur. Okay, so with all of that, what more do we know today than we did a week ago?
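The race described here can be pictured with a toy model in Python. Everything in it is an assumption made for illustration: the function names are invented, and the timings are made-up numbers, not measurements from CrowdStrike or the Reddit post. The only idea taken from the discussion is that a boot is repaired when the quarantine instruction arrives before the faulty file is interpreted, and that a later network start (as with Wi-Fi) makes losing the race more likely.

```python
def remediated(network_ready_s, fetch_s, crash_s):
    """True if the cloud instruction arrives before the crash on this boot attempt.

    network_ready_s: seconds into boot when the network becomes usable (hypothetical)
    fetch_s:         seconds needed to receive the quarantine instruction (hypothetical)
    crash_s:         seconds into boot when the faulty channel file would crash the system
    """
    return network_ready_s + fetch_s < crash_s


def boots_needed(attempts):
    """Return the 1-based index of the first boot attempt that wins the race, or None."""
    for index, (network_ready_s, fetch_s, crash_s) in enumerate(attempts, start=1):
        if remediated(network_ready_s, fetch_s, crash_s):
            return index
    return None


# Made-up timings: the wired link is up early, Wi-Fi comes up later and varies per boot.
wired_attempts = [(2.0, 1.0, 4.0)]
wifi_attempts = [(4.5, 1.0, 4.0), (3.5, 1.0, 4.0), (2.8, 1.0, 4.0)]

print(boots_needed(wired_attempts))  # 1
print(boots_needed(wifi_attempts))   # 3
```

With these numbers the wired machine is fixed on its first reboot, while the Wi-Fi machine needs three tries, which matches the shape of the advice in the post even though the real timings are unknown.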

At the time of last week's podcast, the questions that were on everyone's mind were variations of: how could this possibly have been allowed to happen in the first place? How could CrowdStrike not have had measures in place to prevent this? And even, you know, staggering the release of the buggy file would have limited the scale of the damage. Why wasn't that at least part of their standard operating procedure? For last week's podcast, we had no answers to any of those questions. Among the several possibilities I suggested were that they did have some pre-release testing system in place, and if so, then it must have somehow failed. And that is the explanation we have received from them since that time. I have no doubt about its truth, since CrowdStrike executives will be repeating that under oath shortly. Last week we shared what little was known, which CrowdStrike had published by that point under the title What Happened, but they weren't yet saying how, and we also had that statement that I shared from George Kurtz, CrowdStrike's founder and CEO. This week we do have their What Happened, which is followed by What Went Wrong and Why.
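To make the point about staggering concrete, here is a minimal Python sketch of a staged rollout that stops as soon as a stage reports unhealthy hosts. It is an illustration only: the function `staged_rollout`, the stage fractions and the health check are all assumptions, and nothing here describes CrowdStrike's actual deployment tooling.

```python
def staged_rollout(hosts, stage_fractions, is_healthy):
    """Deploy to cumulative fractions of the fleet, halting on the first unhealthy stage.

    hosts:           list of host identifiers
    stage_fractions: cumulative fractions of the fleet per stage, e.g. [0.01, 0.10, 1.0]
    is_healthy:      callable(host) -> bool, checked after each stage (hypothetical probe)
    Returns (deployed_hosts, halted).
    """
    deployed = []
    start = 0
    for fraction in stage_fractions:
        # Each stage extends coverage up to its cumulative fraction, at least one new host.
        end = min(len(hosts), max(start + 1, int(len(hosts) * fraction)))
        batch = hosts[start:end]
        deployed.extend(batch)
        if not all(is_healthy(host) for host in batch):
            return deployed, True  # stop here; the rest of the fleet never gets the update
        start = end
        if start >= len(hosts):
            break
    return deployed, False


fleet = [f"host-{n}" for n in range(1000)]

# A bad update crashes every host it reaches, so only the first 1% is affected.
bad_deployed, bad_halted = staged_rollout(fleet, [0.01, 0.10, 1.0], lambda host: False)
print(len(bad_deployed), bad_halted)    # 10 True

# A good update proceeds through every stage.
good_deployed, good_halted = staged_rollout(fleet, [0.01, 0.10, 1.0], lambda host: True)
print(len(good_deployed), good_halted)  # 1000 False
```

In this toy fleet a crashing update reaches 10 machines instead of 1,000, which is the whole argument for staggering in one line of output.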

And okay, despite the fact that it contains a bunch of eye-crossing jargon which sounds like gobbledygook, I think it's important for everyone to hear what CrowdStrike said. So here's what they have said to explain this, and in order to create the context that's necessary, we learn a lot more about the innards of what's going on. They said CrowdStrike delivers security content configuration updates to our sensors. And when they say sensors, they're talking about basically a kernel driver. So some of it is built into the kernel driver or delivered with driver updates, and the other is real time. So whenever you hear me say sensors, you know it's not anything physical, although it sounds like it is. It is software running in the kernel. They said, in two ways: sensor content that is shipped with our sensor directly, and rapid response content that is designed to respond to the changing threat landscape at operational speed. They wrote: the issue on Friday involved a rapid response content update with an undetected error.

Sensor content provides a wide range of capabilities to assist in adversary response. It is always part of a sensor release and not dynamically updated from the cloud. Sensor content includes on-sensor AI and machine learning models and comprises code written expressly to deliver long-term reusable capabilities for CrowdStrike's threat detection engineers. These capabilities include template types (these figure strongly in the response), which have predefined fields for threat detection engineers to leverage in rapid response content. Template types are expressed in code. All sensor content, including template types, goes through an extensive QA process which includes automated testing, manual testing, validation and rollout.

The sensor release process begins with automated testing both prior to and after merging into our code base. This includes unit testing, integration testing, performance testing and stress testing. This culminates in a staged sensor rollout process that starts with dogfooding internally at CrowdStrike, followed by early adopters. It's then made generally available to customers. Customers then have the option of selecting which parts of their fleet should install the latest sensor release, N, or one version older, N-1, or two versions older, N-2, through sensor update policies. Now, okay, to be clear, all of that refers to, essentially, the driver, the so-called sensor. So for that stage they are doing, you know, incremental rollout, early adopter testing and so forth. Unfortunately, not for the rapid response stuff. So they said the event of Friday, July 19th was not triggered by sensor content, which is only delivered with the release of an updated Falcon sensor, meaning, you know, updated static drivers. They said customers have complete control over the deployment of the sensor, which includes sensor content and template types.
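The N, N-1 and N-2 choice can be sketched in a few lines of Python. This is an illustrative model only: the function `select_sensor_version` and the policy strings are invented here, and the release numbers other than 7.11 (which comes up later in CrowdStrike's timeline) are hypothetical. As the discussion notes, this kind of policy governed sensor releases, not rapid response content.

```python
def select_sensor_version(releases, policy):
    """Pick the sensor release a host group should run under an update policy.

    releases: release identifiers ordered oldest to newest
    policy:   "N" (latest), "N-1" (one version older) or "N-2" (two versions older)
    """
    offsets = {"N": 0, "N-1": 1, "N-2": 2}
    if policy not in offsets:
        raise ValueError(f"unknown policy: {policy}")
    offset = offsets[policy]
    if offset >= len(releases):
        raise ValueError("not enough releases available for this policy")
    # Count back from the newest release.
    return releases[-1 - offset]


# Hypothetical release history; only "7.11" appears in the source material.
releases = ["7.09", "7.10", "7.11"]

print(select_sensor_version(releases, "N"))    # 7.11
print(select_sensor_version(releases, "N-1"))  # 7.10
print(select_sensor_version(releases, "N-2"))  # 7.09
```

A fleet pinned to N-2 gets two releases' worth of other people's testing before it installs anything, which is exactly the protection that did not extend to the content update in question.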

Rapid response content is used to perform a variety of behavioral pattern matching operations on the sensor using a highly optimized engine. Right, because you don't want to slow the whole Windows operating system down as it takes time out to analyze everything that's going on. So they said rapid response content is a representation of fields and values, with associated filtering. This rapid response content is stored in a proprietary binary format that contains configuration data. It is not code or a kernel driver. But I'll just note, unfortunately, it is interpreted, and how much time have we spent talking about interpreters going wrong on this podcast?

They said rapid response content is delivered as template instances, which are instantiations of a given template type. Each template instance maps to specific behaviors for the sensor to observe, detect or prevent. Template instances have a set of fields that can be configured to match the desired behavior. In other words, template types represent a sensor capability that enables new telemetry and detection, and their runtime behavior is configured dynamically by the template instance. In other words, specific rapid response content. Rapid response content provides visibility and detections on the sensor without requiring sensor code changes. This capability is used by threat detection engineers to gather telemetry, identify indicators of adversarial behavior and perform detections and preventions.
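One way to picture the relationship between a template type and a template instance is as a schema and a filled-in record. The Python sketch below is only an analogy under that assumption: the class names, field names and matching rule are all invented for illustration, and the real content is a proprietary binary format whose layout is not described here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TemplateType:
    """A capability shipped with the sensor: a name plus its predefined fields."""
    name: str
    fields: tuple


@dataclass(frozen=True)
class TemplateInstance:
    """Configuration delivered later: concrete values for some of the type's fields."""
    template: TemplateType
    values: dict

    def matches(self, event):
        # An event matches when every configured field has the configured value.
        return all(event.get(field) == value for field, value in self.values.items())


def make_instance(template, **values):
    """Create an instance, rejecting fields the template type does not define."""
    unknown = set(values) - set(template.fields)
    if unknown:
        raise ValueError(f"fields not defined by {template.name}: {sorted(unknown)}")
    return TemplateInstance(template, values)


# Hypothetical IPC-style type with two fields, and one instance configured against it.
ipc_type = TemplateType("ipc", ("pipe_name", "process"))
instance = make_instance(ipc_type, pipe_name="suspicious_pipe")

print(instance.matches({"pipe_name": "suspicious_pipe", "process": "a.exe"}))  # True
print(instance.matches({"pipe_name": "ordinary_pipe", "process": "a.exe"}))    # False
```

The design point is that the type changes rarely and ships with code, while instances are cheap data that can be pushed often, so any checking of instances against the type has to be airtight.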

Rapid response content is behavioral heuristics, separate and distinct from CrowdStrike's on-sensor AI prevention and detection capabilities. Rapid response content is delivered as content configuration updates to the Falcon sensor. There are three primary systems: the content configuration system, the content interpreter and the sensor detection engine. The content configuration system is part of the Falcon platform in the cloud, while the content interpreter and sensor detection engine are components of the Falcon sensor, in other words, running in the kernel. So we've got a content interpreter running in the kernel. What could possibly go wrong? Well, we found out. They said the content configuration system is used to create template instances, which are validated and deployed to the sensor through a mechanism called channel files. The sensor stores and updates its content configuration data through channel files, which are written to disk on the host.

The content interpreter on the sensor reads the channel files and interprets the rapid response content, enabling the sensor detection engine to observe, detect or prevent malicious activity, depending on the customer's policy configuration. The content interpreter is designed to gracefully handle exceptions from potentially problematic content. I'm going to read that sentence again, because that's what failed. The content interpreter is designed to gracefully handle exceptions from potentially problematic content. Except in this instance, as we know, it did not. And they finish with: newly released template types are stress tested across many aspects, such as resource utilization, system performance impact and event volume. For each template type, a specific template instance is used to stress test the template type by matching against any possible value of the associated data fields to identify adverse system interactions. In other words, that's the nice way of saying crashes. And template instances are created and configured through the use of the content configuration system, which includes the content validator that performs validation checks on the content before it is published. Okay, now I've read this a total of, I think, maybe five times, and I now finally feel like I understand it. So, you know, don't be put off if that's all just like, what did he just say? I get it. They then lay out a timeline of events, which I'll make a bit easier to understand by interpreting what they wrote. So recall that there was mention of named pipes being involved with this trouble, and I explained that named pipes were a very common means for different independent processes to communicate with each other within Windows. So way back on February 28th of this year, CrowdStrike sensor version 7.11 was made generally available to customers. It introduced a new inter-process communication (IPC) template type, which was designed to detect novel attack techniques that abused named pipes. 
This release followed all sensor content testing procedures outlined above in that sensor content section. So again, this was an update essentially to the driver and all of its AI and heuristic stuff, and that happened on February 28th. A week later, on March 5th, a stress test of this newly created IPC template type was executed, they said, in our staging environment, which consists of a variety of operating system workloads. The IPC template type passed the stress test and was thereby validated for use.

Later that same day, following the successful stress testing, the first of the IPC template instances was released to production as part of a content configuration update. So, like what happened on this fateful Friday, that happened back on March 5th for the first time for this new IPC template type. They said, after that, three additional IPC template instances were deployed between April 8th and April 24th. These template instances performed as expected in production.

Then, on that fateful day of Friday, July 19th, two additional IPC template instances were deployed, much as multiples of those had in February, March and April. One of the two deployed on July 19th was malformed and should never have been released. Due to a bug in the content validator, and also in the interpreter, that malformed IPC template instance erroneously passed validation despite containing problematic content data, they said. Based on the testing performed before the initial deployment of the new IPC template type back on March 5th, and on trust in the checks performed by the content validator and the several previous successful IPC template instance deployments, on Friday the 19th these two instances, one of them malformed, were released and deployed into production.

When received by the sensor and loaded into the content interpreter, which is in the kernel, problematic content in channel file 291 resulted in an out-of-bounds memory read, triggering an exception. This unexpected exception could not be gracefully handled, resulting in a Windows operating system crash, the infamous blue screen of death, which then followed its attempt to recover. And, Leo, we're going to talk about how they prevent it from happening again.
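To make that failure mode concrete, here is a minimal sketch of the two safeguards the report describes: a validator that rejects content referring to data that is not there, and an interpreter that refuses bad content instead of faulting. Everything in it is an assumption for illustration (the `TemplateInstance` class, the idea of numbered input fields, the function names); it is not CrowdStrike's code or data format.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class TemplateInstance:
    """Hypothetical stand-in for one piece of rapid response content."""
    name: str
    field_refs: tuple  # indexes of the input fields this instance matches against


def validate(instance: TemplateInstance, supplied_field_count: int) -> list:
    """Return a list of problems; an empty list means the content may be published."""
    problems = []
    for ref in instance.field_refs:
        if not 0 <= ref < supplied_field_count:
            problems.append(
                f"{instance.name}: field {ref} is outside 0..{supplied_field_count - 1}"
            )
    return problems


def interpret(instance: TemplateInstance, fields: list) -> Optional[list]:
    """Defensive interpreter: re-check bounds at load time rather than trusting the validator."""
    if validate(instance, len(fields)):
        return None  # skip this instance; the rest of the sensor keeps running
    return [fields[ref] for ref in instance.field_refs]
```

The point of checking in both places is that a bug in either one alone no longer takes the machine down: the validator stops bad content before publication, and the interpreter still declines to read past the end of its input if something slips through.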

0:41:44 - Leo Laporte

But let's take a break so I can catch my breath and sip a little bit of caffeine. Bessie in our YouTube chat says, how can all those PCs fail, but no PCs at CrowdStrike itself fail? Don't they use CrowdStrike at CrowdStrike? I'm curious, and I'm sure you'll cover this, how quickly they figured out that this update was causing a problem. Surely, maybe CrowdStrike was closed for the day. I don't know. It happened in Australia.

0:42:17 - Steve Gibson

It did happen in the wee hours of the morning in the United States. It's wild, it's just wild.

0:42:23 - Leo Laporte

Anyway, we'll get to the post-mortem in just a moment. But first a word from our sponsor, ACI Learning. You know their name as IT Pro. We've talked about IT Pro for years. IT Pro is now provided by our friends at ACI Learning, but it's the same binge-worthy video-on-demand IT and cybersecurity training we've talked about all this time.

With IT Pro you get cert-ready, certification-ready, with access to a library of more than 7,250 hours of up-to-date, complete, informative, engaging training. There's nothing old in this training. They're updating it all the time. That's why they have so many studios. What is it? Seven or eight studios running all day, Monday through Friday. They're always updating it because the tests change, the certs change, the programs you're using change, new versions come out. It's nonstop. It's like painting the Golden Gate Bridge: they never finish. They never get all the videos done before they have to start over. But that's good for you because it means those 7,250 hours of training are as up to date as possible.

Premium training plans also include, besides the videos, practice tests, which, I will tell you from my own experience, are the best way to prepare for an exam. Taking practice tests ahead of time prepares you for the format, gives you the confidence that you know the material and, if you don't know it, lets you know pretty quickly. Back to the books. They also offer virtual labs, which have so many useful features. First of all, you can set up a complete enterprise environment with Windows servers and everything, without having any of it. All you need is an HTML5-compatible browser. You can do it on a Chromebook, and if you break something it's okay because, you know, you just close that tab and you move on with your life. But also, a lot of MSPs use this to test, to trial new software, new setups, new configurations. It's a really nice tool. IT Pro from ACI Learning makes training fun. All the videos are produced in an engaging talk show format by experts in the field whose passion for what they're teaching communicates. You get engaged and you start to get passionate about it as well. Super valuable.

You could take your IT or cyber career to the next level by being bold, training smart with ACI Learning. Now we've got a great deal for you. Visit go.acilearning.com/twit. The offer code is SN100. Okay, SN for Security Now, 100. Enter that at checkout. 30% off your first month, or first year if you sign up for a year of IT Pro training. Can I make a recommendation? That's the most savings and it's worth it. You will not regret it. So many of our listeners got their certs, got their first jobs in IT, got better jobs in IT from IT Pro. We know it works. go.acilearning.com/twit, the offer code SN100. Thank you, ACI Learning, for supporting Steve and the work he's doing here. Steve, I'm trying to decide. You know, I'm packing up stuff. As you probably noticed, stuff's starting to disappear from the studio. I'm trying to decide. Should I take this needlepoint that says Look, humble Can't?

0:45:56 - Steve Gibson

decide. Where did it come from? Does it have a special meaning for?

0:46:01 - Leo Laporte

you. No, it wasn't my work. Grandma was probably a listener sent it to me. I just always thought it was very funny. I've always had it. I am definitely taking the Nixie clock.

0:46:10 - Steve Gibson

You cannot have a studio without a Nixie clock.

0:46:12 - Leo Laporte

No, I think you should keep the Nixie clock. It's definitely, everything that blinks I'm taking. Good. I am not taking this darn clock, which has been the bane of my existence since the Brick House. People get mad when that clock is not visible on the show, that digital clock. But I have other clocks. I'm not going to bring that one. We'll see.

0:46:31 - Steve Gibson

well, I guess the question is also how much room do you have? Well, it's, that's the point is I?

0:46:35 - Leo Laporte

you know, I'm pretty much everything you see behind me. I'm going to leave here. We've got a, a company that doesn't leave behind. Yeah, there's a company that's a liquidator that they come in and they sell what they can, they donate what they can, recycle what they can and toss the rest and uh, I guess that's all we can do. I'm. This is a crazy amount of stuff we've accumulated over the years as one does as one does.

We are opening the studio on the 8th to any club twit members who want to come and get something. Come on down. We're blowing it out to the bare walls. You know what I'm giving away and I hate to do it, but I have that giant demonstration slide rule. I don't think you can see it as you just see the bottom of it. It's one of those yellow.

0:47:20 - Steve Gibson

I do see the bottom of it with the plastic slider, but where am I going to put that?

0:47:26 - Leo Laporte

Yeah, hanging off the roof, I don't know. Yeah, I only have, you know, a tiny little attic studio. So a lot of stuff getting left behind.

0:47:35 - Steve Gibson

I'm sad to say I've got some friends, some of my high school buddies, who are actively lightening their load. Like, you know, one guy deliberately converted his huge CD collection over to audio files and threw away all the CDs. I mean, it hurt to do it, right, because those are like.

0:47:54 - Leo Laporte

Lifetime. It's a lifetime of collecting.

0:47:56 - Steve Gibson

Yeah, but it's like eh, you know, I want to travel more. I don't want to like.

0:48:00 - Leo Laporte

Am I going to dump this on my kids? I don't want to do that. So yeah, there's a book about it, called Swedish Death Cleaning, where you prepare for your death and you do your heirs a favor. You get rid of the stuff that you don't think they'll be interested in. It's hard, though. Yeah, I don't know what those PDP-8s that I've got are gonna. I'm taking my mI, the two.

Raspberry Pi devices and the Pi DP8. I'm absolutely taking those. They're too cool.

0:48:30 - Steve Gibson

Blinking lights have got to stay, and I have been remiss in not telling our listeners, it's just that so much has been happening on the podcast, but the guy that did the fantastic PiDP-8 has done a PDP-10, and it is astonishing. I mean, it is. I'll have to make time to, I don't know when, the podcast has just been so crazy lately. But the emulated PDP-10, he gathered all the software from MIT.

0:49:05 - Leo Laporte

It was Oscar Vermeulen right Oscar yes.

0:49:09 - Steve Gibson

Oscar has done a PDP-10 also with a little Raspberry Pi running behind it, a gorgeous injection molded PDP-10 console recreation. So there's the 8.

0:49:22 - Leo Laporte

Yeah, this is the one we have.

0:49:25 - Steve Gibson

And the 10.

0:49:27 - Leo Laporte

Is there a link to it there? His vintage computer collection. I think this is.

0:49:33 - Steve Gibson

Yeah, he gave it his own website.

0:49:36 - Leo Laporte

Oh, okay, I'll find that.

0:49:38 - Steve Gibson

I'm surprised he's not linked to it, but you might put in PDP-10 recreation or something.

0:49:44 - Leo Laporte

Yeah, let's see what I can find. To find it because because, oh my goodness, Obsolescence guaranteed.

0:49:50 - Steve Gibson

Look at that.

0:49:52 - Leo Laporte

Oh, okay, Oscar.

0:49:55 - Steve Gibson

I want it. It is astonishing oh it's beautiful, it's very. Star Trek.

0:50:01 - Leo Laporte

That is Star Trek.

0:50:03 - Steve Gibson

It's an injection molded, you know, full working system. All of the software is there. You're able to connect a normal PC to it so you can work with a console and keyboard.

0:50:16 - Leo Laporte

Oh, it's got Adventure. Uh-oh, I might have to buy this.

0:50:21 - Steve Gibson

So it's running a.

0:50:23 - Leo Laporte

Raspberry Pi, but that's the same performance as a PDP-10?

0:50:27 - Steve Gibson

Oh, it blows the PDP-10 away. He had to slow it down in order to make it.

0:50:35 - Leo Laporte

Oh, this is so cool. I mean the original software running.

0:50:41 - Steve Gibson

And so there they were, comparing the operation of their console to their emulator, to a real one to the emulator. In fact, I was thinking about this because Paul Allen was selling some of their original machines, right?

0:50:57 - Leo Laporte

Oh wow, Oscar Vermeulen. obsolescence.wixsite.com is Obsolescence Guaranteed, and you can build the kit.

0:51:09 - Steve Gibson

You can buy it or build the kit, yeah. It is embarrassingly inexpensive, again. And oh boy, I mean, he and his buddy, they were out demonstrating it to the Boston Computer Museum. Oh wow. And he said, Steve, could you make time for us to show you? So he came and set this up, plugged its HDMI output into our screen in our family room and gave Lori and me a full demonstration of the operation of this.

0:51:46 - Leo Laporte

Did Lori know what she was getting into when she married you?

0:51:50 - Steve Gibson

And later we're going to have somebody demonstrate a replica PDP-10.

0:51:54 - Leo Laporte

Won't that be fun. I love it. Well Oscar, well done. I'm glad he did it again.

0:52:03 - Steve Gibson

Yeah, I got the email too.

0:52:05 - Leo Laporte

And I guess I'll have to build, because I have the PiDP. The PiDP is already on the set in the attic, and I think I have to upgrade.

0:52:13 - Steve Gibson

Oh, just look at that console.

0:52:15 - Leo Laporte

It is just gorgeous.

0:52:16 - Steve Gibson

It was funny too, because her 28-year-old son, Robert, happened to also be there, oh, and he was watching this and he'd never seen a console before right with like lights and switches and he said are those bits good question?

0:52:37 - Leo Laporte

it was.

0:52:38 - Steve Gibson

It was like question it was, it would have never occurred to me to ask that question, but I it's like yes, those are bits. Those are what bits are. Are you know? Those individual lights turning on and off and the switches are bits.

Wow, wow. And what's cool is that, whereas the PDP-8 is a pain to program because you've only got a three-bit opcode, so you've got eight instructions, the PDP-10, oh, it's a 36-bit system and it's got a gorgeous instruction set. I mean, really just a joy. So, I mean, this is a complete recreation.
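For anyone wondering what a three-bit opcode means in practice, here is a small sketch. It assumes the PDP-8's 12-bit instruction word with the opcode in the top three bits, which leaves room for just 2^3 = 8 basic instruction classes. The function name is made up for illustration.

```python
# The eight PDP-8 opcodes, indexed by the top three bits of a 12-bit word.
PDP8_OPCODES = ("AND", "TAD", "ISZ", "DCA", "JMS", "JMP", "IOT", "OPR")


def decode_opcode(word: int) -> str:
    """Return the mnemonic selected by the 3-bit opcode field of a 12-bit word."""
    if not 0 <= word <= 0o7777:
        raise ValueError("a PDP-8 word is 12 bits (0..0o7777)")
    return PDP8_OPCODES[word >> 9]  # shift off the low 9 bits, keep the top 3


if __name__ == "__main__":
    for word in (0o1234, 0o5000, 0o7402):
        print(f"{word:04o} -> {decode_opcode(word)}")
```

Octal is the natural notation here because each octal digit is exactly three bits, so the leading digit of the word is the opcode, and each of those digits is three of the lights on the front panel.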

0:53:19 - Leo Laporte

You can be using it with its editors and its compilers, and that's what you want, right, Steve? There, by the way, are Oscar and auto showing off the entire line. Oscar's on the left, at the Vintage Computer Festival. Very cool, very cool. So they've got a 1, 8, 11 and a 10.

0:53:48 - Steve Gibson

Yes, and he says down there, the 1 and the 10 at that time were in prototype. The 10 is finished and they're now working on a PDP-1. And I have to tell you, he credits this podcast as changing this from a hobby to a business, because there was so much interest shown in the PiDP-8 and then in the 11 that they turned it into a business. And so, anyway, I'm glad that this came up, because I felt badly that I have not found time, because, I mean, our podcasts have been running more than two hours.

0:54:29 - Leo Laporte

I know, I know, recently. But I'm so glad, because I wanted to get this in. So I'm glad we could mention it as I pack up my PiDP and bring it home.

0:54:39 - Steve Gibson

My party and gentlemen, listening, if you saw the actual size it's not a huge thing. So it does store in the closet. If you're talking about how patient.

0:54:53 - Leo Laporte

Lori is with me. Don't put it on the dining room table, whatever you do. I know you're tempted, gentlemen, but don't. Yeah, it's the white one right here, Yep exactly.

0:55:05 - Steve Gibson

So it's what.

0:55:06 - Leo Laporte

It's about a couple of feet wide, maybe it is a scale.

0:55:09 - Steve Gibson

Yes, it's a scale-size replica of the console of the original PDP-10. Yeah, and I have that, loaded with all of the original software. They even have one guy who specializes in recovering data from unreadable nine-track magnetic tapes, and so they were getting mag tapes. You can see one right there behind my head. That is a nine-track magnetic tape that actually came from SAIL, from Stanford's Artificial Intelligence

0:55:48 - Leo Laporte

Lab and that's got my code on. Oh, that's really cool.

0:55:52 - Steve Gibson

Wow. So they went back and recreated the original files, in some cases hand editing typos out in order to get everything to recompile again, because there was no, like, preservation project until now. So they really did a beautiful job, and congratulations. Yeah. Okay, so following on what happened. Now, back to the bad news. Naturally, CrowdStrike wants to explain how this will never happen again.

0:56:33 - Leo Laporte

Yeah.

0:56:41 - Steve Gibson

So, under a subhead of software resiliency and testing, they've got bullet points, and I have to say that this first batch sounds like the result of a brainstorming session rather than an action plan. They have: Improve rapid response content testing (that was the problematic download, right?) by using testing types such as local developer testing, content update and rollback testing, stress testing, fuzzing and fault injection, stability testing and content interface testing. And of course, many people would respond to that, why weren't you doing all that before? Unfortunately, that's the generic response, right, to anything that they say that they're now going to do: well, why weren't you doing that before? Anyway, they also have: Add additional validation checks to the content validator for rapid response content. A new check is in progress to guard against this type of problematic content from being deployed in the future. Good. Enhance existing error handling in the content interpreter. Right, because it was ultimately the interpreter that crashed the entire system when it was interpreting some bad content, so that should not have been able to happen. And then, under the subhead of rapid response content deployment, they've got four items. Implement a staggered deployment strategy for rapid response content in which updates are gradually deployed to larger portions of the sensor base, starting with a canary deployment. Improve monitoring for both sensor and system performance, collecting feedback during rapid response content deployment to guide a phased rollout. Provide customers with greater control over the delivery of rapid response content updates by allowing granular selection of when and where these updates are deployed. Provide content update details via release notes which customers can subscribe to.
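The staggered deployment item is easy to picture in code. What follows is only a sketch of the general canary idea under stated assumptions: the stage fractions, the failure threshold, and the `deploy` and `is_healthy` callbacks are all hypothetical, and nothing here reflects how CrowdStrike actually schedules updates.

```python
import math
from typing import Callable, Sequence


def staged_rollout(hosts: Sequence[str],
                   deploy: Callable[[str], None],
                   is_healthy: Callable[[str], bool],
                   stages: Sequence[float] = (0.01, 0.10, 0.50, 1.0),
                   max_failure_rate: float = 0.0):
    """Deploy to growing fractions of the fleet, halting as soon as a stage looks bad.

    Returns (hosts_that_received_the_update, completed_ok).
    """
    deployed = []
    for fraction in stages:
        # Cumulative number of hosts that should have the update after this stage.
        target = max(1, math.ceil(len(hosts) * fraction))
        batch = list(hosts[len(deployed):target])
        for host in batch:
            deploy(host)
        deployed.extend(batch)
        # Collect feedback from this stage before touching anyone else.
        failures = sum(1 for host in batch if not is_healthy(host))
        if batch and failures / len(batch) > max_failure_rate:
            return deployed, False  # stop the rollout; most of the fleet is untouched
    return deployed, True
```

With a first stage of one percent, a content update that crashes every machine it reaches is stopped after the canary group rather than after the whole sensor base.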

And I have to say kind of reading between the lines. Again, you know, programmers have egos right. We write code and we think it's right, and then it's tested and the testing agrees that it's right. And it's difficult, without evidence of it being wrong, to go overboard. They did have systems in place to catch this stuff. It turns out in retrospect something got past that system or those systems. Now they know that what they had was not good enough and they're making it better. More yes, but can't you always do more yes? And then one could argue in that case, if it's possible, if it's in any way possible for something to go wrong, then shouldn't you prevent that? Well, they thought they had. They thought that the content interpreter was bulletproof, that it was an interpreter, it would find any problems and refuse to interpret them if they were going to cause a problem. But there was a bug in that, so this happened, okay.

So the bottom line to all of this, I think, is that CrowdStrike now promises to do what it should have been doing all along, like the staggered deployment. Again, I mean, that's indefensible, right? Why were you guys not incrementally releasing this? Well, it's because they really and truly believed that nothing could cause this to happen. They really thought that. They were wrong. But it wasn't negligence. I mean, in the same sense that most programmers don't release buggy code, right? They fix the bugs. Microsoft is an exception. They've got a list of 10,000 known bugs when they release Windows, but they're small and they figure they won't actually hurt anybody. You know, they're not show stoppers. They actually use that term. So it's like, okay, fine, it works. So is this another of those small earthquake tremors I've been recently talking about? You know, I guess it would depend upon whom you ask.

The source of the problem was centralized, but the remediation of the problem was widely distributed. A half million machines, several hundred thousand individual technicians got to work figuring out what had happened to their own networks and workstations and each was repairing the machines over which they had responsibility, because initially you know CrowdStrike, as we saw they ended up coming up with a cool cloud-based solution, but initially, no, that didn't exist. Presumably it will now be deployed in some sort of a permanent fashion and, as we know, in the aftermath some users of Windows appear to have been more seriously damaged than others. In some cases, machines that were rebooted repaired themselves by retrieving the updated and repaired template file, whereas in other situations, such as Delta Airlines, the effects from having Windows system crashing lasted days.

I have no direct experience with CrowdStrike, but not a single one of our listeners from whom we have heard, even after enduring this pain, sounded like they would prefer to operate without CrowdStrike-level protection and monitoring in the future, and I think that's a rational position. No one was happy that this occurred, and it really is foreseeable that CrowdStrike has learned a valuable lesson about using belts, suspenders, velcro and probably some epoxy. They may have grown a bit too comfortable over time, but I'll bet that's been shaken out of them today, and I have no doubt that it will be like that. They've really raised the bar on having this happen to them again. Another little bit of feedback, since it's relevant to the CrowdStrike discussion. I wanted to share what another listener of ours, Vernon Young, wrote. He said: I am the IT director for a high school and manage 700 computers and 50 virtual servers.

A few weeks before, get this, Leo, a few weeks before the Kaspersky ban was announced, I placed a $12,000 renewal order with Kaspersky, $12,000, which will now be lost since the software won't work after September, he wrote. He said, after the ban was announced, I started looking for alternatives. I decided on Thursday, July 18th, to go with CrowdStrike. Oh my God.

1:04:34 - Leo Laporte

The day before.

1:04:40 - Steve Gibson

Oh my God. He says, the day before. I didn't feel like walking to the copier to scan the purchase order to send to the sales rep before I left for the day. Needless to say, I changed my mind Friday morning.

I cannot imagine being Vernon, our listener, and needing to corral a high school campus full of mischievous and precocious high schoolers. People like you. Who imagine, you know, as high schoolers will, that they're more clever than the rest of the world, and whose juvenile brains' sense of right and wrong hasn't yet had the chance to fully develop. But think about the world Vernon is facing. He invests $12,000 to renew the school's Kaspersky AV system license, only to have that lost. Then he decides that CrowdStrike looks like the best alternative, only to have it collapse the world. For what it's worth, I stand by the way I ended that CrowdStrike discussion. I would go with them today.

Yes. All of the feedback we've received suggests that they are top notch, that they're very clearly raising the bar to prevent another mistake like this from ever slipping past. There's just no way that they haven't really learned a lesson from this debacle, and I think that's all anyone can ask at this point, and the fact is that our listeners are telling us they are the best there is.

1:06:22 - Leo Laporte

There's no other choice, no choice.

1:06:26 - Steve Gibson

Microsoft is number two and they don't hold a candle. Right. I mean, certainly all of the systems that we talk about succumbing to ransomware are at least running Microsoft Defender, and that's not helping them. Okay. So who is to blame? Our wonderful hacker friend, Marcus Hutchins, posted a wonderfully comprehensive 18-minute YouTube video which thoroughly examines, explores and explains the history of the still-raging three-way battle among Microsoft, third-party AV vendors and malware creators. That video is on YouTube. It's this week's GRC shortcut of the week, so your browser can be redirected to it if you go to grc.sc/985, today's episode number. GRC.SC, as in shortcut, grc.sc/985. When you go there, be prepared for nearly 18 minutes of high-speed, non-stop, perfectly articulated techie detail, because that's what you're going to get. I'll summarize what Marcus said.

Since the beginning of Windows, the appearance of viruses and other malware and the emergence of a market for third-party antivirus vendors, there's been an uncomfortable relationship between Microsoft and third-party AV. To truly get the job done correctly, third-party antivirus has always needed deeper access into Windows than Microsoft has been willing or comfortable to give, and the CrowdStrike incident shows what can happen when a third party makes a mistake in the Windows kernel. But it is not true, Marcus says, that third-party antivirus vendors can do the same thing as Microsoft can without being in the kernel. This is why Microsoft themselves do not use the APIs they have made available to other antivirus vendors. Those APIs do not get the job done. And the EU did not say that Microsoft had to make the kernel available to other third parties. The EU merely said that Microsoft needed to create a level playing field where the same features would be available to third parties as they were using themselves.

Since Microsoft was unwilling to use only their own watered-down antivirus APIs and needed access to their own OS kernel, third parties had to be given that same access. We must recognize that this area of Windows has been constantly evolving since then and that the latest Windows 10 1703 has made some changes that might offer some hope of a more stable world in the future. The problem, of course, is that third parties still need to be offering their solutions on older Windows platforms which are still running just fine, refuse to die and may not be upgradable to later versions. So Marcus holds Microsoft responsible. That's his position, and I commend our listeners to go to grc.sc/985 to get all the details. Anyone who has a techie bent will certainly enjoy him explaining pretty much what I just have, in his own words. And Leo, we're at an hour.

Let's take another break, and then we're going to look at what happened during Entrust's recent webinar, where they explain how they're going to hold on to their customers.

1:11:11 - Leo Laporte

Some of these never-ending stories are really quite amusing, I must say. Our show today is brought to you by Panoptica. Panoptica is Cisco's cloud application security solution. It provides end-to-end lifecycle protection for cloud-native application environments. It empowers organizations to safeguard their APIs, their serverless functions, their containers, their Kubernetes environments, and on and on. Panoptica ensures comprehensive cloud security, not to mention compliance and monitoring at scale, offering deep visibility, contextual risk assessments and actionable remediation insights for all your cloud assets. Powered by graph-based technology, Panoptica's attack path engine prioritizes and offers dynamic remediation for vulnerable attack vectors, helping security teams quickly identify and remediate potential risks across cloud infrastructures. A unified cloud-native security platform minimizes gaps from multiple solutions. It provides centralized management and reduces non-critical vulnerabilities from fragmented systems. You don't want that. You want Panoptica. Panoptica utilizes advanced attack path analysis, root cause analysis and dynamic remediation techniques to reveal potential risks from an attacker's viewpoint. This approach identifies new and known risks, emphasizing critical attack paths and their potential impact. Panoptica has several key benefits for businesses at any stage of cloud maturity, including advanced CNAPP, multi-cloud compliance, end-to-end visualization, the ability to prioritize with precision and context, dynamic remediation and increased efficiency with reduced overheads.

It sounds like a lot. It is a lot. That's why you, right now, should go to panoptica.app and learn more. P-A-N-O-P-T-I-C-A, panoptica.app. Secure your cloud with confidence with Panoptica. We thank Panoptica so much for supporting Steve's hard work at Security Now. See, you know, you talk about, well, are there options to bit warden? There are, and a lot of them are seeing this as an opportunity, right? This is the time. By the way, did my clock disappear? I think stuff's leaving the building.

1:13:46 - Steve Gibson

Yeah, the Nixie clock is gone.

1:13:47 - Leo Laporte

I had an interesting giant sword that seems to have disappeared as well. So if you see somebody going down the street with a sword about yea long, you might call the authorities.

1:13:59 - Steve Gibson

Looks like the ukulele is still there, though.

1:14:01 - Leo Laporte

The uke. No one's taking the uke. I don't know why.

1:14:06 - Steve Gibson

All right, continue on, my friend. Entrust held a 10 am webinar last week, which included the description of their solution with the partnership we mentioned last week with SSL.com. It was largely what I presumed from what they had said earlier, which was that, behind the scenes, the certificate authority, SSL.com, would be creating and signing the certificates that Entrust would be purchasing from SSL.com and effectively reselling. There were, however, two additional details that were interesting. Before any certificate authority can issue domain validation certificates, the applicant's control over the domain name in question must be demonstrated. So, for example, if I want to get a certificate for GRC.com, I need to somehow prove to the certificate authority that GRC.com is under my control. I can do that by putting a file that they give me on the root of the server, the web server that answers at GRC.com, showing that, yeah, that's my server, because they asked me to put this file there and I did. I can put a text record into the DNS for GRC.com, again proving that I'm the guy who's in charge of GRC.com's DNS. And there's a weak method. The weakest is to have email sent from the GRC.com domain, but when all else fails you can do that. So, anyway, you need to somehow prove you own the domain, that you have control over it. So it turns out SSL.com is unwilling to take Entrust's word for that. The first wrinkle for Entrust customers who wish to purchase web server certificates from Entrust after this coming October 31st is that they will need to prove their domain ownership, not to Entrust as they have in the past, but to SSL.com. Not surprising, but still not quite what Entrust was hoping for.
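The file and DNS methods described above both come down to the same check: the certificate authority hands out a random token, then looks for that exact token somewhere only the domain's owner could have put it. Here is a minimal sketch of that idea. The file path, the function names and the injected `fetch_body` and `lookup_txt` callbacks are assumptions for illustration, not any real CA's procedure.

```python
import hmac
import secrets
from typing import Callable, Iterable, Optional


def new_challenge() -> str:
    """Create an unguessable token for the applicant to publish."""
    return secrets.token_urlsafe(32)


def _same(a: str, b: str) -> bool:
    # Constant-time comparison on bytes, so odd characters cannot raise TypeError.
    return hmac.compare_digest(a.strip().encode(), b.strip().encode())


def file_challenge_passed(expected_token: str,
                          fetch_body: Callable[[str], Optional[str]],
                          domain: str,
                          path: str = "/.well-known/validation.txt") -> bool:
    """True if the web server answering for `domain` serves the token (path is hypothetical)."""
    body = fetch_body(f"http://{domain}{path}")
    return body is not None and _same(body, expected_token)


def dns_challenge_passed(expected_token: str,
                         lookup_txt: Callable[[str], Iterable[str]],
                         domain: str) -> bool:
    """True if any TXT record for `domain` carries the token."""
    return any(_same(record, expected_token) for record in lookup_txt(domain))
```

The network access is passed in as a callback so the logic can be exercised without touching a real server. The important property is who performs the check: in the new arrangement that has to be SSL.com, because it is the party signing the certificate.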

The second wrinkle is that Entrust does not want SSL.com's name to appear in the web browser when a user inspects the security of their connection to see who issued a site's certificate. No, it's got to be Entrust. So, although SSL.com will be creating each entire certificate on behalf of Entrust, they've agreed to have SSL.com embed an Entrust intermediate certificate into the certificate chain. Web browsers only show the signer of the web server's final certificate in the chain. So, by placing Entrust in the middle, SSL.com will be signing the Entrust intermediary and Entrust's intermediary will be signing the server's domain certificate. In this way, it will be Entrust's name that will be seen in the web browser by anyone who is checking.
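A toy model shows why the intermediate changes what the user sees. This sketch reduces a certificate to just a subject name and an issuer name; the `Cert` class, the helper functions and the certificate names are all made up for illustration, and real chain building also verifies signatures, validity dates and constraints.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cert:
    subject: str
    issuer: str  # name of whoever signed this certificate


def build_chain(leaf: Cert, pool: list) -> list:
    """Follow issuer names from the leaf up to a self-signed root."""
    by_subject = {cert.subject: cert for cert in pool}
    chain = [leaf]
    while chain[-1].issuer != chain[-1].subject:  # a root is its own issuer
        parent = by_subject.get(chain[-1].issuer)
        if parent is None or parent in chain:
            raise ValueError(f"cannot complete chain above {chain[-1].subject!r}")
        chain.append(parent)
    return chain


def displayed_issuer(chain: list) -> str:
    """What a browser's connection panel reports: the signer of the site's own certificate."""
    return chain[0].issuer


# Hypothetical names standing in for the arrangement described above.
root = Cert("SSL.com Root", "SSL.com Root")
intermediate = Cert("Entrust Intermediate", "SSL.com Root")
site = Cert("www.example.com", "Entrust Intermediate")

chain = build_chain(site, [root, intermediate])
print([cert.subject for cert in chain])
print(displayed_issuer(chain))
```

Trust still flows from the root at the top of the chain, but the name reported to the user comes from the certificate one step above the site's, which is where the Entrust intermediate sits.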

So, you know, the webinar was full of a lot of, you know, all of this: how they're going to get back into the good graces of the CA/Browser Forum and all the steps they're taking and blah, blah, blah. We'll see how that goes with the passage of time. For now, that's what they're doing in order to, in every way they can, hold on to the customers that they've got who've been purchasing certificates. I should mention that the webinar also explained that all of the existing mechanism of using Entrust is in place. Everything is Entrust-centric with, whoops, the exception of needing to prove domain ownership to somebody else. No way around that one. Leo, this actually came as a result of our talking about the GRC cookie forensic stuff last week.

Something is going on with Firefox that is not clear and is not good. After last week's discussion of third-party cookies and you playing with GRC's cookie forensics pages, several people commented that Firefox did not appear to be doing the right thing when it came to blocking third-party cookies in what it calls strict mode. Strict mode is what I want, but, sure enough, strict mode behavior does not appear to be what I'm getting. Under Firefox's Enhanced Tracking Protection, we have three overall settings: standard, strict and custom. Standard is described as, quote, balanced for protection and performance. Pages will load normally. In other words, third-party cookies, we love you. Anybody who wants one can munch on one. Strict is described as stronger protection, but may cause some sites or content to break. It details this further by claiming, it says, Firefox blocks the following: social media trackers, cross-site cookies in all windows, tracking content in all windows, crypto miners and fingerprinters. Well, that all sounds great. The problem is it does not appear to be working at all under Firefox. This issue initially came to my attention in our old-school newsgroups, where I hang out with a great group of people, so it grabbed a lot of attention, and many others have confirmed that, as have I. Firefox's strict mode is apparently not doing what it says, what we want and expect. It says cross-site cookies in all windows. That's not working. Chrome and Bing work perfectly.

In order to get Firefox to actually block third-party cookies, it's necessary to switch to custom mode. Tell it that you want to block cookies. Then, under which types of cookies to block, you cannot choose cross-site tracking cookies. I mean you can, but it doesn't work. You need to turn the strength up higher. So, cross-site tracking cookies and isolate other cross-site cookies? Nope, that doesn't work either. Neither does the setting cookies from unvisited websites. Nope, still doesn't work. It's necessary to choose the custom mode and then the cookie blocking selection of all cross-site cookies, which then, it says in parens, may cause websites to break. Once that's done, GRC's cookie forensics page shows that no third-party session or persistent cookies are being returned from Firefox, just as happens with Chrome and Bing. When you tell them to block third-party cookies, they actually do. Firefox actually does not.

Back when I first wrote this, when third-party cookies were disabled, some of the broken browsers had really weird behavior. It's why I'm testing eight different ways of setting cookies in a browser, because they used to all be jumbled up and some worked and some didn't. Some were broken, some weren't. So in some cases, when you told it to disable third-party cookies, it would stop accepting settings for new cookies, but if you still had any old, you'd call them stale, cookies, then those would still be getting sent back. All of that behavior's been fixed, but it's broken under Firefox.
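The stale-cookie behavior described above can be sketched with a toy model. This is only an illustration under stated assumptions, not how any real browser is implemented: the `ToyBrowser` class, its two switches, and the `.example` site names are all hypothetical. It shows why blocking only the setting of new third-party cookies still lets previously stored ones be sent back.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ToyBrowser:
    """Hypothetical model: separate switches for setting and sending cookies."""
    block_third_party_set: bool = False
    block_third_party_send: bool = False
    jar: dict = field(default_factory=dict)  # cookie site -> cookie value

    def receive_cookie(self, page_site: str, cookie_site: str, value: str) -> bool:
        """Store a cookie unless it is third-party and setting is blocked."""
        third_party = page_site != cookie_site
        if third_party and self.block_third_party_set:
            return False
        self.jar[cookie_site] = value
        return True

    def cookie_sent(self, page_site: str, request_site: str) -> Optional[str]:
        """Return the cookie that would accompany a request, if any."""
        third_party = page_site != request_site
        if third_party and self.block_third_party_send:
            return None
        return self.jar.get(request_site)


browser = ToyBrowser()
# A third-party cookie is stored before any blocking is turned on.
browser.receive_cookie("news.example", "ads.example", "id=1")

# Blocking only new settings leaves the stale cookie flowing back.
browser.block_third_party_set = True
stale = browser.cookie_sent("news.example", "ads.example")

# Blocking on send as well finally stops it.
browser.block_third_party_send = True
after_fix = browser.cookie_sent("news.example", "ads.example")
```

In this sketch `stale` still holds the old cookie while `after_fix` is `None`, which is the difference between the partially broken behavior and the fully blocked behavior.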

Looking at the wording, which specifically refers to cross-site tracking cookies, it appears that Firefox may be making some sort of value judgment about which third-party cross-site cookies are being used for tracking and which are not. That seems like a bad idea. I don't want any third-party cookies. Chrome and Safari have had that all shut down for years. Chrome and Bing will now do it if you tell them to. So what do they imagine? That they can and have somehow maintained a comprehensive list of tracking domains and won't allow third-party cookies to be set by any of those? The only thing that comes to mind is, like, some sort of heuristic thing, and all of that seems dumb. Just turn them off, like everybody else does.
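To make the distinction concrete, here is a minimal sketch of the two policies being contrasted: one that consults a list of known tracking domains, and one that simply refuses every cross-site cookie. Nothing here is taken from Firefox's actual code. The `TRACKER_LIST` contents, the function names, and the naive "last two labels" site comparison are all assumptions made for illustration (real browsers use the Public Suffix List to work out site boundaries).

```python
# Hypothetical list of known tracking sites; a real list would be far larger.
TRACKER_LIST = {"tracker.example"}


def site_of(host: str) -> str:
    """Naive site extraction: keep the last two labels of the host name."""
    return ".".join(host.lower().split(".")[-2:])


def is_cross_site(page_host: str, request_host: str) -> bool:
    """True when the request goes to a different site than the page."""
    return site_of(page_host) != site_of(request_host)


def allow_cookie_list_based(page_host: str, request_host: str) -> bool:
    """Block cross-site cookies only for sites on the tracker list."""
    if not is_cross_site(page_host, request_host):
        return True
    return site_of(request_host) not in TRACKER_LIST


def allow_cookie_block_all(page_host: str, request_host: str) -> bool:
    """Block every cross-site cookie, listed or not."""
    return not is_cross_site(page_host, request_host)


# A third party that nobody has put on the list yet.
unlisted_by_list = allow_cookie_list_based("www.shop.example", "cdn.unknown-ads.example")
unlisted_by_all = allow_cookie_block_all("www.shop.example", "cdn.unknown-ads.example")
```

Under the list-based policy the unlisted third party still gets its cookie, while the block-all policy refuses it. That gap is the concern with any approach that depends on a list being comprehensive.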

You know, there may be more to what's going on here, though. One person in GRC's newsgroup said that they set up a new virtual machine, installed Firefox, and it is working correctly. If that's true, and it's not been confirmed, that would suggest that what we may have is another of those situations we have encountered in the past, where less secure behavior is allowed to endure in the interest of not breaking anything in an existing installation, whereas anything new is run under the new and improved security settings. But if so, that's intolerable too, because it appears to be completely transparent.

That is, no sign of that is shown in the user interface, and if that's really what's going on, then Firefox's UI is not telling us the truth, which, anyway, that's a problem. I wanted to bring all this up because, you know, it should be on everyone's radar, in case others, like me, were trusting and believing Firefox's meaning of the term strict. I would imagine that some of our listeners will be interested enough to dig into this and see whether they can determine what's going on. As everyone knows, I now have an effective incoming channel for effortless sending and receiving of email with our listening community, so I'm really glad for that, and, you know, I'm getting lots of good feedback about that from our listeners.

1:25:09 - Leo Laporte

Okay.

1:25:10 - Steve Gibson

Leo, get a load of this one. PC Magazine brings us the story of a security training firm who inadvertently hired a remote software engineer, only to later discover that he was an imposter based in North Korea. They wrote: A US security training company discovered it mistakenly hired a North Korean hacker to be a software engineer after the employee's newly issued computer became infected with malware. The incident occurred at Know Before, and apparently they didn't. That's K-N-O-W-B-E and then the numeral 4, KnowBe4, which develops security awareness programs to teach employees about phishing attacks and cyber threats. So yeah, you know, they're certainly a security-forward, security-aware company. The company recently hired a remote software engineer who cleared the interview and background check process, but last week KnowBe4 uncovered something odd. After sending the employee a company-issued Mac, KnowBe4 wrote in a post last Tuesday, quote, the moment it was received it immediately started to load malware. The company detected the malware thanks to the Mac's onboard security software. An investigation with the help of the FBI and Google's security arm Mandiant then concluded that the hired software engineer was actually a North Korean posing as a domestic IT worker. Fortunately, the company remotely contained the Mac before the hacker could use the computer to compromise KnowBe4's internal systems. Right, so it was going to VPN into their network and get up to some serious mischief.

When the malware was first detected, the company's IT team initially reached out to the employee, who claimed, quote, that he was following steps on his router guide to troubleshoot a speed issue, unquote. But in reality KnowBe4 caught the hired worker manipulating session files and executing unauthorized software, including using a Raspberry Pi to load the malware. In response, KnowBe4's security team tried to call the hired software engineer, but he, quote, stated he was unavailable for a call and later became unresponsive. Yeah, I'll bet. Oh, I should also say a stock photo of a Caucasian male was modified by AI to appear to be of Asian descent, and that's the photo that this employee submitted as part of his hiring process. KnowBe4 says it shipped the work computer, and get this, to an address that is basically an IT mule laptop farm, which the North Korean then accessed via VPN.

Although KnowBe4 managed to thwart the breach, the incident underscores how North Korean hackers are exploiting remote IT jobs to infiltrate US companies. In May, the US warned that one group of North Koreans had been using identities from over 60 real US citizens to help them snag remote jobs. The remote jobs can help North Korea generate revenue for their illegal programs and provide a way for the country's hackers to steal confidential information and pave the way for other attacks. In the case of KnowBe4, the fake software engineer resorted to using an AI-edited photo of a stock image to help them clear the company's interview process. So this should bring a chill to anyone who might ever hire someone sight unseen, based upon information that's available online, as, you know, opposed to the old-fashioned way of actually taking a face-to-face meeting to interview the person and discuss how and whether there might be a good fit. One of the things we know is going on more and more, and we've talked about it recently, is domestic firms dropping their in-house teams of talent in favor of offshoring their needs as a means of increasing their so-called agility and reducing their fixed costs. This is one of those things that accountants think is a great idea, and I suppose there may be some places where this could work, but remote software development? I'd sure be wary about that one. The new tidbit that really caught my attention, though, was the idea of something that was described as an IT mule laptop farm. Whoa. So this is at a benign location where the fake worker says they're located. Being able to receive a physical company laptop at that location further solidifies the online legend that this phony worker has erected for themselves. So this laptop is received by confederates who set it up in the IT mule farm and install VPN software, or perhaps attach the laptop to a remote KVM over IP system to keep the laptop completely clean.
Either way, this allows the fake worker to appear to be using the laptop from the expected location, when instead they're half a world away in a room filled with North Korean hackers, all working diligently to attack the West. I wish this was, you know, just a B-grade sci-fi movie, but it's all for real and it's happening as we speak. Wow, the world we're in today. Okay, some feedback from our listeners.

Robert said: Hello, Steve. I'll try not to take too much of your time, but I'd like to mention one thing that irked me about the entire "Google trying to eradicate third-party cookies is a good thing" business, he said. TL;DR: Google tries to get rid of third-party cookies to gain a monopoly in the ad market, not to protect users. He says that. I don't see it that way. But okay, he said:

First and foremost, eradicating third-party cookies is a good thing as a vehicle to stop tracking of website visitors. The only reason why Google would be able to actually force website owners to move from their cookie-based ad strategies to something else, FLoC, Topics, labels, whatever they call it, is that they have a near monopoly in the browser market. Of course, I 100% agree with that. The only way the world would ever be able to drop third-party cookies would be if it was forced to do so, and at this time in history, only Google is in the position to have the market power to force such a change. Anyway, he goes on: It's important to keep in mind that Google is still a company that makes most of their money selling ads. Right, okay, agreed. Every move they have made so far smelled like they wanted to upgrade their browser monopoly into an ad tech monopoly. Okay, I would argue they already have that. He said: My suspicion is that it wasn't necessarily the ad companies directly that threatened Google about its plan to eradicate third-party cookies, but rather some pending monopoly concern about the ad market. But maybe I'm just too optimistic about that. So, well, just a thought I felt was a bit underrepresented. Thanks again for the work, Robert.

Okay, so the problem I have with Robert's analysis is that I cannot see how Google is giving itself any special privileges through the adoption of their privacy sandbox. While it is absolutely true that they were dramatically changing the rules, everything that they are doing was a 100% open process with open design and open discussion and open source, and they themselves were also, you know, forcing themselves to play by those same rules that they were asking everyone else to play by. You know, very much unlike Microsoft; we were just talking about them versus AV. Microsoft is unwilling to accept the same limitations that they're asking the AV vendors to accept by using the API that they provide. Google's not doing that. They're going to use the same privacy sandbox that they're saying everyone else should. So there was no advantage that they had over any other advertiser just because it was their Chrome web browser, and had they been successful in bringing about this change, the other browsers would have eventually adopted the same open privacy sandbox technologies. But, as we know, that hasn't happened. I did not have time to address this fully last week due to the CrowdStrike event. So anyway, I'm glad for Robert's note. The EU bureaucrats' apparent capitulation to the slimy tracking and secretive user profiling underworld, which in turn forced Google's browser to retain its historically abused third-party cookie support, represents a massive privacy loss to the world. This was the wrong outcome and I sincerely hope that it's only a setback that will not stand for long.

Lisa in Worcester, Massachusetts wrote: Steve, another intriguing podcast, many thanks. I find it interesting the influence Google has and doesn't have. It seems more powerful over one company like Entrust than a whole market like third-party cookies. Is it influence, or is it calculated cost-benefit analysis that helps Google/Alphabet decide where to flex its muscles? Thoughts from Worcester, Massachusetts, Lisa.

Okay, as an observer of human politics, I often observe the simple exercise of power. In US politics, we see this all the time. Both major political parties scream at each other, crying foul and unfair, but they each do what they do simply because they want to and they can, when they have the power to do so. And I suspect the same is true with Google. Google has more power than Entrust, but less power than the European Union, so Google was able to do what it wished with Entrust, whereas the EU had the power to force Google to do what it wished. And who knows what's really going on inside Google? I very much wanted to see, as we know, the end of third-party cookie abuse, but we don't really know that Google, or at least that all of Google, did.