Security Now 966 Transcript

Please be advised this transcript is AI-generated and may not be word for word. Time codes refer to the approximate times in the ad-supported version of the show.

0:00:00 - Mikah Sargent

Coming up on Security Now: first, we talk about Voyager. You may have thought that Voyager was buh-bye, but it is not. It is still sending signals. Plus, Tim Berners-Lee, the father of the web, sits down and has a serious talk (well, at least as far as Tim Berners-Lee sees it) about the state of the internet and maybe some disagreements he has with the way it is all going. We also have a lot of feedback from listeners about last week's episode regarding passkeys and the current state of security online when it comes to passwords and two-factor authentication and what you should or shouldn't use. And we also talk about Morris II, a worm that has some serious implications for generative AI agents. All of that and so much more coming up on Security Now.

0:01:00 - Leo Laporte

Podcasts you love, from people you trust.

0:01:10 - Mikah Sargent

This is Security Now with Steve Gibson and, this week, Mikah Sargent. Episode 966, recorded Tuesday, March 19th, 2024: Morris II.

0:01:23 - Leo Laporte

This episode is brought to you by Robinhood. Did you know that even if you have a 401(k) for retirement, you can still have an IRA? Robinhood is the only IRA that gives you a 3% boost on every dollar you contribute when you subscribe to Robinhood Gold. But get this: now through April 30th, Robinhood is even boosting every single dollar you transfer in from other retirement accounts with a 3% match. That's right, no cap on the 3% match. Robinhood Gold gets you the most for your retirement thanks to their IRA with a 3% match. This offer is good through April 30th. Get started at Robinhood.com/boost. Subscription fees apply. And now for some legal info: claim as of Q1 2024, validated by Radius Global Market Research. Investing involves risk, including loss. Limitations apply to IRAs and 401(k)s. 3% match requires Robinhood Gold for one year from the date of first 3% match. Must keep Robinhood IRA for five years. The 3% matching on transfers is subject to specific terms and conditions. Robinhood IRA available to US customers in good standing. Robinhood Financial LLC, member SIPC, is a registered broker dealer.

0:02:37 - Mikah Sargent

Hello and welcome to Security Now, the show where the cybersecurity guru, Steve Gibson, provides a week's worth of cybersecurity news in but a small package so you can just plug in directly and download it into your cranium. I am just here to help facilitate this. I am, how do you say, the... let's go with the super-safe USB flash drive that you can plug into your cranium. I'm just providing the means by which you connect to Steve Gibson, who actually is providing all of the information. That's the role I play here. Also the role of shock and awe, because occasionally I am gobsmacked by what Steve ends up telling us. But, Steve, it is good to see you again this week. How you doing?

0:03:30 - Steve Gibson

Mikah, great to be with you for our second week in a row as Leo finishes working on his tan and presumably gets ready to return. So we are going to do what we thought we were going to do last week, which got pushed because of what turned out to be a surprisingly interesting episode for our listeners: passkeys versus multi-factor authentication. In fact, I was so overwhelmed with feedback from that, and it was useful stuff, comments and questions and so forth, that it ended up still being a lot of what we talk about today. Certainly the whole issue of cross-network proving who you say you are, which is to say authentication, is a big deal and important to everybody who's using the internet. So we're going to talk about that some more. But we are going to get to what was supposed to be last week's topic, which I had originally as Morris, Roman numeral II, but I saw that they're referring to their creation as Morris the Second. So Morris the Second is today's number 966 and counting Security Now podcast title.

But first we're going to talk about how it may be that we were doing the requiem for Voyager 1 a little prematurely. It may not be quite dead or insane or whatever it was that it appeared to be a week ago. Also, the World Wide Web has just turned 35. What does its dad think about how that's going? What's the latest unbelievably horrific violation of consumer privacy which has come to light? We're going to share a lot about what our listeners thought about passkeys and multi-factor authentication and the ins and outs of all that. And then, as I promised, we're going to look at how a group of Cornell University researchers managed to get today's generative AI to behave badly, and at just how much of a cautionary tale this may be. So I think there's a lot of interesting stuff for us to talk about.

0:06:06 - Mikah Sargent

Absolutely. Again, shock and awe. I can't wait, I'm looking forward to it.

Before we get to that, though, we will take a quick break so I can tell you about a sponsor of today's episode of Security Now. It's brought to you by our friends at ITProTV, now called ACI Learning. You already know the name ITProTV. We've talked about them a lot on this here network, and now, as a part of ACI Learning, ITPro has expanded its capabilities, providing more support for IT teams.

With ACI Learning, ITPro covers all of your team's audit, cybersecurity and information technology training needs. It provides you with a personal account manager to make sure you aren't wasting anyone's time. Your account manager will work with you to ensure your team only focuses on the skills that matter to your organization, so that you can leave unnecessary training behind. ACI Learning kept all the fun and the personality of ITProTV while amplifying the robust solutions for all your training needs. So let your team be entertained while they train, with short-format content and more than 7,200 hours to choose from. Visit go.acilearning.com/twit. For teams that fill out ACI's form, you can receive a free trial and up to 65% off an ITPro Enterprise Solution Plan. That's go.acilearning.com/twit. That's T-W-I-T. Thanks so much to ACI Learning for sponsoring this week's episode of Security Now. Let's get back to the show with our photo of the week. Oh, we've got you muted, or you've got you muted, one of the two.

0:07:50 - Steve Gibson

I'm... You're back.

0:07:51 - Mikah Sargent

You're back. Oh, hello. So let's retake that.

0:07:56 - Steve Gibson

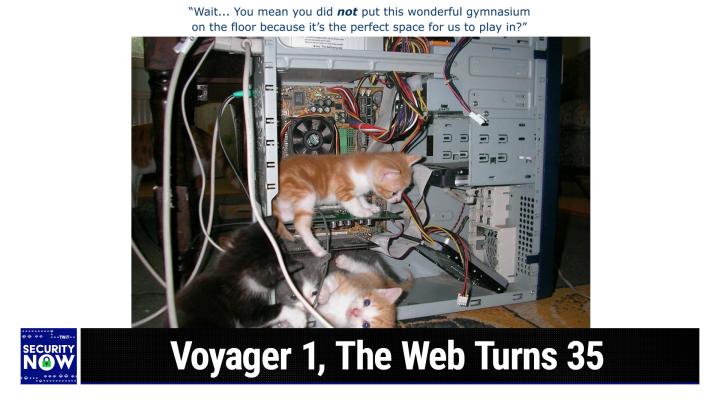

So, okay, I have a large collection of photos in my archive that I'm ready to deploy on demand, but this one just caught me by surprise. Somebody tweeted it and I thought it was so cute. The caption I gave it was, "Wait, you mean you did not put this wonderful gymnasium on the floor because it's the perfect space for us to play in?" We have an open, old-school, large computer case which three kittens have managed to get themselves into, and a fourth one is sort of looking on enviously. One of them, the upper kitten, looks like it's standing on maybe an audio card or a graphics card that's been added to the case. I actually have a number of these exact cases. I look at it and it's very familiar.

The motherboard in there is actually pretty ancient, so I don't know what the story is, where this came from or what's going on, and the hard drives look like they are in need of some help. But anyway, oh my God, these little kittens are adorable. They're so adorable. I mean, how could anything be as cute as these things are? So, not exactly a cat video, but cat video done Security Now style.

0:09:33 - Mikah Sargent

Yes, indeed, those adorable little cats that you should absolutely keep. I like to imagine that this is somebody who brought in their computer to a place and said, "There's something wrong with this thing." The person that's cleaning it out opens it up, and these little kittens are inside playing, and says, "Ah, that's what's wrong." Yeah, a little fur ball. Isn't that kind of what SpinRite does, right? It just sends little kittens inside to fix the hard drives.

0:09:57 - Steve Gibson

That's my secret formula. Ah, I figured it out.

0:10:01 - Mikah Sargent

That's right. All right, let's get to the security news.

0:10:04 - Steve Gibson

Okay, so we have a quick follow-up to, as I said, our recent, perhaps premature, eulogy for the Voyager 1 spacecraft. It may just turn out to have been a flesh wound. The team occupying that little office space in Pasadena instructed Voyager to alter a location of its memory in what everyone who's covering this news is calling a poke instruction. Okay, now, peek and poke were the verbs used by some earlier high-level languages when the code, which is talking in terms of variables and not in terms of storage, wished to either directly inspect (which was to say, to peek) or directly alter (to poke) the contents of memory. So for the past several months there has been a rising fear that the world may need to say farewell to the Voyager 1 spacecraft after it began to send back just garbled data that nobody understood. And so we were saying, well, it's lost its mind, it's gone insane, it's just spitting gibberish. But after being poked just right, and then waiting (what, 22 and a half hours, twice, I think, is the current speed-of-light round-trip time, so you poke and then you're very patient), Voyager 1 began to read out the data from its flight data subsystem, the FDS. That is, basically, it began doing a memory dump, and this brought renewed hope that the spacecraft is actually still, somewhat miraculously, in better condition than was feared. In other words, it hasn't gone insane, and the return of the flight data subsystem memory will allow engineers to dig through the returned memory readout for clues. Although, paradoxically, the data was not sent in the format that the FDS is supposed to use when it's working correctly, it is nevertheless readable.

So, you know, we're not out of the woods yet. It still could be unrecoverable, and this is just another one of its death throes. And really, realistically, at some point it will be. I mean, these veterans are going to have to turn the lights off for the last time and put their office space back up for lease, but apparently not yet. So I expect that we'll be checking in from time to time to see how our beloved Voyager 1 spacecraft is doing. But the game is not up yet.
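To make the peek and poke idea concrete, here is a minimal Python sketch. It models memory as a flat block of bytes; the 16-byte size, the address and the value are made up for illustration, and nothing here reflects how Voyager's flight data subsystem is actually commanded.

```python
# Toy model of PEEK and POKE against a flat block of memory.
# The size, addresses and values are illustrative assumptions only.

memory = bytearray(16)  # 16 bytes, all initially zero


def peek(address: int) -> int:
    """Directly inspect the byte stored at a memory address."""
    return memory[address]


def poke(address: int, value: int) -> None:
    """Directly alter the byte stored at a memory address."""
    memory[address] = value & 0xFF  # keep the value within one byte


def dump() -> str:
    """Read out all of memory as hex, like a very small memory dump."""
    return memory.hex(" ")


poke(3, 0x2A)    # alter one location...
print(peek(3))   # ...then inspect it: prints 42
print(dump())    # and read the whole block back
```

The point of the sketch is only that both operations work on raw addresses rather than on named variables, which is why a single well-chosen poke can change what a program does next.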

So that's very cool. And at this point, it's not clear how much new science is being sent back. I mean, it was incredibly prolific while it was moving through the planets of the solar system and sending back amazing photos of stuff that we'd never seen before. At this point, it's sort of being kept alive just because it can be. So, you know, why not? It's not very expensive to do. Okay, so the web officially turned 35. And its dad, Tim Berners-Lee, has renewed his expression of disappointment over how things have been going recently.

0:14:01 - Mikah Sargent

So this is one of those "I'm not angry, I'm disappointed" situations. I was hoping he was happy with his child. "Yes, my son, it is..."

0:14:08 - Steve Gibson

"I'm disappointed in the way you have turned out." So one week ago, on March 12th, Tim wrote: Three and a half decades ago, when I invented the web (which, you know, few people can say), its trajectory was impossible to imagine. There was no roadmap to predict the course of its evolution. It was a captivating odyssey filled with unforeseen opportunities and challenges. Underlying its whole infrastructure was the intention to allow for collaboration, foster compassion and generate creativity. Okay, I would argue that we got two of those three, at least. He says, what I termed the three Cs. Now, of course, a lot of this is retrospective, right? It's easy to rewrite history 35 years later, but we'll see. Anyway, he says it was to be a tool to empower humanity.

The first decade of the web fulfilled that promise. The web was decentralized, with a long tail of content and options. It created small, more localized communities, provided individual empowerment and fostered huge value. Yet in the past decade, instead of embodying these values, the web has instead played a part in eroding them. The consequences are increasingly far-reaching. From the centralization of platforms to the AI revolution, the web serves as the foundational layer of our online ecosystem, an ecosystem that is now reshaping the geopolitical landscape, driving economic shifts and influencing the lives of people around the world. Five years ago, when the web turned 30, I called out some of the dysfunction caused by the web being dominated by the self-interest of several corporations that have eroded the web's values and led to breakdown and harm. Now, five years on, as we arrive at the web's 35th birthday, the rapid advancement of AI has exacerbated these concerns, proving that issues on the web are not isolated but rather deeply intertwined with emerging technologies.

There are two clear, connected issues to address. The first is the extent of power concentration, which contradicts the decentralized spirit I originally envisioned (if indeed he originally did). He says this has segmented the web, with a fight to keep users hooked on one platform (gee, wonder what that could be) to optimize profit through the passive observation of content (you know, like while they drool). This exploitative business model is particularly grave in this year of elections that could unravel political turmoil. Compounding this issue is the second: the personal data market that has exploited people's time and data, with the creation of deep profiles that allow for targeted advertising and, ultimately, control over the information people are fed. How has this happened? Leadership, hindered by a lack of diversity, has steered away from a tool for good, for public good, and one that is instead subject to capitalist forces, resulting in monopolization. Governance, which should correct for this, has failed to do so, with regulatory measures being outstripped by the rapid development of innovation, leading to a widening gap between technological advancements and effective oversight.

The future, he writes, hinges on our ability to both reform the current system and create a new one that genuinely serves the best interests of humanity. To which I'm just going to insert here: good luck with that. Anyway, to achieve this, he writes, we must break down data silos to encourage collaboration, create market conditions in which a diversity of options thrive to fuel creativity, and shift away from polarizing content to an environment shaped by a diversity of voices and perspectives that nurture empathy and understanding. Or we could just all watch cat videos, because, you know, those are cute. Anyway, he says, to truly transform the current system, we must simultaneously tackle its existing problems and champion the efforts of those visionary individuals who are actively working to build a new, improved system.

A new paradigm is emerging, one that places individuals' intention rather than attention at the heart of business models, freeing us from the constraints of the established order and returning control over our data. Driven by a new generation of pioneers, this movement seeks to create a more human-centered web aligned with my original vision. These innovators hail from diverse disciplines (research, policy and product design), united in their pursuit of a web and related technologies that serve and empower us all. Bluesky and Mastodon don't feed off of our engagement, but still create group formation. GitHub provides online collaboration tools, and podcasts contribute to community knowledge. I should mention, podcasts that are disappearing rapidly, unfortunately.

As this emergent paradigm gains momentum, we have the opportunity to reshape a digital future that prioritizes human well-being, equity and autonomy. The time to act and embrace this transformative potential is, guess what, now. As outlined in the Contract for the Web, a multitude of stakeholders must collaborate to reform the web and guide the development of emerging technologies. Innovative market solutions like those I've highlighted are essential to this process. Forward-thinking legislation (okay, now there's an oxymoron for you) from governments worldwide can facilitate these solutions and help manage the current system more effectively. Finally, we, as citizens all over the world, need to be engaged and demand higher standards and greater accountability for our online experiences. The time is now to confront the dominant system's shortcomings while catalyzing transformative solutions that empower individuals. This emergent system, ripe with potential, is rising, and the tools for control are within reach.

It's starting to sound like a manifesto a little bit. It really is, and I only have a little bit more, and then we will discuss this. Part of the solution is the so-called Solid Protocol, capital S, capital P, a specification and a movement to provide each person with their own personal online data store, known as a pod. POD, right? Personal online data. We can return the value that has been lost and restore control over personal data by putting it in a pod. With Solid, individuals decide how their data is managed, used and shared. This approach has already begun to take root, as seen in Flanders, where every citizen now has their own pod after Jan Jambon announced four years ago that all Flanders citizens should have a pod. This is the result of data ownership and control, and it's an example of the emergent movement that is poised to replace the outdated, incumbent system.

And finally: realizing this emergent movement won't just happen. Boy, is he right about that. He says it requires support for the people leading the reform, from researchers to inventors to advocates. We must amplify and promote these positive use cases and work to shift the collective mindset of global citizens. The Web Foundation that I co-founded with Rosemary Leith has and will continue to support and accelerate this emergent system and the people behind it. However, there is a need, an urgent need, for others to do the same, to back the morally courageous leadership that is rising, collectivize their solutions and overturn the online world being dictated by profit to one that is dictated by the needs of humanity. It is only then that the online ecosystem we all live in will reach its full potential and provide the foundations for creativity, collaboration and compassion. Tim Berners-Lee, 12th of March 2024.

0:24:45 - Mikah Sargent

Well, you've got your pod right.

0:24:48 - Steve Gibson

Call me jaded, call me old, but I do not see any way for us to get from where we are today to anything like what Tim envisions. The web has been captured, hook, line and sinker, by commercial interests, and they are never going to let go. Diversity? Well, the one browser most of the world uses is maintained by the world's largest advertiser, and no one forced that to happen. For some reason, most people apparently just like that colorful round Chrome browser icon. And Chrome is cleaner looking. Its visual design is appealing. Somehow the word spread that it was a better browser, and nothing convinced people otherwise. And what Microsoft has done to their Edge browser would drive anyone anywhere else.

But I've wandered away from my point. People do not truly care about things that they neither see nor understand. How do you care about something that you don't really understand? The technologies that are being used to track us around the internet and to collect data on our actions are both unseen and poorly understood. People have some dull sense that they're being tracked, but only because they've heard it said so many times: "Oh, I'm being tracked." But they don't know, they don't see it. They just kind of think, okay, it makes me feel uncomfortable, but they still do what they were doing. They don't have any idea what that really means. They certainly have no idea about any of the details, and they have better things to worry about.

0:26:48 - Mikah Sargent

Yes, most importantly, they have better things to worry about.

0:26:51 - Steve Gibson

Yeah, that's right. Tim writes: Part of the solution is the Solid Protocol, a specification and a movement to provide each person with their own personal online data store, known as a pod. We can return the value that has been lost and restore control over personal data. Now, okay, while I honor Tim's spirit and intent, I really do, I seriously doubt that almost anyone could be bothered to exercise control over their online personal data repository. I mean, I don't even know what that looks like. The listeners of this podcast would likely be curious to learn more, but, as one of my ex-girlfriends used to say, we're not normal.
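For a rough sense of what "exercising control" over a personal data store could involve, here is a toy Python sketch. It is not the Solid Protocol or any Solid library's API; the `Pod` class, its method names and the example app name are all hypothetical, chosen only to illustrate the idea that the owner grants and revokes access per item.

```python
# Toy model of a personal online data store ("pod").
# Hypothetical names throughout; this does not implement the Solid Protocol.


class Pod:
    """The owner stores items and decides which apps may read each one."""

    def __init__(self, owner: str) -> None:
        self.owner = owner
        self._data: dict[str, object] = {}
        self._grants: dict[str, set[str]] = {}  # item name -> apps allowed to read

    def store(self, name: str, value: object) -> None:
        """Save an item; nobody but the owner can read it until granted."""
        self._data[name] = value
        self._grants.setdefault(name, set())

    def grant(self, name: str, app: str) -> None:
        """Allow one app to read one item."""
        self._grants[name].add(app)

    def revoke(self, name: str, app: str) -> None:
        """Withdraw an app's permission to read one item."""
        self._grants[name].discard(app)

    def read(self, name: str, app: str) -> object:
        """Return the item only if the app currently holds a grant."""
        if app not in self._grants.get(name, set()):
            raise PermissionError(f"{app} may not read {name}")
        return self._data[name]


pod = Pod("alice")
pod.store("driving_history", ["trip 1", "trip 2"])
pod.grant("driving_history", "insurer_app")
print(pod.read("driving_history", "insurer_app"))
pod.revoke("driving_history", "insurer_app")  # later reads now raise PermissionError
```

Even in this tiny form, every item needs a decision about who gets to see it, which is exactly the ongoing effort that most people are unlikely to invest.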

My feeling is that the web is going to do what the web is going to do. Yes, there are things wrong with it and, yes, it can be deeply invasive of our privacy, but it also appears to be largely self-financing, apparently at least in part thanks to those same privacy invasions. We pay for bandwidth access to the internet, and the rest is free. Once we're connected, we have virtually instantaneous and unfettered access to a truly astonishing breadth of information, and it's mostly free. There are some annoying sites that won't let you in without paying, so most people simply go elsewhere.

The reason most of the web is free is that, with a few exceptions such as Wikipedia, for-profit commercial interests see an advantage to them in providing it. Are we being tracked in return? Apparently. But if that means we get everything for free, do we really care? If having the internet know whether I wear boxers or briefs means that all of this is opened up to me without needing to pay individually for every site I visit, then okay, briefs have always been my thing. Tim may have invented the World Wide Web 35 years ago, but he certainly did not invent what the web has become. That, of course, is why he's so upset. The web has utterly outgrown its parent and it's finding its own way in the world. It is far beyond discipline and far beyond control and, most importantly of all, today it is already giving most people exactly what they want. Good luck changing that.

0:29:53 - Mikah Sargent

Well put, Steve. Honestly, when I think about this, and you said maybe you're jaded and old and this and that and the other, I may be jaded, but I'm not exactly aged. So, even as a relative youth hearing that, I want to put on a French beret and chant and say hurrah and feel it. I do feel it, but I think, realistically, it is not realistic, right, if we're being honest. And so, as cool as that would be and as amazing as that would be, ultimately what you're saying is that the stuff that Tim Berners-Lee is talking about here is so abstracted from how people use these devices to connect to the internet and to communicate with one another that it would require some level of sitting everyone down across the entire world and explaining to them how all of this works for there to be even the beginning of a concern about what would be necessary to convince everybody that they should care about this. And, as I'm saying that, you heard all the hedging that kind of took place there. It wouldn't even necessarily make a difference even if you did explain it, because they still have to care about it and, most importantly, most people don't need to care about it. They have bigger, better things in their world that they have to care about, and that is, I think, always going to be the case.

And the people who do care about this stuff, we do our best to communicate and educate. But, yeah, I don't know. To take the time to write all of this out and put forth this idea, I think, is a very noble thing, but I do wonder. I wish I could talk to Tim Berners-Lee, just face to face, and say, "What do you think? Do you really think that anyone's going to do this, or is this just a hopeful sort of, I'm putting it out into the world?" How high is that ivory tower? Exactly, exactly. We're down here. Yeah, I don't know.

0:32:29 - Steve Gibson

Yeah, and again, I really do believe that most users' wishes are now being fulfilled. Right? I mean, my wife asks me a few questions every evening and I say, "Well, did you Google it?" That's what I do. I just ask. I ask the internet and it tells me the answer. Because there's so much going on, it's so complicated now that the right model is no longer to try to know everything, it's simply to know how to find out. That's the future, with the knowledge explosion that we're now in, and the content explosion.

There was an anecdote for a while. I don't remember now exactly what it was, but it was something like this: back when people were typically using a single password universally for all their stuff, someone did an experiment where they went up to people and said, "Here's a lollipop, I'll trade you for your password." And most people said okay. I mean, they just didn't give a rat's ass about security, right? Most people just aren't as focused. This podcast is all about this kind of focus and, as I said, an ex-girlfriend used to say to me, "You're not normal." So, yeah, we're not. But for most of the world, the internet does what they want. And start asking them to pay in some significant way? I mean, look at the club, look at Club TWiT.

0:34:18 - Mikah Sargent

Yeah, I mean, it's a very small percentage of the overall listener base. Yes. And you say pay in some significant way. Anecdotally, it doesn't have to be a significant amount of payment for somebody to go, "Well, suddenly I don't care about that anymore." There have been a number of times, like with an app: "Oh, I saw that everybody's posting these photos of themselves. They've been AI generated. How do they do that?" I say, "Oh, it's this app, and you pay like 50 cents to get a photo generation." "Oh, never mind, I don't care about that anymore." Yeah, 50 cents. It doesn't take that much for them to be like, no, no, no, that's not something I'm into.

0:34:55 - Steve Gibson

No, and, as we know, there are some sites which have survived in the pay-to-enter model. But so many of the early attempts fell flat because the moment the sites put up a paywall, most people said, "Eh, you know, I clicked the first link in Google and it took me to the paywall. What's the second link take me to? Oh look, that's free."

0:35:21 - Mikah Sargent

Able to get to it? Yeah.

0:35:23 - Steve Gibson

Okay.

So, in the show notes, I gave this next bit of news the title "Wow, just wow," because it tells the story of something that's so utterly violating of consumer rights and privacy that it needed that title. The headline in last week's New York Times read: "Automakers Are Sharing Consumers' Driving Behavior With Insurance Companies." And the subhead read: LexisNexis, which generates consumer risk profiles for insurers, knew about every trip GM drivers had taken in their cars, including when they sped, braked too hard or accelerated rapidly. Okay, so, it's astonishing. Wow. I know, exactly. Here's the real-world event that the New York Times used to frame their disclosure. They wrote: Kenn Dahl (that's not D-O-L-L, that's D-A-H-L, and it's K-E-N-N) says he has always been a careful driver. The owner of a software company near Seattle, he drives a leased Chevrolet Bolt. He's never been responsible for an accident.

So Mr. Dahl, at age 65, was surprised in 2022 when the cost of his car insurance jumped by 21%. Quotes from other insurance companies were also high. One insurance agent told him his LexisNexis report was a factor. LexisNexis, they write, is a New York-based global data broker with a risk solutions division that caters to the auto insurance industry and has traditionally kept tabs on car accidents and tickets. Okay, right, public record things. I mean, accidents and tickets, that's out there.

Upon Mr. Dahl's request, LexisNexis sent him a 258-page consumer disclosure report, which it must provide per the Fair Credit Reporting Act. What it contained stunned him: more than 130 pages detailing each time he or his wife had driven the Bolt over the previous six months. It included the dates of 640 trips, their start and end times, the distance driven and an accounting of any speeding, hard braking or sharp accelerations. The only thing it didn't have is where they had driven the car. On a Thursday morning in June, for example, the car had been driven 7.33 miles in 18 minutes. There had been two rapid accelerations and two incidents of hard braking. According to the report, the trip details had been provided by General Motors, the manufacturer of the Chevy Bolt. LexisNexis analyzed that driving data to create a risk score "for insurers to use as one factor of many to create more personalized insurance coverage."
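The fields described in that report (distance, duration and counts of flagged events per trip) are enough to compute simple summary statistics. The Python sketch below is a hypothetical illustration using the one trip quoted above; the `Trip` record, the function names and the "events per 100 miles" metric are assumptions made for this example, since the article does not describe how LexisNexis actually calculates its risk score.

```python
# Hypothetical summary of per-trip driving data.
# The metric here is an illustrative assumption, not LexisNexis's method.
from dataclasses import dataclass


@dataclass
class Trip:
    miles: float
    minutes: float
    rapid_accelerations: int = 0
    hard_brakes: int = 0
    speeding_events: int = 0

    @property
    def flagged_events(self) -> int:
        """Total number of flagged events recorded for this trip."""
        return self.rapid_accelerations + self.hard_brakes + self.speeding_events


def average_mph(trip: Trip) -> float:
    """Average speed over the trip in miles per hour."""
    return trip.miles / (trip.minutes / 60)


def events_per_100_miles(trips: list[Trip]) -> float:
    """Flagged events normalized by the total distance driven."""
    total_miles = sum(t.miles for t in trips)
    total_events = sum(t.flagged_events for t in trips)
    return 100 * total_events / total_miles if total_miles else 0.0


# The Thursday-morning trip from the report: 7.33 miles in 18 minutes,
# two rapid accelerations and two incidents of hard braking.
june_trip = Trip(miles=7.33, minutes=18, rapid_accelerations=2, hard_brakes=2)
print(round(average_mph(june_trip), 1))
print(round(events_per_100_miles([june_trip]), 1))
```

Note that nothing in these fields says where the car went, yet a short, slow trip with four flagged events can still look bad once it is normalized by distance.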

According to a LexisNexis spokesman, Dean Carney, eight insurance companies had requested information about Mr. Dahl from LexisNexis over the previous month. Mr. Dahl said, "It felt like a betrayal. They're taking information that I didn't realize was going to be shared and screwing with our insurance." Okay, now, since this behavior is so horrifying, I'm going to share a bit more of what the New York Times wrote. They said: in recent years, insurance companies have offered incentives to people who install dongles in their cars or download smartphone apps that monitor their driving, including how much they drive, how fast they take corners, how hard they hit the brakes and whether they speed.

But, as Ford Motor put it, "Drivers are historically reluctant to participate in these programs." And this was written in a patent application that describes what is happening instead: "Car companies are collecting information directly from internet-connected vehicles for use by the insurance industry." In other words, monetizing, right? Because the insurance industry is paying to receive that information. So it's another means by which today's consumer is being monetized without their knowledge. The New York Times says that sometimes this is happening with a driver's awareness and consent.

Car companies have established relationships with insurance companies so that if drivers want to sign up for what's called usage-based insurance, where rates are set based on monitoring of their habits, it's easy to collect that data wirelessly from their cars, but in other instances, something much sneakier has happened.

Modern cars are internet enabled, allowing access to services like navigation, roadside assistance and car apps that drivers can connect to their vehicles to locate them or unlock them remotely. In recent years, automakers including GM, Honda, Kia and Hyundai have started offering optional features in their connected car apps that rate people's driving. Some drivers may not realize that if they turn on these features, the car companies then give information about how they drive to data brokers like LexisNexis. And, again, not give: sell. Automakers and data brokers that have partnered to collect detailed driving data from millions of Americans say they have drivers' permission to do so, but the existence of these partnerships is nearly invisible to drivers, whose consent is obtained in fine print and murky privacy policies that few ever read. Especially troubling is that some drivers with vehicles made by GM say they were tracked even when they did not turn on the feature, called OnStar Smart Driver, and that their insurance rates went up as a result.

0:43:13 - Mikah Sargent

So I do have a problem with that last bit, simply because it's just someone saying that. I almost wish that there was some due diligence there. I'm sure you've seen it: you say here's how you fix it, and they say I've done that, and then you go and check and they didn't do the thing that you told them to do and that they should have done. I wouldn't be surprised if they did accidentally opt in. But all that's to say, whether you opt in or not, this is still something that should be brought to light, all of it, especially if it's being put forth as here are these cool features you get, and secretly, underneath, what it's doing is giving access. Yeah, that's bad. I just don't know if I like that from the New York Times there at the end.

0:44:06 - Steve Gibson

Well, so one analogy that occurs to me is how and we've mentioned this a number of times in prior years during the podcast is employees in an organization sometimes believe that what they do on their corporate computer is private, is like their business, even when the employee agreement and occasional reminder meetings and so forth say that's not the case. This is a corporate network, corporate bandwidth, a corporate computer, and what you do is owned by the company. We have suggested that that really ought to be on a label running across the top of their monitor. Yes, it literally ought to say right in front of them. Please remember that everything you do on this computer, which is owned by the company, on the bandwidth owned by the company and the data owned by the company is not private, right? So the analogy, the screens that all these computers have, imagine, if they said along the bottom, your driving is being monitored by the company you purchased this from and is being sold to your car insurance provider.

0:45:32 - Mikah Sargent

They don't want to do that, Steve. Obviously we're never going to see that.

0:45:39 - Steve Gibson

But that's the point is that this is going on surreptitiously, and it being surreptitious is clearly wrong. Anyway, stepping back from the specifics of this particularly egregious behavior, add the context of Tim Berners-Lee's unhappiness with what the web has become and the growing uneasiness over the algorithms being used by social media companies to enhance their own profits, even when those profits come at the cost of the emotional and mental health of their own users. We see example after example of amoral, aggressive profiteering by major enterprises, where the operative philosophy appears to be we'll do this to make as much money as we can, no matter who is hurt, until the governments in whose jurisdictions we're operating get around to creating legislation which specifically prohibits our conduct. But until that happens, we'll do everything we can to fight against those changes, including, where possible, lobbying those governmental legislators.

0:47:03 - Mikah Sargent

Honestly, we could just take that text and slap it on the screen anytime we talk about any antitrust legislation across any of our shows, and that perfectly sums up exactly what's going on in every single case. It's like make us stop. Yeah, exactly.

0:47:24 - Steve Gibson

And until you do, we're going to use every clever means we have of profiting from every area in which we have not been made to stop. There's no more morality, there's no more ethics.

0:47:44 - Mikah Sargent

It's profit wherever possible.

0:47:48 - Steve Gibson

That is exactly Tim Berners-Lee's complaint, and it's never going to change because it's just too pervasive. And again, as a consequence of this, the internet is largely free, and I think that's a trade-off most people would choose to make rather than having to pay. Remember that there was early talk about micropayments, where when you went to a website, it would ding you some fraction of something or other, and that would make people very uncomfortable. They'd be like, well, wait a minute. Suddenly, links are not free to click on. Yeah, exactly.

0:48:34 - Mikah Sargent

There's a cost to clicking on that link. How many times per month should I click on this? And then you're telling your kids don't click on links and it would just completely reshape everything. And then there would be so many. I can't imagine how much more money and time would have to go into customer support because someone would click on a link and then say I'm not satisfied with this page and I don't want to have paid for this page because I didn't get the answer I wanted. That's a very good point.

0:49:05 - Steve Gibson

That's a very good point, because right now it's like, well, it's free, so go pound sand somewhere. It's like, tough. Yeah, yeah. Well, again, I don't mean to be just simply complaining, because I also recognize, as I said, this is why the web is here. I mean, I was present pre-web, during the early days when there was not much on the net, and the question was, well, why is anyone going to put anything on the internet? Because there's nobody on the internet to go see it. So there was this chicken-and-egg problem: there's no vast population of users actually going onto the internet to do anything, so why is anyone going to put anything there? And if no one puts anything there, then no one is going to be incentivized to go and get what's not there.

So it happened anyway and the way it's evolved. As I said, I'm actually not complaining. This is not a I mean, this sounds like I'm doing some holier than thou rant. It's not the case. I like it the way it is. And those of us who are clever enough to mitigate the tracking that's being done and the monetizing of ourselves, well, we get the benefit that is being reaped by all those who aren't. So it works and I think, on that note, we should take our second break.

0:50:43 - Mikah Sargent

Let us take a break so I can tell you about Delete Me, who is bringing you this episode of Security Now. If you've ever searched (how appropriate) for your name online and you were shocked, like I was, to see how much of your personal information is actually out there, well, that is where Delete Me comes into play. It helps reduce the risk of identity theft, credit card fraud, robocalls, cybersecurity threats, harassment and unwanted communications overall. I've used this tool personally, and as a person who has an online presence. I've written for sites, I've got lots of news video out there and then, of course, my work here at TWiT and other places doing podcasting.

I didn't want that part of me to disappear from the internet and it doesn't have to. Delete Me will go and look at those different data brokers and those different sites that are storing my information, like where I live, where I have lived, who my relatives are that kind of information and make sure that that was removed. And I have found it very easy to do and I love that they keep me updated as they continue to remove stuff. See, the first step for Delete Me if you decide you want to use it, is to sign up and submit some of your basic personal information for removal. You got to tell them who you are so they know what to look for and actually find it and get rid of it. Then Delete Me experts will find and remove your personal information from hundreds of data brokers, helping reduce your online footprint and keeping you and your family safe. Most importantly, Delete Me continues to scan and remove your personal information regularly, because those data brokers will continue to scoop it up and put it back into those data broker sites. This includes addresses, photos, emails, relatives, phone numbers, social media, property value and more.

If you look yourself up, you're probably going to see some stuff out there and go, what in the world? Why is that out there? And since privacy exposures and incidents affect individuals differently, their privacy advisors ensure that customers have the support they need when needed. So protect yourself and reclaim your privacy by going to joindeleteme.com/twit and using the code TWIT. That's joindeleteme.com/twit with the code TWIT for 20% off. Thank you to Delete Me for sponsoring this week's episode of Security Now. We are back to the show. Steve Gibson, let's close that loop.

0:53:16 - Steve Gibson

Let's do it. So Montana J wrote: Hey, a flaw in passkey thinking? I teach computer science at a college. Like many in the educational field, I log on to a variety of computers a day that are used by myself, fellow instructors and students. Using a passkey in this environment would allow others to easily gain access to my accounts. Not a good thing. So turning off passwords is not an option. Just something to think about. Jim. Okay, right. Well, it's a very good point, which I tend to forget, since none of my computers are shared. But in a machine-sharing environment, there are two strong options.

FIDO in a dongle is one way to obtain the benefits of passkey-like public key identity authentication while achieving portability.

Your passkeys are all loaded into this dongle and that's what the website uses. But also, reminiscent of the way I designed SQRL originally, a smartphone can completely replace a FIDO dongle to serve as a passkeys authentication client by using the QR code presented by a passkeys website, and in that model, passkeys probably provides just about the best possible user experience and security for shared computer use. So you go to a site and you log in giving them only your username. At that point, the site looks up your username, sees that you have registered a passkey with the site and moves you over to the passkey login. That will be a QR code. You take your Android or Apple phone, open the passkey app, let it see the QR code and you're logged in, so that computer and the web browser never have access to your passkeys. They remain in your phone. So it's absolutely possible to get all the benefit of passkeys in a shared usage model with arguably the best security around.

0:55:45 - Mikah Sargent

I have to say I'm kind of confused by Jim's suggestion. I don't understand. Jim is suggesting somehow that after he sits down, logs into stuff, is done, and logs out, another person could sit down and log in because of a passkey? How is that? I don't understand how that would even work. Is Jim suggesting that there's a...

0:56:09 - Steve Gibson

I believe it's because last week one of the things we talked about was passkeys being stored in a password manager in the browser.

0:56:22 - Mikah Sargent

But you would so if you forgot to log out of the. Oh, I see If it's in the browser and this person uses the browser, right, okay, gotcha, that makes sense, okay got it.

0:56:32 - Steve Gibson

Yeah, so you obviously don't want a shared machine to store anyone's passkeys. You want that to all be provided externally on the fly.

0:56:46 - Mikah Sargent

Yeah, I mean in theory. You also don't want an in-browser system storing passwords if you're in, I agree completely.

0:56:55 - Steve Gibson

Exactly. There should be no password manager, no would you like me to remember this password for you? I don't even know if there's a way to turn that off, but that should be, yeah, heck no, forced off, so that it can't even ask you. Gilding timings, he wrote: Hey, Steve, I just finished watching episode 965 on passkeys versus two-factor authentication. I was wondering, don't passkeys just change who is responsible for securing your authentication data? With passwords and two-factor authentication, the responsibility is with the website. With passkeys, the responsibility is with the tool storing the passkeys, for example, a password manager. If the password manager is compromised, an attacker has all they need to authenticate as you. So again, we're talking about storing passkeys in the password manager, which is something that we talked about last week, which is why our listeners are coming back with questions about this practice, deservedly so. So he says if the password manager is compromised, an attacker has all they need to authenticate as you. He continues: I would think that if the website doesn't allow disabling password authentication, then two-factor authentication still has some value if we're talking about password managers being compromised. And, of course, there he's talking about external storage of the two-factor authentication code, like in your phone, which is again something we've also talked about in the past. He said: You can at least store the two-factor authentication data separately from your password manager. I'm loving SpinRite. It's already come in handy multiple times. That's SpinRite 6.1. He said: Thanks so much for continuing the show. I look forward to it every week. Okay, so first, thank you. After three years of work on it, I certainly appreciate the SpinRite feedback and I'm delighted to hear that it's come in handy. So here's the way to think about authentication security.

All of the authentication technology in use today requires the use of secrets that must be kept, all of it. The primary difference among the various alternatives is where those secrets are kept and who is keeping them. In the username and password model, assuming the use of unique and very strong passwords, the secrets must be kept at both the client's end, so that they can provide the secret, and the server's end, so that it can verify the secret provided by the client. So we have two separate locations where secrets must be kept. By comparison, thanks to passkeys' entirely different public key technology, we've cut the storage of secrets in half. Now only the client side needs to be keeping secrets, since the server side is only able to verify the client's secrets without needing to retain any of them itself. So it's clear that by cutting the storage of secrets in half we already have a much more secure authentication solution, but the actual benefit is far greater than 50%. Where does history teach us the attacks happen? When the infamous bank robber Willie Sutton was asked why he robbed banks, his answer was obvious.

1:00:52 - Leo Laporte

In retrospect he said because that's where all the money is.

1:00:57 - Steve Gibson

For the same reason, websites are attacked much more than individual users, because that's where all the authentication secrets are stored. So when the use of passkeys cuts the storage of authentication secrets by half, the half that it's cutting is where nearly all of the theft of those secrets occurs. So the practical security gain is far more than just 50%. Now, our listener said: I would think that if the website doesn't allow disabling password authentication, then two-factor authentication still has some value. If we're talking about password managers being compromised, you could at least store the two-factor authentication data separately from your password manager. That's true, and there's no question that requiring two secrets to be used for a single authentication is better than one, and that storing those secrets separately is better still. But, as we're reminded by the needs of the previous listener, who works in a shared machine environment, just like two-factor authentication, passkeys can also be stored in an isolated smartphone and kept separate from the browser. Having our browsers or password manager extensions store our authentication data is the height of convenience, and we're not hearing about that, that is to say, browser extension compromise, actually ever having been a problem. That's very comforting, but a separate device just feels as though it's going to provide more authentication security, if only in theory. The argument could be made that storing passkeys in a smartphone still presents a single point of authentication failure. But it's difficult to imagine a more secure enclave than what Apple provides, backed up by per-use biometric verification before unlocking a passkey. That's the strongest protection I think you can get.
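To make the point about the server only verifying concrete, here is a toy sketch of a public-key challenge-response login. It is an illustration under loud assumptions, not how passkeys are actually implemented: real passkeys use WebAuthn with standardized signature algorithms, while this uses a textbook Schnorr-style signature over a deliberately small prime field so that it runs with nothing but the Python standard library. Every name in it (`keygen`, `sign`, `verify`, `server_db`, the user `jim`) is made up for the example.

```python
import hashlib
import secrets

# TOY parameters, insecure by design: a 127-bit Mersenne prime.
P = 2**127 - 1
N = P - 1          # exponents may be reduced mod P-1 (Fermat's little theorem)
G = 3              # base element; an arbitrary choice for this sketch


def _hash_to_int(r: int, message: bytes) -> int:
    """Hash the commitment r together with the message into an exponent."""
    digest = hashlib.sha256(r.to_bytes(16, "big") + message).digest()
    return int.from_bytes(digest, "big") % N


def keygen():
    """Client side: returns (private_key, public_key)."""
    x = secrets.randbelow(N - 1) + 1
    return x, pow(G, x, P)


def sign(private_key: int, challenge: bytes):
    """Client side: sign the server's challenge with the private key."""
    k = secrets.randbelow(N - 1) + 1          # fresh one-time nonce
    r = pow(G, k, P)
    e = _hash_to_int(r, challenge)
    s = (k + private_key * e) % N
    return e, s


def verify(public_key: int, challenge: bytes, signature) -> bool:
    """Server side: needs only the public key, never the private key."""
    e, s = signature
    # G^s * y^(-e) recovers G^k when the signature is genuine.
    r = (pow(G, s, P) * pow(public_key, N - e, P)) % P
    return _hash_to_int(r, challenge) == e


# Registration: the client keeps the private key, the server stores
# only the public key. A breach of server_db leaks nothing that can log in.
private_key, public_key = keygen()
server_db = {"jim": public_key}

# Login: the server sends a random challenge, the client signs it.
challenge = secrets.token_bytes(32)
signature = sign(private_key, challenge)
login_ok = verify(server_db["jim"], challenge, signature)

# Replaying the same signature against a fresh challenge does not work.
replay_ok = verify(server_db["jim"], secrets.token_bytes(32), signature)
```

The thing to notice is what sits in `server_db`: only public keys. That is the half of the secret storage that goes away, and it is the half that attackers normally go after.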

Mike Shepers says: Hi, Steve, I'm a long-time listener of Security Now and love the podcast. Thank you so much for all your contributions toward making this world a better place and freely giving your expertise to educate many people like myself. I do have a question for you related to passkeys, episode 965, that I'm hoping you could help me understand. There are many accounts that my wife and I share for things like banking and health benefits websites where we both need access to the same accounts. If they were to use only passkeys for authentication, is sharing possible? Thank you, Mike. In a word, yes. Whether passkeys are stored in a browser-side password manager or in your smartphone, the various solutions have all recognized this necessity and they provide some means for doing this. For example, in the case of Apple, under Settings, Passwords, it's possible to create a shared group of which you and your wife would be members. It's then possible for members of the group to select which of their passwords they wish to share in the group, and Apple has seamlessly extended this so that it works identically with passkeys. Apple's site says: Shared password groups are an easy and secure way to share passwords and passkeys with your family and trusted contacts. Very trusted. Anyone in the group can add passwords and passkeys to the group share. When a shared password changes, it changes on everyone's device. So it's a perfect solution and, yes, that appears to be universal. So passkey sharing has been provided.

Sennreith says, well, we got a posting, or I got a tweet from him, and there's been such an outsized interest shown in this topic by our listeners that I wanted to share his restatement and summary of the situation, even though it's a bit redundant, so that everyone could just kind of check their facts against the assertions that he's making. He said: Hi, Steve, just listened to SN965 and had a thought about passkeys security. Completely agree with your assessment of the security advantages of passkeys versus passwords and multi-factor authentication in general. But another practical difference occurs to me when using a password manager to store your passkeys. With passwords plus MFA, if your password manager's breached somehow, you can still rest easy knowing that only your passwords were compromised. Again, assuming multi-factor authentication is in a separate device, right? Because password managers are now offering to do your multi-factor authentication for you too.

He said: You can still rest easy knowing that only your passwords were compromised and that hackers could not actually gain access to any of the accounts in your vault that were also secured with a second factor. Of course, this is not true if you also use your password manager to store your MFA codes, which is why you've said in the past that you would not do that, as it puts all your eggs in one basket. Right. With passkeys stored in a password manager, this is no longer the case. If the password manager's breached, the hacker can gain access to every account that was secured with the passkeys in your vault. So while passkeys most definitely make you less vulnerable to breaches at each individual site, the trade-off is making you much more vulnerable to a breach of your password manager.

That is, if I'm understanding this correctly, he writes. Like the original listener from last week, Stefan Janssen, this leaves me feeling hesitant to use passkeys with a password manager. I think using passkeys with a hardware device like a YubiKey would be ideal, but then you have to deal with the issue of syncing multiple devices, he says, which of course wouldn't have been an issue with SQRL. True. Thanks for all you do. So, Apple and Android smartphones support cross-device passkey syncing and website logon via QR code. So passkeys remains the winner. No secrets are stored remotely by websites, so the impact of the most common website security breaches is hugely reduced.

If you cannot get rid of or disable a website's parallel use of passwords, then by all means protect the password with MFA, just so that the password by itself cannot be used, and perhaps remove the password from your password manager if its compromise is a concern. So that leaves a user transacting with passkeys for their logon and left with a choice of where they are stored: in a browser or browser extension, or on their smartphone. I would suggest that the choice is up to the user. The listeners of this podcast will probably make a different choice than everybody else, right? Because ease of use generally wins out here. The browser presents such a large attack surface that the quest for maximum security would suggest that storing passkeys in a separate smartphone would be most prudent. But that does create smartphone vendor ecosystem lock-in.

And I'll remind everyone that we do not have a history of successful major password manager extension attacks. Why, I don't know, but we just don't, although we're all worried about them. We're worried about the possibility because we know it obviously exists, but what we see is websites being attacked all the time, not, apparently with any success, the password manager extensions, which is somewhat amazing, but it's true. So the worry over giving our passkeys to our password managers to store is only theoretical, but it's still a big what-if, and I recognize that. At this point I doubt that there's a single right answer that applies to everyone. You know, when a user goes to a website that says how would you like to switch to passkeys, and they say okay, and they press a button and their browser says done, I know your passkey now, I'll handle login for you from now on, they're going to go, yay, you know, great, without a second thought. Not this podcast audience, but again, the majority. And I'll just finish by saying the lack of passkey portability is a huge annoyance, you know, but we're still in the very early days and we do know that the FIDO group is working on a portability spec, so there is still hope there.

I think one of the things that makes us feel a little queasy about passkeys is that, you know, we can't see them, we can't touch them, we can't hold them. You know, the password you can see, you can write it down, you can copy it somewhere else, you can copy and paste it. It's tangible. And, as I've said on the podcast, I print out all of my one-time password authenticator QR codes. Whenever a site gives me one and I'm setting it up, I make a paper copy, and I've got them all stapled together in a drawer, because if I want to set up another device and I'm unable to export and import those, I'm able to, you know, expose them to the camera again and recreate those. So the point is they're tangible, but at this point no one has ever seen a passkey. They're just somewhere in a cloud, or imaginary or something, and it makes us feel uncomfortable that, you know, they're just intangible the way they are. CR said: Hi, Steve.

On episode 965, a viewer commented on how some sites are blocking anonymous @duck.com email addresses or stripping out the plus symbol. I want to share my approach that gets around these issues, he said. First, I registered a web domain with WHOIS privacy protection to use just for throwaway accounts. I then added the domain to my personal Proton Mail account, which requires a plan upgrade, but I'm sure there are many other email hosting services out there that are cheap or possibly free. Finally, I enabled the catch-all address option. With this in place, I can now sign up on websites using any name at my domain, and those emails are delivered to the catch-all. In Proton Mail you can set up filters or real addresses if you want to bypass the catch-all, should you want some organization. Proton Mail also makes it really easy to block email senders by right-clicking the email item in your inbox and selecting the block action. So far this setup has been serving me well for the past year without any problems. Okay.

So I wanted to toss this idea out there, you know, into the ring, as an idea that might work for some of our listeners, and I agree that it solves the problem of creating per-site or just random throwaway email addresses. But the problem it does not solve, for those who care, is the tracking problem, since all of those throwaway addresses would be at the same personalized domain. The reason the @duck.com solution was so appealing is that everyone using @duck.com is indistinguishable from everyone else using @duck.com, making obtaining any useful tracking information from someone's use of @duck.com, or any other similar mass-anonymizing service, futile. And this, of course, is exactly why some websites are now refusing to accept such domains and why this may become, unfortunately, a growing trend for which there is no clear solution at this point, and I don't think there can be one. Really, it's going to be a problem.
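The linkability problem can be sketched in a few lines. This is a hypothetical illustration only: the sites, the addresses, the domain `jims-throwaways.example` and the `link_by_domain` helper are all invented, and a real tracker would combine far more signals than the email domain.

```python
from collections import defaultdict


def link_by_domain(signups):
    """Group (site, email) sign-ups by the domain part of the email address."""
    groups = defaultdict(list)
    for site, email in signups:
        domain = email.rsplit("@", 1)[1].lower()
        groups[domain].append(site)
    return dict(groups)


# Made-up sign-up records, as a data broker might see them pooled together.
signups = [
    ("shop.example", "shop@jims-throwaways.example"),
    ("news.example", "news@jims-throwaways.example"),
    ("forum.example", "x7k2q@duck.com"),
    ("video.example", "m9p4z@duck.com"),
]

linked = link_by_domain(signups)
# A personal catch-all domain has exactly one owner, so every site in
# linked["jims-throwaways.example"] can be tied to the same person.
# The duck.com group mixes everyone who uses that service, so the
# domain alone says nothing about whether those sign-ups are related.
```

So the per-site names at a personal domain stop one site's leaked address from being reused elsewhere, but the domain itself still works as a stable identifier.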

Gabe Van Engel said: Hey, Steve, I wanted to send you a quick note regarding the vulnerability report topic over the last two episodes. I don't know the specifics of the issue the listener reported, but I can provide some additional context as someone who runs an open bounty program on HackerOne. We require that all reports include a working proof of concept to be eligible for bounty. The reason is that many vulnerability scanners flag issues simply by checking version headers. However, most infrastructure these days does not run upstream packages distributed directly by the author and instead uses a version packaged by a third party providing backported security patches, for example, repositories from Red Hat Enterprise Linux, Ubuntu, Debian, FreeBSD, etc. It is totally possible the affected company is vulnerable to the trivial NGINX remote code execution. But if they think the report isn't worth acting on, it's also possible they're running a version which isn't actually vulnerable but still returns a vulnerable-looking version string. To be clear, I'm not trying to give the affected company a free pass, even if they aren't vulnerable.

The time frame over which the issue was handled and the lack of a clear explanation as to why they chose to take no action is inexcusable. All the best, keep up the good work, Gabe. And then he said: PS, looking forward to email so I can delete my Twitter account. I thought Gabe's input, as someone who's deep in the weeds of vulnerability disclosures at HackerOne, was very valuable. It's interesting that they don't entertain any vulnerability submission without a working proof of concept. Given Gabe's explanation, that makes sense. It's clearly because they just have too many false positive reports. People say, hey, why didn't I get a payment for my valuable discovery? And he's like, well, it didn't work. Yeah, you didn't prove that it actually worked. Exactly. And it's clear that a working proof of concept would move our listener's passive observation from a casual case of, hey, did you happen to notice that your version of NGINX is getting rather old, to, hey, you better get that fixed before someone else with fewer scruples happens to notice it too.

As we know, our listener was the former of those two. He only expressed his concern over the possibility that it might be an issue, and he, even in his conversation with me, recognized that it could be a honeypot, where they deliberately had this version header and were collecting attacks, though I think he was being very generous with that possibility. He understood that the only thing he was seeing was a server's version headers and that therefore there was only some potential for trouble, and had the company in question clearly stated that they were aware of the potential trouble but that they had taken steps to prevent its exploitation, the issue would have been settled. It was only their clear absence of focus upon the problem and never addressing his other questions that caused any escalation in the issue beyond an initial casual nudge. But Gabe also said looking forward to email, meaning GRC's soon-to-be-brought-online email system, so that he could delete his Twitter account.
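Gabe's point about version headers can be illustrated with a sketch of the naive check that many scanners perform. The `Server` header values and the `FIRST_FIXED` cutoff below are placeholders invented for the example, not real advisory data, and `looks_vulnerable` is a hypothetical helper, not any scanner's actual API.

```python
import re

# Hypothetical: the first upstream release that contains the fix.
FIRST_FIXED = (1, 20, 1)


def parse_server_header(value):
    """Extract (major, minor, patch) from an nginx Server header, else None."""
    match = re.match(r"nginx/(\d+)\.(\d+)\.(\d+)", value.strip(), re.IGNORECASE)
    if match is None:
        return None
    return tuple(int(part) for part in match.groups())


def looks_vulnerable(server_header):
    """Naive banner check: True or False from the version string alone,
    or None when the header reveals no usable version."""
    version = parse_server_header(server_header)
    if version is None:
        return None
    return version < FIRST_FIXED


# A distribution package can carry a backported fix while still
# reporting the old upstream version, so this "True" may be a false
# positive. Only a working proof of concept settles the question.
flagged = looks_vulnerable("nginx/1.14.0 (Ubuntu)")
```

That gap between what the banner says and what the binary actually contains is why a report based only on version headers is a nudge rather than a confirmed finding.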

I also wanted to take a moment to talk about Twitter. You know, many of this podcast's listeners take the time to express similar sentiments and, at the same time, I receive tweets from listeners arguing that I'm wrong to be leaving Twitter, as well as about the merits of Twitter and how much Elon has improved it since his purchase. Okay, so for the record, let me say again that I am entirely agnostic on the topic of Elon and Twitter. In other words, I don't care one way or the other. More than anything, I'm not a big social media user. What we normally think of as social media doesn't interest me at all. That said, GRC has been running quiet backwater NNTP-style text-only newsgroups for decades, since long before social media existed, and we have very useful web forums, but Twitter has never really been social media for me. I check in with Twitter once a week to catch up on listener feedback, to post the podcast's weekly summary and link to the show notes, and then recently to add our picture of the week.

What caught my attention and brought me out of my complacency with Twitter was Elon's statement that he was considering charging a subscription for everyone's participation, thus turning Twitter into a subscription-only service. That brought me up short and caused me to realize that what was currently a valuable and workable communications facility, for as little as I use it, might come to a sudden end, because it was clear that charging everyone to subscribe to use Twitter would end it as a means for most of our current Twitter users to send feedback. They're literally only using Twitter, as I am, to talk to me. We don't all have Twitter, but we do all have email, so it makes sense for me to be relying upon a stable and common denominator that will work for everyone. And since I proposed this plan to switch to email, many people, like Gabe, have indicated to me through Twitter that not needing to use Twitter would be a benefit for them too. So I just wanted to explain again, you know, because there are people who are like... Fine. You know, I don't have an issue.

1:22:05 - Mikah Sargent

You're not taking a stand You're trying to make it available to more people.

1:22:08 - Steve Gibson

That's it, that is exactly it, and Elon appears to be making it available to fewer, and maybe many fewer. So that would be a problem for me. So I'm switching before that happens. Mark Zipp wrote: @SGgrc, just catching the update about the guy who found the flaw in the big site and the unsatisfactory response from CISA. I think he should not take the money. I think he should tell Brian Krebs or another high-profile security reporter. They can often get responses. Okay, now this is another interesting possible avenue.

My first concern, however, is for our listener's safety, and by that I don't mean his physical safety, I mean his safety from the annoying tendency of bullying corporations to launch meritless lawsuits just because they easily can.

Our listener is on this company's radar now, and that company might not take kindly to someone like Brian Krebs using his influential position to exert greater pressure. This was why my recommendation was to disclose to CISA and CERT, being US government bodies. Disclosing to them seems much safer than disclosing to an influential journalist. Now recall Gabe from HackerOne from earlier. I subsequently shared my reply with him and he responded to that, and he said: This is one of the benefits of running a program via HackerOne or other. By having a hacker register and agree to the program terms, it both lets us require higher quality reports and also indemnify them against otherwise risky behavior, like actually trying to run remote code executions against a target system. So, yeah, that indemnification could turn out to be a big deal. And, of course, when working through a formal bug bounty program like HackerOne, it's not the hacker who interfaces with the target organization, it's HackerOne who is out in front, so they're not nearly as easy to ignore or to silence with an implied threat.

1:24:51 - Mikah Sargent

Are you hearing that, secret person who messaged before? Perhaps HackerOne would be a good place for you to go next.

1:25:01 - Steve Gibson

Yep. Another of our listeners said: "This website with this big vulnerability should be publicly named. You are doing a disservice to everyone who uses that site by keeping it hidden. To quote you in your own words, security by obscurity is not security. Let us know which site it is so that we can take action." Well, wouldn't it be nice if things were so simple.

In the first place, this is not my information to disclose, so it's not up to me. This was shared with me in confidence. The information is owned by the person who discovered it, and he has already shared it with government authorities, whose job, we could argue, it actually is to deal with such matters of importance to major national corporations. The failure to act is theirs, not his nor mine. The really interesting question all of this conjures is: whose responsibility is it? Where does the responsibility fall? Some of our listeners have suggested that bringing more pressure to bear on the company is the way to make them act, but what gives anybody the right to do that? Publicly naming the company, as this listener asks, would very likely focus malign intent upon them and, based upon what I previously shared about their use of an old version of NGINX, the cat, as they say, would be out of the bag. At this point, it's only the fact that the identity of the company is unknown that might be keeping it and its many millions of users safe. Security by obscurity might not provide much security, but there are situations where a bit of obscurity is all you've got. This is a very large and publicly traded company, so it's owned by its shareholders, and its board of directors, who have been appointed by those shareholders, are responsible to them for the company's proper, safe and profitable operation. So the most proper and ideal course of action at this point would likely be to contact the members of the board and privately inform them of the reasonable belief that the executives they have hired to run the company on behalf of its shareholders have been ignoring, and apparently intend to continue ignoring, a potentially significant and quite widespread vulnerability in their web-facing business properties.
While some minion who receives anonymous email can easily ignore incoming vulnerability reports, if the members of the company's board were to do so, any resulting damage to the company, its millions of customers and its reputation would be on them.

Stepping back from this a bit, I think that the lesson here is that at no point should it be necessary for untoward pressure to be used to force anyone to do anything, because doing the right thing should be in everyone's best interest. The real problem we have is that it's unclear whether the right person within the company has been made aware of the problem. At this point it's not clear that's happened, through no fault of our original listener, who may have stumbled upon a serious problem and has acted responsibly at every step. If the right person had been made aware of the problem, we would have to believe that it would be resolved, if indeed it was actually a problem. So my thought experiment about reaching out to the company's board of directors amounts to going over the heads of the company's executives, who do not appear to be getting the message, and that has the advantage of keeping the potential vulnerability secret while probably resulting in action being taken. I'm not suggesting that our listener should go to all that trouble, since that would be a great deal of thankless effort. The point I'm hoping to make is that there are probably still things that could be done short of a reckless public disclosure, which could result in serious and unneeded damage to users and company alike, and maybe even to the person who made that disclosure. I mean, likely to the person who made that disclosure.

Marshall tweeted: "Hi, Steve, a quick follow-up question to the last Security Now episode." Okay, here's one more. I thought we were done with them. "On MFA versus passkeys: Does the invention" (oh, this is actually a good one, I know why I put it in here) "Does the invention of passkeys invalidate the 'something you have, something you know and something you are' paradigm, or do passkeys provide a better instantiation of those three concepts? Great question, because the idea with multi-factor is that you'd add another factor for greater security, but with passkeys, do you still consider those factors? Thanks for everything you do." Okay, I think this is a terrific question.

The way to think of it is that the something you know is a secret that you're able to directly share. The use of something you have, like a one-time password generator, is actually you sharing the result of another secret you have, where the result is based upon the time of day. And the something you are is some biometric being used to unlock and provide a third secret. In all three instances, a local secret is being made available through some means. It's what's done with that secret where the difference between traditional authentication and public key authentication occurs. With traditional authentication, the resulting secret is simply compared against a previously stored copy of the same secret to see whether they match. But with public key authentication such as passkeys, the secret that the user obtains at their end is used to sign a unique challenge provided by the other end, and then that signature is verified by the challenge's sender to prove that the signer is in possession of the secret private key.
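
The contrast just described, comparing a stored copy of a secret versus verifying a signature over a fresh challenge, can be sketched in a few lines of Python. This is a toy illustration only and not how real passkeys (WebAuthn) are implemented: it assumes textbook RSA with no padding, built from two small Mersenne primes, purely so that it runs with nothing but the standard library. The function names are made up for this sketch.

```python
import hashlib
import hmac
import secrets

# --- Traditional authentication: compare against a stored copy of the secret ---
def check_shared_secret(stored: bytes, presented: bytes) -> bool:
    # The server must hold the same secret the user holds.
    return hmac.compare_digest(stored, presented)

# --- Public key authentication: sign a unique challenge ---
# TOY KEY (assumption for illustration): textbook RSA from two small
# Mersenne primes, no padding. Far too weak for real use.
P, Q = 2**127 - 1, 2**89 - 1
N = P * Q
E = 65537                          # public exponent, known to the server
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent, stays with the user

def _digest(challenge: bytes) -> int:
    # Hash the challenge and reduce it into the range of the toy modulus.
    return int.from_bytes(hashlib.sha256(challenge).digest(), "big") % N

def sign(challenge: bytes) -> int:
    # Performed at the user's end with the private key.
    return pow(_digest(challenge), D, N)

def verify(challenge: bytes, signature: int) -> bool:
    # Performed by the server, which stores only the public key (N, E).
    return pow(signature, E, N) == _digest(challenge)

challenge = secrets.token_bytes(32)  # fresh for every login attempt
signature = sign(challenge)
print(verify(challenge, signature))                # True
print(verify(secrets.token_bytes(32), signature))  # False: signature is tied to one challenge
```

The point of the sketch is what the server has to store: in the first case a copy of the secret itself, in the second only a public key that is useless to anyone who steals it.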

Therefore, the answer, as Marshall suggested, is that passkeys provide a better instantiation of those original three concepts. For example, Apple's passkey system requires that the user provide a biometric, face or thumbprint, to unlock the secret before it can be used. Once it's unlocked, the way it's used is entirely different, because it's using passkeys. But a browser extension that contains passkeys merely requires its user to provide something they know to log into the extension and thus unlock its store of passkey secrets. As we mentioned earlier, all of these traditional factors were once layered upon each other in an attempt to shore each other up, since storing and passing secrets back and forth had turned out to be so problematic. We don't have this with passkeys, because the presumption is that a public key system is fundamentally so much more secure that a single, very strong factor will provide all the security that's needed. And just for the record, yes, I think that passkeys should be stored outside the browser because, even though we're not seeing lots of browser attacks, they do seem more possible than an attack on an entirely separate facility which is designed for it.

Rob Mitchell said: "Interesting to learn the advantages of passkeys. It definitely makes sense in many ways. The one disadvantage my brain sticks on versus TOTP, time-based one-time passwords, is that I'd imagine someone who can get into your password manager" (okay, so he's talking about that) "hack into a cloud backup, or sign on to your computer, can now access your account with passkeys. Like if passkeys had been a thing when people were having their LastPass accounts accessed. But if your time-based token is only on your phone, someone who gets into your password manager still can't access a site, because they don't have the TOTP key stored on your phone. Maybe passkeys are still better, but I can't help but see that weakness." So again, I've been overly repetitive here. Rob's sentiment was expressed by many of our listeners, so I just wanted to say that I agree and, as I mentioned last week, needing to enter that ever-changing secret six-digit code from the authenticator on our phone really does make everything seem much more secure. Nothing that's entirely automatic can seem as secure. So storing passkeys in a smartphone is the choice that I think makes the most sense and, as I've mentioned, the phone can be used to authenticate through the QR code that a passkeys-enabled site presents to its users.
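
For reference, here is a minimal sketch of where that ever-changing six-digit code comes from, following the published TOTP algorithm (RFC 6238, HMAC-SHA1 variant). The function name and defaults are chosen for this example. The secret passed in is the "TOTP key" Rob mentions, the one that in his scenario lives only on the phone.

```python
import hashlib
import hmac
import struct
import time

def totp(secret: bytes, at_time=None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password per RFC 6238 (HMAC-SHA1 variant)."""
    if at_time is None:
        at_time = time.time()
    # The moving factor is the number of 30-second steps since the Unix epoch.
    counter = int(at_time) // step
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation (RFC 4226): the low nibble of the last byte picks an offset.
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10**digits).zfill(digits)

# Test secret from the RFC (ASCII "12345678901234567890") at T = 59 seconds.
print(totp(b"12345678901234567890", at_time=59))  # 287082
```

Note that the server has to hold the same secret in order to compute and compare the code, which is exactly the stored-shared-secret model that passkeys move away from.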

Christian Cherry said: "Hi, Steve. On SN965, you discussed the issue with Chrome extensions changing owner and how devs are being tempted to sell their extensions. There is a way to be safe when using extensions in Chrome or Firefox." Now, this is interesting. "Download the extension, expand it and inspect it. Once you are sure it's safe, you can install it on Chrome by enabling developer mode under chrome://extensions/ and selecting Load unpacked. The extension will now be locally installed, which means it will never update from the store or change. It's frozen in time. If it ain't broke, don't fix it. And if the extension does break in a future update due to Chrome changes, you can get the update and perform the same process again. While using these steps requires some expertise, it should be fine for most Security Now listeners."

Interesting. Anyway, thank you, Christian. Yes, I think that is a great tip, and I bet it will appeal to many of our listeners, who generally prefer taking automatic things into their own hands. So again, chrome://extensions, and then select Load unpacked, and you're able to basically unpack and permanently store your extensions, which stops Chrome from auto-updating them from the store. So if an extension goes bad, you get to keep using the good one. Very cool.
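
As one possible starting point for the "inspect it" step, here is a small Python sketch that summarizes what an unpacked extension asks for in its manifest.json. The function name is invented for this example. The fields read are standard manifest keys, but this is only a first look: host access lives under host_permissions in Manifest V3 and under permissions in Manifest V2, and a clean manifest says nothing about what the extension's scripts actually do.

```python
import json
from pathlib import Path

def summarize_manifest(extension_dir: str) -> dict:
    """Collect the permission-related fields from an unpacked extension's manifest.json."""
    manifest_path = Path(extension_dir, "manifest.json")
    manifest = json.loads(manifest_path.read_text(encoding="utf-8"))
    # Gather every URL pattern that any content script is injected into.
    content_matches = sorted({
        pattern
        for script in manifest.get("content_scripts", [])
        for pattern in script.get("matches", [])
    })
    return {
        "name": manifest.get("name"),
        "version": manifest.get("version"),
        "permissions": manifest.get("permissions", []),
        "host_permissions": manifest.get("host_permissions", []),
        "content_script_matches": content_matches,
    }
```

Calling `summarize_manifest()` with the path to the expanded extension folder returns a dictionary you can print and review before choosing Load unpacked. Broad patterns such as `<all_urls>` are the ones worth a closer look.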

And lastly, Bob Hutzel: "Hi Steve, before embracing Bitwarden's passkey support, it is important to note that it is still a work in progress. Mobile app support is still being developed. Also, passkeys are not yet included in exports. So even if someone maintains offline vault backups, a loss of access to or corruption of the cloud vault means passkeys are gone. Thank you for the great show, Bob Hutzel." So yes, in general, as I said, the FIDO folks are still working to come up with a universal passkeys import-export format which, my God, do we need that. Yeah, seriously, it doesn't feel right to have them stuck in anyone's walled garden. The eventual addition of passkey transportability should make a huge difference. Again, it'll allow us to see, to hold, to touch our passkeys. My precious passkeys.

I just think we need that, you know? Like, where is it?

1:38:51 - Mikah Sargent

That is honestly what's keeping me from using passkeys as anything other than a second factor of authentication. That's where I end up, because there are a few sites like GitHub that give you the option to either use it as just a straight-up login or use it as the second factor of authentication. I'm okay with doing that, knowing that I can only have it in one place, but I haven't completely removed my password and username login yet because I want that transportability.

1:39:19 - Steve Gibson

before I feel comfortable completely saying okay, I'll shut off my username and password, if I'm even given that option. Right. I think that, you know, I've talked about, like, waiting for Bitwarden to add the support to mobile, because then we get it everywhere. But looking at the responses from our users, and my own, I don't think I want passkeys in my password manager. I still need Bitwarden (remember, a sponsor of the TWiT network). I need it for all the sites where I still can only use passwords. So it's not going away. But I think that, you know, I mean, I'm a 100% Apple mobile person for phone and pad, so I don't mind having Apple holding all those, but I still want to be able to get my hands on them.

1:40:19 - Mikah Sargent

Yeah, I want to see it, I want to touch it, I want to print it out and frame it.

1:40:23 - Steve Gibson

No, but yeah, I'm with you. Yeah, I don't write another chalkboard to find you when you're doing a video podcast. Very long string. Okay, let's do our final break, and then we're gonna talk about Morris the Second, and why I think we are in deep trouble.

1:40:43 - Mikah Sargent

All right, let's take a break so I can tell you about Vanta, who is bringing you this episode of Security Now. Vanta is your single platform for continuously monitoring your controls, reporting on security posture and streamlining audit readiness. Managing the requirements for modern security programs is increasingly challenging and it's time-consuming. Well, that's where Vanta comes into play. See, Vanta gives you one place to centralize and scale your security program. You can quickly assess risk, streamline security reviews and automate compliance for SOC 2, ISO 27001, and more. You can leverage Vanta's market-leading trust management platform to unify risk management and secure the trust of your customers. Plus, use Vanta AI to save time when completing security questionnaires.

G2 loves Vanta year after year. Check out this review from a CEO: "Vanta guided us through a process that we had no experience with before. We didn't even have to think about the audit process. It became straightforward and we got SOC 2 Type 2 compliant in just a few weeks." Help your business scale and thrive with Vanta. To learn more, watch Vanta's on-demand demo at vanta.com/securitynow. That's V-A-N-T-A dot com slash security now. Thank you, Vanta, for sponsoring this week's episode of Security Now. Now let's hear about Morris II.

1:42:14 - Steve Gibson