Security Now 955 Transcript

Please be advised this transcript is AI-generated and may not be word for word. Time codes refer to the approximate times in the ad-supported version of the show.

Leo Laporte & Steve Gibson (00:00:00):

It is time for Security Now. Steve Gibson is here, and we have perhaps the most interesting story. What a way to kick off 2024, our first episode of the new year. Steve breaks down what looks to be a massive conspiracy around the security of the last five generations of iPhone. The details are coming up on Security Now. Podcasts you love

TWiT (00:00:29):

From people you trust.

Leo Laporte & Steve Gibson (00:00:31):

This

TWiT (00:00:32):

Is TWiT.

Leo Laporte & Steve Gibson (00:00:37):

This is Security Now with Steve Gibson, episode 955 for Tuesday, January 2nd, 2024: The Mystery of CVE-2023-38606. This episode of Security Now is brought to you by Bitwarden, the open source password manager trusted by millions. With Bitwarden, your digital life is secured with end-to-end encryption and is only accessible by you. Now, we know that AI has unlocked a whole new world of innovations and unexplored opportunities for individuals and businesses, but did you also know the information you enter into AI platforms can be used by hackers to attack and exploit end users? Balancing the potential of AI and the need for heightened security online is a daunting challenge. But the first step is to use strong and unique passwords for every online account. That's where Bitwarden comes in. Easily generate and manage complex passwords with a trusted credential management solution like Bitwarden. And by the way, it supports passkeys too.

(00:01:39):

For true passwordless authentication, you can access your passwords, your passkeys, and your sensitive information saved in Bitwarden across multiple devices and platforms, for free, forever, keeping you secure at work, at home, or on the go. At TWiT, we're fans of password managers. You know that. And the one I use, the one Steve uses, is the only one we recommend: Bitwarden. Get started with their free trial of a Teams or Enterprise plan, or get started for free across all devices as an individual user at bitwarden.com/twit. It's open source. That's bitwarden.com/twit. It's time for Security Now, the show where we cover your privacy, your security, your safety online, how computers work, and a whole lot more with this guy right here: Steve Gibson, the autodidact who knows all, tells all, and is back again for 2024. Happy New Year, Steve.

(00:02:33):

Happy New Year to you, Leo. And I was pleased to see that we've got continuing sponsors in 2024 that'll be familiar to our audience. Your show will never be hard to sell. People want to be on Security Now, that's for sure. But by the way, we're always looking for new advertisers. I don't want to discourage anybody. No. If you work for a company and you say, boy (and I hear this a lot), they really ought to advertise on Security Now, by all means contact us: advertising@twit.tv. I can definitively state, Leo, that Apple will not choose to be an advertiser on this podcast. Well, I can think of many reasons for that, but what do you mean? Well, we know they have a very thin skin, right? You haven't been allowed back after you made one comment once, or looked at your phone during a presentation, or some nonsense.

(00:03:29):

I don't remember. No, I'm on a blacklist for sure. Yeah, this podcast has a very dry title: The Mystery of CVE-2023-38606. Whatever could that mean? After everyone is updated on the state of my still-continuing work on SpinRite 6.1, and after I've shared a bit of feedback from our listeners, the entire balance of this first podcast of 2024 will be invested in the close and careful examination of the technical details surrounding something that has never before been found in Apple's custom proprietary silicon. As we will all see and understand by the time we're finished here today, it is something that can only be characterized as a deliberately designed, implemented, and protected backdoor that was intended to be, and was, let loose and present in the wild. After we all understand what Apple has done through five successive generations of their silicon, today's podcast ends, as it must, by posing a single one-word question: Why?

(00:04:56):

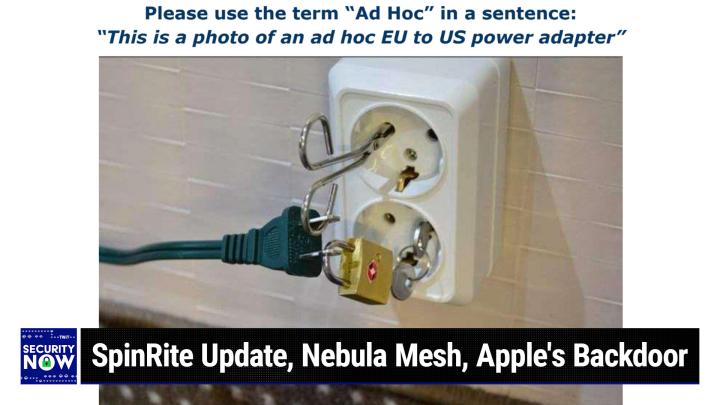

Well, I think you're probably right, but I can't wait. We talked a little bit about it on MacBreak Weekly, and I even said, I bet you Steve will have something to say about this. So I'm very, very interested in the deep details. We've got the details to break down, and everybody's going to understand it. We are left with some questions, not just one. How did this happen? Because there are things, unfortunately, we're never going to know, but there are definitely things we do know, and it really takes a little bit of the polish off the apple. Oh my, yeah. Oh my. Well, I will be very interested to hear more about this. All right, we do have, oh my goodness, a great picture of the week. I gave it a caption, and I actually really like the caption. Can you use the term ad hoc in a sentence?

(00:05:49):

I don't know what it means, but we will get to the picture of the week in just a moment. But first, a word from our sponsor. We love our sponsors. Kolide has been a sponsor with us for some time now. What do you call an endpoint security product that works perfectly but makes users miserable? Well, most of 'em. The old approach to endpoint security is to lock down employee devices and roll out changes through forced restarts. But you know what? It just doesn't work. It is miserable, because IT gets a mountain of support tickets, employees start using personal devices just to get their work done, and that's a nightmare. And executives? They opt out the first time it makes 'em late for a meeting, and now you've got a mess on your hands. You can't have a successful security implementation unless you work with end users, right?

(00:06:46):

They're part of your team. That's where Kolide comes in. Their user-first device trust solution notifies users as soon as it detects an issue on their device and teaches them how to solve it without needing help from IT. That way untrusted devices are blocked from authenticating, but users don't stay blocked. Kolide is designed for companies with Okta, and it works on macOS, it works on Windows, it works on Linux and mobile devices too. So it works everywhere you are. If you have Okta and you're looking for a device trust solution that respects your team but still gets the job done, visit kolide.com/securitynow. Watch a demo today, see how it works. That's K-O-L-I-D-E dot com slash securitynow. We thank them so much for their support of the show and Mr. Gibson in 2024. All right, picture of the week. This picture was of an insane person, something that a crazy person did.

(00:07:51):

Apparently they were traveling somewhere in Europe where the plugs are two round pins offset from the center, but they had a US standard, two-pronged straight prong power plug and they couldn't, I guess go downstairs to the hotel gift shop and buy themselves an adapter. So they managed to put one together. Oh Lord, this is insane. Oh my God. So I looked at this as, would this work? I go, yeah, I gave this, although you want to keep your distance, I gave this the caption. Please use the term ad hoc in a sentence and the sentence is, this is a photo of an ad hoc EU to US power adapter. It looks like he's got forceps and a key and a padlock. There's forceps stuck in one of the round European outlet holes and then there are on the US plug, the two prongs have holes through them as many of ours do.

(00:09:16):

So one of the plug's holes was threaded through the handle of the forceps, giving it an electrical attachment to one side of the European power. It's kind of clever because it's taking advantage of the hole in the US plug, the metal. Yes, that was clever. Well, then so does the padlock. The hasp of the padlock has been stuck through the hole in the other prong of the US plug, the padlock then closed, and of course it's got a key in it, linked by a key ring to another key. And that second key is plugged into the opposing hole in the adjacent EU power outlet, thus completing the circuit, if you use the term circuit very loosely. I'd be a little concerned about the impedance of the key ring. Yeah, yeah, you're right. That little key ring loop. If you're drawing too much power from this thing, it's going to begin to glow, it's going to get a little warm. Oh god. Anyway, it's hysterical, a great photo. Don't ever try this at home, kids. This is not recommended by any means. Yes. We're not suggesting this. We're just saying, if you are really... No, no, no. Nobody's that desperate. Even then, don't do it. No. Even then, no.

(00:10:47):

Okay, so as I mentioned two weeks ago, I was hoping to be able to announce that SpinRite 6.1 was finished, and really it is all but finished. If I were not still making some incremental progress that matters, I would've declared it finished. I've been wrestling with the behavior of some really troubled drives. Finally, someone FedExed me his drive. I paid the FedEx shipping; there was no way I was going to have someone who's been testing SpinRite pay shipping. He FedExed me his drive Friday, and it arrived three days ago on Saturday. He sent it because SpinRite was getting stuck at 99.9953% of the drive. Well, stuck is no good. It turns out that drive, a Western Digital, was returning a bogus status, which was tripping up SpinRite, which wasn't prepared for a ridiculous status byte coming back from the drive. So SpinRite's better now, and three other people's drives that were also getting stuck for the same reason no longer do.

(00:12:08):

So it's like the edgiest of edge cases, but I want this thing to be perfect, and the things I'm doing will carry forward into the future. So I would have to do it sooner or later; I might as well do it now. Anyway, I need to give it a little more time to settle. But what I know is that as a consequence of this intense scrutiny at the finish line, this SpinRite really does much more than any version of SpinRite ever has before. So anyway, I'm just days away. Okay, so I have a couple bits of closing-the-loop feedback. This is interesting. This is from Rotterdam in the Netherlands. The subject was: Which root certificates should you trust? Remy wrote, I made the tool requested in 951. He wrote, hi Steve, happy SpinRite customer here. Can't wait for 6.1. In episode 951 you discussed root certificates, and Leo asked for a prune-your-CA app.

(00:13:22):

You said, the good news is that this is generic enough that somebody will do it by the next podcast. He said, well, fun challenge, and I happen to know a bit of C++. So here's my attempt, and we have a link in the show notes. It is also this week's shortcut of the week. Anyway, he wrote, it parses the Chrome or Firefox browser history and fetches all certificates. It's a reporting-only tool that does not prune your root store, since, he says, I suspect that could break stuff. So if people want that, they can figure out how to do that themselves, and make a backup. He says, I've compiled it as a 32-bit Qt 5.15 C++ application specifically for you, so it runs on Windows 7. You've got a reputation, Steve. And he says Qt 6, the current version of the C++ framework, does not support Windows 7.

(00:14:30):

Cheers, Remy. So anyway, since I found this as I was pulling together today's podcast, I've not yet had the chance to examine it closely, but I quickly went over to Remy's page, and it looks like he has done a very nice piece of work. It's very cool. Yeah, and I don't know if you noticed, but there are counts of the number of instances of each of those certificates that he found, and he's sorting by the most hits on a given cert. Not surprisingly, Let's Encrypt is up there at the top. Anyway, he provides an installer for Windows users, with the full source code. For Linux users, he suggests installing Qt and compiling what he calls cert util from the provided source code. He's deliberately removed all Google ads and Google and other tracking from his site.

(00:15:29):

So he suggests that if you find his work useful, you might perhaps buy him a cup of coffee. The link to Remy's page, as I said, is this week's GRC shortcut, so it's grc.sc/955, and that'll just bounce you directly to Remy's page. And so Remy, thanks for this. This is read-only, and I understand his reluctance to put a surgical tool in there, but seeing these, how hard would it be to remove them? Is there a mechanism for that manually? Yes. Well, under Windows, down in the search box you put MMC and hit Enter. MMC, Enter, and there it is. And yes, you want to run it. So then you go to File, up on the File menu, and Add/Remove Snap-in. Now find Certificates and add that.

(00:16:39):

You have to move it into the box on the right. You can't just click it. You have to drag it. Yeah, Add/Remove Snap-in. So there's the Certificates snap-in, and you click on that little thing that... Oh, I see, Add, here. I get it. Oh yeah, I haven't used this in a while. All right. And My user account, there it is. Now certs are in there, and now I can see my certificates. Now you've created a certificate viewer, which allows you to see all the certs you've got on your machine, and the one you care about is Trusted Root, right? Yes. And if you click there, oh, there you go. There they all are. Now let's say I don't want Comodo. Can I right-click on it and delete? Yeah. Yep. So you totally can do that manually, one by one. You absolutely can.

(00:17:22):

Yep. And we'll see how this goes, because this also could be automated. All the certs are in the registry. This is basically a viewer into the registry that does a lot of interpretation for you, too. This is good. I mean, honestly, this is the tool that's kind of sufficient. It's just that you have to do it onesie-twosie. Well, and you've got to know which ones. So his cert util, that's useful: it shows you a report, given your entire current browser history, of which certificates your browser has ever touched and which it hasn't touched, because there's a whole listing of unused CAs. Exactly. Yeah. And those are the ones you might consider removing. Yeah, Actalis, who's ever heard of it? Milan, Actalis. It's Italian. I don't think we need them. Yeah, we don't need them. And of course what this means is that anything they ever sign, our browser absolutely trusts. That's the great danger of this whole system: it's carte blanche trust for every single certificate in our root store. And as you pointed out when we first started talking about this, there's only a handful of certs that do 90% of the traffic into your computer. Seven certs give you 99.96% of all non-expired browser certificates. I could probably remove the China Financial Certification Authority from my EV roots. I have a feeling I won't be needing that. Yeah. Now, your light switch might not work anymore, but still. So that's the question.
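The "seven certs cover 99.96%" observation is a cumulative tally over per-root hit counts, and the "unused CAs" list is simply the set of roots in the store that never show up in that tally. A minimal Python sketch of that arithmetic follows. It is not Remy's tool; the function names and the sample numbers are hypothetical and exist only to illustrate the calculation.

```python
from collections import Counter

def roots_needed(hits: Counter, target: float) -> int:
    """How many of the most-used roots are needed to cover `target` (0..1) of all hits."""
    total = sum(hits.values())
    if total == 0:
        return 0
    covered = 0
    # most_common() yields (root, count) pairs sorted by descending count.
    for n, (_, count) in enumerate(hits.most_common(), start=1):
        covered += count
        if covered / total >= target:
            return n
    return len(hits)

def unused_roots(store, hits: Counter):
    """Roots present in the trust store that no visited site ever chained to."""
    seen = {root for root, count in hits.items() if count > 0}
    return sorted(set(store) - seen)

# Hypothetical tallies, NOT real measurements.
sample_hits = Counter({"Root A": 700, "Root B": 200, "Root C": 90, "Root D": 9, "Root E": 1})
sample_store = ["Root A", "Root B", "Root C", "Root D", "Root E", "Root F", "Root G"]

if __name__ == "__main__":
    print(roots_needed(sample_hits, 0.95))
    print(unused_roots(sample_store, sample_hits))
```

With real data, the candidates for manual removal in the certificate viewer would be whatever `unused_roots` reports, which is why a backup comes first.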

(00:19:10):

It would tell you this is an untrusted site, but you simply go to it. You could bypass it. Absolutely. You could push past it and maybe that's what you want is something that says, whoa, wait a minute. Why am I going to the Chinese laundry? I don't really need that. I don't need that one. Yeah. Okay. Very handy. Thank you. Thank you. Good job, Remy. Yeah, Martin Berg was referring to last week's Schrodinger's bowls picture of the week, or rather two weeks ago. This was great. He said, show the picture of the week to my son, age 13. He immediately gave the obvious answer, break the top glass. Obviously that is cheaper to replace than the plates. And he said, very clever. Yes, he said, slightly annoyed. I didn't come up with it myself. Well, none of us did Martin, but happy that the future is looking bright.

(00:20:11):

That's great. Anyway, yeah, the upper glass was in front of an empty shelf, so you could break the glass, then lift the shelf and have full access to all the bowls that are precariously trying to fall but are being held in place by the pane of glass below that. So very, very clever. Sammy two two tweeted @SGgrc: Steve, I planned to use DNS Benchmark today. When I ran the download by VirusTotal, three security vendors flagged this file as malicious. While I trust you, I wanted to check in case there is a problem with the file. Thanks. So I just dropped the file on VirusTotal, and at the moment I did, four of the AV tools at VirusTotal disliked it. Now, I'll note, however, that they're all rather obscure AV tools, so it's not Google or Microsoft or anybody. And since GRC's DNS Benchmark is approaching 8.8 million downloads at a rate of 4,000 per day, I suppose it's a good thing that not everyone is as cautious as Sammy, or there would be a lot more concern than is being expressed.

(00:21:39):

But seriously, I poked around a bit in VirusTotal, because it allows you to see what things are setting off alarm bells, and I looked at the behavior that is concerning to VirusTotal, and I can't really say that I blame it. The program's behavior, if you didn't know any better, would peg it as some sort of bizarre DNS DoS zombie. It scans network adapters. It queries every DNS server you can imagine; there's 505 of them or something. It does look kind of suspicious, come to think of it. Yes. Yeah, good point. It's got all sorts of mysterious networking code. Unfortunately, this is the world we're living in today. It doesn't matter that that program, which has been around since what, 2007 or something, I think, when I wrote it, has never hurt a single living soul and never would. It's even triple SHA-256 signed with various of GRC's old and new certificates, all of them valid. Do the signatures matter? Apparently not. It still looks terrifying. So anyway, to answer your question, Sammy, the file's code has not changed in nearly five years, since, I remember, I tweaked it, I think to add 1.1.1.1 or 9.9.9.9 or something, some updated server I wanted to put in there, and that was five years ago. It's never actually harmed anyone, yet I can understand why VirusTotal would go, whoa. So yeah. Anyway, I'm pretty sure you can rely on it being safe.

(00:23:31):

Christian Nissen said, hello, Steve. I'm one of all those who's glad you decided to go past 3E7 hex, so we know that's 999 in decimal. He said, speaking of Tailscale, et cetera, I strongly recommend Nebula mesh: open source all the way and very easy to manage. I know it's been on your radar before. What's the reason for not advocating it in SN 953? Christian, no reason at all. I just forgot to mention it among all the others, and I agree 100% that Nebula mesh, which was created by the guys at Slack, is a very nice looking overlay network. We did talk about it before. Slack wrote it for their own use because nothing else that existed at the time (this was back now five years ago, in 2019) did what they needed out of the box, so they rolled their own. Nebula mesh's home on GitHub is under slackhq's directory tree, and I have the link in the show notes, but you can just search for Nebula mesh to locate it.

(00:24:52):

It's a fully mutually authenticating peer-to-peer overlay network that can scale from just a few to tens of thousands of endpoints. It's portable, written in Go. It has clients for Linux, macOS, Windows, iOS, Android, and even my favorite Unix, FreeBSD. So absolutely, thank you for the reminder. For those who are looking for a good 100% open solution, unlike Tailscale, where they are keeping a couple of the clients closed source, and the rendezvous server, which those nodes need to find each other, closed, Nebula is 100% open. So again, Christian, thanks for the reminder. Ethan Stone said, hi, Steve. I've noticed something recently that you might be interested in. First on my Windows 10 machine and then on my Windows 11 machine, the Edge browser has kept itself running in the background after I close it. Excuse me. The only way to actually shut it down is to go into Task Manager and manually stop the many processes that keep running after it's closed.

(00:26:13):

I noticed this because, as one of your most paranoid listeners (he says in parens, I know that's a high bar), I have my browsers set to delete all history and cookies when they shut down. Boy, he must like logging into sites. And I have CCleaner doing the same thing if they don't. CCleaner seems to have caught on to Microsoft's little scheme and now notes that Edge is refusing to shut down, asking me if I want to force it. Anyway, it seems like it's just another little scheme to keep all of my data and activity accessible to their grubby little data-selling schemes. And actually, I think it's probably just, who knows what they figure? Just get more RAM if you don't want Edge continually running. Actually, what it probably is, is that it allows it to launch onto your screen much more quickly when you start it again. It never really stopped, so it's still there.

(00:27:14):

It just turned off the UI. So you click on the icon and it's like, bang. It's like, whoa. Okay, so anyway, I understand you want things that you don't need, and that you stop, to actually stop. That's a good thing. He finally says, I love the show and I'm looking forward to new ones in 2024 and the advent of SpinRite 6.1, although I really, really, really need native USB support, so I hope SpinRite 7 isn't far behind. Also, I'm looking forward to not having to log into Twitter to send you messages, although please give priority to SpinRite 7. Okay, so I have been gratified by the feedback from Twitter users that they're looking forward to abandoning Twitter to send these short feedback tidbits. My plan is to first get SpinRite 6.1 finished. As I said, that's just moments away. But then I need an email system, which I don't currently have, in order to notify all version 6.0 owners through the last 20, what?

(00:28:26):

21 years? 20 years? It was in 2004 that I began offering 6.0. So I need an email system to let them know that it's available, and basically of what has turned out to be a massive free upgrade to 6.0. So that need will create GRC's email system, and we'll experiment then with my idea for preventing all incoming spam without using any sort of heuristic, false-positive-prone filter. And then I plan to immediately begin working on SpinRite 7. I don't want to wait, for several reasons. For one thing, I currently am SpinRite. I have the entire thing in my head right now. My dreaming at night is about SpinRite, and I know from prior experience that I will lose it if I don't use it. I barely remember how SQRL works now, so I want to immediately dump what's in my head into the next iteration of SpinRite code.

(00:29:39):

Another reason, which Ethan referred to, is USB. Unfortunately, USB is still SpinRite 6.1's Achilles heel. All of SpinRite has historically run through the BIOS, but I've become so spoiled now by 6.1's direct access to drive hardware that the BIOS now feels like a real compromise. Yet even under 6.1, all USB-connected devices are still being accessed through the BIOS. SpinRite's next platform, which I've talked about, RTOS-32, already has USB support, although not the level of support required for data recovery. That's what I'll be adding. Still, it made no sense for me to spend more time developing USB drivers from scratch for DOS when at least something I could start with is waiting for me right now under RTOS-32. So yes, I'm in a hurry to get going on SpinRite 7. It'll be happening immediately.

(00:30:51):

And then I had already replied to Ethan, and when I checked back into Twitter, he had replied to me, and he wrote again: Okay, but please spend less time on the email thing than you did on SQRL. And so yes, I am not rolling my own email system from scratch this time. I've already purchased a very nice PHP-based system that I've just been waiting to deploy. So I'll be making some customizations to part of it, but that's it. And then we'll have email. Okay, Leo, I'm going to catch my breath. You tell us why we're here, and then, oh boy. So the whole show's going to be this Apple thing? Yes. Holy cow. There is a lot to talk about. Some other things happened, but they pale in comparison to what we now absolutely know that Apple has done. Well, and they said in the information that it was ARM devices; other ARM devices might also be susceptible. All iDevices. All iDevices, but ARM makes stuff like my Pixel phone. No, not my Pixel. My Samsung phone is ARM-based, so, well, we'll find out. We'll find out. And as far as I know, those CVEs have been patched on Apple devices, but we'll find out about that as well. Well, we're going to find out why this was here for five years. Yeah.

(00:32:22):

Wow. I used your name in vain on MacBreak Weekly. I said, if there's one thing I've learned from listening to Steve for almost 20 years, it's that interpreters are a problem. I heard you. Yes, and you're absolutely correct. We know that. Well, let's first talk about our sponsor for this segment of Security Now. Data expires. If you have a customer list, a contact list, a supplier list, that kind of thing, your data's going bad at up to 25% a year, and that's why you need to know about Melissa, the data quality experts. For over 38 years, Melissa has helped companies harness the value of their customer data to drive insight, maintain data quality, and support global intelligence. Melissa's flexible to fit into any business model, and they've been doing address verification for, what is it now? Almost 30 years, 38 years, almost 40 years, for more than 240 different countries.

(00:33:24):

That means you're going to only get valid billing and shipping addresses in your system. You can focus your spending where it matters most. Melissa offers free trials, sample code, and flexible pricing. There's an ROI guarantee, and of course unlimited technical support for their customers all over the world. You can even try it for free. You can download the Melissa Lookups apps on Google Play or the Apple App Store. They're free. No signup is required. Very useful for onesie-twosie validation. Melissa has also achieved the highest level of security status. They are FedRAMP authorized, so that's huge. That gives you some security, knowing that your data is being treated with kid gloves, as it deserves. Melissa's solutions and services are GDPR and CCPA compliant. They meet SOC 2 and HIPAA HITRUST standards for information security management. They treat your data like gold.

(00:34:20):

You should be treating your data like gold. Make sure your customer contact data is up to date, because it is gold. Get started today with 1,000 records cleaned for free at melissa.com/twit. M-E-L-I-S-S-A, melissa.com/twit. We thank them so much for their support of Security Now. Okay, let's get to the CVEs here. I have in the past suggested that you buckle up, and this is no exception. I don't have a seatbelt on this bicycle seat attached to a stick. Well, that's right. You're not on the ball anymore. That's good. That's good. I will plant my feet firmly. Plant your feet on terra firma. Yeah, firmly apart. Okay. Our longtime listeners may recall that during the last year or so we mentioned an infamous and long-running campaign known as Operation Triangulation, which is an attack against the security of Apple's iOS-based products. This breed of malware gets its name from the fact that it employs canvas fingerprinting to obtain clues about the user's operating environment.

(00:35:34):

It uses WebGL, the Web Graphics Library, to draw a specific yellow triangle against a pink background. It then inspects the exact high-depth colors of specific pixels that were drawn, because it turns out that different rounding errors used in differing environments will cause the precise colors to vary ever so slightly. We can't see the difference, but code can. And since it uses a triangle, this long-running campaign has been named Operation Triangulation. In any event, the last time we talked about Operation Triangulation was in connection with Kaspersky. I heard you putting the accent on the second syllable, and I went and checked, Leo, and you're exactly right. I have always been saying Casper-sky, but it's Kas-PER-sky. I just put myself in the mind of Boris Badenov. He's a Russian. And I say, how would Boris Badenov pronounce it? Kaspersky. And that's how I say it.
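The rounding-error idea described above can be illustrated without a browser. The following Python sketch is a simulation only and is not the Operation Triangulation code: it blends a yellow foreground over a pink background at a few sample pixels, once with a hypothetical renderer that truncates and once with a hypothetical renderer that rounds half up, then hashes the resulting pixel bytes. The color constants, sample alphas, and function names are all assumptions made for illustration.

```python
import hashlib
import math

YELLOW = (255, 255, 0)    # foreground (the triangle); assumed RGB value
PINK = (255, 192, 203)    # background; assumed RGB value

def blend(fg, bg, alpha, rounder):
    """Alpha-blend one pixel; `rounder` models an environment's rounding behavior."""
    return tuple(rounder(alpha * f + (1 - alpha) * b) for f, b in zip(fg, bg))

def fingerprint(rounder, alphas=(0.25, 0.5, 0.75)):
    """Hash the exact colors of a few anti-aliased edge pixels."""
    pixels = [blend(YELLOW, PINK, a, rounder) for a in alphas]
    data = bytes(channel for px in pixels for channel in px)
    return hashlib.sha256(data).hexdigest()[:16]

def truncate(x):
    """Hypothetical environment A: drops the fractional part."""
    return math.floor(x)

def round_half_up(x):
    """Hypothetical environment B: rounds to nearest, halves upward."""
    return math.floor(x + 0.5)

if __name__ == "__main__":
    # The colors differ by at most one step per channel, invisible to the eye,
    # yet the two hashes do not match.
    print(blend(YELLOW, PINK, 0.5, truncate), blend(YELLOW, PINK, 0.5, round_half_up))
    print(fingerprint(truncate), fingerprint(round_half_up))
```

A real fingerprint would read back pixels from the actual rendered canvas rather than compute them, but the principle is the same: tiny numeric differences between environments become a stable identifier once hashed.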

(00:36:42):

Yeah, that's right. Well, you got it right. Okay. We talked about it with Kaspersky Labs because someone had used it, that is, this Operation Triangulation attack, to attack a number of the iPhones used by Kaspersky's security researchers. In hindsight, that was probably a dumb thing to do, since nothing is going to motivate security researchers more than finding something unknown crawling around on their own devices. Recall that it was when I found that my own PC was phoning home to some unknown server that I dug down and discovered the Aureate spyware, as far as I know coining that term in the process, since these were the very early days. And then I wrote OptOut, the world's first spyware removal tool. My point is, if you want your malware to remain secret and thus useful in the long term, you need to be careful about whose devices you infect.

(00:37:52):

Although we haven't talked about Kaspersky and the infection of their devices for some time, it turns out they never stopped working to get to the bottom of what was going on. And they finally have. What they found is somewhat astonishing. And even though it leaves us with some very troubling and annoying unanswered questions, which conspiracy theorists are already having a field day with, what has been learned needs to be shared and understood. Because, thanks to Kaspersky's dogged research, we now know everything about the what, even if how and why will probably forever remain unknown. And there's even the chance that parts of this will forever remain unknown to Apple themselves. We just don't know. Kaspersky's researchers affirmatively and without question found a deliberately concealed, never documented, deliberately locked, but unlockable with a secret hash, hardware backdoor, which was designed into all Apple devices starting with the A12 chip, then the A13, the A14, the A15, and the A16.

(00:39:25):

This now publicly known backdoor has been given the CVE which is today's podcast title, thus CVE-2023-38606. Though it's really not clear to me that it should be a CVE, since it's not a bug. It's a deliberately designed-in and protected feature. Regardless, if we call it a zero-day, then it's one of four zero-days which, when used together in a sophisticated attack chain, are being described as the most sophisticated attack ever discovered against Apple devices. And that's a characterization I would concur with. Okay, so let's back up a bit and look at what we know, thanks to Kaspersky's work. I can't wait to tell everyone about 38606, but to understand its place in the overall attack, we need to put it into context. The world at large first learned of all this just last Wednesday, on December 27th, when a team from Kaspersky presented and detailed their findings.

(00:40:47):

During the four-day 37th Chaos Communication Congress, held at the Congress Center in Hamburg, Germany, the title they gave their presentation was Operation Triangulation: What You Get When Attack iPhones of Researchers, which I think is a perfect title, because, yeah. On the same day last Wednesday, they also posted a non-presentation description of their research on their own blog, titled Operation Triangulation: The Last Hardware Mystery. I've edited their lengthy posting for the podcast and I'm not going to go through all of it, but I wanted to retain the spirit of their disclosure, since I think they got it all just right and they did not venture into conspiracies. So after some editing for clarity and length, here's what they explained. They said: Today, on December 27th, 2023, we delivered a presentation titled Operation Triangulation: What You Get When Attack iPhones of Researchers at the 37th Chaos Communication Congress, held at the Congress Center Hamburg.

(00:42:07):

The presentation summarized the results of our long-term (that is, multi-year) research into Operation Triangulation, conducted with our colleagues. This presentation was also the first time we had publicly disclosed the details of all exploits and vulnerabilities that were used in the attack. We discover and analyze new exploits and attacks such as these on a daily basis. We've discovered and reported more than 30 in-the-wild zero-days in Adobe, Apple, Google, and Microsoft products, but this is definitely the most sophisticated attack chain we have ever seen. Here's a quick rundown of this zero-click iMessage attack, which used four zero-days and was designed to work on iOS versions up to iOS 16.2. So attackers send a malicious iMessage attachment, which the application processes without showing any signs to the user. This attachment exploits the remote code execution vulnerability, and these are all CVE-2023, so I'm going to skip that.

(00:43:37):

So it's the remote code execution vulnerability 41990 in the undocumented, Apple-only ADJUST TrueType font instruction. This instruction had existed since the early nineties until a patch removed it. It uses return/jump-oriented programming and multiple stages written in the NSExpression/NSPredicate query language, patching the JavaScriptCore library environment to execute a privilege escalation exploit written in JavaScript. This JavaScript exploit is obfuscated to make it completely unreadable and to minimize its size. Still, it has around 11,000 lines of code, which are mainly dedicated to JavaScriptCore and kernel memory parsing and manipulation. It exploits the JavaScriptCore debugging feature DollarVM to gain the ability to manipulate JavaScriptCore's memory from the script and execute native API functions. It was designed to support both old and new iPhones and includes a Pointer Authentication Code bypass for exploitation of recent models. It uses the integer overflow vulnerability 32434.

(00:45:17):

In the OS's memory mapping syscalls to obtain read/write access to the entire physical memory of the device. It uses hardware memory-mapped I/O (MMIO) registers to bypass the Page Protection Layer, known as PPL by Apple. This was mitigated as the CVE that is this podcast's title, 38606. A lot more on that in a minute. After exploiting all the vulnerabilities, the JavaScript exploit can do whatever it wants to the device, including running spyware. Which is to say, at this point the iDevice, because this is in all the A12 through A16 chips in all of Apple's devices, is cracked wide open. They've achieved absolute dominance. So they say: After exploiting all the vulnerabilities, the JavaScript exploit can do whatever it wants to the device, including running spyware, but the attackers chose to (a) launch the IMAgent process and inject a payload that clears the exploitation artifacts from the device, and (b) run a Safari process in invisible mode and forward it to a webpage with the next stage.

(00:46:54):

The webpage has a script that verifies the victim and, if the checks pass, receives the next stage, the Safari exploit. The Safari exploit uses 32435 to execute shellcode. The shellcode executes another kernel exploit in the form of a Mach object file. The shellcode reuses the previously used vulnerabilities 32434 and 38606. It is also massive in terms of size and functionality, but completely different from the kernel exploit written in JavaScript. Certain parts related to exploitation of the above-mentioned vulnerabilities are all that the two share. Still, most of its code is also dedicated to parsing and manipulation of the kernel memory. It contains various post-exploitation utilities, which are mostly unused. And finally, the exploit obtains root privileges and proceeds to execute other stages, which load spyware. And those subsequent spyware stages have been the subject of previous postings by Kaspersky. Okay.

(00:48:22):

Okay, so now I'm going to summarize this and then we're going to zero in. The view we have from 10,000 feet is of an extremely potent and powerful attack chain which, unbeknownst to any targeted iPhone user, arranges to load in sequence a pair of extremely powerful and flexible attack kits. The first of the kits works to immediately remove all artifacts of its own presence, to erase any trace of what it is and how it got there. It also triggers the execution of the second extensive attack kit, which obtains root privileges on the device and then loads whatever subsequent spyware the attackers have selected. And all of that spyware has free rein of the device, because that subsequently loaded spyware obtains the permissions of these kits. So it uses CVE 41990, a remote code execution vulnerability in the undocumented, Apple-only ADJUST TrueType font instruction. That's where it begins. With that still somewhat limited but useful capability, it uses living-off-the-land return/jump-oriented programming, meaning that since it cannot really bring much of its own code along with it at this early stage, instead it jumps to the ends of existing Apple-supplied code subroutines, threading that together to patch the JavaScriptCore library just enough to obtain privilege escalation. So they call that living off the land, right?

(00:50:25):

You can't put much of your own code in there, so you have to use existing code, right? And it just jumps to just before other subroutines return. So there might be a little memory there somewhere that you can... yeah, there are a few little instructions just before a subroutine finishes and returns, and so all they can do is jump to a sequence of the ends of existing code and subroutines as little bits of worker code, threading that together to achieve what they want. Now to do that, you have to know exactly where that stuff lives, right? You have to have the actual memory address. That's why address randomization works, right? Yes. Yeah. Address space layout. Apple doesn't do ASLR though, I guess, with this code? Well, remember that they said the bulk of that code is analyzing the kernel memory. So it's looking for all of these things in order to figure out where everything is.

(00:51:29):

So it may be moving around, but they look for a chunk and they recognize it and they say, oh, it'll be right after this, wherever that is. Yep. Holy cow. This is so sophisticated. They find, like, an API jump table and then know that the fourth API in that table will be taking them to the subroutine, the end of which they need. So that's why ASLR doesn't always work, even if you use it, because, yeah, right. It definitely makes the task far more difficult. But here they're using it and they've managed. So I mean, it really raises the bar, but it could still be worked around. So after that, they have obtained privilege escalation by using the library's, the JavaScriptCore library's, debugging features. Then they're able to execute native Apple API calls with privilege. Oh my God. It then uses another vulnerability, 32434, which is an integer overflow vulnerability in the OS's memory mapping system API, to obtain read/write access to the entire physical memory of the device.
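As a rough illustration of the general class of bug mentioned here, the following sketch shows how an integer overflow can defeat a bounds check. It is not the actual flaw behind 32434, whose details are not given in the discussion; the function names and the 32-bit width are assumptions chosen only for the demonstration.

```python
MASK32 = 0xFFFFFFFF  # assumption: simulate arithmetic in a 32-bit unsigned register


def naive_range_ok(offset, length, region_size):
    """Buggy check: offset + length is computed in 32 bits and can wrap around."""
    end = (offset + length) & MASK32
    return end <= region_size


def safe_range_ok(offset, length, region_size):
    """Overflow-safe check: never forms the sum offset + length."""
    return offset <= region_size and length <= region_size - offset


REGION = 0x1000            # hypothetical size of the region a caller may map
BAD_OFFSET = 0xFFFFF000    # a huge offset chosen so the sum wraps
BAD_LENGTH = 0x2000

naive_result = naive_range_ok(BAD_OFFSET, BAD_LENGTH, REGION)
safe_result = safe_range_ok(BAD_OFFSET, BAD_LENGTH, REGION)
print("naive check accepts request:", naive_result)
print("safe check accepts request: ", safe_result)
```

The naive check accepts the request because the sum wraps past 32 bits to a small value, while the safe version rejects it by comparing against the remaining space instead of adding.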

(00:52:47):

Oh my goodness. Yeah. That's different than the normal access, because normally you are constrained. A program is constrained to what you have access to. So they use an integer overflow, another zero-day, to get read/write access to the entire physical memory. And read/write access to physical memory is required for the exploitation of the title of this podcast, CVE 38606, which is used to disable the entire system's write protection. Normally you can't change anything, you can't write anywhere, and so they figured out how to turn off write protection. That's amazing. So far, I mean, this is what the most sophisticated hacks often do, is chaining vulnerabilities, and you slowly escalate your capabilities till you get what you want. Yep. Wow. Okay. So far we've used three vulnerabilities: 41990, 32434, and 38606. The big deal is that by arranging to get 38606 to work, which requires read/write access to physical memory because that's how it's controlled.

(00:54:17):

This exploit arranges to completely disable Apple's Page Protection Layer, which Apple calls PPL. It is what provides a great deal of the modern lockdown of iOS devices. Here's how Apple themselves describe PPL in their security documents. They said, under "Page Protection Layer": PPL in iOS, iPadOS, and watchOS is designed to prevent user space code from being modified after code signature verification is complete. Building on Kernel Integrity Protection and Fast Permission Restrictions, PPL manages the page table permission overrides to make sure only the PPL can alter protected pages containing user code and page tables. The system provides a massive, this is Apple, a massive reduction in attack surface by supporting system-wide code integrity enforcement, even in the face of a compromised kernel. This protection is not offered in macOS because PPL is only applicable on systems where all executed code must be signed. So what's interesting is this comes after system integrity is complete.

(00:55:54):

So you've verified, you go, okay, we're going to make sure everything's good, everything's good. Lock it down, lock it down. And you've gotten in after that. Yeah. The key sentence here was: the system, PPL, provides a massive reduction in attack surface by supporting system-wide code integrity enforcement, even in the face of a compromised kernel. So that means that defeating the PPL protections results in a massive increase in attack surface. Sure. Because it's assumed you're good, right? Once those first three chained and cleverly deployed vulnerabilities have been leveraged, as Kaspersky puts it, the JavaScript exploit can do whatever it wants on the device, including running spyware. In other words, the targeted iDevice has been torn wide open. Now, the next thing Kaspersky does is to take a closer look at the subject of their posting and of this podcast. But before we look at that, I need to explain a bit about the concept of memory-mapped I/O.

(00:57:16):

From the beginning, computers used separate input and output instructions to read and write to and from their peripheral devices, and read and write instructions to read and write to and from their main memory. This idea of having separate instructions for communicating with peripheral devices versus loading and storing to and from memory seemed so intuitive that it probably outlasted its usefulness. What this really meant was that I/O and memory occupied separate address spaces. Processors would place the address their code was interested in on the system's address bus. Then, if the I/O read signal was strobed, that address would be taken as the address of some peripheral device's register. But if the memory read signal was strobed, then the same address bus would be used to address and read from main memory. To this day, largely for the sake of backward compatibility, Intel processors have separate input and output instructions that operate like this, but they are rarely used any longer.

(00:58:42):

As with a lot of stuff, it was for efficiency, right? So peripheral devices could just sit there, and then every once in a while, instead of you... was it like a spooler to the printer? Instead of having to wait for the printer to hack it and all that, you just stick it there and the printer gets it when it wants it, basically. Well, yes. And for example, behind me, we have my PDP-8. I'm sure they used it in boxes. So when you only had 12 bits of address space, which is 4K, you don't want to give any of that up to peripheral I/O. You need every byte to be actual memory. So the idea was that the peripheral devices occupied their own memory space, or, I'm sorry, not memory space, their own I/O space. I/O space was separate. It was completely disjoint from memory space, and you accessed the I/O space with I/O instructions and memory space with standard read and write instructions.

(00:59:50):

Okay, so to be practical, one of the requirements is that the system, if you want to... okay, okay, I jumped ahead. What happened was that someone along the way realized that if a peripheral device's hardware registers were directly addressable, just like regular memory, meaning in the system's main memory address space, right alongside the rest of actual memory, then the processor's design could be simplified by eliminating separate input and output instructions. And all of the already existing instructions for reading and writing to and from memory could perform double duty as I/O instructions. It's all in the same spot now. It's all in the room. But to be practical, one of the requirements is that the system needs to have plenty of spare memory address bits. But even a 32-bit system, which can uniquely address 4.3 billion bytes of RAM, can spare a little bit of space for its I/O. Probably not a lot of space.

(01:01:09):

Right? And that's exactly what transpired. So for example, when today's SpinRite wishes to check on the status of one of the system's hard drives, it reads that drive's status from a memory address near the top of the system's 32-bit address space. Even though it's reading a memory address, the data that's read is actually coming from the drive's hardware. This is known as memory-mapped I/O because the system's I/O is mapped into the processor's memory address space and discrete input/output instructions are no longer used. And for example, in the case of ARM, they don't even exist. ARM has always been just direct memory-mapped I/O. Interesting. So just by polling that address, you trigger a transfer from the I/O device. You're not really reading RAM, you're reading the I/O device. Exactly. And if you've got 64 bits of address, plenty of space, you don't even have anything in most of that space, right?
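To make the memory-mapped I/O idea concrete, here is a minimal simulation, assuming a toy bus in which ordinary loads and stores are routed either to RAM or to a device register depending only on the address. The class names, the register address 0xFFFF0000, and the 0x80 "busy" value are all hypothetical and stand in for no real hardware.

```python
class StatusDevice:
    """Hypothetical peripheral exposing a single read-only status register."""

    def __init__(self):
        self.busy = False

    def read(self, offset):
        # Return a made-up status byte: 0x80 when busy, 0x00 when idle.
        return 0x80 if self.busy else 0x00

    def write(self, offset, value):
        pass  # the register is read-only, so writes are ignored


class Bus:
    """Toy address bus: one load/store path serves both RAM and devices."""

    def __init__(self, ram_size):
        self.ram = bytearray(ram_size)
        self.devices = []  # list of (base, size, device) mappings

    def map_device(self, base, size, device):
        self.devices.append((base, size, device))

    def _find(self, addr):
        for base, size, dev in self.devices:
            if base <= addr < base + size:
                return dev, addr - base
        return None, None

    def load(self, addr):
        dev, off = self._find(addr)
        return dev.read(off) if dev is not None else self.ram[addr]

    def store(self, addr, value):
        dev, off = self._find(addr)
        if dev is not None:
            dev.write(off, value)
        else:
            self.ram[addr] = value & 0xFF


DRIVE_STATUS = 0xFFFF0000  # hypothetical register address near the top of 32-bit space

bus = Bus(ram_size=1024)
drive = StatusDevice()
bus.map_device(DRIVE_STATUS, 4, drive)

bus.store(0x10, 0x42)        # an ordinary store lands in RAM
drive.busy = True
ram_value = bus.load(0x10)   # an ordinary load reads RAM back
status = bus.load(DRIVE_STATUS)  # the same kind of load reads the device instead
print(hex(ram_value), hex(status))
```

The point of the sketch is that the code performing the load cannot tell from the instruction itself whether RAM or hardware answered; only the address decides.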

(01:02:23):

So today's massive systems, and by massive I mean an iPhone, because it has grown into a massive and complex system, they use 64-bit chips with ridiculously large memory spaces. So all of the great many various functions of the system, fingerprint reader, camera, screen, crypto stuff, enclave, all of that stuff, are reflected in a huge and sparsely populated memory map. Okay? Now, in the past we've talked about the limited size of the 32-bit IPv4 address space and how it's no longer possible to hide in IPv4 because it's just not big enough. The entire IPv4 address space is routinely being scanned. This is not true for IPv6 with its massive 128-bit address space. Unlike IPv4, it's not possible to exhaustively scan IPv6 space. There's just too much of it. So here's my point. When there is a ton of unused space, it's possible to leave undocumented some of the functions of memory-mapped peripherals, and there's really no way for code to know whether anything might be listening at a given address or not.
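A quick back-of-the-envelope calculation shows why exhaustive scanning works for a 32-bit space and not for a 128-bit one. The probe rate of one million addresses per second is an assumption picked only to make the comparison concrete.

```python
RATE = 1_000_000                   # assumption: one million probes per second
SECONDS_PER_YEAR = 365 * 24 * 3600


def scan_seconds(bits, rate=RATE):
    """Time in seconds to touch every address in a space of the given width."""
    return (2 ** bits) / rate


ipv4_hours = scan_seconds(32) / 3600
ipv6_years = scan_seconds(128) / SECONDS_PER_YEAR

print(f"32-bit space:  about {ipv4_hours:.1f} hours")
print(f"128-bit space: about {ipv6_years:.2e} years")
```

At that assumed rate the 32-bit space is covered in a little over an hour, while the 128-bit space would take on the order of 10^25 years, which is the same reason a sparsely populated 64-bit memory map can hide registers from blind probing.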

(01:04:08):

And not only that, there may be too many addresses to reasonably check. There could be millions, billions, trillions, gazillions. Yeah. Yeah. I think it is interesting. So this reminds me of the old PEEK and POKE commands, right? On older machines like Commodores and Ataris, you'd peek an address or you'd poke an address, and sometimes that would be an I/O. If you wanted to write to the hard drive, you'd poke it to an address and it wouldn't be a memory address. It'd poke it to the hard drive. So that's still built into modern processors. In fact, it sounds like more so, because there are many, many more devices attached, or things. Yes. And it's so convenient to just be able to use the existing architecture of reading and writing to and from actual memory to do the same with your peripherals. It's a clever hack. And you know what that means?

(01:05:09):

What Kaspersky discovered was that Apple's hardware chips from A12 through A16 all incorporated exactly this sort of hardware backdoor. And get this: this deliberately designed-in backdoor even incorporates a secret hash. In order to use it, the software must run a custom, not very secure but still secret (I mean, it's not crypto quality, but it doesn't need to be) hash function to essentially sign the request that it's submitting to this hardware backdoor. Here's some of what Kaspersky's guys wrote about their discovery. And note that even they did not discover it, because it's explicitly not discoverable. They discovered its use by malware, which they were reverse engineering. The question that has the conspiracy folks all wound up is: how did non-Apple bad guys discover it? Yeah. Where did they get the information of where to peek and poke? And we're going to be spending some more time on that. Because presumably they couldn't brute-force it.

(01:06:38):

They couldn't go through every address and see what happened. It's not brute-forceable, as we'll see. In the section of their paper titled "The mystery of the CVE-2023-38606 vulnerability," Kaspersky wrote: What we want to discuss is related to the vulnerability that has been mitigated as CVE-2023-38606. Recent iPhone models have additional hardware-based security protection for sensitive regions of the kernel memory. This is the PPL they're talking about. This protection prevents attackers from obtaining full control over the device if they can read and write, that is to say, even if they can read and write kernel memory, as achieved in this attack by exploiting CVE 32434. So the point being, even if you have read and write to kernel memory, you still can't do anything with it. And that's what Apple said PPL protects from. Right? This thing is so locked down that you still can't get into user space.

(01:07:54):

They said, we discovered that to bypass this hardware-based security protection, the attackers used another hardware feature of Apple designed systems on a chip. If we try to describe this feature and how the attackers took advantage of it, it all comes down to this. They are able to write data to a certain physical address while bypassing the hardware based memory protection by writing the data destination address and data hash to unknown hardware registers of the chip unused by any firmware. Our guess is that this unknown hardware feature was most likely intended to be used for debugging or testing purposes by Apple engineers or the factory, or that it was included by mistake. Because this feature is not used by the firmware, we have no idea how attackers would know how to use it. We're publishing the technical details so that other iOS security researchers can confirm our findings and come up with possible explanations of how the attackers learned about this hardware feature.

(01:09:19):

Various peripheral devices available in the system on chip may provide special hardware registers that can be used by the CPU to operate these devices. For this to work, these hardware registers are mapped to the memory accessible by the CPU and are known as memory-mapped I/O (MMIO). Address ranges for MMIOs of peripheral devices in Apple products (iPhones, Macs, and others) are stored in a special file format called the device tree. Device tree files can be extracted from the firmware, and their contents can be viewed with the help of the dt (which stands for device tree) utility. While analyzing the exploit used in the Operation Triangulation attack, wrote the Kaspersky researcher, I discovered that most of the MMIOs used by the attackers to bypass the hardware-based kernel memory protection do not belong to any MMIO ranges defined in the device tree. In other words, they're deliberately secret.

(01:10:41):

The exploit targets Apple A12 through A16 Bionic systems on a chip, targeting unknown MMIO blocks of registers, which we have documented. He said: This prompted me to try something. I checked different device tree files for different devices and different firmware files. No luck. I checked publicly available source code. No luck. I checked the kernel images, kernel extensions, iBoot, and firmware in search of a direct reference to these addresses. Nothing. How could it be that the exploit used MMIOs that were not used by the firmware? How did the attackers find out about them? What peripheral devices do these MMIO addresses belong to? It occurred to me that I should check what other known MMIOs were located in the area close to these unknown MMIO blocks. That approach was successful, and I discovered that the memory ranges used by the exploit surrounded the system's GPU coprocessor.

(01:11:58):

This suggested that all these MMIO registers most likely belonged to the GPU coprocessor. After that, I looked closer at the exploit and found one more thing that confirmed my theory. The first thing the exploit does during initialization is write to some other MMIO register, which is located at a different address for each version of Apple's system on chip. In the show notes, I have a picture of the pseudocode that Kaspersky provided. It shows an "if CPU ID equals" and then a big 32-bit blob. And the comment is "CPU family ARM Everest/Sawtooth A16." And if it's equal, it loads two variables, base and command, with two specific values. Then "else if CPU ID" and another 32-bit blob, which refers to "CPU family ARM Avalanche/Blizzard A15." And if that CPU ID equality is met, it loads two different base and command values into those variables, and so forth.

(01:13:30):

For A14, A13, A12. In other words, all five CPU families from Bionic A12, 13, 14, 15, 16 require their own something. And what we learn is that it's their own special unlock in order to enable the rest of this. It is different for each processor family. Wow. It seems like Apple did a good job with this. That's what's interesting, right? Oh, Leo, wait, wait. They did a great job with this. There's more. Kaspersky's posting shows some pseudocode for what they found was going on. Each of the five different Apple Bionic chips, A12 through A16, is identified by a unique CPU ID, which is readily available to code. So the code, the malware code, looks up the CPU ID, then chooses a custom per-processor address and command, which is different for each chip generation, which it then uses to unlock this undocumented feature of Apple's recent chips.

(01:14:45):

Here's what they said. With the help of the device tree and Siguza's utility, pmgr (power manager), he said, I was able to discover that all these addresses corresponded to the GFX register in the power manager MMIO range. Finally, I obtained a third confirmation when I decided to try to access the registers located in these unknown regions. Almost instantly, the GPU coprocessor panicked with a message of "GFX error exception class" and then some error details. This way I was able to confirm that all these unknown MMIO registers used for the exploitation belonged to the GPU coprocessor. This motivated me to take a deeper look at its firmware, which is written in ARM code and unencrypted, but I could not find anything related to these registers in there. I decided to take a closer look at how the exploit operated these unknown MMIO registers. One register, located at hex (and I put this in the show notes just to give everyone an idea of just how obscure this is) 206040000, stands out from... I know, I think it's more than nine.

(01:16:21):

Nine nines. I think that's a pretty large number. Yes, it is. He says it stands out from all the others because it is located in a separate MMIO block from all the other registers. It is touched only during the initialization and finalization stages of the exploit. It is the first register to be set during initialization and the last one during finalization. From my experience, it was clear, he said, that the register either enabled or disabled the hardware feature used by the exploit or controlled interrupts. I started to follow the interrupt route, and fairly soon I was able to recognize this unknown register and also discovered what exactly was mapped to the register range containing it. Okay, so through additional reverse engineering of the exploit and watching it function on their devices, the Kaspersky guys were able to work out exactly what was going on. The exploit provides the address for a direct memory access (a DMA) write, the data to be written, and a custom hash which signs the block of data to be written.

(01:17:51):

The hash signature serves to prove that whoever is doing this has access to super secret knowledge that only Apple would possess. This authenticates the validity of the request for the data to be written. And speaking of the hash signature, this is what Kaspersky wrote. They said: Now that all the work with all the MMIO registers has been covered, let us take a look at one last thing, how hashes are calculated. And Leo, let us take a look at our last sponsor for the podcast. This is amazing, and we're going to continue. Wow. Yes, it is really interesting. Truly astonishing. Yeah, I'm super curious what you think about it, but we'll get to that in just a little bit. But first, a word from our sponsor, the great folks at Drata. We've talked about them before on the show. With Drata, companies can complete audits, monitor controls, and expand security assurance efforts to scale. With a suite of more than 75 integrations, Drata streamlines your compliance frameworks, providing 24-hour continuous control monitoring so you can focus on scaling securely.

(01:19:18):

Drata easily integrates through applications such as AWS and Azure and GitHub and Okta and Cloudflare, and that's just scratching the surface. Drata's automated dynamic policy templates support companies new to compliance. By using integrated security awareness training programs and automated reminders, they'll ensure smooth employee onboarding. As the only player in the industry to build on a private database architecture, Drata ensures your data can never be accessed by anyone outside your organization. And all Drata customers receive a team of compliance experts, including a designated customer success manager. They'll even do pre-audit calls, so you can prepare for when the audits begin. And auditors and users alike love Drata's Audit Hub. That's the solution to faster, more efficient audits. You'll save hours of back-and-forth communication. You'll never misplace crucial evidence. You can share documentation instantly, all in one tool. Say goodbye to manual evidence collection.

(01:20:19):

Say hello to automated compliance when you visit drata.com/twit. drata.com/twit. Bringing automation to compliance at Drata speed. drata.com/twit. I thank you so much for supporting this very important program. Breaking down. We should mention though, Apple has patched all four CVEs, right? Hardware's still there? Oh, so is some of this not patchable? Is that what you're saying? No, no, no. It has been closed, and we're absolutely going to get to there. We'll get to that. Okay. I just want to say that upfront so people don't go, oh, no, and put their iPhone in a steel-lined box or something. Just definitely update it. Yeah, keep it updated. Okay. So Kaspersky says: Now that all the work with all the MMIO registers has been covered, let us take a look at one last thing, how hashes are calculated. The algorithm is shown, and as you can see, it is a custom algorithm.

(01:21:30):

And Leo, it's in the show notes on the next page. On page 11 of my show notes. It's a lookup table, right? They said... well, okay, I'll get there. The hash is calculated by using a predefined S-box table. He says: I tried to search for it in a large collection of binaries, but found nothing. You may notice that this hash does not look very secure, as it occupies just 20 bits, but it does its job as long as no one knows how to calculate and use it. It is best summarized with the term security by obscurity. You'd have to know the values in this S-box, every single value, because they're random. They're not consecutive or anything like that. Correct? Yeah. He said: How could attackers discover and exploit this hardware feature if it is never used and there are no instructions anywhere in the firmware on how to use it?

(01:22:29):

Okay, so to break from the dialogue for a second: in crypto parlance, an S-box is simply a lookup table. In this case, what, 256 values? Exactly. It looks like it's typically an eight-bit lookup table of 256 pseudorandom but unchanging values. The S of S-box stands for substitution. So S-boxes are widely used in crypto and in hashes because they provide a very fast means for mapping one byte into another in a completely arbitrary way. The show notes show, as I said, this S-box and the very simple lookup-and-XOR algorithm below that's used by the exploit. It doesn't have to be a lot in order to take an input block and scramble it into, basically, a 20-bit secret hash. So the $64,000 question here is: how could anyone outside of Apple possibly obtain exactly this 256-entry lookup table, which is required to create the hash that signs the request, which is being made to this secret, undocumented, never-seen hardware, which has the privileged access that allows anything written to bypass Apple's own PPL protection?
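For readers who want a feel for how a lookup-and-XOR hash over a 256-entry table can work, here is a toy sketch. It is emphatically not the algorithm or the table shown in the show notes: the table below is filled from a seeded pseudorandom generator, and the rotate-then-XOR mixing step, the names `TABLE`, `toy_hash20`, and `request_accepted`, and the seed are all assumptions made for illustration.

```python
import random

MASK20 = (1 << 20) - 1        # keep results to 20 bits, the size mentioned above

_rng = random.Random(955)     # fixed seed so the toy table is reproducible
# Hypothetical 256-entry table of pseudorandom but unchanging values.
TABLE = [_rng.getrandbits(20) for _ in range(256)]


def toy_hash20(data: bytes) -> int:
    """Toy lookup-and-XOR hash: one table lookup per input byte."""
    h = 0
    for b in data:
        h = ((h << 1) | (h >> 19)) & MASK20  # rotate left by one within 20 bits
        h ^= TABLE[b]                        # substitute the byte via the table
    return h


def request_accepted(data: bytes, claimed_hash: int) -> bool:
    """Model of a gatekeeper that only honors correctly 'signed' requests."""
    return claimed_hash == toy_hash20(data)


payload = b"example write request"
good = toy_hash20(payload)
accepted = request_accepted(payload, good)
rejected = request_accepted(payload, good ^ 1)   # any wrong hash is refused
print(f"hash = {good:#07x}, accepted = {accepted}, wrong hash accepted = {rejected}")
print(f"odds of a blind guess matching: 1 in {1 << 20:,}")
```

Computing the hash is trivial for anyone holding the table, yet a caller without the table cannot produce a valid value except by guessing among 2^20 possibilities per request, which is why the table itself is the secret that matters.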

(01:24:18):

Some have suggested deep hardware reverse engineering, popping the lid off the chip and tracing its circuitry. Wow. But because this is a known avenue of general vulnerability, chips are well protected from this form of reverse engineering, and that sure seems like a stretch. In any event, every one of the five processor generations had this same backdoor, differing only in the enabling command and address. Did they have the same S-box? Yes. Same S-box. Yeah. They want to change that every time. Some have suggested that this was implanted into Apple's devices without their knowledge, and that the disclosure of this would've come as a horrible surprise to them. But that seems farfetched to me as well. Again, largely identical implementations with a single difference across five separate yearly generations of processor, and then to have the added protection against its use or coincidental discovery of requiring a hash signature for every request.

(01:25:39):

To me, that's the mark of Apple's hand in this. If this was implanted without Apple's knowledge, the implant would've been as small and innocuous as possible, and the implanter would've been less concerned about its misuse, satisfied with the protection that it was unknown, that there was this undocumented set of registers floating out in this massive address space that only they would know about. They would want to minimize it. Add to that the fact that the address space this uses is wrapped into and around the region used by the GPU coprocessor. To me this suggests that the GPU coprocessor and this had to be designed in tandem. Okay. After Apple was informed that knowledge of this little hardware backdoor had somehow escaped into the wild and was being abused as part of the most sophisticated attack on iPhones ever seen, they quickly mitigated this vulnerability with the release of iOS 16.6. During its boot-up, the updated OS unmaps access to the required memory ranges through the processor's memory manager.

(01:27:07):

This removes the logical-to-physical address mapping and access that any code subsequently running inside the chip would need. Doesn't that break stuff though? I mean, no, just that. I mean, it wasn't supposed to be there anyway. Oh, yeah. It's literally just those critical ranges. The Kaspersky guys conclude by writing: This is no ordinary vulnerability, and we have many unanswered questions. We do not know how the attackers learned to use this unknown hardware feature or what its purpose was. Neither do we know if it was developed by Apple or if it's a third-party component like ARM CoreSight. What we do know, and what this vulnerability demonstrates, is that advanced hardware-based protections are useless in the face of a sophisticated attacker as long as there are hardware features that can bypass those protections. Hardware security very often relies on security through obscurity, and it is much more difficult to reverse engineer than software. But this is a flawed approach, because sooner or later all secrets are revealed. Systems that rely on security through obscurity can never be truly secure.

(01:28:44):

That's how they end, and Kaspersky's conclusions here are wrong. The Kaspersky guys are clearly reverse engineering geniuses, and Apple, who certainly never wanted knowledge of this backdoor to fall into the hands of the underworld for use by hostile governments and nation states, owes them big time for figuring out that this closely held secret had somehow escaped. But I take issue with Kaspersky's use of the overused phrase, security through obscurity. That does not apply here. The term obscure suggests discoverability. That's why something that's obscure and thus discoverable is not truly secure, because it could be discovered. But what Kaspersky found could not be discovered by anyone, not conceivably, because it was protected by a complex secret hash. Kaspersky themselves did not discover it. They watched the malware, which had this knowledge, use this secret feature, and only then did they figure out what must be going on. And that 256-entry hash table, which Kaspersky documented, came from inside the malware, which had obtained it from somewhere else, from someone who knew the secret. So let's get very clear, because this is an important point. There is nothing whatsoever obscure about this. The use of this backdoor required a priori knowledge, explicit knowledge in advance of its use, and that knowledge had to come from whatever entity implemented this.

(01:31:00):

We also need to note that as powerful as this backdoor was, access to it first required access to its physical memory-mapped I/O range, which is and was explicitly unavailable. Software running on these iDevices had no access to this protected hardware address space. So it wasn't as if anyone who simply knew this secret could just waltz in and have their way with any Apple device. There were still several layers of preceding protection which needed to be bypassed, and that was the job of those first two zero-day exploits, which preceded the use of 38606. So we're left with the question, why is this very powerful and clearly to be kept secret faculty present at all in the past five generations of Apple's processors? I don't buy the argument that this is some sort of debugging facility that was left in by mistake. For one thing, it was there with subtle changes through five generations of chips.

(01:32:26):

That doesn't feel like a mistake, and there are far more straightforward means for debugging. No one hash-signs a packet to be written by DMA into memory while they're debugging. That makes no sense at all. Also, the fact that its use is guarded by a secret hash reveals and proves that it was intended to be enabled and present in the wild. The hash forms a secret key that explicitly allows this to exist safely and without fear of malicious exploitation without the explicit knowledge of the hash function. This is a crucial point that I want to be certain everyone appreciates. This was clearly meant to be active in the wild, with its abuse being quite well protected by a well-kept secret. So again, why was it there? Until Apple fesses up, we'll likely never know. So before you go on, does this functionality have any purpose that we know of?

(01:33:55):

Is it used in any way by Apple? No. No, and that's the point. It's weird. It's in there. There is no reference to it, and that's what the Kaspersky guy looked for. He looked everywhere. Nothing uses it. There is no reference to it anywhere. So if that's the case, it implies it might've been put in there for this purpose. I mean, what purpose? That's what I believe. Whatever the purpose, if it's not being used currently, you made some extreme efforts to not only put it in there, but to protect it. You have a 256-byte secret that presumably, like, one Apple engineer knows, and it's kept under lock and key in a basement office somewhere in Cupertino. Would the chip manufacturers know this hash? All they see is a mask, which they are imbuing on the silicon. They can't see. Nope. So there is a secret here, which Apple presumably holds tightly, to a functionality which nobody apparently uses except maliciously. Yes.

(01:35:11):

Well, that's interesting. That is exactly it. Okay. So could this backdoor system have always been part of some large ARM silicon library that Apple licensed without ever taking a close look at it? Okay, well, that doesn't really sound like the hands-on Apple we all know, but it's been suggested that this deliberately engineered backdoor technology might have somehow been present through five generations of Apple's custom silicon without Apple ever being aware of it. One reason that idea falls flat for me is that Apple's own firmware device tree, which provides the mapping of all hardware peripherals into memory, the documentation of the mapping, accommodates these undocumented memory ranges without any description, unlike any other known component. Nowhere are these memory ranges described. No purpose is given. And Apple's patch for this, that's what this CVE fixes, Apple's patch for this changes the descriptors for those memory ranges to deny, to prevent their future access.

(01:36:38):

Well, that further confirms that there's no reasonable use for this, if you could just turn it off and it doesn't break anything. It's not being used by anybody. That's right. But it's in there and it's carefully put in there. Yep. The other question, since the hash function is not obscure, it is truly secret, is how did this secret come to be in the hands of those who could exploit it for their own ends? No matter why Apple had this present in their systems, that could never have been their intent. And here I agree with the Kaspersky guys when they say, quote, "this is a flawed approach because sooner or later all secrets are revealed." Somewhere, Leo, exactly as you said, people within Apple knew of this backdoor. They knew that this backdoor was present and they knew how to access it, and somehow that secret escaped from Apple's control.

(01:37:47):

Do you think it's possible Apple put that in there at the behest of a government, a China or Russia, as a back... "Yeah, we have a backdoor you can use"? That's two paragraphs from here. Okay, keep going. Sorry. What we do know... You got my mind going, this is fascinating. This is very good. Now you know why we're talking about nothing else this week. What we do know is that for the time being, at least, iDevices are, that is after this patch, more secure than they have ever been, because backdoor access through this means has been removed after it became public. But because we don't know why this was present in the first place, we should have no confidence that next-generation Apple silicon won't have another similar feature present. Its memory-mapped I/O hardware location would be changed, its hash function would be different, and Apple will likely have learned something from this debacle about keeping this closely held secret even more closely held.

(01:39:04):

Do they have a secret deal with the NSA to allow their devices to be backdoored? Those prone to secret conspiracies can only speculate. And even so, it does take access to additional secrets or vulnerabilities to access that locked backdoor. So using it is not easy. Could Apple, though, have gone to somebody and said, here's the step by step? They would know. I mean, it does involve this font resizing and TrueType to get in in the first place. Well, yeah, those are two particular zero-days. As we know, Apple is constantly patching zero-days. They may have a more direct access to this that doesn't require a zero-day, perhaps. Well, remember, this thing has been in place now since the A12, five years. Yes, yes. So it's been blocking things, yet people have still been getting in. This may have been used for a long time, and Kaspersky finally just found it, and the reason they found it was that it was embedded on the iPhones of many Kaspersky employees. Yes. But what we don't know is who the bad guys are who did that. It's almost certainly a nation state. Would you agree?

(01:40:32):

Mistake. It's got to be Fancy Bear or somebody. Right? It was a mistake to attack Kaspersky because, yeah, now the world knows, and Apple turned it off. Was Apple forced to turn it off? Are they glad they turned it off? Were they surprised? I would prefer to believe that Apple was an unwitting accomplice in this, that this was an ultra-sophisticated supply chain attack, that some agency influenced the upstream design of modules that Apple acquired and simply dropped into place without examination, and that it was this agency who therefore had and held the secret hash function and knew how to use it. As they say, anything's possible, but that stretches credulity farther than I think is probably warranted. Occam's razor suggests that the simplest explanation is the most likely to be correct, and the simplest explanation here is that Apple deliberately built a secret backdoor into the five most recent generations of their silicon.

(01:41:52):

One thing that should be certain is that Apple has lost a bit of its security shine with this one. A bit of erosion of trust is clearly in order because five generations of Apple silicon contained a backdoor that was protected by a secret hash whose only reasonable purpose could have been to allow its use to be securely made available after sale in the wild. And that leaves us with the final question: why? And we can only speculate. I mean, we don't know who put it on the Kaspersky iPhones. Could have been the Russian government, much more likely the US government, the NSA. Somebody had knowledge. Yes, it's speculation, but it's not a long stretch to think that the NSA required a backdoor of Apple. Apple complied, gave it to the NSA, made it super inaccessible, made it hard. It was safe. It was safe to use. If you were going to design a backdoor, Steve, this is how you would do it.

(01:43:03):

Yes. Yes, it is absolutely safe. And so yes, you're right, Leo. The NSA could have said, "We require a means in. Make it secure." And they made it secure, and we know, because of the Patriot Act, they could have done it with a national security letter that would require Apple not reveal that this had been done, ever, ever. And certainly allowed Apple to foreclose it the instant it became public, and they did. And as I said, there is nothing now that would lead us to believe it will not reappear under a different crypto hash in the next iteration of silicon. Well, and not to be conspiracy theory minded, but there's nothing to make us think that every other phone manufacturer hasn't done the same thing for the NSA. Now, one interesting thing, and maybe this will come out after people have had a chance to look at other ARM silicon.

(01:44:01):

That's a good question. Is this an ARM problem? If this was inherited from an upstream supplier, we would expect other devices to have the same thing. Yeah. Yeah. So that's what's unknown. Is this Apple only? Is this exclusive to Apple or do other devices have this too? It looks like it is though. Yes, you have to catch it in use. I mean, Kaspersky only caught it because they had malware that was doing it. It makes for, by the way, an excellent spy novel or movie, because the secret is a 256-byte chunk that could be sitting on any variety of things. It could be put in a JPEG with steganography. It makes for a very interesting spy novel. Possibly the NSA got to an Apple engineer, but it'd have to be the Apple engineer. I can't imagine this secret was held by many people, maybe not by any one person at all. If I were Apple, I'd split it up. Very interesting, very interesting. I mean, the most obvious explanation is, as you said, the NSA and a secret backdoor. They said, you have to put this in. We need access.

(01:45:24):

And in which case, maybe you can't find fault with Apple, because that's the problem with being a business in the US. You have to follow the laws of whatever country you're doing business in, whether it's China or the US. They're not in Russia anymore. And Apple could argue it is safe. I mean, what they did, it might as well not exist except for the fact that the secret got loose. Well, or maybe it didn't. Maybe the NSA still has it closely held. On the other hand, reverse engineering the malware. It hasn't mal. See, that's right. We only know because we've got the malware. Somebody in the Discord is pointing out, bad rod points out, that both the Chinese government and the Russian government have within the last year forbidden the use of iPhones in government business. Yep, that's true.

(01:46:16):

Wow. This is quite a story. This has been present for the last five years. This went into, this first appeared in the A12, five generations ago. And if they can do it once, they can do it again. There's no guarantee that... That's exactly my point. If they turn this one off, it'll be in the next generation of silicon. And again, we'll have no idea. Well, for all we know there's three backdoors in the existing generation. That's a very good point. That's a very good point. There could be two other hashes and the same access, so that if one is discovered, the other two remain active. I am going to vote in favor of Apple and say they were compelled to do this by the NSA. They had no choice. They couldn't reveal it. They did it in as secure a way as possible. All the evidence suggests that so far, and I think, in their favor, they had no choice.

(01:47:16):