Security Now 940, Transcript

Please be advised this transcript is AI-generated and may not be word for word. Time codes refer to the approximate times in the ad-supported version of the show.

Leo Laporte (00:00:00):

It's time for Security Now. Steve Gibson is in the house, so am I, and we have lots to talk about. We'll talk about fast file hash calculations and why you don't have to overwrite your hard drive over and over and over again. Steve explains the issues in wiping your hard drive, whether an SSD or a spinning drive, and then why he's leaving Twitter. It's all coming up next on Security Now!

(00:00:30):

[00:00:30] This is Security Now with Steve Gibson, episode 940, recorded Tuesday, September 19th, 2023: When Hashes Collide.

(00:00:50):

Security Now is brought to you by Bitwarden. Get the open source password manager that can help you stay safe online. Get started with a free Teams [00:01:00] or Enterprise plan trial, or get started for free across all devices as an individual user at bitwarden.com/twit. And by DeleteMe. Reclaim your privacy by removing personal data from online sources. Protect yourself and reduce the risk of fraud, spam, cybersecurity threats and more by going to joindeleteme.com/twit and using the code TWIT for 20% off. And by [00:01:30] Drata. All too often security professionals undergo the tedious and arduous task of manually collecting evidence. With Drata, companies can complete audits, monitor controls, and expand security assurance efforts to scale. Say goodbye to manual evidence collection and hello to automation, all done at Drata speed. Visit drata.com/twit to get a demo and 10% off implementation. It's time for Security Now, the show where we cover the latest news [00:02:00] from the security world with the king of all security news.

(00:02:04):

Steve Gibson. Hi Steve.

Steve Gibson (00:02:06):

My hand looks pretty big. I'm going to move it back for you. Get smaller say, told me

Leo Laporte (00:02:10):

so I did some of the shows from Mom's house last week and they told me my head was too big, so I'm going to do the same thing next time. Well, lemme tell you. I know it's easy to get too close. Exactly. Thank you Jason Howell for filling in for me last week. I really appreciate that. Oh, and by the way, I did want to mention because you were [00:02:30] concerned about audio volume, the last two podcasts have been absolutely uniform. Good. So whatever it was that was causing those weird volume diminishes, diminishments diminishing dominion. Didn't happen. We're fixed. Yes. Good. So we have a really fun podcast. This is going to be a goodie.

(00:02:53):

As I said before we began recording, we are going to start by quickly filling you in on two important pieces of [00:03:00] info. One I already know you know about because you mentioned it on the previous Sunday show before your absence last week, but just need to keep synchronized and you may have some things to add as well. Of course. Then guided by some great questions and comments from our listeners. We're going to look into the operating, the more detailed operation of hardware security modules. Since that question came up. The need for fast file hash calculations, browser [00:03:30] identity segregation, the non hysterical requirements for truly and securely erasing data from mass storage, A cool way of monitoring the approaching end of Unix time and maybe the end of the simulation that we're all in my plans to leave. I forgot that was your theory.

(00:03:57):

I hope to God I'm here in 2038. That's all I can [00:04:00] say. Also, my plans to leave Twitter, Leo and what I think will be a very interesting deep dive into cryptographic hashes and the value of deliberately creating hash collisions. Thus, episode 940 of security now is titled When Hashes Collide. Well, you're going to leave Twitter. Well, I can't wait to hear that. [00:04:30] Yeah, probably. Maybe, maybe probably. He's talking about now charging everybody, which I think would cause a grand exodus to be honest. And Leo, that is the catalyst as a matter of fact. Yeah, yeah, that's going a little, if that happens, that ends it as far, I mean I think you'll back down on that one. I can't imagine going through with that. But anyway, we'll find out because it will kill Twitter. It will objectively kill it. Yeah. Security now is brought to you by Bit Warden. [00:05:00] Let me talk about something much more positive and something we love dearly, which is password managers.

(00:05:08):

My sister. Okay, I'm visiting my sister and I gave her my old iPhone because I'm going to get the new one Friday and she says, yeah, all my passwords are written down. Wait a minute, lemme see if I can find them. Just checking her pockets. Eva, you don't use a password manager. This is the sad truth. I think most normal people are still making [00:05:30] up their own passwords or variations of the same password, forgetting them, reusing them, doing all sorts of things that really we know are low hanging fruit when it comes to bad guys. You got to, I told her this, I said, look, get bit Warden, it's free and it's good. You got to have a password manager. Bit Warden is the one I use. The one Steve's uses the one I think most smart people now use because [00:06:00] it really gets the job done.

(00:06:01):

You have long, strong, unique passwords for every place you log into. Bitwarden keeps track of it. Bitwarden stores it securely. It helps you in a lot of other ways too, and I'll talk about that in a second. The main reason I went with Bitwarden is because it's open source and it's cross platform. There are other open source password managers, but only Bitwarden works on Mac, Windows, Linux, iOS and Android, I mean everywhere. And [00:06:30] it works in business as well as at home. We're going to be moving to the business version, the enterprise version, soon. We've all switched over and I want you to switch over too if you haven't thought about it. And Eva, I'm talking to you. With Bitwarden, all the data in your vault is end-to-end encrypted, not just your passwords. That's important.

(00:06:47):

You'd say, well, what? Of course it is. No, LastPass was not encrypting the sites you visited and all sorts of metadata about the passwords was in plain text. Not with Bit Warden. The whole thing is encrypted. [00:07:00] In fact, in the summer 2023 G two Enterprise Grid bit Warden solidified its position as the highest performing password manager for Enterprise. And I think that's important. I want to mention that because I think people go, oh, open source is not for enterprise. No, no, no. It left the competitors in the dust bit. Warden protects your data and privacy by adding strong, randomly generated passwords for every account. But, and I said there's additional features there. They have a username generator [00:07:30] which can create unique usernames for each account. And if you use one of the five integrated email alias services, including by the way our sponsor FastMail, you can generate a unique email address for every single account.

(00:07:44):

So now the bad guy not only has to get your password or guess it, he has to guess the email. You're not using the same email everywhere. It's unique to each one. That's so cool. By the way, I do say open source is important whenever you're talking crypto. [00:08:00] That's one of the reasons I love a bit warden because their code is all available on GitHub so everybody can see it, but they don't stop there. They also have professional third party audits. They do those every year and all the results are fully published on their website. So you can read all of it and yeah, sometimes the audits find holes and I'm always impressed at how quickly bit Warden goes, oh, we'll fix that. And within it seems like minutes it's fixed. Open source security you can trust also because it's open source people can [00:08:30] contribute to it.

(00:08:32):

Next, who I think is a listener of the show who wrote a scrypt and an Argon2 implementation for the password key derivation functions and submitted it. He did a pull request, submitted to Bitwarden. Bitwarden looked at it, worked with him and said, you know what, we don't want to implement both because it confuses people. Let's do Argon2. It's memory hard. It's a great choice. It's easy to implement. They took his code, they vetted it of course, and they added it, and now that's part of the Bitwarden [00:09:00] manager so you can use it. In fact, I immediately switched over to Argon2, which is a little better than PBKDF2, which they still offer because I guess FIPS requires it. But that's nice to know. You don't have to worry about that. And then if you're curious and you turn it on and you see all those settings, the default settings are excellent.

(00:09:18):

OWASP agrees. Everybody agrees. That's the way to do Argon2. So that's a nice benefit of being open source. Others can contribute to it. Share private data securely, and this is a new feature, with coworkers [00:09:30] across departments or the entire company with customizable and adaptive plans. There's the Teams organization plan, that's $3 per month per user. The Enterprise plan, the one we're going to use, is $5 per month per user. But there's always, and I asked them this specifically, always the basic free account. I said, you're not going to suddenly start charging or take features away? They said, no, it's not our business model and we can't, it's open source, remember? So if we change anything, somebody forks it and it lives on. The basic [00:10:00] free account includes unlimited passwords on all platforms. If you want two factor, then you can upgrade to premium. I did that anyway, just because I wanted to support them.

(00:10:09):

And that's $10 a year, that's nothing. Or use the family plan, the Family organization, six users for $3.33 a month. Bitwarden just released the new passwordless SSO feature. I buried the lead on this. This is a big deal. Single sign-on with trusted devices lets [00:10:30] users log into Bitwarden and decrypt their vault after using SSO on a registered trusted device, which means no more master password. So this new solution is better. It's even easier for enterprise users to stay safe and secure with Bitwarden. Learn more about SSO with trusted devices at bitwarden.com/twit. It's kind of the enterprise version of passkeys. It's very good, with some nice improvements. Look, you got to have a password manager, [00:11:00] Eva, everybody. You got to have one, get one. Why not start with one that's free forever? That free Bitwarden personal account, or get a free trial of the Teams or Enterprise plan at bitwarden.com/twit.

(00:11:14):

Bit warden, B I T W A R D E N, bit warden.com/twit. Alright, enough. I'm sorry I talked on and on, but I love this product and I want everybody to use it.

(00:11:26):

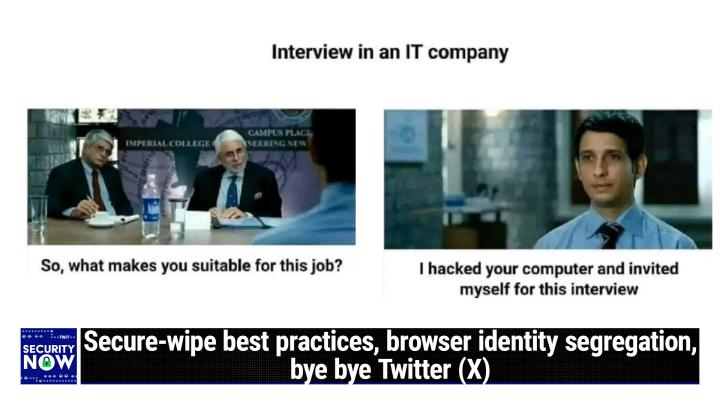

Picture of the week times, Steve.

Steve Gibson (00:11:28):

So yeah, it's [00:11:30] a two frame picture. We have two stuffed shirt looking guys, dudes wearing coats and ties and this looks like a real event maybe from TV because even though the second shot is the person who's over whose shoulder we're looking in the first frame, you could see the brick in the building is the same. So it's actually this kid was [00:12:00] actually facing these two stuffed shirt guys. And in the background you can also read looks like Campus Place and Imperial College. And then something about engineering knew something. So anyway, the caption, the question being posed in the first frame is these two stuffed shirts are looking at 'em saying, so this is a job interview.

(00:12:25):

What makes you suitable for this job? And this [00:12:30] very serious looking kid who does look sufficiently uncomfortable in his shirt and tie, which is good. He says, I hacked your computer and invited myself for this interview. That's one way to get a job. So there love it. Okay, so Leo, I needed to make sure you knew about this last week's podcast was titled Last Mess. [00:13:00] And that's because Wait, there's more. Oh baby. Yes. Oh geez. Brian Krebs reported the very strong and still mounting evidence that selective decryption of the stolen LastPass vaults has been occurring and that the cryptocurrency keys stored in the decryption targets have had their [00:13:30] funds emptied. I did see that story. We actually reported on it on twit before I left.

(00:13:38):

Once LastPass was hacked, then the next question was are they going to be able to decrypt the vaults, brute force decrypt them, or is it a nation state or maybe a competitor who's just trying to smear LastPass. And so we were just waiting and it does seem like there's some evidence that, and what they're doing is makes sense. Cherry picking the most [00:14:00] valuable accounts. They have no interest in logging into Facebook as Aunt Mabel. No, these guys, they want one thing money. And so they're going to target LastPass early adopters with low iteration counts because LastPass screwed up by never increasing those proactively.

(00:14:24):

And the analysts who looked at this noted that these were not [00:14:30] neophytes. These are people in the crypto industry. In fact, that's how they're being targeted, right? Because we know that the email address is in the clear, it's in plain text. So by scanning through email addresses in the 25 million plus vaults that were exfiltrated and stolen, they're able to identify targets who they know by their email address the companies that they're with and go, Hey, there's a good chance that [00:15:00] this guy has cryptocurrency. He may have made the mistake of giving LastPass his keys, didn't know it was a mistake at the time, and it's a mistake I wish I had made but didn't, so I can't get it in my wallet. I kept looking at the last pass so I don't have to worry about this. But that's interesting. And the iteration count is also in the clear as it must be because you need to know the count in order to know how to iterate in order to decrypt the vault.

(00:15:27):

Unbelievable. Unbelievable. They know where the [00:15:30] low hanging fruit is by low iteration counts. They know who the people are, meaning that they're likely targets. And if the iteration count is low, they can put a whole bunch of GPUs on the task of doing a brute force crack. Apparently they were able to crack a couple, I don't remember if it was a couple per week or per month, but it must be per week because there have been enough of them since the theft. And apparently about $35 million [00:16:00] worth of LastPass users' cryptocurrency has been stolen. And that was the giveaway, or maybe not giveaway, but the clue that it might be because of the LastPass breach. It was the one factor in common among all these accounts, and it makes sense. They were security aware, they were early adopters. They probably had strong passwords, but they were early adopters.
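To make the point about iteration counts concrete, here is a minimal sketch, assuming a PBKDF2-HMAC-SHA256 key derivation like the one discussed in this episode. The function names, the salt, the guess string, and the three iteration counts are illustrative assumptions, not LastPass's actual parameters. It shows that the cost of testing one password guess grows roughly in proportion to the iteration count, which is why vaults left at low counts are the cheapest to brute force.

```python
import hashlib
import time


def derive_vault_key(master_password: str, salt: bytes, iterations: int) -> bytes:
    """Derive a 32-byte key from a password with PBKDF2-HMAC-SHA256."""
    return hashlib.pbkdf2_hmac(
        "sha256", master_password.encode("utf-8"), salt, iterations, dklen=32
    )


def seconds_per_guess(iterations: int, salt: bytes = b"user@example.com") -> float:
    """Time one derivation, which is what an attacker pays for every guess."""
    start = time.perf_counter()
    derive_vault_key("candidate-guess", salt, iterations)
    return time.perf_counter() - start


if __name__ == "__main__":
    # Hypothetical counts chosen only to show the scaling.
    for count in (1, 5_000, 600_000):
        print(f"{count:>7} iterations: {seconds_per_guess(count):.4f} s per guess")
```

The iteration count has to be stored in the clear alongside the vault, as noted above, because the legitimate client needs it to repeat exactly the same derivation.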

(00:16:24):

And so the PBKDF2, well, actually one guy admitted to only having an eight character password [00:16:30] and a low iteration count. It was like, oh, he was the first. And this guy thought, maybe this was two months ago before the news broke, he said, maybe I should move my cryptocurrency. He lost three and a half million dollars. So he had already set up another wallet and he was just procrastinating. He said, yeah, he was ready to go and he didn't do the transfer. So the three and a half million was [00:17:00] still sitting in his wallet whose keys were stored in LastPass, and now it's gone. Wow. So no new home for you. Okay. Oh, the other thing I just wanted to make sure of, and I know you did talk about this, was the UK apparently blinking on this issue of no-exception CSAM monitoring. Ofcom, [00:17:30] their regulatory body, added a clause to this. The online child protection act added the clause where technically feasible and where technology has been accredited as meeting minimum standards of accuracy in detecting only child sexual abuse and exploitation content. Well, that wasn't there originally. That got added.

(00:17:58):

So as far as all [00:18:00] of the secure crypto companies are concerned, that's their out card. I mean they're done. This is no longer a problem. The politicians get to say, oh look, we've enacted really the strongest legislation we could and Apple and Signal and WhatsApp. And everybody's like, okay, oh, telegram, okay, yeah, great, glad you did that. We will be able to keep offering our services because it is not technically [00:18:30] feasible to do what you have asked us to do. And this added clause here late just before the legislation closed, gives us our out. So of course now the big question is what will the EU do? But the gal that's running Signal was extremely happy saying that this broke the inertia that had been mounting among the various nation states about this, and it looks like now [00:19:00] it's probably not going to happen again.

(00:19:02):

They'll all claim that they enacted great legislation so they can always get themselves reelected. It's perfect. It won't matter. It's a perfect solution. Everybody wins. Everybody wins. Everybody wins. Okay, so Chris Smith tweeted, he said, I'll politely ask that you ponder the scalability of your personal HSM and whether it could even [00:19:30] keep up with the I/O of your own domain. Yes, it would be awesome if prevailing enterprise-class HSMs could commonly do more than just securely hold the keys. Rainbow crypto accelerator cards paved the way for ubiquitous TLS, and perhaps someday enterprise HSMs will similarly step up. Okay, now, so Leo, what Chris is referring to is that we learned [00:20:00] last week how it was that Microsoft lost that key. Wasn't that a story? And I'm sure you said this, but credit to Microsoft for being very honest about this.

(00:20:13):

Very forthright except for one thing. Oh, so yes, honest. It was a very detailed technical description of what happened. It was a technical description. The fumble was the key was in RAM when the server crashed, [00:20:30] which caused a snapshot of the crash dump to then as a consequence of five bugs, I've seen security experts tweet, you would be amazed at how many crash dumps contain secrets. That's the first place you look, right? Yes. And of course, and so Microsoft knew this, right? So they actually had filters, secret filters in the pipeline that this crash dump moved through and they all missed it.

(00:21:00):

[00:21:00] So there were like five bugs that all had to be there for this crash dump to make it onto a developer's computer, out of the production environment into the operating environment, which then allowed China to come in and grab it. Okay? So yes, there were bugs, but the original sin was that this key was in RAM. And [00:21:30] this brings us to Chris's point. I was saying that even at GRC I have a cute little HSM, which is where code signing... What is that? Yes. Okay, so that's the issue. That's a hardware security module. So we actually have two questions here. We have Chris's. So he's referring to my comment from last week about Microsoft having lost control of their secret [00:22:00] signing key, which then allowed bad guys to sign on their behalf and thus gain access to their customers' enterprise email. And so the point is it should have never been in RAM.

(00:22:13):

The question raised is why, in this day and age where we have hardware security modules. So an HSM is very much like a TPM, a Trusted Platform Module, which we all now have built into our motherboards. So maybe [00:22:30] Microsoft will address this oversight, but I made the point that even GRC's code signing keys are locked up in HSMs and are thus completely inaccessible, crash or no crash. My keys never exist in RAM and are thus never subject to theft. Now, Chris reminds us that cryptographic signing imposes significant [00:23:00] overhead, like compared to none, right? Because that's what you're comparing it to. Either you don't have any signing or you do, and if you're going to sign something, there's a computational cost to doing that. So he said that the use of custom hardware for accelerating this process has historically been necessary.

(00:23:22):

Okay? True. But the hardware for doing this, and at today's internet scale, exists [00:23:30] today. Now, it's true that my little USB-attached HSM dongle would melt down if it were asked to sign at the rate that Microsoft probably needs. But apparently Microsoft already had software doing that on a general purpose Intel chip running Windows, which is what crashed. Whereas to Chris's point, special purpose hardware can always be [00:24:00] faster. So my point, for whatever reason, was Microsoft was not using such hardware, and it is difficult to discount that as being anything less than sloppiness, and it did bite them. Now in the show notes, Leo, for the next question, I have a picture of the HSM that I'm using. Rich Carpenter... It's just a dinky little USB key. Yes, [00:24:30] that's it. Okay, so Rich said, Hey Steve, thank you for all your contribution to the security community and delighted to hear you're extending your tenure to four digit episode numbers.

(00:24:41):

In episode 939, you mentioned that the GRC code signing key is sequestered in a hardware security module. I'm familiar with HSMs in finance and banking, but would you consider a technical teardown of what HSMs are and how they operate without exposing [00:25:00] the contained key material for the Security Now audience? Many thanks, Rich in sunny Devon, UK. Oh, that's a nice question. I'm very, and Devon is not sunny. Okay. Oh, so maybe it was sarcastic, that's why he made a point. Oh, it'd be like sunny Seattle. Yeah, well, when it is sunny though, it's such a red letter day, I could see why he might sign it that way. It's like a big deal. Yeah. Okay, so Rich's [00:25:30] question of course followed nicely from Chris's. So the idea is simplicity itself. The particular key I use came from DigiCert already containing the code signing key I obtained from them.

(00:25:44):

That's cool. Now, that was several years ago and I've since updated it several times. What's significant is that the signing key, the signing keys, which this little dongle stores are [00:26:00] write-only. This little appliance, and this is true of HSMs on any scale, lacks the ability to divulge its secrets. They just don't have it. Once a secret has been written and stored in the device's non-volatile memory, very much like a little memory stick, it can be used, but it cannot be divulged. And this brings us to the obvious question. How [00:26:30] can the key be used without it ever being divulged? The answer is that this HSM is not just storage for keys. It contains a fully working cryptographic processor. So when I want to have some of my code signed, the signing software outside of the HSM creates a 256-bit SHA-256 cryptographic [00:27:00] hash of my code.

(00:27:03):

Then the resulting 32 bytes of the code's hash are sent into the HSM where they're encrypted using its internal private key, which never leaves. It can't leave. There's no read key command. All you can do is give it something to do and it does it. So the 32 bytes of the hash of [00:27:30] my code are sent into the key and the encrypted result is returned. So the whole point of this is that all the work is done inside the HSM, by the HSM, and only the encrypted result is returned. There is no way for the key to ever be extracted from the hardware dongle. It doesn't have any read key command, only write key. And just to complete a statement [00:28:00] of this entire process: what's then attached to my code along with the so-called signature is the matching public key, that is, the public key that matches the private key inside the dongle. So it's the matching public key, which has been signed by a trusted root certificate authority, in my case by DigiCert. So when someone downloads and wishes to verify [00:28:30] the signature of my code, the signature of its attached public code signing key is first checked to verify that the public key was signed by DigiCert. So that verifies the DigiCert assertion that this public key belongs to GRC.

(00:28:58):

Then that public [00:29:00] key is used to decrypt that encrypted hash, which is the signature, and the public key can only correctly decrypt the hash if the hash was encrypted by its matching private key, which GRC has and which is embedded in that HSM. So then the rest of the code that was downloaded [00:29:30] is hashed with SHA-256, and that hash is compared to the decrypted hash, which the public key decrypted. Only if they match do we know a whole bunch of things. We know that GRC's matching private key encrypted the hash of the code and that, because the hashes match, not one single bit of the code has changed. Otherwise [00:30:00] the hashes would never match. So although this whole process does have a bunch of moving parts, when everything lines up, it works. It's quite clever. That's quite clever. It's really clever.
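The sign-inside-the-device flow described above can be sketched as follows. This is a toy illustration under loud assumptions: the `ToyHSM` class, its method names, and the tiny textbook-RSA key built from two well-known Mersenne primes are all hypothetical and not secure, and the certificate step (DigiCert vouching for the public key) is left out. Real devices use large random keys and padded signature schemes. The sketch only shows the shape of the process: the hash is computed outside, only the 32-byte digest goes in, only the signature comes out, and anyone with the public key can verify.

```python
import hashlib


class ToyHSM:
    """Toy stand-in for a hardware security module (textbook RSA, NOT secure)."""

    def __init__(self) -> None:
        # Two known Mersenne primes keep the sketch dependency-free.
        p, q = 2**61 - 1, 2**89 - 1
        self.n = p * q          # public modulus
        self.e = 65537          # public exponent
        # Private exponent. Deliberately no method returns it; Python cannot
        # truly hide it, whereas real hardware has no "read key" command at all.
        self.__d = pow(self.e, -1, (p - 1) * (q - 1))

    def public_key(self) -> tuple[int, int]:
        return self.n, self.e

    def sign_digest(self, digest: bytes) -> int:
        """Apply the private key to a digest; only the result leaves the device."""
        return pow(int.from_bytes(digest, "big") % self.n, self.__d, self.n)


def sign_code(code: bytes, hsm: ToyHSM) -> int:
    digest = hashlib.sha256(code).digest()  # 32 bytes, computed outside the HSM
    return hsm.sign_digest(digest)


def verify_code(code: bytes, signature: int, public_key: tuple[int, int]) -> bool:
    n, e = public_key
    recovered = pow(signature, e, n)  # undo the private-key operation
    expected = int.from_bytes(hashlib.sha256(code).digest(), "big") % n
    return recovered == expected


if __name__ == "__main__":
    hsm = ToyHSM()
    program = b"example program bytes"
    sig = sign_code(program, hsm)
    print(verify_code(program, sig, hsm.public_key()))
    print(verify_code(program + b"!", sig, hsm.public_key()))
```

Changing even one byte of the code changes its SHA-256 hash, so the recovered value no longer matches and verification fails.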

(00:30:13):

I'm thinking about, I don't know, there must be some analogy we can use to describe this. Essentially the key knows who you are, but it has a secret of yours that only the key has and will never reveal, but it can use that secret to create now public keys, which it can then send [00:30:30] out into the real world, and then somebody getting that public key... This is how public key crypto works. Getting that public key knows that it's you because the public key can only be created by somebody who knows your secret, in effect. Right? That's how I log into my SSH server, which has my public key. It doesn't have my private key. Only I have my private key. So when I log in with SSH, SSH says, yeah, this is him, because the public key matches the private key, which only [00:31:00] I have.

(00:31:01):

And then they've added this really nice additional feature because you still need this hash of your code to verify the code's not been modified. So they take that hash, they I guess hash it, right? With your public key or... Well, yeah. So they take the hash, I take the hash when I'm publishing this and I encrypt the hash with my private key. You've encrypted it so it only can be decrypted by you, essentially by your team? No, it's [00:31:30] encrypted by me, but it can only be decrypted by the public key. By the public key. Got it. And the public key is signed by DigiCert attesting that it's GRC's public key. Very clever. That's a nice system. I like it. It's beautiful. That's taking kind of the simple public key crypto I use to do my SSH and adding this additional feature that it verifies some code.

(00:31:52):

Yes, you could do that when you download code from open source, you download the code, but you'll also download a hash that [00:32:00] needs to match the key public key that is posted on the key servers to make sure that that developer did write this code and it's unmodified. So we see that all the time without this certificate thing. And the nice thing for Windows users is if you right click on the file and bring up the little property dialogue, one of the tabs up there will say digital signature, and so you're able [00:32:30] to click on it and it says, this signature's been verified and it shows who signed it. So it shows DigiCert in this case. Yeah. Yes. Yeah. And so not only does Windows itself, all of Windows wacky security stuff see that a valid signature signed the code, which tends to calm down Windows antiviral knee jerk reactions, but then the user is able to easily see who signed [00:33:00] it themselves at any time.

(00:33:02):

Very nice. It's just a slick system. Public key Crypto is amazing. It's brilliant. Yeah, it is. So cool. And Leo, you are going to love this podcast topic when we get there. Okay, good. Something more. Very cool is going to happen. Okay, so Clint and I withheld his last name so as not to embarrass him, he said, Hey, Steve, longtime security, now follower. My name is Clint. I wanted to bring to your attention [00:33:30] that I just fell for a Verizon wireless fraud call. Oh, he says, since I wasn't aware of Verizon's fraud policy, meaning that they will never call you, I responded to a call that said someone tried to log into my account. In doing so, I actually gave them information they obviously didn't already have. After calling to talk to Verizon directly, I've changed my account pin and my password. [00:34:00] I'm working on letting Social security and other services I have know of potential activity that would not be me.

(00:34:08):

Hopefully nothing comes of this as I change my info quickly, but I'm still being proactive on this. Just thought I would share this in case it's something new that's going around that everyone needs to be aware of. So it's not that new, but this seems significant since Clint explains that he's also a long time [00:34:30] Security Now podcast listener. The lesson here is not knowing whether or not this is Verizon's policy, which really shouldn't factor in. It's skepticism first and never for one moment let down our guard. It's unfortunate because it's not the way humans are built, right? We tend to believe our senses, which is what stage magicians depend upon. [00:35:00] So when something reasonable and believable appears to happen, most people's first reaction is to believe it and to take some action. Clint now knows that his first reaction should have been to place his own call to Verizon rather than accepting a call ostensibly from Verizon.

(00:35:22):

In fact, he knew it then, right? But these scammers count on their targets getting caught up in the moment and [00:35:30] reacting to an emergency rather than taking the time to think things through. So I cannot say this often enough, which is why I've decided to say it again. It doesn't matter how many bits of encryption you have, the human factor remains the weakest link and the bad guys are incessantly clever. I dread the day when I will make such a mistake, and it could happen to any of us. If I could [00:36:00] type with my fingers crossed, I would. So anyway, just another reminder. Stan Bold said, greetings from a dedicated listener from Bharat, India. He says, I found your site around 2004 and it has always given me peace of mind. It was comforting to learn everything was secure then. And thanks to your weekly episodes, I remain confident. Referring to last week's episode about [00:36:30] checksum verification with VirusTotal.

(00:36:33):

Here's a handy tip for Windows users unfamiliar with the command line. I had mentioned that you could use certutil -hashfile, then the file name, space, SHA256, and somebody subsequently noted that SHA-256 is the default, so you don't actually have to be specific about that. Anyway, so he said, install the 7-Zip file archiver. [00:37:00] He says, this adds a CRC SHA verification option to the Windows right click menu. He said, I frequently use it to confirm file checksums. By the way, it's delightful to learn you'll be continuing the show for the foreseeable future. Many thanks. Okay, so this listener's comment caused me to check my own Windows right click file context menu, and I have a checksumming option there too. [00:37:30] I wanted to mention that there are a number of simple checksumming add-ons for Windows and Linux desktops. My file properties dialogue has a checksums tab. I was just talking about how it also has a digital signatures tab when the file you right clicked on has a digital signature. I always get a checksums tab, and I recalled deliberately installing it many years ago, and right clicking [00:38:00] on a file and using this tab is the approach I prefer. I think it makes sense for file checksumming to be there because it's so useful. I mean, it's like a signature, right? So it allows you to very quickly make sure that two things are the same.
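For listeners who would rather script the check than use a right click menu, here is a minimal sketch using only Python's standard library. The function names and the chunk size are illustrative choices. It reads the file in chunks so large downloads do not need to fit in memory, and it produces the same hexadecimal SHA-256 digest that tools like certutil display when asked for SHA256.

```python
import hashlib


def sha256_of_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, reading it one chunk at a time."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        # iter() with a sentinel keeps calling read() until it returns b"" (EOF).
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def same_contents(path_a: str, path_b: str) -> bool:
    """Two files with equal SHA-256 digests are, for practical purposes, identical."""
    return sha256_of_file(path_a) == sha256_of_file(path_b)
```

To check a download, compare the result of `sha256_of_file` against the digest the publisher lists, ignoring letter case in the hex string.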

(00:38:17):

The shell extension is the solution I've been using for years, and having something at your fingertips means, as we know, you'll tend to do it more often. So I have a couple of links in the show notes. [00:38:30] The one I was using is called HashCheck. It's open source. It has not been updated since 2009, unfortunately. It's only unfortunate, because it works great, because it does not support SHA-256, which I think any state-of-the-art checksum probably should. It's not that SHA-1, which it does support, is not good enough. It's that VirusTotal is using SHA-256 [00:39:00] and other things you may be wanting to cross-reference with use SHA-256. So it should too. For what it's worth, the source code is published. He offers the source code. It's got a Visual Studio project in it. So it would not be difficult for one of our listeners to update that.

(00:39:20):

And in fact, if one of our listeners wants to, I will be happy to tell everybody about it because it's really very clean and very cool, and I'm [00:39:30] not going to take the time away from my work to do that because I know that we have some listeners who could. So the link is in the show notes. There are also two other solutions on GitHub, both open source, one called HashCheck and one called OpenHashTab. So the options abound, and I just wanted to say, hey, it's easy to add that. Oh, and there is also one for Linux desktops for the rest of our population. We do it by a command line, [00:40:00] Steve. We need no extensions. You get out your reMarkable tablet and you just do the math by hand. I calculate it by hand, of course. That's what real men calculate.

(00:40:15):

SHA-256 by hand. Yeah, no, there's plenty of command line tools that'll do that on Linux. And a lot of times in Linux you've got the terminal open. You just say, well, check the hash on this. Yep. Yep. Okay, so finally, before [00:40:30] our second break, Chris Sheer said, hello, Steve. This week you mentioned running a separate browser for segregating extensions. Actually, for segregating logged-on cookies and so forth. I was saying if you normally use Firefox, you could use Chrome and not have extensions loaded there and so forth. Anyway, he said Chrome and Edge have a profiles feature where you can run the [00:41:00] browser separately as a different identity with different extensions, sessions, cookies, et cetera. I've used this to maintain sessions to multiple Azure, Gmail, or internal app sessions simultaneously. This is great for separating dev, prod, test, personal, et cetera.

(00:41:21):

They even get separate toolbar icons and windows, so you can launch right into the one you want, meaning launch the browser with the profile [00:41:30] that you want. In Edge and Chrome, you click your avatar or icon, and there's a profiles area where there's an option to make a new one or use a guest profile, either of which will be completely vanilla. Then he finishes, I believe Firefox has similar features, but I don't use it as often. He says 99 and beyond, plus. Okay, so I had completely overlooked profiles, so I'm glad Chris reminded me and anyone [00:42:00] else who might have a use for them. As always, I strongly prefer using features that are built into our existing systems rather than adding superfluous and unnecessary extras. And sure enough, in Firefox, entering about:profiles took me to my profiles page, where I saw that I currently have only one profile in Firefox, but there is an option to create more. So that's very cool, and thanks, [00:42:30] Chris, for pointing that out. And that would allow you to have your banking or super secure profile where you don't load all the other extensions, all of those requiring trust because they all have access to whatever you do while you're working with your browser. So very nice tip. And Leo, let's tell our listeners why we're here. Yes. And then I'm going to do a deep dive into what's actually required to securely erase [00:43:00] mass storage. That's a good subject, and we've talked about this before, especially with SSDs.

(00:43:09):

The question is this whole business of overwriting hard drives, right? Oh, oh, yeah. Yeah. Okay, good. Can't wait. Yeah. Our show today brought to you by the good folks at DeleteMe. This is something I wish we didn't need. In a perfect world, we wouldn't need DeleteMe, but as you probably have gathered, this [00:43:30] is a far from perfect world, and one of the issues is data brokers. Privacy is a hard thing to achieve, thanks to the internet these days. Have you ever searched for your name? Did you notice how much queasily personal information is available about you online? And you're not even seeing all of it. Did it make you feel, I don't know, exposed? Since 2010, DeleteMe has been on a mission to empower individuals [00:44:00] and organizations by allowing them to reclaim their privacy and help them remove personal data from online sources.

(00:44:09):

It can be done. The DeleteMe team really cares about this stuff. They're driven by a passion for privacy and a commitment to make it easy to use. DeleteMe helps reduce risk from identity theft, from credit card fraud, from robocalls and spam, cybersecurity threats, harassment, unwanted communications overall, and your business may need to use DeleteMe. [00:44:30] Ours does, because part of a spear phishing attack is gathering information about executives at a company so that they can be spoofed, credibly spoofed, with phishing, spear phishing emails. And if they know a lot about you, it's easy to convince an employee they are you. Oh, that's not good. DeleteMe is the most trusted privacy solution available. It helps thousands of customers, including our [00:45:00] employees, remove their personal information online. The average person has more than 2000 pieces of data about them online, pieces of data that may surprise you. Things like the value of your home, your home address, your home phone, your cell phone, your emails, pictures of you. Stuff perhaps you could remove on your own if you knew where to go.

(00:45:22):

The problem is there are hundreds, literally hundreds, of data brokers, and new ones all the time. They also play a game, which I've [00:45:30] discovered myself: you go there, you fill out the form, you get the data removed. You've got to do that hundreds of times, and then a couple of days later, you go back and it's been repopulated. They say, well, we found new sources of information and we're going to repopulate it. This is why you need DeleteMe. One customer said, I signed up. They took it from there. Awesome service. Already seeing the results. So when you sign up, you will give them some personal information. You need to. It's just basic stuff, because that's how they find your stuff: birth [00:46:00] date, name, things like that, that helps them get the search engines taken, get it down. And then this is the most important part.

(00:46:11):

DeleteMe experts will go out and look at hundreds of data brokers, find your information and remove it, reducing your online footprint, keeping you and your family safer. Once that starts, you're going to get a detailed DeleteMe report within seven days. You'll know immediately what they're doing. And this is really important: it doesn't stop there. DeleteMe continues [00:46:30] to scan and remove your personal information every three months. And with automated removal opt-out monitoring, they will ensure records don't get repopulated after being removed. Again, you could do this yourself, but I don't think anyone has the time to, or maybe in many cases, the expertise to. This is what DeleteMe does. They will get rid of names, addresses, photos, emails, relatives, phone numbers, social media, property values, and more. As the data broker industry evolves, DeleteMe [00:47:00] continues to add new sites and new features to ensure their service is easy for you to use and effective in removing personally identifiable information.

(00:47:08):

PII. Since privacy exposures and incidents affect individuals differently, you'll be glad to know they have privacy advisors for you, who will help ensure all your data has been removed, the data that's still there, whether it's important. These are experts who will help you understand what's going on, protect yourself, reclaim your privacy. [00:47:30] Go to joindeleteme.com/twit, joindeleteme.com/twit. The offer code TWIT will get you 20% off and will get us some credit, which is nice. They'll know you saw it here. joindeleteme.com/twit. You may need it for yourself as an individual, but companies, you really ought to think about this for your executive suite, your C-suite, and anybody who could credibly send an email saying, hey, Jones, I can't get to it right [00:48:00] now. I'm in the middle of a meeting. But perhaps you could help us by doing this. This is, by the way, the only reason I know all about this. I want to see if I can find it.

(00:48:11):

Such a text was sent out to many of our employees by somebody pretending to be Lisa, and wow, of course it wasn't. And I guess it's okay to show you this phone number because it is a scammer's phone number after all. Hi Jason. [00:48:30] This went to, I think, Jason Howell. I'm busy with meetings at the moment with limited phone calls, and I need you to complete a task for me right away. Read my text as soon as you get this. Thanks, Lisa Laporte. Guess what? Wasn't Lisa. This is why you've got to get this personal information off the internet, because they know the company's hierarchy, its structure, who works for whom. They could throw in stuff that makes it even more credible. Hi, it's Lisa. I'm at home [00:49:00] where I live, the address, that kind of thing. Got to do it. joindeleteme.com/twit. Use the promo code TWIT at checkout.

(00:49:08):

It was really terrifying. This happened in the summer. Most of our employees got these messages from somebody pretending to be Lisa. That's why Lisa uses DeleteMe. Ah, back to the show, Mr. Gibson. Okay, so Atli, A-T-L-I, Atli Davidson, he said, hi Steve, [00:49:30] longtime listener and SpinRite owner. I'm giving away an external USB hard drive and want to securely erase the drive. A few years back I stumbled upon the format /P option to specify how many times to overwrite the drive. There's a lot of mixed information on how many times to overwrite the drive, so I picked seven, as some articles suggested. What would be the sufficient number of times to overwrite the drive? Some say three. [00:50:00] Some say one. I used three passes for my SSD a few days back and am now using seven for a large spinning drive, which will probably take days.

(00:50:11):

I probably should have at least swapped those numbers and used a larger value for the SSD. Any thoughts? Looking forward to the next few hundred Security Now episodes. Okay, so as I mentioned previously, since Atli's problem is going [00:50:30] to become increasingly common for many of us, GRC's planned second commercial product, to follow SpinRite 7, will be an easy to use, fast and extremely capable secure data erasure utility. Its sole purpose will be to safely and securely remove all data from any form of mass storage. It will understand every nuance, strategy and variation available and will take care of every detail. But since [00:51:00] that future utility is not yet available, here's what needs to be done in the meantime. And of course, even after it's available, you could still do this and be doing just as good a job, but that advice won't be updated constantly and automatically, and you won't necessarily know all the features that each device offers.

(00:51:20):

So that's where it makes sense, I think, to bring everything together into a single place. But the first thing to appreciate is that there are [00:51:30] similarities and differences between the wiping needs for spinning versus solid state storage. The differences are largely due to their differing physics for bit storage, and their similarities are largely due to the need for defect management and wear leveling. So let's look at solid state storage first. The first thing to note is that electrostatic data storage, [00:52:00] which is the physics underlying all current solid state storage, has no notion of remnants or residual data, that is, in the individual bits. The individual bits of data are stored by injecting and removing electrons to and from individual storage cells. This process leaves no trace. Once those electrons have been drained [00:52:30] off, nothing remains to indicate what that cell may have ever contained, if anything. Consequently, nothing whatsoever is gained from multiple writes to an SSD.

(00:52:46):

The bigger problem with SSDs is wear leveling. As I've mentioned before, this process of electrostatically injecting and extracting electrons across an insulating [00:53:00] barrier inherently fatigues that barrier over time. Earlier single-level cell, SLC, storage was rated to have an endurance of at least 100,000 write cycles. The move to multilevel cell, MLC, storage has reduced that by a factor of 10 to around 10,000. Okay, still, 10,000 writes to every bit is a lot [00:53:30] of writing. So that's why, in general, solid state storage is still regarded as being more reliable than mechanical storage. But the problem is that if only five gigabytes of a 128-gigabyte solid state drive was written over and over, those remaining 123 gigabytes [00:54:00] would remain brand new and unused, while the first five gigabytes that was being written over and over and over would be fatiguing due to the stress of being written over and over, which is a real thing for this form of solid state storage.

(00:54:19):

So the process known as wear leveling was quickly introduced. Wear leveling introduces a mapping layer, which is interposed [00:54:30] between the user in the outside world and the physical media. This is known as the FTL, for flash translation layer. So now, just say, for example, that the first 4K bytes of memory is read from a flash memory. The user then makes some changes to that data and rewrites it back to the same place. [00:55:00] What actually happens is that the rewrite occurs to some lesser used physical region of memory, and the FTL, that flash translation layer, is updated to point the logical address of that memory region to the physical location where the updated memory now actually resides. This beautifully spreads the fatiguing [00:55:30] that's been caused by writes out across the entire physical surface of the memory to prevent the overuse of any highly written memory addresses.

(00:55:43):

But there's a downside to this when it comes to data erasure. Remember that the 4K bytes were originally read from the beginning of the drive at that location, but then they were rewritten to another location and the FTL was [00:56:00] updated so that the next read of that same 4K-byte region will be from the most recently written location, where the updated data was stored. But what happened to the original 4K-byte region that was read first? When the FTL is updated to point its address to the new location, it becomes stranded. It is literally unaddressed [00:56:30] physical memory. If the FTL does not have a mapping for it, it cannot be reached from the outside. It's still there. It still contains an obsoleted copy of the 4K-byte region's previous data, but there's no way to access it any longer.
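The stranding effect described here can be seen in a toy model. What follows is a minimal Python sketch, not a model of any real controller: the `ToyFTL` class and its methods are hypothetical, it ignores erase blocks and garbage collection, and it assumes there are always more physical pages than logical addresses in use.

```python
class ToyFTL:
    """Toy flash translation layer: logical page numbers map to physical pages."""

    def __init__(self, physical_pages):
        self.physical = [None] * physical_pages      # raw contents of each flash page
        self.write_counts = [0] * physical_pages     # wear per physical page
        self.mapping = {}                            # logical address -> physical page

    def write(self, logical, data):
        """Write `data` for `logical` to the least-worn page not currently mapped."""
        mapped = set(self.mapping.values())
        candidates = [p for p in range(len(self.physical)) if p not in mapped]
        target = min(candidates, key=lambda p: self.write_counts[p])
        self.physical[target] = data
        self.write_counts[target] += 1
        # Repointing the map leaves the old physical page untouched and unmapped.
        self.mapping[logical] = target

    def read(self, logical):
        """Read through the map, which is all the outside world can do."""
        return self.physical[self.mapping[logical]]

    def stranded(self):
        """List (page, data) pairs that hold data but have no logical address."""
        mapped = set(self.mapping.values())
        return [(p, d) for p, d in enumerate(self.physical)
                if d is not None and p not in mapped]


ftl = ToyFTL(physical_pages=4)
ftl.write(0, b"old secret")     # first write of logical page 0
ftl.write(0, b"new contents")   # the rewrite lands on a different physical page
```

After those two writes, `ftl.read(0)` returns only the new contents, while `ftl.stranded()` still reports the page holding `b"old secret"`. Overwriting logical page 0 again from the outside would never touch that stranded page, which is the gap a drive-level secure erase command is meant to close.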

(00:56:53):

And if there's no way to access it any longer, there's no way to deliberately overwrite it with all zeros or [00:57:00] whatever to assure that its old data has been permanently eliminated. You can't get to it. Now, okay, you might say if there's no way to access it, then no one else can either, but that's probably not true. Solid state memory controllers, which is where this FTL lives, contain undocumented manufacturing commands to allow external manipulation of the FTL. [00:57:30] So while it's not easy to do, it's likely that there are powers higher up that could perform recovery of all obsoleted data even after all of the user accessible data had been wiped clean. And if the information was important enough, it's also possible to desolder the memory chips, which are separate from their controller, and access them directly, thus bypassing the FTL [00:58:00] entirely. And there are videos on YouTube showing how this can be done.

(00:58:07):

It is for all of these reasons that solid state mass storage added special secure erase features to erase data that cannot be accessed through the normal I/O operations of reading and writing from the outside. When a secure erase command is sent to solid state memory, all [00:58:30] of the device's memory is wiped clean, whether or not it is currently accessible to its user. This command is carefully obeyed by today's manufacturers, since there's no upside to being found not to be doing this correctly. So in short, the only thing that needs to be done for any solid state drive which supports the secure erase feature is to arrange to trigger the command, then sit [00:59:00] back and wait for the command to complete. Once that's done, the device will be free to be discarded or given away to somebody else. It will be empty. I mean, this is a big deal, because for a long time we said you can't really effectively erase solid state because of this slack space problem.

(00:59:21):

And so that's good to know. That's good. You actually can. Yeah, that's really good to know. Although you need to know how to issue the command. There are various levels of command. [00:59:30] Some drives will allow you to securely erase only the unused area, so you get to keep the used area. This is why we need Steve to write an app, by the way. That's why I believe there will be a place for this, and for what it's worth, I would still do one pass of overwriting, just because, belt and suspenders. Do one pass of overwriting, which does not fatigue the device very much when you consider it's got 10,000 available, [01:00:00] and then tell it, now erase yourself, and that way, for sure. But what's interesting, Leo, is the story with hard drives is somewhat murkier due to their long history and some bad advice that has outlived its usefulness. And that's coupled with some panic that aliens may have more advanced technology than the NSA, which would enable them to somehow unearth layers of previously written [01:00:30] data from long ago. Okay, here comes my favorite line of the whole thing. Go ahead, keep going.

(01:00:39):

So here's what I believe to be true for any hard drives manufactured since around the year 2000. The earliest hard drives used an extremely simple encoding of user data into magnetic flux reversals. It was a modified form of frequency [01:01:00] modulation, so we of course call it MFM. Then, by dividing time into smaller quanta and using the precise timing of reversals to convey additional information, 50% more data could be stored using the same total number of flux reversals. We called that run-length limited coding, or RLL. I remember those days. And that was probably [01:01:30] the last time that it might have even been theoretically possible to recover any data that had previously been overwritten. Everything since then has been hysteria. I love your line in here. Go ahead, say it.

(01:01:52):

It's a miracle that it's possible to read back even what was most recently written. That they work at all [01:02:00] is a miracle. That's right. When discussing solid state memory previously, I was careful to mention that once the electrons had been removed from a storage cell, there was no remnant of the data which had previously been recorded. No footprint remains. That's not entirely true for magnetic storage, and that was the original source of the hysteria. To some degree, [01:02:30] magnetism is additive, because residual magnetism arises from the alignment of individual magnetic domains, and it's their vector sum that determines the resulting overall magnetic field vector. Thus, the greater the number of domains having the same alignment, the stronger the resulting field. So when a new [01:03:00] magnetic field is imposed upon a ferrous substance which was previously magnetized, the resulting magnetic field will be a composite which is influenced by the field that was there previously.

(01:03:17):

If the previous field was aligned with the newly imposed field, the result will be a somewhat stronger field. But if the previous field had the opposite alignment, although the new field [01:03:30] will dominate, the result may be ever so slightly weaker as a result of the memory of the previous opposite field still being present. So in theory, if we assume that a new magnetic field pattern was uniformly written, tiny variations occurring when that magnetic field is later read back might be due to the data [01:04:00] that was previously present. In other words, something remains, however tiny. That is the source of the concern over the need to overwrite and overwrite and overwrite a hard drive in order to adequately push what was there back into history, essentially burying the original data [01:04:30] so that it is beyond any recovery. None of that has been necessary for the past 20 years.

(01:04:39):

This was only theoretically possible when the encoding between the user's data and the drive's flux reversal patterns was trivial, as it was back in the early MFM and RLL days. It has not been trivial for the past 20 years. Today, and here's your line, Leo, [01:05:00] it's truly, and I mean this, a miracle that it is possible to read back even what was most recently written. It's just astonishing how dense these hard drives have become, and many drives have become utterly dependent upon their built-in error correction to accomplish even that. Today's magnetic flux reversal patterns, which are recorded [01:05:30] onto the disk surface, bear only a distant relationship to the data that the user asked to be recorded. Where it was once true that bits of user data created flux reversal events on the drive, today, large blocks of user data are massaged and manipulated, and something called whitening is done.

(01:05:54):

To them. They're bleached. It's kind of amazing these things work at all. It is insane, [01:06:00] Leo, how far this technology has gone. It's because of the density of the bits, right? I mean, we're just storing, yes. It's because of the density, and because it is so difficult to get any more of them in the same amount of space, yet management keeps demanding it, and the engineers go, well, I guess we could run it through a pasta maker in order to, let's whiten it. I don't know, shred it and then recombine it or something. I've got to say, there's a lot of credit to technology. I mean, you and I both, I'm sure, back [01:06:30] in 2000 thought there's no way we'll still be using spinning drives in 2023. People had been predicting the demise of the spinning hard drive since the nineties.

(01:06:42):

A decade, yeah. Yes. And we're still here, baby. They keep upping the density. I mean, you can get 20 terabyte drives now. Insane. Insane. It's unbelievable. Okay, so these large blocks of user data are massaged and manipulated, and then the result is what's [01:07:00] written back down. And then somehow this process is reversed. And after it's reversed, it's un-massaged and de-whitened, and the contrast is turned back up, and then error correction is used in order to give you back what you asked to have written in the first place. That's what's actually going on. So given all of these facts, here's my own plan for GRC's secure spinning [01:07:30] disk data erasure program, which will closely follow after SpinRite 7. Actually, I'm going to use pretty much all of SpinRite 7 in order to create the secure wiping utility. When GRC's program is asked to securely erase a spinning hard drive, it will use an extremely high quality random data generator to fill the entire drive with noise, pure noise.

(01:08:00):

[01:08:00] Then it will go back to the beginning and do it again. And that's it. If its user wishes to follow that with a wipe to all ones or all zeros for cosmetic purposes, so the drive appears to be empty rather than full of noise, they may elect to do so, but that won't be needed for security. The theory is that it's always possible to read what was most recently written, right? I mean, [01:08:30] that's what a drive does. So in this case, that'll be the noise that was written by the final second pass over the drive. Then also, by the sheerest, most distant theory of data recovery, one that really no longer has any support, it might theoretically be possible, and you may need to consult the aliens for [01:09:00] this, to get something back from that first noise writing pass.

(01:09:10):

But all that anyone would then ever get back is noise. The noise that was first written, absolutely unpredictable noise with no rhyme nor reason. And that noise will have absolutely, completely obscured any data [01:09:30] that was underneath it. I've been saying this for years. You don't need these 18 writes and overwrites and all that stuff. That's long gone. Given today's complexity of the mapping between the data the user writes and the pattern of flux reversals that result, there is no possibility of recovery of any original user data following two passes of writing pure noise on top of that data. [01:10:00] And thanks to SpinRite's speed improvements, this two-pass noise wipe will be able to be done at probably around a quarter terabyte per hour, because this utility will be using all of SpinRite 7's technology, so it will be feasible to wipe multi-terabyte drives in a reasonable amount of time and absolutely know that the data is gone.
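The two-pass noise idea can be sketched in a few lines of Python. This is only an illustration under loud assumptions: the function name `two_pass_noise_wipe` is hypothetical, it overwrites an ordinary file (or a disk image) in place rather than a raw device, `os.urandom` merely stands in for the "extremely high quality random data generator" described, and it says nothing about how GRC's utility will actually work. It also cannot reach spared-out sectors or remapped flash pages; that still needs the drive's own secure erase command.

```python
import os


def two_pass_noise_wipe(path, passes=2, chunk_size=1024 * 1024):
    """Overwrite the existing contents of `path` with random bytes, `passes` times.

    The file length is left unchanged; only its contents are replaced.
    """
    size = os.path.getsize(path)
    with open(path, "r+b") as f:
        for _ in range(passes):
            f.seek(0)
            remaining = size
            while remaining > 0:
                n = min(chunk_size, remaining)
                f.write(os.urandom(n))   # fresh noise for every chunk of every pass
                remaining -= n
            # Push this pass out of Python's and the OS's buffers before the next one.
            f.flush()
            os.fsync(f.fileno())
```

Because a file system is free to place rewritten data elsewhere, running this against a file is a demonstration of the pass structure only, not a guarantee that the original sectors were overwritten.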

(01:10:25):

Now, spinning hard drives do still also [01:10:30] have the problem of inaccessible regions. When defects are detected after the drive is in the user's hands, those defective regions, which contained some user data at the time, are spared out, taken out of service, and are no longer accessible. Although the amount of user data is generally very small, if such spinning drives offer any form of secure erase feature, and many do now, GRC's [01:11:00] utility will follow its two passes of noise writing with the drive's own secure erase command, which is designed to eradicate data even from those spared-out sectors, in order to get to all the final little bits. And one final note before I close this answer. There are also drives that have built-in AES encryption hardware that's [01:11:30] always active. When the drive comes from the factory, it will have an initial default key already established.

(01:11:40):

So it looks like just any regular drive, but this means that any user data that is ever written to such a drive will have first passed through the AES cipher using the drive's built-in key, and [01:12:00] that everything that's ever written to the physical drive media will be encrypted on the drive, whether it's spinning or it's solid state. Both technologies now have this available, and that means that securely eradicating all recoverable traces of the user's data will be as simple as replacing and overwriting that one key with a new randomly generated key. At that point, [01:12:30] nothing that was ever previously written to the drive will ever be recoverable. And again, GRC's utility will know if the drive offers that feature and offer it, and if you wanted belt and suspenders and, I don't know, a parachute, you could still do something that took longer, but there's really no point in doing that.

(01:12:52):

So to finish answering Atli's question: due to the physics of electrostatic storage, [01:13:00] that is, SSDs, there's no need to ever perform more than a single erasure pass over any current solid state storage, if you did not trust the drive's secure erase command. Then, if possible, follow that with a secure erase command if one's available. The drive's manufacturer will typically have a utility that knows how to do that with their own drives. And due to the physics of electromagnetic storage, [01:13:30] it might theoretically be useful to perform a second overwriting pass. And if possible, I would do that with random noise. That's what GRC will do. And again, follow that with a secure erase command if one is available. And finally, if you're lucky enough to have a drive with built-in encryption, just instruct it to forever forget its old key and obtain another one, and the entire drive will then be scrambled with nonsense that nobody [01:14:00] will ever be able to decode.

(01:14:02):

So now everyone knows everything I know about securely erasing data from today's mass storage devices. So huge, because you've debunked, I mean, I've tried to tell people this for years, but you've debunked years of misinformation. People still run defrag disk optimization on their SSDs, things like that. I mean, we carry old habits with us, I guess, is the problem. And thankfully people listen [01:14:30] to you. So good job. Good job. Okay, so I think I have two more pieces and then we'll get to our main topic. Ethan Stone. He said, hi, Steve, apropos of yesterday's show, here's a link to the California Attorney General's 7/31 press release regarding a new investigation of connected vehicles. Okay, so Ethan is referring back to last week's coverage of the Mozilla Foundation's revelations, which [01:15:00] they titled "It's Official: Cars Are the Worst Product Category We Have Ever Reviewed for Privacy."

(01:15:10):

And Leo, that's another one of our main topics that you missed last week. Oh, I read that article. It was incredible. Unbelievable. So you know that they're even saying that they could report on the sex life of the occupants of the car. Yeah, unbelievable. Yeah. So anyway, for what it's worth, the California Privacy [01:15:30] Protection Agency, that's the CPPA, enforcement division, they wrote, today announced a review of data privacy practices by connected vehicle manufacturers and related connected vehicle technologies. These vehicles are embedded with several features including location sharing, web-based entertainment, smartphone integration, and cameras. Data privacy considerations are critical because these vehicles often automatically gather consumers' [01:16:00] locations, personal preferences and details about their daily lives. CPPA's executive director, Ashkan Soltani, said modern vehicles are effectively connected computers on wheels. They're able to collect a wealth of information via built-in apps, sensors, and cameras, which can monitor people both inside and near the vehicle.

(01:16:21):

Our enforcement division is making inquiries into the connected vehicle space to understand how these companies are complying [01:16:30] with California law when they collect and use consumers' data. So I think we can presume that since then Soltani or his people have been informed of Mozilla's connected vehicle privacy research. That ought to make the CPPA's hair curl, or more likely catch on fire. So yeah, Leo, I'm glad you saw that, and yikes. Mozilla has been doing great work, I have to say, [01:17:00] along these lines, these privacy lines. That's really good. Yeah. So I have two pieces of miscellany. The first of two pieces: Leo, this is so cool. Go to grc.sc/2038, obviously 2038. grc.sc/2038.

(01:17:22):

This is courtesy of our listener, Christopher Lasell, or LOEL I guess, [01:17:30] who sent the link to a super cool end-of-the-world-as-we've-known-it Unix 32-bit countdown clock. It is beautifully done. Okay. I just really admire this. It's a simple, very clean JavaScript app that runs in the local browser. Since 32-bit Unix time, similar to a 32-bit IP address, can be broken [01:18:00] down into four 8-bit bytes, this clock has four hands, with the position of each hand representing the current value of its associated byte of 32-bit Unix time. It's brilliant. Since a byte can have 256 hex values ranging from 00 to FF, the clock's face has 256 index marks [01:18:30] numbered from 00 to FF. So collectively, the four hands are able to represent the current 32-bit Unix time, since each hand is a byte of that time.

(01:18:46):

Unfortunately, Unix time is a 32-bit signed value. Whoops. So we only get half of the normal unsigned 32-bit value [01:19:00] range. Did they do that so they could have negative time? Why did they... I just don't think anyone paid attention. They just never thought people would still be using it in 2038. That's obviously... I don't think they even thought about it. I think they just said, okay, 32 bits, that's big and we'll make it an integer. Unfortunately, unless you say unsigned integer, it's a signed integer. Remember though, this is 1970 or '69 when an 8-bit computer was like, wow. [01:19:30] So a 32-bit value must've seemed infinite. Well, and that's why, of course, the internet protocol, IP, only got 32 bits. They were like, come on, 4.3 billion computers. We only have two. We have two right now. Exactly, one on each end of this packet link.

(01:19:55):

So if Unix time had originally been defined as [01:20:00] an unsigned integer, we would have 68 additional years added to the 15 that we still have left. We're almost 90% of the way to the end of time. Correct. Wow. Before the time would wrap around. So anyway, so what's going to happen is when the red hand on this clock gets down to pointing straight down at 80, Unix time goes negative, [01:20:30] because it's not good, Leo. It's not good. Nope. What's going to happen is the simulation that we've all been enjoying so much will crunch in on itself. Oh no. And that's otherwise known as game over.
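
The signed versus unsigned difference can be checked with a short Python sketch. The helper name `to_signed32` is hypothetical; the dates are computed from the 1970 epoch with plain `timedelta` arithmetic rather than any platform time call.

```python
import struct
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
INT32_MAX = 2**31 - 1  # hex 7FFFFFFF, the last non-negative signed value

def to_signed32(t):
    """Reinterpret the low 32 bits of t as a signed 32-bit integer."""
    return struct.unpack("<i", struct.pack("<I", t & 0xFFFFFFFF))[0]

# Last second a signed 32-bit counter can represent.
last_good = EPOCH + timedelta(seconds=INT32_MAX)

# One second later the top byte becomes 80 hex and the value goes negative.
wrapped = to_signed32(INT32_MAX + 1)
after_wrap = EPOCH + timedelta(seconds=wrapped)

# If the same 32 bits had been treated as unsigned, this would be the end.
unsigned_end = EPOCH + timedelta(seconds=2**32 - 1)

print("signed limit:  ", last_good)
print("wrapped value: ", wrapped, "->", after_wrap)
print("unsigned limit:", unsigned_end)
```

The gap between the signed and unsigned limits is 2 to the 31st seconds, roughly the 68 years mentioned above.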

(01:20:49):

Okay. Yeah. But this is a wonderful clock. grc.sc/2038. I commend it highly. It's just beautiful. The green hand [01:21:00] is seconds. Well, yes. And you can see above it the lowest byte of Unix time in hex; right above the clock dial is the last two digits. Yeah, yeah, yeah. Yes. And so that's the one you're able to see moving. And then every time the green one goes around once, the black one, which is the second most significant byte, goes up one, it clicks up by one, and then there's the orange, which is whatever, the next more significant byte, but there ain't no [01:21:30] red.
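
How long each hand takes to go around follows from the byte positions. A rough sketch, assuming the color order discussed here (green is the lowest byte, then black, then orange, with red as the highest byte); the function name is hypothetical.

```python
def revolution_seconds(byte_index):
    """Seconds for one full revolution of the hand for a given byte.

    byte_index 0 is the least significant byte; each hand advances one
    index mark every time the hand below it completes a full circle.
    """
    return 256 ** (byte_index + 1)

# Color-to-byte mapping as read from the discussion (an assumption).
colors = ["green", "black", "orange", "red"]
periods = {name: revolution_seconds(i) for i, name in enumerate(colors)}

for name, seconds in periods.items():
    print(f"{name:>6}: {seconds} seconds per revolution")

# The red hand reaching half way (80 hex) marks the signed 32-bit limit.
print("red half way:", periods["red"] // 2, "seconds after the 1970 epoch")
```

In round numbers that is a little over 4 minutes for green, about 18 hours for black, about 194 days for orange, and about 136 years for red, so red reaches the half way mark after about 68 years.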

(01:21:33):

Actually the orange is bytes. Three to a three is the third, the third most significant. I'm sorry. And then the red is the first two. Yeah. Right. So as soon as red gets halfway around, unfortunately halfway, because it's a signed value, it hits 80. That means the high bit is on in the 32-bit signed value, and that's a negative event. And so [01:22:00] again, that's at that point, it's over. We will solve this. We solved the 1999 problem. We'll solve this. Well, I got sun coming in off of. Look at that. I mean, not only that's apocalyptic. Not only in the UK do we have sun. Yes, maybe. Okay. So speaking of game over, yesterday Elon Musk complained that, to exactly no one's surprise, since [01:22:30] taking the helm at Twitter, advertising revenue has dropped 60%. Apparently everyone except Elon knows exactly why this has occurred.

(01:22:42):

Major brands have alternatives and they don't wish to have their ads appearing next to controversial and often obnoxious tweets posted by the raving lunatics who Elon has deliberately allowed back onto his platform. Elon is all about [01:23:00] the free market. Well, this is the free market in action, Elon. Objectively, he is managing to destroy Twitter, now renamed X, which as an aside, is about the worst branding imaginable. Oh yeah. A brand is bad when everyone is continually forced to refer to it as X, formerly known as Twitter. In other words, the letter X is not sufficiently distinctive to establish [01:23:30] a brand. Well, go ahead, do a Google search for X and tell me what you find. I mean, whoever thought this was a good idea? Elon, by the way, he's named all his children with X as well. So he just loves this letter for some reason.

(01:23:44):

Wow. He's been casting around for more revenue. First, as we know, he decided that he'd make people pay for the use of the blue verified seal. I had no problem whatsoever with losing my years [01:24:00] long verified designation since my entire use of Twitter is to connect with our listeners here, and no one here needs me to be any more verified than I already am. But then a month ago in mid-August, Elon decided that hadn't worked. So he decided to also take away the use of the TweetDeck UI for all non-paying users. That was my sole interface to Twitter. So [01:24:30] losing it was painful. I refused to pay this extortionist any money. For several weeks, I attempted to use the regular Twitter web browser user interface. I hated it in so many ways. I tried to get accustomed to it, but I finally gave up.

(01:24:49):

So I decided two weeks ago that because I was using Twitter for the podcast to broadcast each week's show note link, and now also the picture [01:25:00] of the week, and to communicate with our Twitter-using listeners, I would pay this man $8 per month, $2 per Security Now episode, in return for having access to a user interface that made assembling these show notes, communicating with our listeners and announcing each week's show note link far less painful. So for the past two weeks, I've been back to using TweetDeck [01:25:30] and joy has returned to the valley. But still not satisfied with his revenue stream, and apparently unable to combat the storm of automated bot postings, which by the way, the previous management with a much larger staff was managing to hold at bay, yesterday Elon announced that everyone, yes, everyone, all users of Twitter would soon [01:26:00] need to pay for access to his service.

(01:26:04):

Elon has now stated that he will be moving Twitter X behind a paywall, and I suspect that if he really goes through with it, this will finally spell the end of Twitter, at least the Twitter that we've known. Now, many of our listeners have communicated that they don't use Twitter and never will. Period. I mean like three quarters of the listeners [01:26:30] of this podcast, I'm estimating. It's just tech journalists and crazy people. Well, I have 64,000 followers who are our listeners. I have half a million followers. I don't know if they're listeners though that they were paused. Well, I know. I'm saying that all of this communication that I share is via Twitter, right? So for example, just yesterday I received a DM from a listener who had just joined Twitter to send me that DM. He said, I created an account [01:27:00] so I could send this message to you.

(01:27:01):

See the damage you're doing, Steve, get off of that place. So yes, this is not acceptable and it's apparently about to become even less acceptable. So I wanted to let all of our listeners know that because I value it so highly, I'm arranging for an alternative conduit for our communication. Thank you. Now, this sentiment of "I don't use Twitter and I never will" is [01:27:30] strong among the majority of our listeners. I understand that, especially as time goes by, it's gotten worse and worse and worse. Yes. I also understand though that it's actually less specific than Twitter. The real underlying sentiment is "I don't use social networking and I never will." So Twitter was just the specific instance of social networking that was most prominent at the time. Therefore, [01:28:00] by the way, you may not remember it, but some of our listeners do. I worked for years to get you on Twitter.

(01:28:08):

You were very resistant, but this was a decade ago, but you did not, wasn't on there. You were one of 'em. I was one of our listeners, and many of them have outlasted me in this. So the point is, I don't believe that moving to Mastodon, Discord, Bluesky, Threads, LinkedIn, Tumblr, Instagram, Pebble, or any other... Got to add Pebble. [01:28:30] I don't even know what that one is. Pebble. Pebble is T2. They rebranded to Pebble. So I don't think that's the solution for this particular community, for our listeners. Even the fact that there are so many disjoint and competing platforms now demonstrates the problem, right? The answer, I believe, is good old-fashioned email. We all have it. We all already have it. It works and it does [01:29:00] exactly what we all want. I've previously mentioned that it has been my intention to set up good old tried and true emailing lists.

(01:29:09):

I'll create one for Security Now and invite this podcast's listeners to join it. Each Tuesday before the podcast, I'll send a link to the week's show notes, a brief summary of the podcast and the picture of the week, much as I've been doing on Twitter, and anyone will be free to unsubscribe, resubscribe, subscribe [01:29:30] multiple accounts, do whatever they want. Since I very much want the communication to be both ways, I also need a means for receiving email from our listeners while avoiding the inevitable spam. And I believe I have an innovation that will make that practical too. So I just wanted to make certain that everyone knew in advance of the apparent pending end of Twitter as a practical social media [01:30:00] communications platform for us that I would be arranging for its replacement. And I already know that this will be good news for the majority of our listeners who never have been and never will be participating on Twitter or any other social media platform.

(01:30:18):

So good. I think email is as universal as you can get. Yep, it is. And I think I have an extremely cool way of avoiding incoming spam, which all [01:30:30] of our listeners are going to get a huge kick out of, even get a kick out of using just to try it. So I will have news of that here at some point. Good. And in the meantime, Leo, our last sponsor, and then I'm going to share something I think everyone's going to find really interesting. Are you going to completely abandon Twitter or what's the deal? Are you going to stick around until he starts charging? The only thing that email replacement doesn't create [01:31:00] is a community, and there is, although I don't participate in it, there are a lot of people who are tweeting at @SGgrc and seeing each other's tweets, and so it kind of creates a community there.

(01:31:13):

But I mean, literally the only thing I use it for is to talk to our listeners and to receive DMs and to look at my feed. And I've been complaining. It's like I can't also have a Mastodon presence because I just can't [01:31:30] hold. I'm already, I'm so spread thin. I've got the GRC's NNTP newsgroups, I've got GRC's forums. I would love just to move everybody to email. So that's my plan. Yeah. Well, if you ever change your mind, twit.social is always open for you and you're... Well, I'm already at SGgrc over at InfoSec social. Good. I grabbed that because that's where InfoSec... just to have it, just in case it ever happens. [01:32:00] But I mean, there are so many listeners, Leo, who say, I don't do Twitter. Right? Yeah. Well, those people probably wouldn't do Mastodon either, so that makes sense. They won't go anywhere.

(01:32:10):

Right. Our show today, we will get to the highlight of the show in a bit, but first, our show today brought to you by Drata. Question for you. Is your organization finding it difficult to manually collect evidence and achieve continuous compliance as it grows and scales? It's funny, this requirement [01:32:30] kind of snuck up on us, didn't it? But you got to do it now, right? And so a lot of people are doing it manually. As a leader in cloud compliance software, that's what G2 says, Drata is here to help. They streamline your SOC 2, your ISO 27001, PCI DSS, GDPR, HIPAA, and other compliance frameworks by providing 24-hour continuous control monitoring automatically. You focus on the job, securing [01:33:00] your business and scaling and all of that. Let Drata collect the evidence with a suite of more than 75 integrations.

(01:33:06):

It works with pretty much everything you're using and more. Drata easily integrates through applications like AWS, Azure, GitHub, Okta, Cloudflare. Countless security professionals from companies like Lemonade, Notion, and BambooHR have all said how critical it's been to have Drata as a trusted partner in the compliance process. With Drata, [01:33:30] you can expand your security assurance efforts using the Drata platform that lets you see all of your controls. You can easily map them to compliance frameworks. You'll gain immediate insight into framework overlap, which is of course, in the long run, going to save you money as well as time. Drata's automated dynamic policy templates support companies new to compliance, using integrated security awareness training programs and automated reminders to ensure smooth employee onboarding. They're the only [01:34:00] player in the industry to build on a private database architecture. That means your data can never be accessed by anyone outside your organization.

(01:34:09):

I think that's pretty important. Customers will get a team of compliance experts working with you, working for you, including a designated customer success manager. And oh, I love this. Drata has a team of former auditors who've conducted more than 500 audits between them. Your Drata team keeps you on track to ensure there are no surprises, no barriers. [01:34:30] Plus Drata's pre-audit calls really help prepare you for when those audits begin for real. Drata's audit hub is a solution to faster, more efficient audits. Save hours of back and forth communication, never misplace crucial evidence, share documentation easily. Auditors love this because all the interactions and data gathering occur in Drata. So between you and your auditor, everything is there so you don't have to switch between different tools, different correspondence [01:35:00] strategies. Auditors, it makes their life easier too, and that's probably good for you. With Drata's risk management solution, you can manage end-to-end risk assessment and treatment workflows, flag risks, score them, decide whether to accept, mitigate, transfer or avoid them. Drata maps appropriate controls to risks, which simplifies risk management and automates the process.

(01:35:21):