Security Now 1062 transcript

Please be advised this transcript is AI-generated and may not be word for word. Time codes refer to the approximate times in the ad-supported version of the show.

Leo Laporte [00:00:00]:

It's time for Security Now. Steve Gibson is here. He's worried. He's worried about the future of CISA in the United States. He's worried about Ireland's new lawful interception law that makes spyware legal. He's worried about AI-generated malware. Yes, it's here. All that and more coming up next on Security Now.

TWiT.tv [00:00:23]:

Podcasts you love from people you trust.

Leo Laporte [00:00:28]:

This is TWiT. This is Security Now with Steve Gibson, Episode 1062, recorded Tuesday, January 27, 2026: VoidLink, AI-Generated Malware. It's time for Security Now, the show where we cover the latest security news, the computer information that you need to know, with this guy right here, the king of the hill when it comes to security, Mr. Steve Gibson. Hi, Steve.

Steve Gibson [00:01:00]:

Leo, great to be with you again for another Tuesday, the last one of January. So say goodbye to January, everybody. I don't know where it went.

Leo Laporte [00:01:09]:

But be happy to let it go.

Steve Gibson [00:01:10]:

I'm not gonna say... for many, many people it was a bad January, from many perspectives, and certainly cold also. I guess the weather has just gone crazy too.

Leo Laporte [00:01:21]:

Yeah, really freezing cold. Well, so it's only 52 degrees here in California. It's just terrible.

Steve Gibson [00:01:28]:

I don't know what to do.

Leo Laporte [00:01:31]:

All right, what are we covering on the show today?

Steve Gibson [00:01:33]:

Check Point Research. I think they call themselves Check Point Research; Check Point is how we know them. They took a close look at what they recently discovered as the first very impressive and very concerning purely AI-generated malware. Because the developer made the mistake (so not a rocket scientist here, or a security guy; kind of an anti-security guy, actually) of leaving a directory exposed, one of his server's directories was exposed, they were able to get literally an inside look at the production of an AI-generated malware. And there are some important takeaways from that, which we're going to get to. But first we're going to look at, unfortunately, CISA's uncertain future, which I kind of thought had been resolved.

Steve Gibson [00:02:48]:

But no, our old friend Rand Paul has stuck his finger in this and is gonna maybe cause some trouble. We'll see. We've got a worrisome new law which has been passed in Ireland, which we need to take a look at, because for a while the working title I actually had for the podcast, Leo, was The State versus Encryption. And we're going to end up with a couple of stories along those lines, which is why the podcast carried that working title until I saw that we really need to talk about malware and AI. There is a group in the EU, a digital rights organization, pushing back on some of what seems to be happening. So we're going to take a look at that. I never had a chance to hear what you guys and Alex Stamos were talking about on Sunday relative to Microsoft's acknowledgment that it has turned over some encryption keys to the FBI. We're going to discuss that.

Steve Gibson [00:03:58]:

And again, I didn't hear what happened on Sunday, but I have maybe a surprising takeaway for our listeners relative to Microsoft. I try to be very fair. I know I'm very hard on them, like, most of the time. In this case, I take a different position.

Leo Laporte [00:04:16]:

Yeah, I think you and Alex may actually be on the same page. I'll let you know what he thought about it when we get to it.

Steve Gibson [00:04:22]:

Also, our old friend Alex Niehaus had some really useful and insightful feedback about AI enterprise usage and how it's fraught with some dangers. I want to share that with our listeners. Another listener, Gavin, confesses that he deliberately put a database on the Internet and explains why. Oh, and there are some worries, Leo, about the need to rewind podcasts and how there may be a massive backlog that is growing, which we need to deal with. And then we're going to take a look at the emergence of... Remember our DVD rewinder from last week? Oh, that's right.

Leo Laporte [00:05:11]:

No, people have been leaving this show at the end and not rewinding it. I think this is a massive problem.

Steve Gibson [00:05:18]:

It's really. You're not thinking about the next person. Right. You're just saying, thank you very much, I took the last napkin and F you, I don't care.

Leo Laporte [00:05:29]:

So I always do that. Or Lisa yells at me because I'll leave this much milk in the carton, you know, put it back in the fridge. And it's very disappointing to her.

Steve Gibson [00:05:38]:

And Leo, let's not even get into being married. And toilet rolls.

Leo Laporte [00:05:42]:

Oh, yes. Oh, yeah. Good. Oh, yeah.

Steve Gibson [00:05:45]:

And does it come off the front or off the back? But anyway, that's a whole other topic. We do have a picture of the week, which we may get to someday. Do we have four ads? I didn't... Or five? I didn't...

Leo Laporte [00:05:55]:

We have, I believe, three, which is for this show, a dearth, a paucity of ads. But we will. We will. I will pause. And sometimes people might have noticed this. An ad will sneak in after the fact.

Steve Gibson [00:06:09]:

You are a cunning linguist. That's all I have to say.

Leo Laporte [00:06:12]:

So as far as I know I will be reading three ads. Others may be inserted against your.

Steve Gibson [00:06:18]:

Okay, so we're going to do our five pauses.

Leo Laporte [00:06:21]:

Yes, as always.

Steve Gibson [00:06:21]:

Pause that refreshes my. My whistle.

Leo Laporte [00:06:25]:

Always want to keep that whistle wetted. I will pause now to tell you about a sponsor that probably everybody should know about, because this is exactly right up your alley, Steve. In fact, it's part of your presentation when we go to Florida for Zero Trust World. The problem being, if you are a company, that your vulnerability sits right there in that seat, right there at the desk.

Steve Gibson [00:06:54]:

Yep.

Leo Laporte [00:06:55]:

Our show today, brought to you by Hoxhunt. As a security leader, you know your job: you get paid to protect your company against cyber attacks, right? But that is getting harder and harder. Not only are there more cyber attacks than ever, but these phishing texts and emails and messages that are generated with AI are really good. Very deceptive. As I mentioned, I got phished a couple of weeks ago. Here's the problem. You might be using one of those legacy one-size-fits-all awareness programs. They really don't stand a chance in today's modern world.

Leo Laporte [00:07:30]:

They send at most 4 generic trainings a year. Most employees ignore them. And you know, in some ways worse, when somebody actually clicks, you know, the fake phishing email, then they're forced into embarrassing training programs that feel like punishment. And we know that's no way to learn. If you are hating the training, you're.

Steve Gibson [00:07:54]:

Not going to learn anything.

Leo Laporte [00:07:55]:

That's why more and more organizations are trying Hoxhunt. This sounds strange, but Hoxhunt makes it fun. Hoxhunt goes beyond security awareness; it actually changes behaviors. And they do it in the simplest of ways, by rewarding good clicks and coaching away the bad. Whenever an employee suspects an email might be a scam, Hoxhunt will tell them instantly, providing a dopamine rush. It gets your people to click, learn, and protect your company. Like gold star stickers, confetti. As an admin, Hoxhunt makes it easy to automatically deliver phishing simulations as often as you want, in every form.

Leo Laporte [00:08:35]:

You want email, Slack, Teams, whatever. And you can use AI to mimic the latest real-world attacks to make your simulations as good as the real phishing emails. Simulations can even be personalized to an employee based on department, location, and more. And these instant micro-trainings are so much better than the kind of, like, two-hour Flash thing that you've had to sit through. These micro-trainings are quick. They solidify understanding. They drive lasting, safe behaviors. You can trigger gamified security awareness training that awards employees with stars and badges, boosting completion rates and ensuring compliance.

Leo Laporte [00:09:13]:

Because it's fun. You'll choose from a huge library of customizable training packages, or you can even generate your own with AI. Hoxhunt, they've got everything you need to run effective security training on one platform, meaning it's easy to measurably reduce your human cyber risk at scale. But you don't have to take my word for it. Over 3,000 user reviews on G2 make Hoxhunt the top-rated security training platform for the enterprise, including easiest to use and best results. Also recognized as Customers' Choice by Gartner. Thousands of companies love it. Qualcomm, AES, Nokia all use Hoxhunt to train millions of employees all over the globe. Visit hoxhunt.com/securitynow today to learn why modern, secure companies are making the switch to Hoxhunt.

Leo Laporte [00:10:01]:

That's hoxhunt.com/securitynow. Hoxhunt, like fox hunt, but with an H. So it's h-o-x-h-u-n-t.com/securitynow. Instead of hunting the fox, you're hunting the wily hacker. hoxhunt.com/securitynow. We thank them so much for their support of Steve and the efforts he makes every Tuesday for us. So I've got a picture of the week and we...

Steve Gibson [00:10:28]:

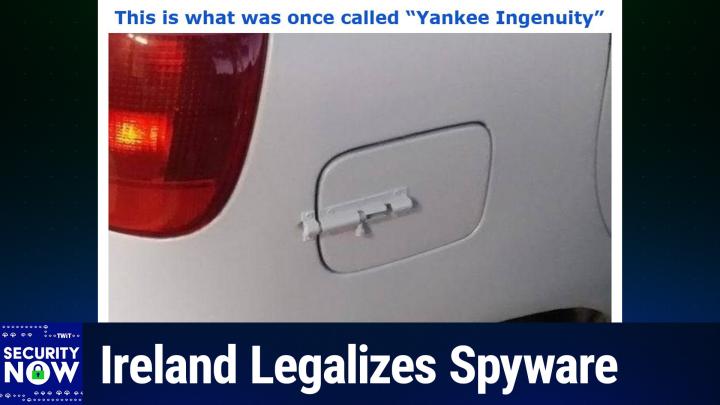

It's another popular one. It generated a lot of feedback as these have been recently. I gave this in the title. This is what was once called Yankee Ingenuity.

Leo Laporte [00:10:39]:

Okay, let me put it up on the big screen and we can look at it together here. I'm going to scroll up. Yankee Ingenuity. Okay, you want to describe that.

Steve Gibson [00:10:55]:

So we don't really know what the backstory is, what's going on here, but when we were growing up, we probably encountered, like, garden gates where you had a... it was a slider that slid into a mating capture retainer.

Leo Laporte [00:11:16]:

I'm sure there's a name if you go to the hardware store. It's some sort of slide bolt or something.

Steve Gibson [00:11:21]:

Yeah. And so you would lift the arm up, slide it over, then put it back down, and gravity would keep it rotated so that it would stay either locked open or locked closed. Anyway, it's meant for some barn, right? A barn door kind of thing. Well, apparently somebody's having some sort of problem with the gas cap cover of their car.

Leo Laporte [00:11:47]:

It's been popping open.

Steve Gibson [00:11:49]:

And what really impresses me, Leo, is they did not leave it looking like, you know, silver chrome. Oh, no, it's been body painted to match the car perfectly. So, I mean, if you were walking by it, you might miss the fact they'd added a barn door closing lock to the outside of their gas cap cover. So, one of our insightful listeners who received it... I got the email out actually early this week. They went out Sunday evening, although Microsoft took it upon themselves to decide that GRC was not trustworthy. So 1,547 of the nearly 20,000 (it's 19,800 and something), 1,547 were all blocked if they went to outlook.com or hotmail.com. When I saw that that had happened, I was able to collect them, and they went out with no trouble at all last night. So it's like, okay, Microsoft, I guess maybe Sunday freaked you out.

Steve Gibson [00:12:58]:

I don't know, because normally I do it on Monday or Tuesday. Anyway, no, I mean, it just, you know, had a spasm, and so...

Leo Laporte [00:13:07]:

It's never going to stop, Steve. There'll always be a little bit of this here or there, I'm sure. I'm convinced. It's just, you know.

Steve Gibson [00:13:13]:

Yeah, well, the spammers are trying to look legitimate, and so there's a value judgment that's having to be made. So in any event, this listener said, well, you know what that is? I thought, no. They said, that's two-factor authentication. On the inside, you have to pull the trigger to release the cap, and then you have to come around outside and use the second factor to slide the bolt over in order to open the cap. I suspect what most of our listeners do, that the automatic cap closer holder broke. And so, you know, the cover was flapping in the breeze and they said, hey, you know, we got it. We used to have a barn, but we don't have it. But we do have the lock that used to keep...

Leo Laporte [00:14:01]:

They're probably patting themselves on the back, because initially they used duct tape to hold it closed and then they decided to really upgrade it with the.

Steve Gibson [00:14:09]:

Slide, which would explain the need to repaint the car in the same color. Yes, that would make sense. Okay. So I've said for years that I have been pleasantly surprised by the success and effectiveness of CISA. You know, it's been an amazing success. The Cybersecurity and Infrastructure Security Agency, awkwardly named, but boy, are they doing a great job. Since its creation 11 years ago, 2015, CISA has been a huge win for our nation's cybersecurity.

Steve Gibson [00:14:49]:

You know, my default belief is that government has a difficult time getting out of its own way, way more often than not. So CISA was a welcome and actually well-needed exception to that. As this podcast has covered since its inception, they've been able to mandate that government agencies pay needed attention to many specific critical security problems that would otherwise have fallen through the cracks. You know, the government agencies have better things to do than, oh, well, we really don't want to do an update. We'll have to have our network down for, you know, blah, blah, blah, whatever. And besides, nothing's happened yet, right? You know, and CISA has also been empowered to set deadlines which had to be honored. Their creation of KEV, the Known Exploited Vulnerabilities catalog, was a brilliant means of focusing those always limited and readily, you know, distracted bureaucratic resources where they were needed. And yeah, it's true, 125 security things happen on the second Tuesday of the month.

Steve Gibson [00:16:05]:

But it turns out that two of those are really critical. And CISA has been instrumental in saying, get to the other things when you can, but do these now, because these really matter. So that's all been good news. The bad news is that CISA was not created to be a permanent entity. Sadly, the Constitution of the United States is completely silent regarding the need for a permanent cybersecurity watchdog agency within the federal government. I guess our forefathers were unable to foresee that. This means that politicians created CISA, and politicians are required to keep CISA funded and authorized. Last week The Record updated us on the state of CISA's continuation, writing: Congressional leaders on Tuesday.

Steve Gibson [00:17:01]:

Meaning last Tuesday, released a compromise government funding bill that would once again temporarily, temporarily, extend the life of two key cybersecurity laws. The bipartisan legislation would reauthorize the 2015 Cybersecurity and Infrastructure Security Act, CISA, or agency, and the State and Local Cybersecurity Grant Program, pushing it through the end of September. The extension in the $1.2 trillion (that's the entire funding bill, $1.2 trillion) is the latest short-term solution in a months-long saga for CISA 2015, which provides (we talked about this just last week) crucial liability protections to encourage private companies to share digital threat information with the federal government. And as we've said, it's like they're not going to do it unless they have liability protection. The C-suite executives made that very clear. The Record says both statutes received widespread support from the cybersecurity community and the Trump administration prior to their expiration last year. They received temporary reprieves in the continuing resolution that reopened the government in November.

Steve Gibson [00:18:31]:

The House did approve a bill to extend the grants effort, but there's been no action on the Senate side. Meanwhile, several proposals have been introduced to reauthorize the 2015 CISA long term. Please let that happen. Like, let's not make this another football that we keep kicking. We need this, and not having it has already stalled things; there's now a gap that needs to be filled, because industry went silent as soon as they lost their liability protections. Anyway, The Record wrote: The House Homeland Security Committee last year passed legislation to renew it for a decade. Thank you. With minor updates, but it hasn't been scheduled for a floor vote. A bipartisan Senate duo introduced a bill that would extend the law for 10 years.

Steve Gibson [00:19:26]:

Yes, and provide retroactive protections for companies that shared cyber threat data even after the law lapsed. But as I mentioned, The Record writes, Senator Rand Paul, chair of the Senate Homeland Security Committee, has drafted a bill that would trash the legal protection outlined in the original statute. Well, thanks. They wrote: House leaders plan to hold a vote later in the week on the spending deal, which boosts defense funding to over 839 billion, with a B, dollars. Lawmakers have 10 days to clear the package for President Donald Trump's signature before federal funding is set to lapse for the programs it covers. With the Senate in recess this week, meaning last week, the upper chamber will need to approve the legislation when they return next week, meaning this week, if Congress is going to head off another funding lapse and a partial government shutdown. And as a total aside, there's a lot of conversation now that it looks like we may be going into another shutdown over DHS and the reauthorization and increase in other aspects of the budget.

Steve Gibson [00:20:49]:

So we'll see. And I don't know what's up with Senator Rand Paul. He's always something of a wild card and pretty much a pain in everyone's butt. But after first being elected to the Senate in 2010, he's been reelected twice since, every six years. So he seems to be what his state of Kentucky wants in a senator. Of course, he shares that position with Mitch McConnell. But in this case it will be very bad if he gets his way. Again, as we noted last week, the executives of the nation's private infrastructure agencies consider their vulnerability and breach disclosure protections to be a critical and crucial feature of this legislation.

Steve Gibson [00:21:34]:

So much so that in the bills that are being talked about, protections are being made retroactive, because they've said, we need to have that. You asked us to keep talking to you after CISA lapsed, and we did, some of us. So you need to protect us from that. Anyway, no one can make these executives disclose information which is privately held if they choose not to. So if the government wants to know what's going on, as it should, then protecting those who are voluntarily disclosing is the entire point of this aspect of the reauthorization. You know, we should know in another week or two whether the politicians have now screwed up what had been a surprisingly well designed and well working system.

Steve Gibson [00:22:31]:

As I said, you know, when we talked about this 10 years ago on the podcast, when it happened, it's like, oh, great, you know, another Homeland Security agency. Well, then we got surprised, because it was so effective and so useful. Of course, at the time, it was really well managed. It was Chris Krebs who was... Was he the original? I don't know.

Leo Laporte [00:22:56]:

He was the one who was fired because he said the election was secure.

Steve Gibson [00:23:01]:

Right. They looked very closely at the 2020 election. Yeah.

Leo Laporte [00:23:05]:

Yeah.

Steve Gibson [00:23:06]:

So I would imagine he was, because if that was 2020 and it was created in 2015, then it would have only been five years old at that point.

Leo Laporte [00:23:14]:

Yeah, we talked... Okay, so Alex Stamos's partner, of course, for a long time was Chris Krebs. Yeah.

Steve Gibson [00:23:18]:

Right. Okay. So one of the reasons that the working title of the podcast, until I got to the news about AI and malware, was The State versus Encryption is Ireland's new lawful... I just love this word. There's this phrase, "lawful interception" law. Right.

Steve Gibson [00:23:43]:

They can make whatever laws they want. So the first half of this next piece is the news that Ireland has just passed a new lawful interception law granting the government significant new powers. The short blurb that carried that news just said, and this is all I first saw: The Irish government has passed a new lawful interception law. The new legislation grants law enforcement and intelligence agencies the power to surveil any type of modern communications channel.

Steve Gibson [00:24:25]:

It also grants the agencies the right to use covert software for their operations, such as spyware. The new law will also. Huh. It's not. It's not. You don't have to be ashamed or bashful or shy or pretend you're not doing it anymore. Now it's a law. Now it's legal.

Steve Gibson [00:24:46]:

The new law, this little blurb said, will also require communications service providers to work closely and aid any government operation. Okay, so add this to the other recent news of pending and enacted legislation. You know, we are clearly witnessing... Remember we talked last week about Germany. Basically, we can't pronounce the name of the agency because it's got 25 letters in its name, and mostly consonants, but they're doing the same thing. So we're witnessing an accelerating trend in governments legislating themselves sweeping rights to intercept, monitor, and eavesdrop upon pretty much anything they wish. Okay, so this week we have similar legislation to what we talked about in Germany last week, which has passed.

Steve Gibson [00:25:43]:

It was pending in Germany. Ireland passed this. So I scanned last Tuesday's press release from the Irish government, from which I'm going to excerpt two pieces, just two notable pieces. The first point talks about the clear need for an update to their very old law. I don't think anybody would argue with that, because the original law that's being updated by this legislation is from 1993 and, you know, needs an update. That's noncontroversial. But point number two says that the new law includes, quote, a clear statement of the general legal principle that lawful interception powers needed to address serious crime and security threats are applicable to all forms of communications. So to call this sweeping...

Steve Gibson [00:26:42]:

You know, I don't know, I mean, that's the right word for it. Right. They specifically write, the Minister proposes (and the language here is "proposes," but this law passed, just to be clear), the Minister proposes an updated legal framework which is flexible and includes comprehensive principles, policies and definitions to allow for lawful interception powers to be applied to any digital devices or services which can send or receive a communications message, for example, the Internet of Things and email and digital messaging devices and services. The legislation will provide for a clear statement (which is to say, the legislation does provide a clear statement) of general principle that lawful interception powers apply to all forms of communications, whether encrypted or not, and can be used to obtain either content data (they say, the substance of a communication) or related metadata (data that provide information about a communication, but not its content, such as phone call or email time, date, sender, receiver of a communication, the geolocation of an electronic service, or source and destination IP addresses). But they're specifically also saying "and the content." And it says the legislation will also apply to parcel delivery services.

Steve Gibson [00:28:16]:

The Minister's view, they write, is that effective lawful interception powers can be accompanied by the necessary privacy, encryption and digital security safeguards. Right. Because they're expert in this, Leo. These legislators, they know their crypto. It says: In June 2025, the EU Commission published a, quote, roadmap for lawful and effective access to data for law enforcement, unquote, which stated that terrorism, organized crime, online fraud, drug trafficking, child sexual abuse (we knew we were going to get to the kids), online

Steve Gibson [00:29:00]:

Sexual extortion, ransomware and many other crimes all leave digital traces. Around 85% of criminal investigations now rely on electronic evidence. Requests for data addressed to service providers tripled between 2017 and 2022, and the need for these data is constantly increasing. I love the fact that they treat the word data as plural. I always do that. Right. I know, it's just.

Steve Gibson [00:29:31]:

It's so nice. It's refreshing to see it. But it always surprises me because I don't remember to do it. They said the Commission paper includes proposals to deliver a technology roadmap on encryption issues with expert input, and emphasizes the need to reconcile technology and lawful access concerns (oh, gee, you think?) through industry standardization activities. This EU initiative complements the Minister's proposed approach to reforming the law on interception in Ireland, and it will inform the development of the General Scheme, and that's capital G, capital S.

Steve Gibson [00:30:14]:

So the General Scheme is something that they're going to inform and develop. So, okay, I have, of course, much to say about this, but I want to first share the press release's fourth point regarding, quote, the inclusion of a new legal basis for the use of covert surveillance software as an alternative means of lawful interception to gain access to electronic devices and networks for the investigation of serious crime and threats to the security of the State. They write: The Minister also proposes to provide a legal basis for the use of covert surveillance software as an alternative means for lawful interception to gain access to electronic devices and networks for the investigation of serious crime and threats to the security of the State. This is used legally in other jurisdictions for a variety of purposes when necessary, such as gaining access to some or all of the data on an electronic device or network, covert recording of communications made using a device, or disrupting the functioning of a personal or shared IT network being used for unlawful purposes. The Minister proposes to take into account a 2024 report from the European Commission for Democracy through Law to the Council of Europe (the Venice Commission) on this subject, which was titled, quote, Report on a rule of law and human rights compliant regulation of spyware. So in other words, conduct that has historically been denied by governments which were doing it anyway, and which no state agency would admit to using or doing, is now being ratified into law and made explicitly legal. I believe that any objective observer who's witnessed the earlier saber rattling and, more recently, both the pending and enacted legislation that governments seem determined to pursue would have to conclude that we are currently in an environment of slowly eroding privacy protections.

Steve Gibson [00:32:46]:

Encryption happened, right? I mean, the math happened. And along with everyone else who appreciated knowing that their communications were private (you know, it was just kind of like, okay, thanks, that's nice to have), bad guys started using it, and they soon discovered that it protected them from law enforcement. While encryption was not created by any means to protect criminals, the privacy it affords everyone doesn't know or care whether you're doing good or breaking the law. Your communications are encrypted. So when bad guys began hiding behind the same encryption that everyone else was using, because it was there, law enforcement quite reasonably asked providers for the contents of the bad guys' encrypted messages. And they were told that the system had been deliberately designed to provide absolute communications content privacy for all of its users, regardless of their use, and that we, the providers of this technology, were unable to comply with lawful court orders to turn over their users' data.

Steve Gibson [00:34:10]:

They said they did not have that data and they had no means of obtaining it. Now, that stumped the world's governments for a few cycles, until someone had the bright idea to simply require the world to work differently. They said, we all agree that citizens have fundamental rights to privacy, except in cases where that privacy is being abused and is not in the public interest. So we've decided that we will determine when and where people should have privacy. And since we're a nation of laws, we're going to make it legal to do whatever we need to in order to obtain the privacy-violating access to our citizens' communications that we've determined we need to have. Always, of course, in support of the greater good. And besides, think of the children. And, you know, that same objective observer that I talked about before would see that we're currently in a period of transition. The truth of encryption caught the world's governments off guard.

Steve Gibson [00:35:21]:

They've all seen the same movies that we have. Those movies, think about it, uniformly depict both hackers and intelligence services cracking the encryption whenever they were asked to, whenever it was really necessary to do so. So everyone knows that really good encryption just takes somewhat longer to crack. Right? That's what the movies all showed us. The politicians just assumed that was true. Why wouldn't they? They believed that was the way encryption really worked, right up until they encountered the truth of today's encryption. They didn't really understand that modern encryption is absolutely unbreakable.

Steve Gibson [00:36:09]:

That's what the industry created, period. So what we've seen is that it took them several rounds of stumbling and failed legislation, and trying to figure out how to ask for what they wanted, to finally figure out that what's actually needed is for them to outlaw any encryption that no one can break. They want the encryption we have in the movies, and they're going to keep writing and rewriting legislation until they get it. So the formal legislation of the use of spyware is just the next step along that path. Now they're saying we're going to make our use of spyware legal. That will be lawful if we decide that we need to deploy it in order to obtain access to encrypted communications. Another step down that path.

Steve Gibson [00:37:08]:

So we're not yet where we're going to end up. But again, our objective observer of the last several years would have to conclude that the world's governments, their law enforcement and intelligence agencies will not be satisfied until it's possible to obtain access to the communications, probably of anyone they desire.
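[Editor's note: Steve's remark above, that modern encryption is unbreakable rather than merely slow to crack, can be made concrete with some back-of-the-envelope arithmetic. The following is a minimal sketch, not anything from the episode. The 128-bit key size and the rate of one trillion guesses per second are illustrative assumptions, the function name is hypothetical, and the calculation covers only exhaustive key search, not implementation flaws or endpoint compromise such as spyware.]

```python
# Rough estimate of how long an exhaustive key search would take.
# ASSUMPTIONS (illustrative, not from the episode): a 128-bit symmetric key
# and an attacker able to test 10**12 (one trillion) keys per second.
KEY_BITS = 128
GUESSES_PER_SECOND = 10**12
SECONDS_PER_YEAR = 365 * 24 * 60 * 60


def expected_years_to_brute_force(key_bits: int, guesses_per_second: int) -> float:
    """Return the expected number of years to find a key by trying every value.

    On average the correct key turns up after searching half the keyspace.
    """
    keyspace = 2 ** key_bits
    expected_guesses = keyspace // 2
    return expected_guesses / guesses_per_second / SECONDS_PER_YEAR


years = expected_years_to_brute_force(KEY_BITS, GUESSES_PER_SECOND)
print(f"Expected search time: {years:.2e} years")
```

[Under these assumptions the expected search time is on the order of billions of billions of years, and each additional key bit doubles it. That is the gap between the movie version of encryption and the real thing, and it is why the legislation discussed here targets the endpoints rather than the math.]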

Leo Laporte [00:37:29]:

It strikes me this is a pretty savvy move on the part of the Irish government because I think that what they're recognizing is, well, we can't demand clear text from Signal, WhatsApp and all these companies. So the next step is, and we've talked about this before, to go pre encryption, to go where the messages are in plain text, that is on your device, pre encryption. And to do that, they need the spyware. So I think this is the next stage. And this is saying, all right, I get it, Signal is going to withdraw from Ireland if we make it illegal to have strong encryption. So, oh, I got it. We'll just get on everybody's phone. This is the next step.

Leo Laporte [00:38:18]:

After this is what Russia and China do, which is mandate that you put a special app on the phone so that we can see everything that's going on. Yeah, but. But that's. Now the interesting thing is they may have to go to that point, because I think they may be. Here's where they're technically less literate. They may overestimate the ability of spyware to do this. Right. But these kinds of exploits are not easy to get.

Leo Laporte [00:38:46]:

No, they're generally one time use because you know Apple will patch it the minute they figure it out.

Steve Gibson [00:38:52]:

Exactly. As soon as anyone finds it, they're able to say whoops, you know, so.

Leo Laporte [00:38:57]:

This may be where they're, you know, before they thought, oh, we can break encryption. Now maybe they're starting to realize we can't. Oh, but spyware. But maybe they don't really understand that it's not as trivial as you might think. I mean, I guess the NSA had its tools. That's what we learned from Edward Snowden.

Steve Gibson [00:39:14]:

And you're right, it, it may be that where we end up where, where they for example the EU ends up is requiring an app on everyone's phone.

Leo Laporte [00:39:24]:

I think it's the only. It's the ultimate. Right?

Steve Gibson [00:39:26]:

Yep.

Leo Laporte [00:39:27]:

Everybody has to run this app and then they can see everything. And it's all pre encryption. So signal. You can still use signal.

Steve Gibson [00:39:36]:

Well, yes, it's bad PIE. You know, Pre-Internet Encryption was a great thing once.

Leo Laporte [00:39:42]:

Now Internet decryption, it's pit.

Steve Gibson [00:39:44]:

Yeah, yeah.

Leo Laporte [00:39:49]:

But the other, the other good side of this is as you say, the math happened and it's all, it's easy. It's well known how to do encryption now. So it's.

Steve Gibson [00:40:00]:

The counter argument is when it's illegal, only the criminals will use it.

Leo Laporte [00:40:04]:

Right. Or people who are motivated and, and, or smart enough to figure out how to do it without.

Steve Gibson [00:40:11]:

Well, it again, it. I mean they're going to outlaw the. They're in. They're going to outlaw their inability to spy.

Leo Laporte [00:40:18]:

Right.

Steve Gibson [00:40:19]:

In which case you will be a criminal even if you're just encrypting to talk to your mom. If they want to see what you said to mom and you're unwilling to give them the keys, then you're guilty of that.

Leo Laporte [00:40:31]:

You know, I said this in an interview 25, maybe no 30 years ago that ultimately hackers might be the freedom fighters of the 21st century. That the people who understand how to get around these things may actually be the people who are fighting for our freedom. Yeah.

Steve Gibson [00:40:47]:

Neo.

Leo Laporte [00:40:48]:

Neo, yeah, right. Neo. This is the point of the Matrix. All right. Do you want to refresh? Do you want to hydrate? Okay, he's nodding, folks. You can't hear it, but his head is vigorously bobbing up and down and he has a gigantic mug of joe in his hand. Our show today actually has a brand new sponsor I want to tell you about.

Leo Laporte [00:41:14]:

I'm very excited to welcome Trusted Tech to Security Now. This is something you may need. If you're using Microsoft 365, there's a pretty good chance you're paying for licenses you don't need, or it can go the other way: you might be missing ones you do. And let's not forget that coming in July, Microsoft is going to implement a significant price increase for M365. And with it there'll be a lot of nuance, a lot of subtleties. Trusted Tech helps businesses of all sizes get the most out of their Microsoft investment by ensuring that their M365 environment is well supported and aligned with how the business actually operates. So you're not spending too little, you're not spending too much.

Leo Laporte [00:41:58]:

It's just right. But that's something it's nice to have some help with. Microsoft licensing is very complicated. The options can vary widely. But Trusted Tech, they're the experts. Their team helps organizations understand what they have, what they need, and how to get the most out of what they're paying for. If you want to make sure you're getting M365 done right, trusted Tech can help you. They are right now.

Leo Laporte [00:42:23]:

You can go there and get a free Microsoft 365 licensing consultation. It's very straightforward, won't take much time. They're really good at this. These are the pros. Visit trustedtech.team/SecurityNow365, and don't forget that last part. That's trustedtech.team/SecurityNow365, where you get a clear, data-backed view of your current licenses, optimization opportunities and next steps. You know, if you're wondering, Kevin Turner, former Microsoft COO, has very good things to say about Trusted Tech. He vouches for them.

Leo Laporte [00:43:02]:

He says Trusted tech has an incredible customer reputation and you have to earn that every single day. The relentless focus you guys have on taking care of customers gives them the value and differentiates you in the marketplace. That's high praise from a guy who knows. Trusted Tech also can elevate the Microsoft support experience with its certified support services. You can ask them about that. And by the way, some of the biggest and best use trusted tech for their certified support services. Enterprises like NASA, Netflix, Neuralink, Apple, Intel, Google and Lockheed Martin. Okay, you couldn't get a better list of Clientele and they're saving 32 to 52% compared to the average Microsoft Unified support agreement.

Leo Laporte [00:43:51]:

Great support for less. Whether you're looking to fine tune your Microsoft 365 licensing or improve the way your organization receives proactive Microsoft support, or both, Trusted Tech offers free consultations to help you understand your options. This is a name you need to know. Go to trustedtech.team/SecurityNow365. You could submit a form to get in contact with Trusted Tech's Microsoft licensing engineers, the guys who know. trustedtech.team/SecurityNow365.

Leo Laporte [00:44:26]:

Use that address. Make sure you do, because that way they'll know you saw it here. trustedtech.team/SecurityNow365. And we thank them for believing in us and supporting the mission of Steve Gibson and Security Now. Thank you, Trusted Tech. Back to you, Steve.

Steve Gibson [00:44:46]:

So the day after Ireland proudly enumerated the various features of its newly passed expansive legislation, EDRi, the European Digital Rights organization, perhaps in response to Ireland's announcement, posted under the headline "EDRi launches new resource to document abuses and support a full ban on spyware in Europe." And you know, okay, good luck. It seems that the European Union has their own equivalent of the EFF and it's EDRi. Their posted piece begins by stating the context: Europe's spyware crisis remains unresolved. And they write, spyware remains one of the most serious threats to fundamental rights, democracy and civic space in Europe. And of course, Leo, as you just pointed out, spyware is where Europe wants to go because they want the access that they can't get. EDRi said, over the past years, repeated investigations have shown that at least 14 EU member states have deployed spyware against journalists, human rights defenders, lawyers, activists, political opponents and others. Notice, we're not saying criminals, we're saying people we don't like for one reason or another.

Steve Gibson [00:46:18]:

We're going to just spy on them. So who imagines that that won't accelerate hugely if spyware is made legal? Anyway, that's me. EDRi said, these cases have revealed the reality of an opaque, dangerous market that thrives on exploiting vulnerabilities and endangering us, and the states' reluctance to provide any accountability or justice for victims. Right, so they're going to legalize what they've been doing. Despite the findings of the European Parliament's PEGA inquiry committee in 2023 and the push from human rights organizations, the European Commission has so far refused to put forward binding legislation to prohibit spyware. Not only that, it has done nothing. Right now, no EU-wide red lines exist against the use of spyware.

Steve Gibson [00:47:15]:

Well, right, 14 states have done it and they want to be able to keep doing it. So they wrote, this means that victims lack effective remedies, authorities face no scrutiny, and commercial spyware vendors continue to operate with near total impunity, enriching themselves by violating human rights and even benefiting from European public funding. Because after all, this is taxpayer dollars, you know, and this spyware's not cheap. At the same time, they said, this political inaction is increasingly being challenged. Investigative journalists, researchers and civil society organizations have continued to expose spyware's human impacts and the opaque markets behind its development and deployment. A broad coalition of civil society and journalism organizations has openly called on EU institutions to end their inaction and to adopt a full ban on commercial spyware. Adding to this push, EDRi has also adopted a comprehensive position paper calling for a full ban on spyware in the European Union as the only possible path forward from a human rights perspective. So, you know, basically the battle is escalating and it's being made more visible and more public. We've got EU states wanting to legalize their use of spyware, and the human rights, privacy-protecting organization saying, let's make it very clear that this is not legal.

Steve Gibson [00:48:55]:

They said, our collective refusal to accept the normalization of the use of spyware is also visible inside the European Parliament. On 21st of January this year in Strasbourg, an informal interest group against spyware was launched, bringing together MEPs from across political groups with the aim of maintaining scrutiny and challenging the Commission's inaction. While this does not replace legislative action, it signals that political pressure is growing instead of fading. Right. Like I said, it's becoming more and more public, so we'll see what happens. This spyware document pool that this posting introduces is a really terrific piece of work. I'm only going to share a tiny piece of it, but I've dropped a link to the entire pool into the show notes.

Steve Gibson [00:49:55]:

The end of the URL is spyware-document-pool and it's at the top of page 6 in the show notes. The piece I wanted to share from it addressed the nature and the size of the commercial spyware market. They wrote, the commercial spyware market has grown rapidly over the past decade. This market is now worth billions of euros, driven by the sale of these tools to governments, law enforcement agencies, and sometimes private actors. Its growth is fueled by an ecosystem that combines technological sophistication with near total opacity, allowing companies to operate across borders and evade accountability. This makes spyware a highly profitable, yet extremely dangerous sector where abuses remain hidden until uncovered by researchers or investigative journalists.

Steve Gibson [00:51:00]:

The global spyware industry is estimated to be worth on the order of 12 billion euros per year.

Leo Laporte [00:51:09]:

It's illicit though, right?

Steve Gibson [00:51:11]:

I mean, that's absolutely. It is not legal anywhere. Right.

Leo Laporte [00:51:14]:

That's amazing.

Steve Gibson [00:51:16]:

12 billion euros. Companies are paying. You know, like Maduro in Venezuela, he would be a typical customer because he's got lots of money and he would like to spy on anybody who opposes him publicly.

Leo Laporte [00:51:34]:

Well, and now they're going to get the Irish government as a customer. So that's good, right?

Steve Gibson [00:51:39]:

More than 80 governments have contracted for commercial spyware, according to the UK's cyber security agency.

Leo Laporte [00:51:49]:

Well, that's like half. That's like Everybody.

Steve Gibson [00:51:52]:

Yeah. In 2023, there were at least 49 distinct vendors, along with dozens of subsidiaries, partners, suppliers, holding companies and hundreds of investors across the supply chain. 56 of the 74 governments identified by the Carnegie Endowment procured commercial spyware from firms either based or connected to Israel. The Israeli firm Paragon was acquired in 2024 by an investment firm in a deal worth up to 900 million euros. And this is the market that Ireland, as we have been saying, has just taken out of the shadows and made legal for their own use for what they're calling lawful interception.

Leo Laporte [00:52:49]:

Wow.

Steve Gibson [00:52:49]:

The other piece of data that I thought our listeners would find interesting was about the market for the vulnerabilities that enable the creation and deployment of this spyware. They write, the buying and selling of zero-day vulnerabilities is closely linked to the spyware market, as these flaws allow spyware to bypass security protections and operate undetected. The vulnerabilities market is dangerous because it magnifies risk. A single zero day can compromise millions of devices. Once a vulnerability is found, the risk is that anyone can exploit it. So they're saying, for example, by comparison, if a good guy finds a zero day, they report it, probably receive a bounty, and it's removed from the ecosystem, it's removed from the device.

Steve Gibson [00:53:45]:

However, spyware using a zero day never wants to disclose it. They want to use it as long as they can, so that zero day remains present until it's somehow discovered, thus magnifying risk. Also, it drives innovation in spyware. Spyware vendors continuously adapt their tools to exploit newly discovered vulnerabilities. Of course, as we know, it also drives Apple to keep revising their chips in this ongoing cat and mouse battle against what the spyware was able to do. It lacks accountability. Vulnerabilities are traded secretly with minimal regulation, creating an ecosystem with no rules. That poses a risk to all of us. Concentration multiplies risk.

Steve Gibson [00:54:33]:

Many people are using only two OSes, Android and iOS. And some apps are globally used: WhatsApp, Gmail, and so forth. Once someone breaks into one of these systems, they can have access to hundreds of millions of devices. And Leo, this is the point you often make about our monoculture. You know, the fact that there's basically either Android or iOS. There are not 20 different OSes, each, you know, struggling to maintain their own security. So this makes the point that because we have such a very vertical and narrow selection of platforms, you find a problem, you get access to a huge chunk of the world. They wrote, a zero-day vulnerability costs, via brokers, between... first,

Steve Gibson [00:55:28]:

Okay, so this is what the payout is for zero-day vulnerabilities today. Five to seven million dollars for exploits targeting an iPhone. That is, you find a zero day for an iPhone today, and through a broker you can obtain between 5 and 7 million dollars. Android phones get up to 5 million. Chrome and Safari zero days are between 3 and 3 and a half million dollars. WhatsApp and iMessage pull between 3 million for WhatsApp and 5 million for iMessage.

Leo Laporte [00:56:06]:

But this proves my point. They wouldn't be worth that much if they were so easy to use and you could use them in a widespread fashion. These are very targeted, very specific attacks.

Steve Gibson [00:56:17]:

Yes, yes. And the reason they're getting that much money is of course the spyware vendors then turn around and charge that much money per customer to the nation states. Yeah, yes, exactly. Which ultimately the taxpayers finance.

Leo Laporte [00:56:34]:

Yeah. Oh, that's nice.

Steve Gibson [00:56:35]:

You know, governments don't generate their own cash. In 2024 the Google Threat Analysis Group reported that 20 out of the, okay, 20 out of the 25 vulnerabilities found on their products, and this is Google's TAG group, so that's Android and Gmail, in 2023, 20 out of 25 were used by spyware vendors to perform their attacks. As of June 2025, more than 21,500 new vulnerabilities had already been published. So we're seeing a rate of 133 new vulnerabilities per day. Not all high-quality zero days in iOS, obviously, but broad spectrum, 133 vulnerabilities are being found of all types everywhere per day. They finish, or I'm finishing quoting this piece of it, by writing: even though at least 14 EU countries are reported to have used commercial spyware, regulation in Europe remains entirely absent.
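The per-day figure quoted above follows from simple division. A minimal sketch of that arithmetic, assuming the count of 21,500 runs from January 1 through about June 10, 2025. The exact cutoff date is an assumption made here for illustration; the quoted material says only "as of June 2025".

```python
from datetime import date

# Figure quoted in the episode: new vulnerabilities published so far in 2025.
published = 21_500

# Assumed cutoff date (not given in the source, which says only "as of June 2025").
cutoff = date(2025, 6, 10)

# Days elapsed in 2025, counting both January 1 and the cutoff day.
days_elapsed = (cutoff - date(2025, 1, 1)).days + 1

# Average number of vulnerabilities published per day over that period.
per_day = published / days_elapsed

print(f"{days_elapsed} days elapsed, about {per_day:.1f} vulnerabilities per day")
```

Moving the assumed cutoff a few days in either direction shifts the result by only a point or two, so the quoted rate is consistent with a count taken in the first half of June.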

Steve Gibson [00:57:53]:

So Germany is saying we want to do this. Ireland is saying now we can do this. EDRi in the European Union is, for whatever reason, making noises like, oh, this is bad, but they're not actually taking any action. So to say that the future of encryption currently exists in a state of tension and uncertainty, I think, would be no overstatement, given the reality of the overwhelming power of the world's governments and the necessity for vendors to abide by their laws. Right. I mean, as we know, all Signal can do is say, well, we're leaving. They just can't ignore the laws in the prevailing regions where they want to operate. As much as I wish it were not the case, I do not see the interests of the EFF and EDRi ultimately winning out here.

Steve Gibson [00:58:51]:

Governments are never going to be satisfied until and unless they're able to intercept and monitor the communications of specific groups of individuals under the order of their courts. At a minimum, that's clearly the path that we're on. And as for the absolute or the legal use of absolute encryption, I would say enjoy it while it lasts. Eventually only criminals will be able to use unbreakable encryption. You know, its use will have been criminalized so that those who do use it, as I said earlier, will be guilty of at least that. And I think that's, I think that's where we're headed, Leo. I, I mean governments do not do. They're just going to object.

Steve Gibson [00:59:42]:

And it's unfortunate too because, you know, pre-Internet, when law enforcement had to use more analog means, you know, wiretaps and physical searches, everything wasn't binary either. Like, you have encryption or you don't. I mean, in the analog world there's, you know, hiding stuff in a mattress. It was a different world. Now it is absolute. I mean, it would almost be better if encryption actually worked the way it did in the movies, but was also very, very, very hard to break, where if you really, really, really needed something, you could get it. Unfortunately, what's going to happen is governments are going to legislate themselves the ability to flip a switch and have it all. They're going to say, you know, a phone operating within the European Union must have our software on it, and oh, it'll have some benefits.

Steve Gibson [01:00:50]:

It'll, it'll be, you can use it to take the train and, and fly and you know, it'll be, you know, stand in for, for you and, and be a digital ID and other things. And it'll also be there, able probably to capture what they want to, when they want to.

Leo Laporte [01:01:09]:

It's, I mean I, you could absolutely have a program on a phone that would see all plain text, you know, everything that was typed in or dictated in.

Steve Gibson [01:01:20]:

Yep.

Leo Laporte [01:01:21]:

Before it went into an encrypted.

Steve Gibson [01:01:22]:

Yep.

Leo Laporte [01:01:23]:

That wouldn't be hard to do. You'd have to violate maybe some of Apple's rules. But if you're the government, you say, oh, Apple, you don't have to approve this app. We're just going to put it on every iPhone.

Steve Gibson [01:01:34]:

Yeah.

Leo Laporte [01:01:35]:

You can see everything.

Steve Gibson [01:01:36]:

Any macro program that we're used to is able to watch what you do, capture those actions and then store them. Well, it could also be capturing the keyboard.

Leo Laporte [01:01:47]:

This argues very strongly for an open source operating system. I wish I had a phone that I could really use with an open source operating system. And now with vibe coding I could probably, before this show's over, seriously, code up a, you know, I'd say use NaCl or some well known crypto library that's reliable, and I would like you to write me an encryption and decryption program, and I'm going to send my friend Steve the decryption program. So it's going to be very difficult to control this. This is like a print-your-own-gun thing.

Steve Gibson [01:02:26]:

I mean, yes, it is difficult to control. But that encryption and decryption program that you just mentioned, hypothetically, it uses OS APIs.

Leo Laporte [01:02:38]:

Right.

Steve Gibson [01:02:39]:

So I mean, it doesn't actually have direct access to the XY coordinates that the user's touching on the screen. That service is provided by the OS, so that can always be tapped at that level.

Leo Laporte [01:02:56]:

Yeah. I mean you could capture scan codes from a keyboard, but there's nothing to keep the OS from seeing those as well.
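The point of this exchange can be shown with a toy simulation: if the platform's input layer is observed, the plaintext is captured before any encryption runs, however strong the cipher is. Everything in this sketch is hypothetical. `InputLayer`, `SecureMessenger` and the tap are illustrative names, not real OS APIs, and a one-time pad built on Python's `secrets` module stands in for a real library such as NaCl.

```python
import secrets


class InputLayer:
    """Stand-in for the OS service that delivers typed text to apps (hypothetical)."""

    def __init__(self):
        self._taps = []

    def add_tap(self, callback):
        # Anything registered here sees the input before the app does.
        self._taps.append(callback)

    def deliver(self, text, app):
        for tap in self._taps:
            tap(text)  # plaintext is visible at this level
        return app.on_text(text)


class SecureMessenger:
    """Toy app that encrypts each message with a fresh one-time pad."""

    def on_text(self, text):
        plaintext = text.encode("utf-8")
        pad = secrets.token_bytes(len(plaintext))  # random key, used only once
        ciphertext = bytes(p ^ k for p, k in zip(plaintext, pad))
        return ciphertext, pad


def decrypt(ciphertext, pad):
    """XOR the ciphertext with the same pad to recover the message."""
    return bytes(c ^ k for c, k in zip(ciphertext, pad)).decode("utf-8")


captured = []                      # what the tap collects
os_input = InputLayer()
os_input.add_tap(captured.append)  # the "mandated app" sits at the OS level

ciphertext, pad = os_input.deliver("meet at noon", SecureMessenger())
```

After this runs, `captured` holds the original message even though the app's output is unreadable without the pad. The strength of the cipher never enters into it, which is why the discussion moves from breaking encryption to controlling the device.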

Steve Gibson [01:03:02]:

Yeah. You need to send me the leophone.

Leo Laporte [01:03:05]:

Right.

Steve Gibson [01:03:06]:

And leophone is open source.

Leo Laporte [01:03:09]:

We have open source hardware and open source software and make sure no government intrusion on either.

Steve Gibson [01:03:16]:

Yeah.

Leo Laporte [01:03:16]:

Well, you know that there are people who will be strongly enough incentivized to do that. And those, ironically, are the people the government wants to catch. The normal people who aren't doing that, we're sitting ducks.

Steve Gibson [01:03:33]:

Yeah. Which brings us to Microsoft and BitLocker.

Leo Laporte [01:03:40]:

Oh yeah.

Steve Gibson [01:03:41]:

After this next break.

Leo Laporte [01:03:42]:

Okay, and we'll talk about this. This is a news story this week which we talked about on TWiT, and Alex Stamos, who is a very well respected security guru, did have his thoughts. I want to hear what you have to say about it and I'll give you Alex's thoughts as well.

Steve Gibson [01:03:55]:

Perfect.

Leo Laporte [01:03:55]:

Coming up on Security Now. Our show today, brought to you by Zscaler, the world's largest cloud security platform. You know we talk about AI, and if you're a business, I think it's just generally accepted that if you're not using AI, that would be kind of the equivalent to a business saying, well, we don't really need a telephone either. We'll just take orders by carrier pigeon, I guess. So the rewards of AI cannot be ignored. But there are risks and those should not be ignored. Right? And the risks aren't just bad guys. The risk is also loss of sensitive data through the use of AIs, both local and SaaS. And then of course there's the attacks against enterprise managed AI, the prompt injection.

Leo Laporte [01:04:44]:

And bad guys are also using generative AI and that's really increasing their capabilities. They can rapidly create phishing lures that are pitch perfect. They can write malicious code, they can automate data extraction. We're going to talk about writing malicious code in just a little bit. It's happening now. So there's all these issues. There were 1.3 million instances of Social Security numbers leaked to AI applications by businesses using AI applications. Right.

Leo Laporte [01:05:14]:

Not intentionally. ChatGPT and Microsoft Copilot together saw nearly 3.2 million data violations last year. There is a solution. It's time for a modern approach: Zscaler's Zero Trust plus AI. Zero Trust removes your attack surface. It secures your data everywhere. It safeguards your use of public and private AI. Yeah, Zscaler can do that.

Leo Laporte [01:05:39]:

They can even protect you against ransomware and AI powered phishing attacks. Check out what Siva, the director of security and infrastructure at Zuora says about using Zscaler. Watch this. AI provides tremendous opportunities, but it also brings tremendous security concerns when it comes to data privacy and data security. The benefit of Zscaler with ZIA rolled out for us right now is giving us the insights of how our employees are using various gen AI tools. So ability to monitor the activity, make sure that what we consider confidential and sensitive information according to, you know, companies data classification does not get fed into the public LLM models, et cetera. With Zero Trust plus AI you can thrive in the AI era. You can stay ahead of the competition and you can remain resilient even as threats and risks evolve.

Leo Laporte [01:06:29]:

Learn more at zscaler.com/security. That's zscaler.com/security. We thank them so much for supporting Security Now and Steve Gibson. All right, let's talk about this BitLocker thing, okay?

Steve Gibson [01:06:45]:

So on the heels of Ireland's news and EDRi's pushback comes Microsoft's admission that they provided BitLocker keys to the FBI when asked. The headline of Thomas Brewster's piece in Forbes, which set off this firestorm of discussion and controversy, was, quote, Microsoft gave FBI keys to unlock encrypted data, exposing major privacy flaw, with the tagline: The tech giant said it receives around 20 requests for BitLocker keys a year and will provide them to governments in response to valid court orders. But companies like Apple and Meta set up their systems so such a privacy violation is not possible. Okay, so here's what we know from Forbes' reporting. Thomas wrote, early last year, the FBI served Microsoft with a search warrant, asking it to provide recovery keys to unlock encrypted data stored on three laptops. Federal investigators in Guam believed the devices held evidence that would help prove individuals handling the island's Covid unemployment assistance program were part of a plot to steal funds. The data was protected with BitLocker, the software that's automatically enabled on many modern Windows PCs.

Steve Gibson [01:08:19]:

To safeguard all the data on the computer's hard drive, BitLocker scrambles the data so that only those with a key can decode it. It's possible for users to store those keys on a device they own. But Microsoft also recommends BitLocker users store their keys on its servers for convenience. While that means someone can access their data if they forget their password, or if repeated failed attempts to log in lock the device, it also makes them vulnerable to law enforcement subpoenas and warrants. In the Guam case, Microsoft handed over the encryption keys to investigators. Microsoft confirmed to Forbes that it does provide BitLocker recovery keys if it receives a valid legal order. Microsoft spokesperson Charles Chamberlain said, quote, while key recovery offers convenience, it also carries a risk of unwanted access. So Microsoft believes customers are in the best position to decide how to manage their keys, unquote. He said the company receives around 20 requests for BitLocker keys per year, and in many cases, the user has not stored their key in the cloud, making it impossible for Microsoft to assist.

Steve Gibson [01:09:38]:

The Guam case is the first known instance where Microsoft has provided an encryption key to law enforcement. Back in 2013, a Microsoft engineer claimed he had been approached by government officials to install backdoors in BitLocker but had turned the requests down. Senator Ron Wyden said in a statement to Forbes, quote, it is simply irresponsible for tech companies to ship products in a way that allows them to secretly turn over users' encryption keys. Allowing ICE or other Trump goons to secretly obtain a user's encryption keys is giving them access to the entirety of a person's digital life and risks the personal safety and security of users and their families, unquote. Ron Wyden, of course, a Democrat. Law enforcement regularly asks tech giants to provide encryption keys, implement backdoor access or weaken their security in other ways, but other companies have refused. Apple in particular has repeatedly been asked for access to encrypted data in its cloud or on its devices. In a highly publicized showdown with the government in 2016, Apple fought an FBI order to help open phones belonging to terrorists who shot and killed 14 in San Bernardino, California. Ultimately, the FBI found a contractor to hack into the iPhone.

Steve Gibson [01:11:16]:

Privacy and encryption experts told Forbes the onus should be on Microsoft to provide stronger protection for consumers' personal devices and data. Apple, with its comparable FileVault and Passwords systems, and Meta's WhatsApp messaging app also allow users to back up data on their apps and store a key in the cloud. However, both also allow the user to put the key in an encrypted file in the cloud, making law enforcement requests for it useless. Neither Apple nor Meta are reported to have turned over encryption keys of any kind in the past. Matthew Green, cryptography expert and associate professor at the Johns Hopkins University Information Security Institute, said, quote, this is private data on a private computer and they made the architectural choice to hold and retain access to that data. They absolutely should be treating it like something that belongs to the user. If Apple can do it, if Google can do it, then Microsoft can do it. Microsoft is the only company that's not doing this.

Steve Gibson [01:12:30]:

He added, it's a little weird. The lesson here is that if you, meaning Microsoft, have access to its users' keys, eventually law enforcement is going to come for them. Jennifer Granick, the ACLU's surveillance and cybersecurity counsel, raised concerns about the breadth of information the FBI could obtain if agents were to gain access to data protected by BitLocker. And that's really a good point too. It's like, you know, they're not getting selective access to just what they want. They've got your drive. She said, quote, the keys give the government access to information well beyond the time frame of most crimes, everything on the hard drive. Then we have to trust that the agents only look for information relevant to the authorized investigation and do not take advantage of the windfall to rummage around. In the Guam case, the court dockets show the warrant was successfully executed.

Steve Gibson [01:13:35]:

The lawyer for defendant Chesa Tanoro, who pleaded not guilty, said the information provided to her by the case's prosecutors included information from her client's computer, and that it included references to BitLocker keys that Microsoft had provided the FBI. The case is ongoing. Both Matthew Green and Jennifer Granick said Microsoft could have users install a key on a piece of hardware like a thumb drive, which would act as a backup or recovery key. Microsoft does allow for that option, but it's not the default setting for BitLocker on Windows PCs. Without the encryption keys from Microsoft, the FBI would have struggled to get any useful data from the computers. BitLocker's encryption algorithms have proven impenetrable to prior law enforcement attempts to break in, according to a Forbes review of historical cases. In early 2025, a forensic expert with ICE's Homeland Security Investigations unit wrote in a court document that his agency did, quote, not possess the forensic tools required to break into devices encrypted with Microsoft BitLocker or any other style of encryption, unquote. In one previous case, federal investigators obtained keys by discovering that a subject had stored them on unencrypted drives.

Steve Gibson [01:15:06]:

Now that the FBI and other agencies know Microsoft will comply with warrants similar to the Guam case, they'll likely make more demands for encryption keys, Green said. My experience is once the US Government gets used to having a capability, it's very hard to get rid of it. Okay, so the first takeaway from this is obvious, and it doesn't involve any sort of moral or ethical judgment either way. It's just the facts. Because encryption is absolute and unforgiving, it can be super useful to have a backup plan of some kind, right? You know, someone who will never forget, someone to hold on to one's emergency encryption backup keys. There's no doubt about that. Only if you are willing to take sober and full responsibility for never forgetting how to log in would it make sense to have no backup whatsoever anywhere. That said, one option is to allow Microsoft to be the entity to hold on to your keys in the event of an emergency.

Steve Gibson [01:16:32]:

They're certainly the default, easy choice. The only downside to that, and again, without any judgment here, is that they will also turn your keys over to law enforcement after a judge approves their request. And you know, that may not be a bad thing if you're certain that this would never become an issue for you. But if that's a concern, it's a good thing that you're now aware that Microsoft cannot be a trusted guardian of your privacy. They will capitulate. And now all global law enforcement and intelligence services know that. So it might be better to entrust those secrets to a close friend who law enforcement would never think to ask.

Steve Gibson [01:17:25]:

But as I said, that's the first takeaway. There's another, and it's much more subtle, but I very much want to point it out to our listeners. This Forbes article reminded me of that previous instance 13 years ago, back in 2013, when a Microsoft engineer claimed he'd been approached by government officials to install backdoors in BitLocker. My recollection was that it was more than a claim and that it was also more than once. For one thing, there were multiple people involved, so it wasn't just hearsay from one guy, you know. So the FBI asked. I don't have a problem with them asking. As the saying goes, well, you can ask. Okay, so to set this up for our listeners, I needed to share that.

Steve Gibson [01:18:20]:

I want to share the first portion of Mashable's coverage of this incident from 2013. Mashable's coverage of the story was introduced with the leading question headline, did the FBI lean on Microsoft for access to its encryption software? They wrote, the NSA is not the only government agency asking tech companies for help in cracking technology to access user data. Sources say the FBI has a history of requesting digital backdoors, which are generally understood as a hidden vulnerability in a program that would, in theory, let the agency peek into suspects' computers and communications. In 2005, when Microsoft was about to launch BitLocker, its Windows software to encrypt and lock hard drives, the company approached the NSA, its British counterpart, the GCHQ, and the FBI, among other government and law enforcement agencies. That is to say, Microsoft approached them, saying, we're about to add encryption to Windows. They wrote, Microsoft's goal was twofold: get feedback from the agencies and sell BitLocker to them. However, the FBI, writes Mashable, concerned about its ability to fight crime, specifically child pornography, apparently repeatedly asked Microsoft to put a backdoor into the software. And then they tell their less technical audience, a backdoor or trapdoor is a secret vulnerability that can be exploited to break or circumvent supposedly secure systems.

Steve Gibson [01:20:07]:

For its part, the FBI categorically denies asking for such access, telling Mashable that the Bureau does not ask for back doors and that it only serves companies lawful court orders when it needs to access users' data. And legally it would still need a warrant even if a backdoor did exist. Peter Biddle, the head of the engineering team working on BitLocker at the time, revealed to Mashable the exchanges he had with various government agencies. Biddle told Mashable, quote, I was asked multiple times, confirming that a government agency had inquired about back doors, though he couldn't remember which one. He said, quote, and at least once the question was more like, if we were to officially ask you, what would you say? According to two former Microsoft engineers, FBI officials complained that BitLocker would make their jobs harder. An FBI agent reportedly said, quote, it's going to be really, really hard for us to do our jobs if every single person could have this technology. How do we break it? The story of how the FBI reportedly asked Microsoft to backdoor BitLocker to avoid, quote, going dark,

Steve Gibson [01:21:37]:

The FBI's term for a potential scenario where encryption makes it impossible to intercept criminals' communications or break into a suspect's computer, provides a snapshot into how US government agencies try to persuade tech companies to weaken their security products or even poke a hidden hole to make them wiretap friendly. Last week, and this was written back in 2013, so 13 years ago, the New York Times, ProPublica and the Guardian, Mashable writes, revealed that one of the ways the NSA circumvents Internet cryptography is to ask companies to put backdoors into their products. The FBI is reportedly doing the same in the name of fighting crime, and its persuasion techniques appear to be very similar. According to reports, both the NSA and the FBI are subtle in their requests, which are never formal, never written, but are usually uttered during casual conversations, almost jokingly.

Leo Laporte [01:22:48]:

It's called plausible deniability. Right.

Steve Gibson [01:22:50]:

Exactly. Nico Sell, the former, I'm sorry, the founder of the privacy enhancing app Wickr, was approached by an FBI agent after speaking at the RSA security conference at the end of February, again 13 years ago, as first reported by CNET. According to Nico, the agent asked, so are you going to give us a back door? She declined, and after she pressed the agent to explain if he had a written request and to reveal his boss, the agent backed down.

Leo Laporte [01:23:28]:

Yeah.

Steve Gibson [01:23:29]:

Cryptography and security expert Bruce Schneier said he's heard of these same types of tactics from others the government has approached, seeking technological backdoors. Bruce told Mashable, it's never an explicit ask. It's an informal, oblique mention, a joking conversation where you're felt out as to whether you might be amenable to it. If you're amenable to it, then the conversation continues. If you're not, well, it's like it never happened. Despite the requests being informal, Schneier and other surveillance experts are concerned.

Steve Gibson [01:24:11]:

A request is a request, despite not being a legal one, Bruce said. In the case of Microsoft, according to the engineers, the requests came in the course of multiple meetings with the FBI. These kinds of meetings were standard at Microsoft, according to both Biddle and another former Microsoft engineer who worked on the BitLocker team and who wanted to remain anonymous due to the sensitivity of the matter. Biddle said, quote, I had more meetings with more agencies than I can remember or count. He said the meetings were so frequent and with so many different agencies that he doesn't specifically remember if it was the FBI that asked for a backdoor. But the anonymous Microsoft engineer we, meaning Mashable, spoke with confirmed that it was in fact the FBI. During a meeting, according to Biddle and the Microsoft engineer, who were both present at the meeting, an agent complained about BitLocker and expressed his frustration, saying, quote, you guys are giving us the shaft, unquote.

Steve Gibson [01:25:22]:

Though Biddle insisted he didn't remember which agency he spoke with, he said he did recall this particular exchange, and Biddle wasn't intimidated. He replied, no, we're not giving you the shaft. We're merely commoditizing the shaft. Biddle, a believer in what he refers to as neutral technology, never agreed to put a backdoor in BitLocker, and other Microsoft engineers, when rumors spread that there was one, later denied that was ever a possibility. Niels Ferguson, Microsoft cryptographer and principal software development engineer, wrote, quote, the suggestion is that we are working with governments to create a backdoor so that they can always access BitLocker encrypted data. That will happen over my dead body, unquote. For Biddle, this... I mean, these guys were serious.

Steve Gibson [01:26:21]:

And if you take a look, Biddle has a Wikipedia entry, and you get a sense for him. You know, those were the good old days of Microsoft. Mashable writes, for Biddle, this was proof of a fundamental paradox facing government agencies and security software. How do you get secure software you can rely on while also retaining the ability to break into it if people use it to commit or cover up their crimes? Biddle said, quote, I realized that we were in this really interesting spot, sort of stuck in the middle between wanting to do a much better job at protecting our users' information and at the same time realizing that this was starting to make government employees unhappy. Despite Microsoft's refusals to backdoor its product, the engineers kept working with the FBI to teach them about BitLocker and how it was possible to retrieve data in case an agent needed to get into an encrypted hard drive. At one point, the BitLocker team suggested the agency target the backup keys that the software creates. In some instances, BitLocker prompts users to print out a piece of paper with the key needed to unlock the hard drive to prevent loss of data if the user forgets his or her key.

Steve Gibson [01:27:51]:

The anonymous Microsoft engineer said, quote, as soon as we said that, the mood in the room changed dramatically. They got really excited, unquote. In that instance, law enforcement agents wouldn't need a back door at all, as the engineer suggested. All they would need was a warrant to access a suspect's documents and retrieve the document that would unlock his or her hard drive. Okay, and this finally brings me to the point I wanted to make. Mashable quotes Christopher Soghoian, writing: for Christopher Soghoian, a privacy and security expert at the ACLU, whether or not BitLocker has a backdoor isn't even that relevant (again, 13 years ago), since it's a feature that very few Windows users employ or even have access to. It's not included in most Windows versions and it's not a default setting, something that Soghoian said is not an accident. He told Mashable, quote, the impact is minimal because so few people use BitLocker, but it does speak to a friendly relationship between the companies and the government.

Steve Gibson [01:29:17]:

He said, if you want to keep your data out of the US government's hands, Microsoft is not your friend. Microsoft is unwilling to really make the government go dark. They're never really willing to protect their customers from the government. They're willing to take some steps, but they don't want to go too far, unquote.

Leo Laporte [01:29:39]:

This is from an era when we were still using TrueCrypt. Yes, that was the choice for people who really cared about privacy.

Steve Gibson [01:29:46]:

Right. So what I wanted to share about that last bit was I think it's wrong. Okay, so first of all, this is a reminder about the way the world has changed during the intervening 13 years. At the time Christopher Soghoian was quoted, he correctly noted that BitLocker was a non-issue since it was so infrequently used. Right. The FBI probably wouldn't actually encounter it in the field. I doubt he would feel the same way about BitLocker today. It will not be enabled on machines that have been upgraded to Windows 11 if earlier Windows was not using it.

Steve Gibson [01:30:30]: