Security Now 1047 transcript

Please be advised this transcript is AI-generated and may not be word for word. Time codes refer to the approximate times in the ad-supported version of the show.

Leo Laporte [00:00:00]:

It's time for Security Now. Steve Gibson is here with good news on the EU Chat Control vote. We'll talk about that Discord breach. Salesforce says, we're not gonna pay. And then there's a very bad bug in 330,000 publicly exposed Redis servers. It's got a CVSS of 10. Stay tuned. All will be revealed next on Security Now.

Leo Laporte [00:00:29]:

Podcasts you love from people you trust. This is TWiT. This is Security Now with Steve Gibson, episode 1047, recorded Tuesday, October 14, 2025: RediShell, CVSS 10.0. It's time for Security Now, the show where we cover the latest in security, privacy, and computing. Pretty much anything Steve Gibson wants to talk about, because he's the man of the hour. Hello, Mr. G.

Steve Gibson [00:01:04]:

And I do try to largely keep us on topic as much as possible.

Leo Laporte [00:01:09]:

No, I'm the one who distracts. I'm the distractor.

Steve Gibson [00:01:13]:

There are times we wander a little bit off the range, but I always get feedback from our listeners saying, hey, that was fun, or that was interesting, like, you know, the sci-fi stuff. Some of the best sci-fi series that I've read have come from listeners saying, hey, try this.

Leo Laporte [00:01:30]:

That's true.

Steve Gibson [00:01:31]:

Also some of the worst. But that's just the nature of the game. So the topic I chose for today is just one of a bunch of interesting news items that we're going to cover, and, you know, it came at the end. So okay, that's what we're going to talk about when we wrap things up. And that's an arguably really worrisome remote code execution exploit in all Redis servers. The exploit has been around for the last 13 years. It's in Lua.

Leo Laporte [00:02:16]:

We use Redis. We use Redis for caching our website.

Steve Gibson [00:02:20]:

Even I have a Redis server at GRC. So anyway, it has earned itself the difficult-to-obtain 10.0 CVSS score, and we're going to finish by talking about that. But we've got news on the EU's Chat Control vote, which we know was scheduled for the 14th of October, which is today, for episode 1047 of Security Now. Salesforce says it's not going to pay any ransoms, and we're starting to see data leakage. Hackers claim that Discord lost control of tens of thousands of government IDs. We'll look at that. Microsoft plans to move GitHub to Azure. What could possibly go wrong with that? Actually, they tried a few times and then aborted the effort, because it's not going to be easy.

Steve Gibson [00:03:29]:

We've got a new California law that our governor, Gavin Newsom, signed last week that should help things. We'll talk about that. OpenAI blocking foreign abuse. Does anyone care about IE? There's actually some news about IE mode, which, believe it or not, refuses to die.

Steve Gibson [00:03:55]:

Okay, we're going to spend a little bit of time on a Texas law that's going into effect on January 1st. Tim Cook reportedly phoned Greg Abbott and said, please, please don't let this happen. Veto this, or at least amend it, because it's really bad. Well, Greg, you know, I don't think he has much sympathy for anything Tim Cook has to say. So we're getting this in 10 weeks, and oh boy, is it going to be a problem. Also BreachForums: last week we showed the BreachForums webpage, remember, with the extortion demand against Salesforce. Well, that site has received a makeover, which we'll be looking at today. Also, we've got...

Steve Gibson [00:04:45]:

Oh boy. A 100,000-strong global botnet attacking US-based RDP services. And again, what could possibly go wrong there? One of our listeners sent me a UI expert's weigh-in on Apple's iOS 26 user interface. I did talk a little bit about going off topic. Well, I just have to indulge myself here.

Leo Laporte [00:05:13]:

You don't like it one bit.

Steve Gibson [00:05:14]:

I know.

Leo Laporte [00:05:16]:

Liquid Glass.

Steve Gibson [00:05:16]:

Turns out. Turns out I'm in good company.

Leo Laporte [00:05:19]:

Yeah. Yes.

Steve Gibson [00:05:21]:

Anyway, then the Redis servers. We've got a picture of the week, and I think maybe, Leo, this time we've got a good podcast for once.

Leo Laporte [00:05:33]:

It's about damn time.

Steve Gibson [00:05:35]:

Maybe this will be worthwhile.

Leo Laporte [00:05:37]:

I should mention this is also the last Patch Tuesday for Windows 10 today.

Steve Gibson [00:05:43]:

That's right.

Leo Laporte [00:05:44]:

End of life for Windows 10.

Steve Gibson [00:05:50]:

Except, of course, if you click your heels twice and look at the moon, then Microsoft says, okay, fine.

Leo Laporte [00:06:02]:

Well, before we get to the picture of the week, because I know that's imminent, and as always, I haven't looked. I've stayed all week in a soundproof room, and...

Steve Gibson [00:06:13]:

Are you going to tell our listeners who's paying the bills?

Leo Laporte [00:06:16]:

I'm going to tell our listeners who's paying the bills. Exactly. This portion of Security Now is brought to you by Zscaler, the world's largest cloud security platform. The world's largest. Let me say that again: cloud security platform. Pretty good. As organizations leverage AI to grow their business and support workforce productivity, they can't rely anymore on traditional, you know, network-centric security solutions.

Leo Laporte [00:06:44]:

Perimeter defenses just don't protect against emerging threats, and particularly against AI attacks. Bad actors have jumped on AI like everybody else, like businesses have too, right? AI is amazing. They're using new AI capabilities and powerful AI agents, and they're using them across all four attack phases, which I think is interesting. They're using AI to discover the attack surface and to compromise it. Once in, they're using AI to move laterally. They're using AI to exfiltrate data. That's what Salesforce is learning about right now.

Leo Laporte [00:07:19]:

Traditional firewalls and VPNs, in fact, don't help. Instead, they expand your attack surface by giving you public IP addresses. They enable lateral threat movement. They're also more easily exploited with AI-powered attacks. So what do we need? We need a more modern approach. We need, say it with me, Zscaler's Zero Trust plus AI, which, A, removes your attack surface; B, secures your data everywhere; and, very importantly, C, safeguards your use of public and private AI.

Leo Laporte [00:07:55]:

And then finally, of course, the most important: it protects against ransomware and AI-powered phishing attacks. Just think about the job Steve Harrison has to do. He is the CISO of MGM Resorts International. They've been hit in the past. They're not taking any chances. They chose Zscaler. He says, quote, with Zscaler, we hit zero trust segmentation across our entire workforce in record time, and the day-to-day maintenance of the solution, with data loss protection, with insights into our applications, these were really quick and easy wins from our perspective, unquote.

Leo Laporte [00:08:34]:

Here's a guy who knows the risks and has chosen Zscaler to protect his entire organization. With Zero Trust plus AI, you can thrive in the AI era. You can stay ahead of the competition, and you can remain resilient even as threats and risks evolve. Learn more at zscaler.com/security. That's zscaler.com/security. We thank them so much for their support of Security Now. And now I shall, oh, turn on my extra camera so that we can see.

Steve Gibson [00:09:09]:

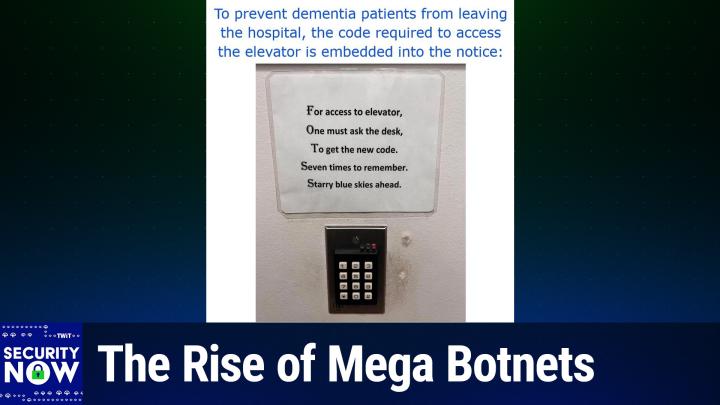

This picture was very clever. The goal is to prevent dementia patients from leaving the hospital, which has an elevator. Okay.

Leo Laporte [00:09:22]:

My mom is in a memory care ward, and that's exactly right. They have a code panel in the elevator, right?

Steve Gibson [00:09:28]:

Right. And so above the keypad it says, "For access to elevator. One must ask the desk. To get the new code. Seven times to remember. Starry blue skies ahead."

Leo Laporte [00:09:43]:

Whoa, that's a code.

Steve Gibson [00:09:46]:

It's 4, 1, 2, 7: the first words of the first four sentences, For, One, To, Seven.

Leo Laporte [00:09:53]:

And they've capitalized each of them, too, to make it a little bit more obvious. But if you've got dementia, you're probably not going to be able to figure it out.

Steve Gibson [00:10:02]:

Yeah, well, no. No one would tell you.

Leo Laporte [00:10:06]:

Right.

Steve Gibson [00:10:07]:

So here is the code, published right above the keypad: "For access to elevator. One must ask the desk. To get the new code. Seven times to remember." And so, four, one, two, seven. So everybody who works there, all of the staff...
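The trick Steve is describing is a homophone code: each sentence opens with a word that sounds like a digit. A minimal sketch, assuming the four sentence-initial words are "For", "One", "To", and "Seven" (inferred from the 4127 code; the homophone table is a hypothetical illustration, not something printed on the sign):

```python
# Hypothetical homophone table: words that sound like the digits they encode.
HOMOPHONES = {
    "for": 4, "four": 4,
    "one": 1, "won": 1,
    "to": 2, "too": 2, "two": 2,
    "seven": 7,
}


def decode(first_words):
    """Map each sentence-initial word to the digit it sounds like."""
    return "".join(str(HOMOPHONES[word.lower()]) for word in first_words)


code = decode(["For", "One", "To", "Seven"])
print(code)  # 4127
```

Staff who know the rule read the code straight off the sign, while anyone who takes it literally heads to the desk.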

Leo Laporte [00:10:24]:

Kind of brilliant.

Steve Gibson [00:10:25]:

Isn't it wonderful? Yeah. And nobody would ever think. They would think, oh, I'd better go to the desk and ask for the new code.

Leo Laporte [00:10:34]:

You know what?

Steve Gibson [00:10:34]:

I'm stupid enough.

Leo Laporte [00:10:35]:

That's exactly what I would have done. Oh, that's clever. I like it. I like it. Yeah. In order to get up to see my mom, I have to get them to come and open the door and enter the code and bring me up, and then I have to do the same to get down. And I wish they had something like this, but they don't. I have to have help every time.

Leo Laporte [00:10:55]:

That's all right.

Steve Gibson [00:10:56]:

Yeah. Okay. So, yes, good news. The much-anticipated vote among EU member countries, originally slated for today, October 14, was called off once it became clear that the vote to adopt the controversial measure would fail, thankfully. Remember, last week we reported that Germany seemed to be waffling a little bit, although the Netherlands said they were a firm no. Turns out Germany made up its mind, also signaling a firm no.

Steve Gibson [00:11:34]:

I learned of this from a blurb in the Risky Business newsletter, which offered some additional detail. They wrote, the European Union has scrapped the vote on Chat Control, proposed legislation that would have mandated tech companies to break their encryption to scan content for child abuse materials. The project was supposed to be put to a vote on Tuesday, October 14, during a meeting of interior ministers of EU member states. Denmark, which currently holds the EU presidency and was backing the legislation, scrapped the vote, according to reports in Austrian and German media. Danish officials scrapped the vote after failing to gather the necessary votes to pass the legislation and advance it to the EU Parliament. Only 12 of the bloc's 27 members publicly backed the proposal, with nine against and the rest undecided. The final blow to Chat Control came over the past two weeks, when both the Netherlands and Germany publicly opposed it.
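Why 12 backers wasn't enough: Council votes on legislation like this use qualified majority voting, which requires at least 55% of member states (and at least 15 of them) representing at least 65% of the EU population. A minimal sketch of the member-state half of that test, using the counts quoted above; the population criterion is deliberately not checked, since it needs per-country figures the newsletter didn't give:

```python
def qmv_state_threshold(member_states: int, share_pct: int = 55) -> int:
    """Minimum number of states meeting the 55%-of-members criterion."""
    # Integer ceiling of member_states * share_pct / 100, avoiding float rounding.
    return -(-member_states * share_pct // 100)


MEMBERS = 27
backers, opponents = 12, 9  # counts reported by Risky Business
undecided = MEMBERS - backers - opponents
needed = qmv_state_threshold(MEMBERS)

print(f"needed: {needed}, declared support: {backers}, undecided: {undecided}")
print(f"short by {needed - backers} states before population is even considered")
```

With 15 states required and only 12 publicly on board, the presidency would have needed three of the six undecided countries, and then still had to clear the population threshold with Germany opposed.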

Leo Laporte [00:12:39]:

I love what the... oh, go ahead, you're going to say it.

Steve Gibson [00:12:42]:

Yeah. Germany's justice minister, Dr. Stefanie Hubig, went out of her way to describe Chat Control as, quote, taboo for the rule of law, unquote, arguing that the fight against child pornography does not justify removing everyone's right to privacy.

Leo Laporte [00:13:03]:

She used the phrase "suspicionless chat control." And I think that's key because, of course, it was for everyone. Not really...

Steve Gibson [00:13:11]:

Yes, right. Well, I mean, that's what you have to do if you're going to find people you don't know are sharing illegal content: it's got to scan everybody. Exactly. It's like if a website says you have to be an adult. Well, then everybody has to prove their age, you know, not just young people, because you don't know. So anyway, the Risky Business newsletter said the law has seen the usual mass opposition from privacy groups such as the EFF, but also from the tech sector, with over 40 major EU tech companies signing an open letter to EU officials.

Steve Gibson [00:13:56]:

Signatories describe Chat Control (and this is really interesting; I'm going to share this letter because it also contains some interesting stuff) as, quote, a blessing for US and Chinese companies, unquote, since EU users will migrate to products that respect their privacy and ignore Chat Control. The Chat Control opposition also received major help from a Danish programmer whose Fight Chat Control website allowed Europeans to mass-mail their representatives and urge them to vote against it. According to a Politico Europe report, the website had driven so much traffic that it broke the inboxes of EU members of Parliament over the past few weeks. And you can imagine that might get their attention. So, you know, it's one thing to be living within a government that declares itself to be a democracy, but it's truly wonderful to see a movement where the voices, opinions, and feelings of that democracy's subjects can be and are heard.

Steve Gibson [00:15:08]:

So I checked out that open letter I mentioned, which was signed by 40 major European Union companies. Since this was one of the major issues of our time, I want to share that letter. It was headed "Open letter to EU Member States on the proposed CSA Regulation," and it read (this is co-signed by the 40 top tech companies in the EU): Dear Ministers and Ambassadors of EU Member States, we, the undersigned European enterprises, as well as the European DIGITAL SME Alliance (you know, the Small and Medium Enterprise alliance), which represents more than 45,000 digital small and medium enterprises across Europe, write to you with deep concern regarding the proposed regulation on child sexual abuse. Protecting children and ensuring that everyone is safe on our services and on the Internet in general is at the core of our mission. As privacy-focused companies, we see privacy as a fundamental right, one that underpins trust, security, and freedom online for adults and children alike. However, we are convinced that the current approach followed by the Danish presidency would not only make the Internet less safe for everyone, but also undermine one of the EU's most important strategic goals: progressing toward higher levels of digital sovereignty.

Steve Gibson [00:16:46]:

Digital sovereignty is Europe's strategic future. In an increasingly unstable world, Europe needs to be able to develop and control its own secure digital infrastructure, services, and technologies in line with European values. The only way to mitigate these risks is to empower innovative European technology providers. Digital sovereignty matters for two key reasons. First, economic independence. Europe's digital future depends on the competitiveness of its own businesses. But forcing European services to undermine their security standards by scanning all messages, even encrypted ones, using client-side scanning would undermine users' safety online and go against Europe's high data protection standards. Therefore, European users, individuals and businesses alike, and global customers will lose trust in our services and turn to foreign providers.

Steve Gibson [00:17:56]:

This will make Europe even more dependent on American and Chinese tech giants that currently do not respect our rules, undermining the bloc's ability to compete. Second, national security. Encryption is essential for national security. Mandating what would essentially amount to backdoors or other scanning technologies inevitably creates vulnerabilities that can and will be exploited by hostile state actors and criminals. For this exact reason, governments exempted themselves from the proposed CSA scanning obligations. Nevertheless, a lot of sensitive information from businesses, politicians, and citizens will be at risk. Should the CSA regulation move forward, it will weaken Europe's ability to protect its critical infrastructure, its companies, and its people. The CSA regulation will undermine trust in European businesses. Trust is Europe's competitive advantage.

Steve Gibson [00:19:05]:

Thanks to the GDPR and Europe's strong data protection framework, European companies have built services that users worldwide rely on for data protection, security, and integrity. This reputation is hard-earned and gives European-based services a unique selling point which Big Tech monopolies will never be able to match. This is one of the few, if not the only, competitive advantages Europe has over the US and China in the tech sector. But the CSA regulation risks reversing this success. This legal text would undermine European ethical and privacy-first services by forcing them to weaken the very security guarantees that differentiate European businesses internationally. This is particularly problematic in a context where the US administration explicitly forbids its companies to weaken encryption, even if mandated to do so by EU law. Ultimately, the CSA regulation will be a blessing for US and Chinese companies, as it will make Europe kill its only competitive advantage and open even wider the doors to Big Tech. The EU has committed itself to strengthening cybersecurity through measures such as NIS2, the Cyber Resilience Act, and the Cybersecurity Act.

Steve Gibson [00:20:36]:

These policies recognize encryption as essential to Europe's digital independence. The CSA regulation, however, must not undermine these advancements by effectively mandating systemic vulnerabilities. It is incoherent for Europe to invest in cybersecurity with one hand while legislating against it with the other. European small and medium-sized enterprises (SMEs) would be hit hardest if obliged to implement client-side scanning. Unlike large technology corporations, SMEs often do not have the financial and technical resources to develop and maintain intrusive surveillance mechanisms, meaning compliance would impose prohibitive costs or force market exit. Moreover, many SMEs build their unique market position on offering the highest levels of data protection and privacy, which, particularly in Europe, is a decisive factor for many to choose their products over the counterparts of Big Tech. Mandating client-side scanning would undermine this core value proposition for many European companies. This will suffocate European innovation and cement the dominance of foreign providers.

Steve Gibson [00:21:59]:

Instead of building a vibrant, independent digital ecosystem, Europe risks legislating its own companies out of the market. For these reasons, we call on you to (and they have five points): First, reject measures that would force the implementation of client-side scanning, backdoors, or mass surveillance of private communications, such as we currently see in the Danish proposal for a Council position on the CSA regulation. Second, protect encryption to strengthen European cybersecurity and digital sovereignty. Third, preserve the trust that European businesses have built internationally. Fourth, ensure that EU regulation strengthens, rather than undermines, the competitiveness of European SMEs. And fifth, pursue child protection measures that are effective, proportionate, and compatible with Europe's strategic goal of digital sovereignty. Digital sovereignty, they finish, cannot be achieved if Europe undermines the security and integrity of its own businesses by mandating client-side scanning or other similar tools or methodologies designed to scan encrypted environments, which technologists have once again confirmed cannot be done without weakening or undermining encryption. To lead in the global digital economy, the EU must protect privacy, trust, and encryption.

Steve Gibson [00:23:36]:

So that's sort of the business-centric view of what this meant for the EU's businesses. And I wanted to share that because we don't want this to rear its ugly head again. You know, it's hard to put this thing to bed once and for all. We keep smacking it down and it pops up, because, you know, legislators say, well, but what about the children? And that gets everybody all riled up again. So hopefully, having this come to as much of a head as it has, and really getting everyone's attention, helps. Notice that they weren't breaking encryption. They figured out, okay, we're not going to do that; we're going to do client-side scanning before encryption and after decryption. Believe that. Even that alone, trying to come up with a way of shimming their scanning into the system, didn't go, the idea being, no, sorry, we're not going to become a surveillance state.
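Steve's point about scanning before and after encryption is why end-to-end encryption offers no protection from client-side scanning: the scanner runs on the device and sees plaintext. A minimal sketch of the sending side only, assuming a hypothetical blocklist and exact SHA-256 matching purely for simplicity; the one-time pad stands in for whatever cipher a real messenger uses, and none of this reflects how any specific proposal would actually be implemented:

```python
import hashlib
import secrets

# Hypothetical blocklist of SHA-256 digests the on-device scanner flags.
BLOCKLIST = {hashlib.sha256(b"known-bad-sample").hexdigest()}


def client_side_scan(plaintext: bytes) -> bool:
    """Runs on the sender's device, so it always sees the unencrypted message."""
    return hashlib.sha256(plaintext).hexdigest() in BLOCKLIST


def one_time_pad(data: bytes, key: bytes) -> bytes:
    """XOR with a random key as long as the data; the same call decrypts."""
    return bytes(d ^ k for d, k in zip(data, key))


def send(plaintext: bytes, reports: list) -> tuple:
    # The scan happens first, on plaintext: the encryption below hides nothing from it.
    if client_side_scan(plaintext):
        reports.append(plaintext)  # stands in for a report sent to a third party
    key = secrets.token_bytes(len(plaintext))
    return one_time_pad(plaintext, key), key


reports = []
ciphertext, key = send(b"known-bad-sample", reports)
send(b"hello, world", reports)
print(len(reports))  # 1: only the blocklisted message was flagged
print(one_time_pad(ciphertext, key))  # b'known-bad-sample'
```

The ciphertext on the wire is as strong as ever; the privacy loss comes entirely from the hook that runs before `one_time_pad` is ever called.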

Steve Gibson [00:24:44]:

Doing that would kill, you know... We're thinking of companies like Threema, right, that are Europe-based and couldn't do what they do. I mean, it would just kill the Threema messenger completely to have to operate under Chat Control legislation. So one line stood out for me in that letter that I thought was interesting. It said, this is particularly problematic in a context where the US administration explicitly forbids its companies to weaken encryption, even if mandated to do so by EU law. Even though it wasn't the EU, that feels like a response to the UK's order to Apple, right, which, as we know, was loudly and publicly rebuffed when Tulsi Gabbard, the US Director of National Intelligence, tweeted on X that, as a result of the US administration's closely working with the UK, Americans' private data would remain private and our constitutional rights and civil liberties would be protected. You know, she stated in that tweet that, quote, as a result, the UK has agreed to drop its mandate for Apple to provide a back door that would have enabled access to the protected encrypted data of American citizens and encroached on our civil liberties, unquote. So, you know, unfortunately, as we know, it's now believed that the UK has since issued another order to Apple requiring it to allow the government access to the iCloud data of its own citizens. Not everyone, everywhere. And so we're going to see how that plays out.

Steve Gibson [00:26:37]:

Overall, though, I think that this open letter made a very good point about the fundamental competitive disadvantage that the entire EU business sector would face if its companies were forced to abide by a clearly, you know, surveillance-oriented, privacy-invading law. They're right about, you know, what the rest of the world would do in response. So anyway, that bullet got dodged. You know, we still have this unknown order in the UK, and of course the UK is still strong on enforcing age restrictions. So we'll see how those things continue to play out.

Leo Laporte [00:27:22]:

Yeah. You're probably going to talk about it, but you saw what California did with that.

Steve Gibson [00:27:28]:

Yeah. Okay, so Salesforce said it's not going to pay, and this is the extortion demand threatening a billion, actually 989 million, you know, just shy of a billion records belonging to what's believed to be 39 of its customers, whose networks were all breached as a consequence of their relationship with Salesforce. As I said, last week we showed the ransom demand posted to BreachForums by the Scattered LAPSUS$ Hunters group. Salesforce's public response was widely covered in the tech press. Here's what Dan Goodin, writing for Ars Technica, said. He wrote: Salesforce says it's refusing to pay an extortion demand made by a crime syndicate that claims to have stolen roughly 1 billion records from dozens of Salesforce customers. Google's Mandiant group said in June that the threat group making the demands began their campaign in May, when they made voice calls to organizations storing data on the Salesforce platform. The English-speaking callers would provide a pretense that necessitated the target connect an attacker-controlled app (we now know that was an OAuth-based authentication app) to their Salesforce portal, Dan wrote.

Steve Gibson [00:29:03]:

Amazingly, but not surprisingly, many of the people who received the calls complied. The threat group behind the campaign is calling itself Scattered LAPSUS$ Hunters, a mashup of three prolific data extortion actors: Scattered Spider, LAPSUS$, and ShinyHunters. Mandiant, meanwhile, tracks the group as UNC6040, because the researchers so far have been unable to positively identify the connections. Earlier this month, the group created a website that named Toyota, FedEx, and 37 other Salesforce customers whose data was stolen in the campaign. In all, the number of records Scattered LAPSUS$ Hunters claimed to have recovered was, oh, it's 989.45 million, approximately a billion. The site called on Salesforce to begin negotiations for a ransom amount, quote, or all your customers' data will be leaked, unquote. The site went on to say, nobody else will have to pay us if you pay, Salesforce Inc. The site said the deadline for payment was last Friday.

Steve Gibson [00:30:19]:

In an email Wednesday, a Salesforce representative said the company is spurning the demand. The representative wrote, quote, I can confirm Salesforce will not engage, negotiate with, or pay any extortion demand, unquote. The confirmation came a day after Bloomberg reported that Salesforce told customers in an email that it won't pay the ransom. The email went on to say that Salesforce had received credible threat evidence indicating a group known as ShinyHunters planned to publish data stolen in the series of attacks on customers' Salesforce portals. The refusal comes amid a continuing explosion in the number of ransomware attacks on organizations around the world. The reason these breaches keep occurring is the hefty sums the attackers receive in return for decrypting encrypted data and/or promising not to publish stolen data. Online security firm DeepStrike estimated that global ransom payments totaled $813 million last year, down from $1.1 billion in 2023. So, $1.1 billion in ransom payments in 2023, $813 million in 2024. Dan continued:
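For scale, the two ransom totals Dan quotes imply a drop of roughly a quarter from one year to the next. A quick worked check, using only those two figures as reported:

```python
# Global ransom payment estimates as quoted from DeepStrike, in US dollars.
payments_2023 = 1.1e9
payments_2024 = 813e6

decline = payments_2023 - payments_2024
pct_decline = decline / payments_2023 * 100

print(f"${decline / 1e6:.0f} million less, a {pct_decline:.1f}% decline")
# $287 million less, a 26.1% decline
```

Even after that decline, the 2024 total is still well over three-quarters of a billion dollars flowing to extortion groups.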

Steve Gibson [00:31:47]:

The group that breached drug distributor Cencora alone received a whopping $75 million in ransomware payments, Bloomberg reported, citing unnamed people familiar with the matter. So one payout of $75 million for the breached drug distributor Cencora's ransom. No wonder these guys, you know, stay at it. Making ransomware payments, he finishes, has come increasingly under fire from security experts who say the payments reward the bad actors responsible and only encourage them to pursue more riches. Right. Independent researcher Kevin Beaumont wrote on Mastodon, referring to the UK's National Crime Agency, quote, corporations should not be directly funding organized crime with the support of the National Crime Agency and their insurance. Break the cycle, unquote. Beaumont said in an interview that while the NCA publicly recommends against paying ransoms, multiple organizations he's talked to report having NCA members present during ransom negotiations. On Mastodon, Kevin warned that payments pose threats to broader security, writing, quote, it's becoming a real mess to defend against this stuff in the trenches, let me tell you. I'm concerned about where this is going, unquote.

Steve Gibson [00:33:15]:

So I imagine that Kevin is worried because there's no end in sight. The bad guys have figured out that the human factor is reliably the weakest link in the enterprise security chain. This gets the attackers inside the enterprise, and enterprise networks are not currently hardened against abuse from the inside. Among the approximately 39 companies believed to have been breached due to their customer relationship with Salesforce, Salesloft, and Drift is the Australian airline Qantas that we talked about last week, with its loony injunction against anyone republishing their data after it's leaked on the dark web. Like those guys are going to care about an injunction. And also, reportedly, the Australian telco Telstra, although Telstra is denying the Cyber Daily report of their breach. Unfortunately, these ransomware groups are compelled to release the data they have obtained once they've made such a public spectacle of their data breach. Right.

Steve Gibson [00:34:23]:

You know, they can't ever be seen to be bluffing or making empty threats, or they'll lose their ability to threaten. So I imagine that next week we'll be seeing stories of nearly 1 billion records of data from around 39 major Salesforce customers being leaked online. The bad guys need to leak the data so their next victim will take them seriously. Oh, Leo, what a world. But we do have some good news, and it comes from one of our sponsors that I can...

Leo Laporte [00:34:59]:

Can definitely provide you with. Can't help you with the other stuff, though, unfortunately. That's unbelievable. I swear, we live in difficult times.

Steve Gibson [00:35:10]:

And after this, we're going to talk about the extent of Discord's horrible breach.

Leo Laporte [00:35:17]:

Yeah. And of course, since we use Discord for our club, I read that story with great interest. I have never been asked by Discord for my government ID, but I asked in our club, and a number of our club members have been, mostly outside the U.S., but...

Steve Gibson [00:35:32]:

And it's when their estimate of your age needs to be challenged. So they come back and they say, we think you're a teenager.

Leo Laporte [00:35:43]:

Yeah.

Steve Gibson [00:35:43]:

And it's like, you know, you talk.

Leo Laporte [00:35:46]:

A lot about Pokemon Go. I don't know.

Steve Gibson [00:35:49]:

That's right.

Leo Laporte [00:35:50]:

Yeah.

Steve Gibson [00:35:51]:

Actually, you know, we looked at your discourse on Discord, and it looks a little... junior.

Leo Laporte [00:36:02]:

Yeah, there's no question. I'm a kid. We will get to that in just a bit. But first, a word from our sponsor, my favorite VPN. I'm talking, of course, about ExpressVPN. Let me just click this link so I can get the full screen and Steve can have a little privacy while I talk about protecting your privacy online.

Steve Gibson [00:36:23]:

Right.

Leo Laporte [00:36:24]:

If you've ever browsed in incognito mode, well, you know, I think we've talked about it: it's probably not as incognito as you think. Google recently settled a $5 billion lawsuit after being accused of secretly tracking users in incognito mode. Google's defense? Well, incognito doesn't mean invisible. In fact, you're not. All of your online activity is still 100% visible to third parties.

Leo Laporte [00:36:54]:

Don't use incognito mode. Use ExpressVPN, the only VPN I use and trust. And you'd better believe when I go online, especially when I'm traveling, in airports or coffee shops, or I'm outside the country, ExpressVPN is my go-to. Why does everyone need ExpressVPN? Well, without ExpressVPN, third parties can still see every website you visit, even in incognito mode. Who are the third parties? Your Internet service provider; they see what you're doing. Or your mobile network provider, or whoever administers the Wi-Fi network you're on. If it's at your school, the school IT department; if it's at work, your boss.

Leo Laporte [00:37:33]:

ExpressVPN reroutes 100% of your traffic through secure encrypted servers. So third parties can't see your browsing history. They can't see whatever you're. They can't see anything. They just see gobbledygook. They see encrypted data. It works beautifully. Now, I should point out that when you do get to the ExpressVPN server, the the end point of that encrypted tunnel, you have to be unencrypted, right? So you can browse.

Leo Laporte [00:38:01]:

It can't stay encrypted past that point. So it's really important you choose a VPN provider that protects your data, because they're at the other end of that tunnel. That's why I use ExpressVPN. They make the extra effort to keep you private. ExpressVPN completely hides your IP address, which means it's very difficult for third parties to track your online activity. To them, you look like one of hundreds of other ExpressVPN customers using that same IP address. The other thing they do, though, that I think is really important: they rotate their IP addresses.

Leo Laporte [00:38:35]:

They spend the extra money to make sure those IP addresses change, so it really is hard to track you. It's hard to even know that you're on a VPN, which is great. ExpressVPN is easy to use. You fire up the app, you click one button, you're done, you're protected. And it works everywhere, on all devices: phones, laptops, tablets. You can even put it on your router to protect your whole house.

Leo Laporte [00:38:59]:

You can stay private on the go and at home, wherever you are. And ExpressVPN is rated number one by the top tech reviewers, people like CNET and The Verge. It's what I use. Secure your online data today by visiting ExpressVPN.com/securitynow. That's E-X-P-R-E-S-S-V-P-N.com/securitynow to find out how you can get up to four extra months. ExpressVPN.com/securitynow. We thank them so much for supporting Security Now. And Steve Gibson, back to you, Stevie.

Steve Gibson [00:39:42]:

Hackers are claiming that the Discord breach exposed the data of 5.5 million users.

Leo Laporte [00:39:50]:

Oh, I didn't know it's that many.

Steve Gibson [00:39:52]:

Yeah, yeah, but not the government IDs. That's a subset. Okay, so since my initial reporting of the Discord breach last week, additional troubling details have surfaced. Bleeping Computer reports on this significant breach at Discord, though the attackers' and Discord's numbers don't agree. Bleeping Computer reported: Discord says they will not be paying threat actors who claim to have stolen the data of 5.5 million unique users from the company's Zendesk support system instance. The stolen data includes government IDs and partial payment information for some people. The company is also pushing back on claims that 2.1 million photos of government IDs were disclosed in the breach, stating that approximately... As if this is not...

Steve Gibson [00:40:50]:

I mean, this is not much better: 70,000 users had their government ID photos exposed. So, folks, we need a better solution for proving age.

Leo Laporte [00:41:03]:

Wow, this shows you exactly why. Yeah.

Steve Gibson [00:41:06]:

Yes. They said: While the attackers claim the breach occurred through Discord's Zendesk support instance, the company has not confirmed this and only described it as involving a third-party service (so they're being coy) used for customer support. Gee, I wonder what that could be. Discord told Bleeping Computer in a statement, quote, First, as stated in our blog post, this was not a breach of Discord. Okay, who cares? But rather a third-party service we use to support our customer service efforts. Second, the numbers being shared are incorrect and part of an attempt to extort a payment from Discord. Of the accounts impacted globally, we have identified approximately 70,000 users that may have had government ID photos exposed.

Steve Gibson [00:42:00]:

That's like saying, well, this zero-day may be exploited. Uh-huh. Which our vendor, they wrote, used to review age-related appeals. Third, we will not reward those responsible for their illegal actions. In a conversation with the hackers, writes Bleeping Computer, Bleeping Computer was told that Discord is not being transparent about... So Bleeping Computer talked to the hackers, and the hackers said Discord is not being transparent about the severity of the breach, stating that they stole 1.6 terabytes of data from the company's Zendesk instance. According to the threat actor, they gained access to Discord's Zendesk instance.

Steve Gibson [00:42:51]:

For 58 hours beginning on... you know, because it takes a while to exfiltrate 1.6 terabytes of data. They gained access for 58 hours beginning on September 20, 2025. However, the attackers say the breach did not stem from a vulnerability in or breach of Zendesk, but rather from a compromised account belonging to a support agent employed through a business process outsourcing provider (that's our new term of art: a BPO) used by Discord. As many companies have outsourced their support and IT help desks to BPOs, they have become a popular target for attackers to gain access to downstream customer environments. The hackers allege that Discord's internal Zendesk instance gave them access to a support application known as Zenbar that allowed them to perform various support-related tasks, such as disabling multi-factor authentication and looking up users' phone numbers and email addresses. Using access to Discord's support platform, the attackers claimed to have stolen 1.6 terabytes of data, including around one and a half terabytes of ticket attachments and over 100 gigabytes of ticket transcripts.

Steve Gibson [00:44:22]:

The attackers say this consisted of roughly 8.4 million tickets affecting 5.5 million unique users (I'll just mention that all those numbers track; they all make sense in terms of the ratios you would expect to see), and that about 580,000 users' records contained some sort of payment information. The threat actors themselves acknowledged to Bleeping Computer that they're unsure how many government IDs were stolen, but they believe it's more than 70,000, as they say there were approximately 521,000 age verification tickets. So, more than half a million age verification tickets among the 8.4 million tickets total. The threat actors also shared a sample of the stolen user data, which can include a wide variety of information, including email addresses, Discord usernames and IDs, phone numbers, partial payment information, dates of birth, multi-factor authentication-related information, suspicious activity levels and other internal info. The payment information for some users was allegedly retrievable through Zendesk integrations with Discord's internal systems. These integrations reportedly allowed the attackers to perform millions of API queries to Discord's internal database via the Zendesk platform and retrieve further information.
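Steve's remark that the ratios "track" can be checked with some back-of-the-envelope arithmetic. This uses only the attackers' unverified figures and assumes decimal units (1 TB = 10^12 bytes):

```latex
\begin{aligned}
\frac{8.4\times10^{6}\ \text{tickets}}{5.5\times10^{6}\ \text{users}} &\approx 1.5\ \text{tickets per user}\\
\frac{5.21\times10^{5}\ \text{age-verification tickets}}{8.4\times10^{6}\ \text{tickets}} &\approx 0.062 \approx 6.2\%\\
\frac{5.8\times10^{5}\ \text{users with payment info}}{5.5\times10^{6}\ \text{users}} &\approx 0.105 \approx 10.5\%\\
\frac{1.5\times10^{12}\ \text{bytes of attachments}}{8.4\times10^{6}\ \text{tickets}} &\approx 1.8\times10^{5}\ \text{bytes} \approx 180\ \text{kB per ticket}
\end{aligned}
```

For comparison, Discord's own count of 70,000 exposed ID photos would be about 13% of the attackers' claimed 521,000 age-verification tickets.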

Steve Gibson [00:45:59]:

Bleeping Computer could not independently verify the hackers' claims or the authenticity of the provided data samples. The hackers said the group demanded $5 million in ransom (only $5 million), later reducing it to $3.5 million, and engaged in a private negotiation with Discord between September 25 and October 2. After Discord ceased communications and released a public statement about the incident, the attackers said they were extremely angry and plan to leak the data publicly if an extortion demand is not paid. Bleeping Computer contacted Discord with additional questions about these claims, including why they retained government IDs after completing age verification.

Leo Laporte [00:46:49]:

That's the key.

Steve Gibson [00:46:50]:

Yeah, uh-huh. But they did not receive answers beyond the above statement. So, you know, no one's going to shed a tear for angry extortionists whose three-and-a-half-million-dollar payday fell through. Unfortunately, as I noted in the case of Salesforce, the attackers must now follow through with their threats. I would not want to have my own photo ID out on the Internet circulating among criminals. And Leo, it's interesting: in the details that we got thanks to Bleeping Computer's pursuit of this, this is what we are increasingly seeing. We are seeing that automated back-end systems which publish an API are then being used by their clients or customers in order to get the services. So, like, Discord's platform accesses Zendesk, or vice versa.

Steve Gibson [00:47:50]:

You know, they've got APIs going in both directions. Which means that, in this case, this BPO guy, this business-process-outsourced person, got compromised (who knows, clicked on a phishing email, whatever), and that gave up their credential, which allowed them to log into either Discord or Zendesk, and that got the bad guys in. And then the bad guys were able to use the automated API cross-access in order to plumb the other services, using the API at high speed to obtain more data and create problems. So basically what's happened is that enterprises that are establishing operational back ends have, like, an underground private network which is data rich. And now bad guys are breaking into that and taking advantage of these APIs in order to suck out all of the data that is normally, you know, layers deep from the front end that users see. Convenient for the people who are doing it, but boy, it magnifies the effect of a breach when one happens. And guess what? They're happening.
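One common way to blunt the kind of high-speed, API-driven harvesting Steve describes is to cap how many calls any single credential can make per time window, so one stolen support login cannot issue millions of queries unnoticed. What follows is a minimal sketch, not Discord's or Zendesk's actual controls: the `SlidingWindowLimiter` class, the credential ID, and the 100-calls-per-60-seconds budget are all hypothetical.

```python
import time
from collections import defaultdict, deque
from typing import Deque, Dict, Optional


class SlidingWindowLimiter:
    """Caps how many API calls each credential may make per time window.

    Hypothetical illustration only; not any vendor's real rate limiter.
    """

    def __init__(self, max_requests: int, window_seconds: float) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        # credential id -> timestamps of that credential's recent calls
        self._history: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, credential_id: str, now: Optional[float] = None) -> bool:
        """Return True if this credential may make another call at time `now`."""
        if now is None:
            now = time.monotonic()
        history = self._history[credential_id]
        # Forget calls that have aged out of the window.
        while history and now - history[0] >= self.window_seconds:
            history.popleft()
        if len(history) >= self.max_requests:
            return False  # over budget: refuse (and, in practice, raise an alert)
        history.append(now)
        return True


limiter = SlidingWindowLimiter(max_requests=100, window_seconds=60)
# Simulate one compromised agent credential firing 150 calls in 15 seconds.
allowed = sum(limiter.allow("agent-42", now=i * 0.1) for i in range(150))
print(allowed)  # 100
```

Tracking each credential separately is the design choice that matters here: a legitimate agent rarely approaches the budget, while bulk extraction through one account hits it almost immediately.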

Steve Gibson [00:49:29]:

And yeah, maybe half a million government IDs. And as you clued in on, and as we mentioned before, why are the age verification records being kept?
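Steve's question points at a simple data-minimization pattern: check the ID, keep only the outcome, and never store the image. The sketch below is a minimal illustration under stated assumptions. `read_birthdate` is a hypothetical stand-in for whatever ID-reading step a vendor uses, 18 is used as the adult threshold, and none of this is Discord's or its vendor's actual process.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable


@dataclass(frozen=True)
class AgeCheckResult:
    user_id: str
    is_adult: bool      # the only fact the service actually needs to keep
    checked_on: date


def age_on(birthdate: date, today: date) -> int:
    """Whole years elapsed, minus one if this year's birthday hasn't arrived yet."""
    before_birthday = (today.month, today.day) < (birthdate.month, birthdate.day)
    return today.year - birthdate.year - int(before_birthday)


def verify_then_forget(user_id: str, id_photo: bytes,
                       read_birthdate: Callable[[bytes], date],
                       today: date) -> AgeCheckResult:
    """Check age from an ID photo, but return (for storage) only the outcome.

    `read_birthdate` is a hypothetical ID-reading step. Nothing here writes
    `id_photo` anywhere, and the caller should not persist it either.
    """
    birthdate = read_birthdate(id_photo)
    return AgeCheckResult(user_id, age_on(birthdate, today) >= 18, today)
```

Because the stored record has no field for the photo or even the birthdate, a later breach of the support system would expose only a yes/no flag.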

Leo Laporte [00:49:40]:

That's just lazy. That's just lazy.

Steve Gibson [00:49:42]:

Well, and it's also data aggregation fever. Yeah, I mean, it's like, oh, the more data we have, you know, who knows what we'll be able to do with this in the future, right? So why delete what might be of some value to us? Yikes. Okay, so I suppose it was only a matter of time before Microsoft would decide to move its GitHub property over to their own Azure cloud infrastructure. But the details behind the move will likely be of interest to many of our listeners. The publication The New Stack provided the background for this move. They wrote: After acquiring GitHub in 2018, Microsoft mostly let the developer platform run autonomously. But in recent months that's changed. With GitHub CEO Thomas Dohmke leaving the company this August and GitHub being folded more deeply into Microsoft's organizational structure, GitHub lost that independence. Now, according to internal GitHub documents The New Stack has seen, the next step of this deeper integration into Microsoft's structure is moving all of GitHub's infrastructure to Azure, even at the cost of delaying work on new features.

Steve Gibson [00:51:21]:

In a message to GitHub staff, CTO Vladimir Fedorov notes that GitHub is constrained on capacity in its Virginia data center. He writes, quote, It's existential for us to keep up with the demands of AI and Copilot. (Well, of course, Leo, you know, if you're going to have AI and Copilot, you've got to move to a facility that's big enough for that.) He said, which are changing how people use GitHub. The plan, he writes, is for GitHub to completely move out of its own data centers in 24 months. Quote, This means we have 18 months to execute with a six-month buffer, Fedorov's memo says. He acknowledges that since any migration of this scope will have to run in parallel on both the new and old infrastructure for at least six months, the team realistically needs to get this work done in the next 12 months, so during 2026. To do so, he's asking GitHub's teams to focus on moving to Azure over virtually everything else. Fedorov wrote:
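Fedorov's timeline comes down to two subtractions. Written out, with symbols introduced here purely for illustration (T_total is the 24-month deadline, T_buffer the six-month cushion, T_parallel the minimum period the old and new infrastructure must run side by side):

```latex
\begin{aligned}
T_{\text{exec}}  &= T_{\text{total}} - T_{\text{buffer}} = 24 - 6 = 18\ \text{months}\\
T_{\text{build}} &\le T_{\text{exec}} - T_{\text{parallel}} = 18 - 6 = 12\ \text{months}
\end{aligned}
```

Because the parallel run is "at least" six months, the 12-month figure is an upper bound on the time available for the migration work itself.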

Steve Gibson [00:52:40]:

Quote, We will be asking teams to delay feature work to focus on moving GitHub. We have a small opportunity window where we can delay feature work to focus, and we need to make that window as short as possible. While GitHub had previously started work on migrating parts of its service to Azure, they write, our understanding is that these migrations have been halting and sometimes failed. There are some projects, like its data residency initiative, internally referred to as Project Proxima, which will allow GitHub Enterprise users to store all of their code in Europe, that already solely use Azure's local cloud regions. Fedorov writes, We have to do this. It's existential for GitHub to have the ability to scale to meet the demands of AI and Copilot, and Azure is our path forward. We have been incrementally using more Azure capacity in places like Actions, Search, Edge Sites and Proxima, but the time has come to go all in on this move and finish it. Unquote. The New Stack said:

Steve Gibson [00:54:03]:

GitHub has recently seen more outages, in part because its central data center in Virginia is resource constrained and running into scaling issues. AI agents are part of the problem. But it's our understanding that some GitHub employees are concerned about this migration because GitHub's MySQL clusters, which form the backbone of the service and run on bare-metal servers, won't easily make the move to Azure and could lead to even more outages going forward. In a statement, a GitHub spokesperson confirmed our reporting and told us: GitHub is migrating to Azure over the next 24 months because we believe it's the right move for our community and our teams. We need to scale faster to meet the explosive growth in developer activity and AI-powered workflows, and our current infrastructure is hitting its limits. We're prioritizing this work now because it unlocks everything else for us. Availability is job one, and this migration ensures GitHub remains the fast, reliable platform developers depend on, while positioning us to build more, ship more, and scale without limits. This is about ensuring GitHub can grow with its community at the speed and scale the future demands. For open source developers, having GitHub linked even closer to Microsoft and Azure may also be a problem, though for the most part, some of the recent outages and rate limits developers have been facing have been the bigger issue for the service. Microsoft has long been a good steward of GitHub's fortunes, but in the end, no good service can escape the internal politics of a giant machine like Microsoft, where executives will always want to increase the size of their fiefdoms.

Steve Gibson [00:56:12]:

So to me it makes sense for Microsoft, but it also sounds as though it's going to be more easily said than done. You know, the thing about unforeseen consequences is that they're unforeseen. And outages for any service such as GitHub, upon which so much depends, are going to be a big problem. But no one sees another way. So I have the feeling that some future Security Now podcasts will be reporting on the consequences of this move. And boy, if moving to Azure gets screwed up from a security standpoint, that's going to be a big nightmare for GitHub, because that's not something anyone wants to see happening. So, I've been noting that the proper place for consumers to specify how they would like the Internet to treat them is their browser. That was what I loved so much about the original DNT, the Do Not Track beacon: with just the flip of a switch, just once, a user could configure their web browser to always append a DNT header to every Internet resource request.

Steve Gibson [00:57:40]:

And had anyone ever cared to honor that request, which of course was the big problem, that would have been their one-and-done prohibition against tracking. Because this broad concept has merit, the newer incarnation of DNT is GPC, the Global Privacy Control. And remember, globalprivacycontrol.org is a site you can go to, and right up at the top of the page you are notified whether your browser is broadcasting the GPC signal saying no, thank you. But even though the GPC signal has been around for quite a while, today only the Brave, DuckDuckGo and Tor browsers broadcast that signal by default. Firefox has supported GPC since its release 95, but it needs to be turned on. And of course I went over to globalprivacycontrol.org with Firefox, and yep, right up at the top I get a little green light saying your browser is GPC enabled. Sadly, and perhaps not surprisingly, there's no support for GPC from the various other Chromium-based browsers: Chrome, Edge, Vivaldi and Opera.
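Like DNT before it, GPC travels as an HTTP request header: a GPC-enabled browser sends `Sec-GPC: 1` with its requests (and exposes the same preference to scripts as `navigator.globalPrivacyControl`). Here is a minimal server-side sketch of how a site could detect both signals, assuming the request headers arrive as a plain dictionary; the `privacy_signals` function is hypothetical and not part of any particular web framework.

```python
from typing import Dict, Mapping


def privacy_signals(headers: Mapping[str, str]) -> Dict[str, bool]:
    """Report which opt-out signals an incoming HTTP request carries.

    HTTP header names are case-insensitive, so they are lower-cased first.
    """
    normalized = {name.lower(): value.strip() for name, value in headers.items()}
    return {
        # Global Privacy Control: enabled browsers send "Sec-GPC: 1".
        "gpc": normalized.get("sec-gpc") == "1",
        # Legacy Do Not Track: "DNT: 1" meant "please don't track me".
        "dnt": normalized.get("dnt") == "1",
    }


print(privacy_signals({"Sec-GPC": "1", "User-Agent": "Firefox"}))
# prints {'gpc': True, 'dnt': False}
```

Detecting the header is the easy part. As with DNT, what matters is whether the site then actually suppresses selling or sharing that visitor's data.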

Steve Gibson [00:59:21]:

Anyone wishing to emit the GPC signal from any Chromium-based browser other than Brave will need to install an add-on. And there's also been no sign of GPC from Safari, which I find kind of surprising. Okay, so all of that makes news because of California's new legislation, which Governor Gavin Newsom signed last Wednesday. The Record reported: California Governor Gavin Newsom on Wednesday signed a bill which requires (that's right, requires) web browsers to make it easier for Californians to opt out of allowing third parties to sell their data. The California Consumer Privacy Act, signed in 2018, gave Californians the right to send opt-out signals, but major browsers have not had to make opt-outs simple to use. The bill signed Wednesday requires browsers to set up an easy-to-find mechanism that lets Californians opt out with the push of a button instead of having to do so repeatedly when visiting individual websites. Privacy and consumer rights advocates have been nervously waiting for Newsom to sign the bill, which passed the California legislature on September 11th. This is the first law of its kind in the country.

Steve Gibson [01:00:56]:

The governor vetoed a similar but broader bill last year, which also applied to mobile operating systems. Matt Schwartz, a policy analyst at Consumer Reports, said, quote, These signals are going to be available to millions more people, and it's going to be much easier for them to opt out, unquote. Until now, Schwartz said, individuals who want to use a universal opt-out have had to download third-party browser extensions or use a privacy-protective browser (meaning Brave, DuckDuckGo or Tor, or Firefox if you flip the switch on). Other bills signed by Newsom on Wednesday also give Californians important data privacy rights. One of them requires social media companies to make it easy to cancel accounts and mandates that cancellation lead to full deletion of consumers' data. A second bolsters the state's data broker registration law by giving consumers more information about what personal data is collected by data brokers and who can obtain it. So I did some additional research and found that this was measure AB 566, relating to opt-out preference signals. Unfortunately, it appears that we're not going to be getting it for another 14 months, since the new law doesn't take effect until January 1, 2027.

Steve Gibson [01:02:25]:

But at that time, all web browsers will need to include functionality for Californians to send an opt-out preference signal to businesses they visit online through the browser. The law follows the California Privacy Protection Agency's (CPPA) announcement of a joint investigative sweep with privacy enforcers in Colorado and Connecticut to investigate potential non-compliance with Global Privacy Control, the GPC signal. So at least we have some progress. Chromium browsers will need to get with the GPC plan, which basically means that the Chromium core is going to have to support it, as will Apple's Safari browser. And once we have GPC available, privacy enforcers will then be able to start investigating who is and who is not honoring the clear preference setting that all browsers will be sending. As we saw with Do Not Track, which never got enforcement, just having a GPC signal means nothing if there's no penalty for ignoring it. And while we're at it, how about we also allow our browsers to send a cookie acceptance preference signal, so that we can also dispense with all of those ridiculous cookie permission pop-ups? That would be a step forward for the world's user interfaces.

Steve Gibson [01:03:57]:

Anyway, it's progress. We have the technology, and then we have the legislation to require its use and that it actually be honored. So, you know, it takes years, but we're getting there. Last Tuesday, OpenAI posted a piece titled Disrupting Malicious Uses of AI. Their little blurb pointed to a detailed and lengthy 37-page report about their efforts to block many different abuses of their technology. Among those cited, OpenAI moved to disrupt PRC (People's Republic of China) espionage operations. Their security team banned ChatGPT accounts used by Chinese state-sponsored hackers to write spear-phishing emails. The accounts were allegedly used by groups tracked by the infosec industry as UNK_DropPitch and UTA0388.

Steve Gibson [01:05:09]:

The emails targeted Taiwan's semiconductor industry, US academia, US think tanks and organizations representing Chinese minorities. OpenAI says threat actors primarily abuse its service to improve phishing messages rather than write malware, which is also what the threat intel company Intel 471 has observed. Okay, now, this is certainly a good thing for them to do. We've noted how phishing email is no longer obviously grammar-impaired, basically removing the first obvious sign that you should hit delete instead of bothering to even read the nonsense it's spewing. But I suppose I'm not hugely impressed, because OpenAI was likely being used only because it was among the lowest-hanging of the myriad of available fruit. There are so many other sources of the same or similar generative AI assistance. I mean, the threat actors could even spin up their own, as we know. This feels like a battle that will always be lost. It seems to me that there's just no way that AI is not now going to always be used to improve the quality of phishing emails.

Steve Gibson [01:06:42]:

Regardless of what barriers any of the commercial providers put up, there will always be alternatives available. So I guess I'm not hugely impressed. It would be difficult to find a better example of the need to continue supporting website code long past its prime than the fact that Microsoft continues, believe it or not, to need to offer the option to reload very old web pages under its creaky old IE mode. It's true, and in fact, this email went out yesterday afternoon, and I have already heard from one of our listeners who said he just the other day encountered an instance where some government websites would not run under Edge, and he had to switch to IE mode, and then the page rendered. So they're still out there. What's also true, unfortunately, is that IE's old Chakra JavaScript interpreter contains known exploitable flaws that bad guys want access to. We're talking about this ancient history today because it's apparently less ancient than we might hope. IE mode is still being exploited, to the point that Microsoft's most recent iteration of Edge has removed all of the easy-to-click buttons from the browser's UI.

Steve Gibson [01:08:34]:

An unknown threat actor has been tricking Microsoft Edge users into enabling Internet Explorer mode in Edge to run their malicious code in the user's browser in order to take over their machine. These mysterious attacks have been conducted since at least August of this year, according to the Microsoft Edge security team. IE's legacy mode, or IE mode, is a separate website execution environment within Edge. It works by reloading a web page, but running the reloaded page and its code inside the old Internet Explorer engines. And as we know, Microsoft included IE mode in Edge when it retired its official IE predecessor. IE 11, I think, was the last version of IE, but there was still some code that was dependent upon it. So to access a site under IE mode previously, users would have to press a button or choose a menu option to reload the page from Edge into the old IE execution environment. Microsoft has said that it has received credible reports that hackers were using clones of legitimate websites to instruct users to reload the cloned pages in Edge IE mode.

Leo Laporte [01:10:05]:

Oh, God.

Steve Gibson [01:10:07]:

Yep, yep.

Leo Laporte [01:10:08]:

And why would they do that, Steve, why?

Steve Gibson [01:10:11]:

Well, when that happened, the malicious site would execute an exploit chain targeting IE mode's creaky old Chakra JavaScript engine, which has bugs that have never been patched. The exploit chain contained a Chakra zero-day that allowed them to run malicious code, and a second exploit that allowed them to elevate privileges and take over the entire user platform.

Leo Laporte [01:10:41]:

Now, that's actually pretty ingenious, though.

Steve Gibson [01:10:44]:

It is. I mean, if you think about it, again, if there's a way, these guys will find it. So in response, Microsoft did not assign a CVE nor release a patch. Instead, they overhauled the entire IE mode access. The Edge security team has completely removed all the dedicated buttons and menu items that could once easily refresh and relaunch a website under IE mode. This includes the dedicated toolbar button, the context menu option, and the item under the hamburger main menu. From this point on, anyone wishing (needing, actually) to relaunch a website in IE mode will first have to go into the browser's settings and specifically enable the normally disabled feature there. They'll then need to

Steve Gibson [01:11:40]:

relaunch the browser (you know, shut it down and completely relaunch it) and manually add the URL of a website into an allow list of sites permitted to be reloaded in IE mode. So, I mean, you really have to need it that way. But it does sound like for those people who, for example, are using a government site that is old and creaky and only runs under IE mode, well, you can still do it. You just have to go in and turn it on, then relaunch the browser, then manually put that government URL into the allow list, and then you'll be able to access it. So it sure sounds as though Microsoft is none too happy that their old Internet Explorer code is still coming back to bite them. So they decided that while they still cannot safely just kill it off once and for all, at least they can make it much more difficult to use, and thus to abuse. So that's good. Leo, break time.
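The point of the new procedure is that a malicious page can no longer talk a user into IE mode with one click: three separate, deliberate steps now stand in the way. The sketch below models that gating logic. It is a hypothetical illustration, not Edge's actual code or settings; the class and method names are invented, and matching the allow list by hostname is a simplifying assumption.

```python
from typing import Optional, Set
from urllib.parse import urlsplit


class IEModeGate:
    """Hypothetical model of Edge's new opt-in path to IE mode (not Edge's code)."""

    def __init__(self) -> None:
        self.setting_enabled = False           # IE mode reload is off by default
        self.relaunched_since_enable = False
        self.allowed_hosts: Set[str] = set()   # assumption: matched by hostname

    def enable_setting(self) -> None:
        self.setting_enabled = True
        self.relaunched_since_enable = False   # takes effect only after a relaunch

    def relaunch_browser(self) -> None:
        if self.setting_enabled:
            self.relaunched_since_enable = True

    def add_to_allow_list(self, url: str) -> None:
        host = urlsplit(url).hostname
        if host:
            self.allowed_hosts.add(host)

    def may_reload_in_ie_mode(self, url: str) -> bool:
        """All three user actions must have happened for this specific site."""
        host: Optional[str] = urlsplit(url).hostname
        return (self.setting_enabled
                and self.relaunched_since_enable
                and host in self.allowed_hosts)


gate = IEModeGate()
site = "https://legacy.example.gov/forms"
gate.enable_setting()
gate.add_to_allow_list(site)
gate.relaunch_browser()
print(gate.may_reload_in_ie_mode(site))                        # True
print(gate.may_reload_in_ie_mode("https://lookalike.example.com/"))  # False
```

A cloned lure site fails the last check unless the user has personally added that clone's address to the allow list, which is exactly the friction Microsoft is counting on.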

Steve Gibson [01:12:51]:

And then we're going to look at some news that actually occurred while I was putting the show together about Salesforce customer data leakage. And we're going to get to this horrifying new Texas law that takes effect in 10 weeks that Tim Cook begged not to have.

Leo Laporte [01:13:11]:

Yeah, Apple's not thrilled about what California did either, although it's exactly what you proposed, I think, in the past, which is that the App Store asks people when they're setting up an account, are you 18 or over? Or I guess what they do is they say, is this being set up for somebody besides you? And what is the age of this child? And then it's in the phone, and there's an API that apps can query. That seems like such a sensible plan. It's what Meta wanted; Google even wanted it; OpenAI wanted it. But Apple's kind of like, well, do we have to be responsible for this?

Steve Gibson [01:13:47]:

Well, as we're going to see in a few minutes, everybody's going to get it as a result of SB 2420.

Leo Laporte [01:13:54]:

Yeah. Oh, you're going to talk about SB2420. Good.

Steve Gibson [01:13:57]:

Yes.

Leo Laporte [01:13:57]:

Okay, good.

Steve Gibson [01:13:58]:

Yep.

Leo Laporte [01:13:59]:

Coming up. But first, a word from our sponsor, Vanta. What's your 2 a.m. security worry? What keeps you up at 2 a.m.? Is it, do I have the right controls in place? Or is it, are my vendors secure? How about the really scary one: how do I get out from under these old tools and manual processes? Well, enter Vanta. Vanta automates manual work so you can stop sweating over spreadsheets, chasing audit evidence and filling out endless questionnaires. Vanta's trust management platform continuously monitors your systems, centralizes your data and simplifies your security at scale. Vanta also fits right into your workflows, using AI to streamline evidence collection, flag risks, and keep your program audit-ready all the time. Isn't that nice? With Vanta, you get everything you need to move faster, scale confidently and get back to sleep. Get started at vanta.com/securitynow. That's V-A-N-T-A.com/securitynow. Thank you, Vanta, for supporting the good work Steve's doing here at Security Now.

Leo Laporte [01:15:14]:

And you support us by going to vanta.com/securitynow. Okay, Steve.

Steve Gibson [01:15:23]:

Excuse me. Okay, here we go. Wow. So first of all, remember how I was saying that we were almost certainly going to soon be hearing news of the leaks of Salesforce customer data? Just during the time I was assembling today's show, the news broke that the first tranche of those nearly 1 billion leaked Salesforce customer records has been published. Top of the list was Qantas Airlines. And what do you know, the criminals were not dissuaded by that permanent injunction the Qantas CEO managed to obtain from Australia's Supreme Court. Joining Qantas in private database publication, we also have Vietnam Airlines, Albertsons, the Gap, Fujifilm and Engie Resources, spelled E-N-G-I-E. And in the case of Vietnam Airlines, which we're mentioning for the first time, the Scattered Lapsus$ Hunters group leaked 7.3 million details from Vietnam Airlines' customer loyalty program, which was data taken from the company's Salesforce account.

Steve Gibson [01:16:44]:

So Salesforce said, nope, we're not paying you your $3.5 million, which, you know, doesn't really seem like that much for all the data of 39 of their customers. But what are you going to do? Again, you know, they made a big stink about it. Everyone says don't pay the bad guys, so they're not going to. But, you know, their customers' customers are paying the price. Wow. Okay, here we go.

Steve Gibson [01:17:17]:

Last Wednesday, Apple's developer portal posted their position and response to the Texas Senate Bill SB 2420 (that's what SB stands for, Senate Bill), whose official title is relating to the regulation of platforms for the sale and distribution of software applications for mobile devices. Okay, so there's been a lot of child-protective legislation activity in Texas recently, so things could get a little bit confusing there. So first, to clarify, this Senate Bill 2420 legislation, which Apple is responding to, is not the same bill that recently caused Pornhub to go dark across Texas. Pornhub suspended its services in Texas because of a Texas law that was passed two years ago, back in 2023. That was House Bill HB 1181. That bill was immediately challenged in the courts, and it wound up surviving. That's the law that requires websites hosting a majority of content that's inappropriate for minors to proactively verify the age of every single visitor before they are allowed to view the site's contents, lacking any accepted privacy-enforcing means to do that.

Steve Gibson [01:18:49]:

And given that we're now seeing tens of thousands of extremely personal government ID scans falling into criminal hands during data breaches, which appears to be inevitable, Pornhub probably, correctly, determined that the percentage of people who would be willing to be fully de-anonymized during their visits to their site would not be appreciable. So just closing their doors to Texans was the right solution for them. It's worth mentioning that this Texas legislation, HB 1181, now having survived the courts all the way up to and including the Supreme Court, is likely to become a model for other states' legislation, which will therefore not need to struggle for adoption and enforcement, because Texas already did that, you know, verifying that the legislation passed the courts' scrutiny. So we might expect to see many other states driving adult content websites out of their jurisdictions simply by following Texas's lead. Okay, but back to Texas's newer legislation, which is Senate Bill SB 2420. While its official title is, quote, relating to the regulation of platforms for the sale and distribution of software applications for mobile devices, unquote, it is commonly referred to as the App Store Accountability Act. It regulates how app stores and software application developers operating in Texas must verify users' ages and handle purchases or downloads by any minors in the state. The legislation states that the owners and operators of app stores, meaning Apple and Google, right, must verify a user's age via a, quote, commercially reasonable method, unquote, when an account is created.

Steve Gibson [01:20:56]:

If a user is a minor, under 18 as defined in the bill, the legislation requires that the minor's account be tied to, you know, affiliated with, a parent's or guardian's account. Additionally, each download or purchase by a minor must first receive explicit parental consent. And I'll expand on some of these points in detail from the language of the law. The store must also clearly and conspicuously display each app's age rating and the content elements that were used to derive that rating. App stores must limit their data collection. And let me just reinforce that this is going into effect in 10 weeks. This is not, you know, 2029 or something.

Steve Gibson [01:21:48]:

This is January 1, 2026. This is happening. And I'll preempt something I mention later: there's been no legal challenge filed against this, probably because, after what happened with HB 1181, no one's bothering. So this is happening.

Steve Gibson [01:22:09]:

App stores must limit their data collection to what is needed for obtaining age verification, consent, and record keeping. And any violations of any of these provisions, such as attempts to obtain blanket consent or making any misrepresentations, can be treated as deceptive trade practices. Okay, so that's on the App Store side. Software developers have obligations under this new legislation as well. Developers must assign an age rating to each of their apps, including any in-app purchases, and those age assignments must be consistent with the age categories specified in the bill. Developers must also substantiate their age assignments by providing the app content elements that led to that rating. They must notify app stores of any significant changes to the app's terms, its privacy policy, its monetization or features, or any change in the app's resulting rating. Apps must use the age and consent information from the App Store to enforce age restrictions, comply with the law, and enable any safety features.

Steve Gibson [01:23:26]:

Apps must delete any personal data received for age verification once such verification is completed. So that's very good. That gives us what we didn't see happening with the Discord/Zendesk deal. And any violations, such as misrepresenting the app's age rating, enforcing terms against minors without consent, wrongful disclosure of personal data, and such, are actionable. However, there are some liability protections: if the developer follows widely adopted industry standards in good faith, this new Texas law states that they may be exempt from liability, so mistakes are understood. Okay, so until we had this new Texas law, which was signed into law by Texas Governor Greg Abbott and is scheduled to take effect on January 1, 2026, so in less than three months, App Store and application age and content ratings were advisory only. App stores run by Apple and Google were assigning age ratings to apps using self-regulatory frameworks such as IARC, the International Age Rating Coalition system. Developers answered questionnaires about their apps, and the stores would generate an age rating such as 12+ or Teen and display those indications.

Steve Gibson [01:24:58]:

But all of those were informational only. Nothing prevented app acquisition and download by younger users. That is the big thing that SB 2420 changes, or one of them, at least. Now app developers are legally required to assign an age rating using criteria defined by this Texas law. App stores must display those ratings and the specific content descriptors. And these ratings now carry legal weight and must be enforced by app store technology to trigger parental consent requirements and to enable or disable the app's download. And any developer who misrates an app or fails to update ratings after material changes can face liability under deceptive trade practice law. Naturally, Apple, which sees privacy concerns hiding around every corner, has not been happy about any of this.

Steve Gibson [01:26:13]:

And Tim Cook is believed to have called Greg Abbott to argue against the provisions of the legislation, asking Abbott to either modify or completely veto the bill. Those pleas apparently fell on deaf ears. Apple's concerns have been that the new law would require sensitive, personally identifying information to be collected from any Texan who wants to download apps, even innocuous ones such as weather apps, sports scores, etc.

Leo Laporte [01:26:45]:

Because everything's going to be designated, even if it's a weather app. I'm going to designate it adults only because I don't want to take any chances. Right? Almost all apps will be adults only.

Steve Gibson [01:26:55]:

And, but get this, Leo: even if it says age 2 and above, it still requires...

Leo Laporte [01:27:02]:

Got it.

Steve Gibson [01:27:03]:

Anything. Yes, anything. Any app downloaded by a minor, regardless of its rating, requires parental consent. I mean, parents are going to get fed up having to say yes, fine, you can download the sports scores app. Anyway, Apple has warned of downstream data sharing, since SB 2420 requires app stores to implicitly share age and parental consent status with developers. Now, of course, the bottom line is that Apple really, really, really doesn't want to do this or get involved in any way with any of this.

Steve Gibson [01:27:49]:

However, that doesn't feel like a practical long-term position for Apple to be taking, given the legislation we're seeing. You mentioned California. That's, like, generous compared to what Texas is doing in 10 weeks. Everything we see everywhere evidences a growing awareness of age-related Internet content control. Okay, so I started out noting that last Wednesday Apple's developer portal posted its position and response to these new Texas requirements. Apple's posting was titled "New requirements for apps available in Texas," and Apple writes: "Beginning January 1, 2026, a new state law in Texas, SB2420, introduces age assurance requirements for app marketplaces and developers. While we share the goal of strengthening kids' online safety, we're concerned that SB2420 impacts the privacy of users by requiring the collection of sensitive, personally identifiable information to download any app, even if a user simply wants to check the weather or sports scores. Apple will continue to provide parents and developers with industry-leading tools that help enhance child safety while safeguarding privacy within the constraints of the law."

Steve Gibson [01:29:25]:

"Once this law goes into effect, users located in Texas who create a new Apple account..." And I'll come back to this, but this is interesting, Leo: who create a new Apple account. There is no retroactive language in this legislation, which is really interesting. Apple said those users "will be required to confirm whether they are 18 years or older." Again, required to confirm whether they're 18 years or older. That means everybody. I mean, all adults. "All new Apple accounts for users under the age of 18 will be required to join a Family Sharing group, and parents or guardians will need to provide consent for all App Store downloads, app purchases, and transactions using Apple's In-App Purchase system by the minor. This will also impact developers, who will need to adopt new capabilities and modify behavior within their apps to meet their obligations under the law." In 10 weeks. "Similar requirements will come into effect later next year in Utah and Louisiana."
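To make the gating logic concrete, here is a minimal Python sketch of the rules as Apple summarizes them for new Texas accounts. Everything in it (the `Account` class, `may_download`, and their parameters) is hypothetical and illustrative only; it is not Apple's API or the statute's text. It models just the points stated here: a new account must confirm whether the user is 18 or older, an under-18 account must belong to a Family Sharing group, and every download by a minor needs parental consent regardless of the app's rating.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Account:
    # Hypothetical model of a new Texas Apple account; not an Apple type.
    confirmed_adult: Optional[bool]  # None means the 18+ question hasn't been answered yet
    in_family_group: bool = False    # under-18 accounts must join a Family Sharing group


def may_download(account: Account, app_rating: int, parent_approved: bool) -> bool:
    """Return True if a download may proceed under the rules as summarized.

    app_rating is intentionally unused: per the discussion, a minor needs
    parental consent for any app, even one rated for the youngest users.
    """
    if account.confirmed_adult is None:
        # A new account can't proceed until the user confirms whether they're 18+.
        raise ValueError("new accounts must confirm whether the user is 18 or older")
    if account.confirmed_adult:
        return True
    if not account.in_family_group:
        # A minor outside a Family Sharing group has no one to grant consent.
        return False
    return parent_approved


kid = Account(confirmed_adult=False, in_family_group=True)
print(may_download(kid, app_rating=4, parent_approved=False))  # a low rating doesn't help
```

The rating parameter is kept in the signature on purpose: it shows that, under the rules as described, the age rating is displayed and enforced for content but does not waive the consent step for a minor.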

Steve Gibson [01:30:42]:

So Texas alone for now, then here come Utah and Louisiana. And just wait till Mississippi gets wind of this and figures out that they can do the same thing. Apple continued: "Today we're sharing details about updates we're making and the tools we'll provide to help developers meet these new requirements. To assist developers in meeting their obligations in a privacy-preserving way, we'll introduce capabilities to help them obtain users' age categories and manage significant changes as required by Texas state law. The Declared Age Range API is available to implement now and will be updated in the coming months..." I hope it's in weeks. "...to provide the required age categories for new account users in Texas. And new APIs launching later this year..." meaning in time, this year, "...will enable developers, when they determine a significant change is made to their app, to invoke a system experience to allow the user to request that parental consent be re-obtained. Additionally, parents will be able to revoke consent for a minor continuing to use an app. More details, including additional technical documentation, will be released later this fall."

Steve Gibson [01:32:11]:

In other words, Apple is scrambling to provide the APIs that developers will need in order to be compliant with this Texas law. They said: "We know protecting kids from online threats requires constant vigilance and effort. That's why we will continue to create industry-leading features to help developers provide age-appropriate experiences and safeguard privacy for their apps and games, and empower parents with a comprehensive set of tools to help keep their kids safe online." Okay, so I went over to check out Apple's Declared Age Range API. The page is titled "Declared Age Range: Create age-appropriate experiences in your app by asking people to share their age range." Okay, I was struck by the language "by asking people to share," and by the name of the API itself, which is, after all, the Declared Age Range API.

Steve Gibson [01:33:20]:

What follows, then, is the overview of the API on the page. Here's the overview of the Declared Age Range API. It says: "Use the Declared Age Range API to request people to share their age range with your app. For children in a Family Sharing group, a parent or guardian, or the family organizer, can decide whether to always share a child's age information with your app, ask the child every time, or never share their age information. Along with an age range, the system returns an AgeRangeService.AgeRangeDeclaration object for the age range a person provides."

Leo Laporte [01:34:08]:

This is Apple's compliance with the California law because it doesn't work with the Texas law.

Steve Gibson [01:34:14]:

Right, right, right.

Leo Laporte [01:34:16]:

So the California law does exactly that. It says that a maker of an app store or operating system (by the way, the California law would apply to Linux) has to ask for the age of the user and then have an API that returns that age range. And it's like 0 to 5, right?

Steve Gibson [01:34:35]:

It's like 4, 5 to 10, 10.

Leo Laporte [01:34:37]:

to 13, 13 to 16, 16 to 18, or 18 and over. But that satisfies California. I mean, there's no way this satisfies what Texas is asking for.

Steve Gibson [01:34:49]:

No, no, just wait till we get to the specifics. Yeah. But even on Apple's page, in a vividly highlighted callout box labeled "Important," the page states: Data from the Declared Age Range API is based on information declared by an end user...

Leo Laporte [01:35:08]:

Right.

Steve Gibson [01:35:08]:

...or their parent or guardian. You are solely responsible for ensuring compliance with associated laws or regulations that may apply to your app. So, you know, Apple is saying we're not responsible for verifying what is declared as the user's age. So, exactly to your point, Leo, none of this is useful for Texas. Right. And so, you know, this is Apple saying that all they're doing here is functioning as a middleman to pass along whatever age declaration the user might assert, and that the app's developer in this instance remains responsible for ensuring compliance with associated laws or regulations. So this, of course, raises the question: what does the forthcoming Texas SB 2420 law require in that regard? We know that in other jurisdictions and contexts, self-declaration of age is specifically regarded as insufficient. So what about Texas?

Leo Laporte [01:36:19]:

Because kids are going to lie, they're going to say.

Steve Gibson [01:36:24]: