Security Now 1028 transcript

Please be advised this transcript is AI-generated and may not be word for word. Time codes refer to the approximate times in the ad-supported version of the show

0:00:01 - Leo Laporte

It's time for Security Now. Steve Gibson is here. A great program for you: the results from Pwn2Own 2025. Millions of dollars at stake. The rising abuse of a graphics format that actually could really be problematic. Using OpenAI's models to find zero-day flaws, a technique that's definitely going to be on the rise. All that and more coming up next on Security Now. Podcasts you love, from people you trust. This is TWiT. This is Security Now with Steve Gibson, episode 1028, recorded Tuesday, June 3rd, 2025: AI Vulnerability Hunting. It's time for Security Now. Woo-hoo, the show where we cover, I don't know, celebrating insecurity since 1864. No, the show where we cover your privacy, your security, how computers work, a little sci-fi and some health news too, with this guy right here, Steve Gibson of GRC.com. The things that interest us, basically. Yeah, but we stay on topic, so I like it being wide-ranging.

I think people enjoy it. It's about your brains, and you have such good brains we want to dine on them. Of the three other classic sci-fi movies, and by classic I mean 1955, 1956, 1970.

0:01:49 - Steve Gibson

They're movies that everybody knows. But if you don't, then you have an assignment, because, I mean, those of us who know, know about the Krell and know about monsters from the id, folks.

0:02:11 - Leo Laporte

These are terrible movies.

0:02:12 - Steve Gibson

Oh, they're fantastic movies. Oh, my goodness.

0:02:19 - Leo Laporte

They're cheesy. I mean, if you have the right spirit, I guess it's fun to watch them. I mean, it's not like 2001: A Space Odyssey. Way better? Okay, no. Okay. Stay tuned, you're going to learn what Steve's picks are. We will be talking about that.

0:02:39 - Steve Gibson

But, as I promised last week, because we tackled a big topic, there were some things I didn't get to. We're getting to them this week. We're going to talk about the Pwn2Own 2025 hacking competition which, for the first time, was held in Berlin. We've got the results from that, from a couple weeks ago. PayPal is seeking a newly registered domains patent, which I think is very clever, but I worry that they're patenting it, because they shouldn't. We've got a really cool inside look at a long-term expert iOS jailbreaker who has given up, and we're going to look at why. Also, the rising abuse of SVG, Scalable Vector Graphics, images, and who put this spec together and why, because it's insane.

We've got some interesting feedback from our listeners. As I said, I will touch on, and Leo and I will discuss, our varying views on a couple of classic sci-fi movies that I think are just fantastic. Then we're going to take a deep dive into how OpenAI's o3 model discovered a previously unknown, remotely executable zero-day exploit in the Linux kernel. Oh my goodness. And what this means for AI vulnerability hunting, which is the title of today's podcast. Wow. So it's a guy who did this. I mean, he understands AI. He's been interested in vulnerability hunting and development. Well, I don't want to step on the news, but it's a really, really, really interesting story and, of course, we have a picture of the week that is one for the history books.

Uh, I think everyone is going to get a big kick out of it, so, uh, yeah, if the good guys can discover vulnerabilities with AI, so can the bad guys.

0:05:01 - Leo Laporte

So can the bad guys.

0:05:03 - Steve Gibson

And I do make the point that if the AI is used before the release of the software, then there won't be vulnerabilities for the bad guys to find Good point. So I realized for a while I was thinking, oh, this is bad, I mean that there's a symmetry here.

0:05:21 - Leo Laporte

But no, actually, because you don't have to let it go until the AI has had a chance to go through it. So yeah, I've been using Claude Code and AI to write tests, which I think is a really good use of AI, because it's an independent eye looking at your code.

0:05:39 - Steve Gibson

That's exactly what I was going to say.

0:05:40 - Leo Laporte

Yes.

0:05:41 - Steve Gibson

I mean, the reason I don't test my own code, and I've got a whole bunch of neat guys who are pounding on it, is I can't. I know how it works. I don't press the button at the wrong time. I don't want to cause a race condition. If a guy presses it, I go, why did you do that?

0:05:58 - Leo Laporte

Well, it was there. Oh, in the middle of... oh my God. All right, we'll get to that in a moment. I always look forward to this every Tuesday. I'm glad you're here, and I know you're glad you're here too. Our show today is brought to you by another company I'm very glad to have here, our sponsor Material. Actually, if you get Material, you'll be glad you have it.

It's a multi-layered detection and response toolkit for email. Email, of course, is the number one vector for bad things happening, phishing and so forth. And if you're a cloud-based business (almost everybody is, we are certainly), your cloud office isn't just another app. It's the heart and soul of your business. The problem is, traditional security tools assume everything's on-prem, right? And that means you're vulnerable. They treat email and cloud documents as afterthoughts, so your most critical assets are exposed without any protection. Not if you have Material.

Material transforms cloud workspace protection with a revolutionary approach that goes beyond traditional security paradigms. Dedicated security for modern workspaces ensures purpose-built protection specifically designed for Google Workspace and Microsoft 365. Now what's cool about this is they can do this without forcing you to pass everything through their filters, because both Microsoft 365 and Google Workspace provide very capable APIs that allow them to protect you without you giving up your privacy. Complete protection across the security lifecycle: that means defending your organization before, during and after potential incidents, not just attempting to prevent them. Material allows you to scale your security without scaling your team, using intelligent automation to multiply your security team's impact. They provide security that respects how people work and eliminates that impossible choice, or seemingly impossible choice, between robust protection and productivity. It's not a trade-off anymore, not with Material. They deliver comprehensive threat defense four different ways, four critical capabilities. They've got phishing protection. Of course that's kind of table stakes, but they're using AI, just like we were talking about: AI-powered detection that identifies sophisticated attacks. It's not looking for something it's seen before. It's looking for attacks, and it's very good at this. They also help you with data loss prevention: intelligent content protection and sensitive data management. You also get posture management, so you identify misconfigurations and risky user behaviors, and identity protection: comprehensive control over access and verification. Those are kind of the four key areas.

The head of security at Figma, they use Material. He said this: it's rare to find a modern security tool with a pleasant, usable UI. Being at Figma, we obviously are attracted to well-designed interfaces. Material's interface was just so smooth, so slick, it doesn't get in your way. That's really the point. You no longer have to give up productivity for protection. From automatic threat investigation to custom detection workflows, Material converts manual security tasks into streamlined, intelligent processes. They provide visibility across your entire digital workspace, allowing security professionals to focus on strategic initiatives instead of endless alert triage. It's a partner your team will love working with. Protect your digital workspace, empower your team and secure your future with Material. Visit material.security to learn more and book a demo. That's material.security. That's all you need, material.security. We thank them so much for supporting Steve and Security Now.

0:09:54 - Steve Gibson

You started off talking about email, which reminded me of something that I wanted to say. Yesterday evening, 17,568 pieces of Security Now email attempted to go out. I looked a little bit later and 650-some had bounced, which never happens.

0:10:21 - Leo Laporte

That's a low bounce rate. That's not terrible.

0:10:23 - Steve Gibson

It's normally five, because the system's working really well, and so forth. Anyway, I thought, what the what, as you would say? And I checked. For a reason I have no explanation for, Yahoo decided that we were a bad email server. So, some Cox? Because, of course, you know, Cox sold themselves to Yahoo. So there were some Cox, but mostly Yahoo. So I just wanted to let our listeners know I'm sorry if you're a Yahoo email subscriber and you did not receive the Security Now show notes. I tried to send them, you know.

0:11:04 - Leo Laporte

Your ISP wouldn't let me. 17,000 other people got the show notes.

0:11:09 - Leo Laporte

Well, I know, because last night Lisa said, oh, Steve's working hard. She got the email. Now, I get it, but I don't look at it. That's right.

0:11:19 - Steve Gibson

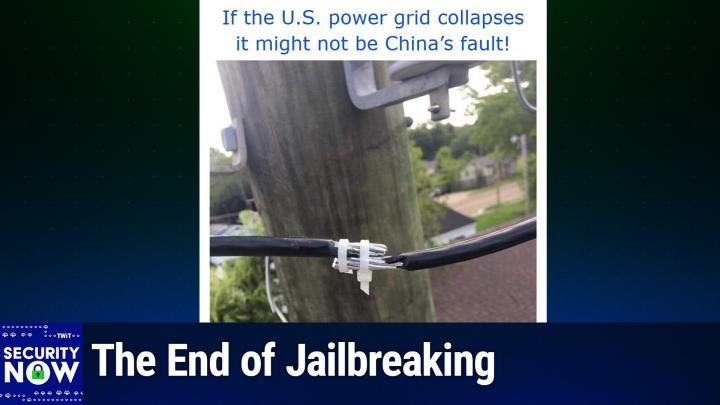

I don't want to see the picture. And Leo, I have to say, for this one there could only have been one caption. I gave this picture the caption: if the US power grid collapses, it might not be China's fault.

0:11:35 - Leo Laporte

Oh, I love these fun with power pictures.

0:11:38 - Steve Gibson

Let me scroll up, because I haven't seen it yet. If the US power grid collapses, it might not be China's fault.

0:11:46 - Leo Laporte

Oh my God, that's an interesting way to make a splice, do you think that would? I guess it would work.

0:11:58 - Steve Gibson

Oh well, as long as you don't have a windstorm or something.

0:12:00 - Leo Laporte

Now, actually, maybe a little electrical tape around it, just, you know, for extra support.

0:12:07 - Steve Gibson

Presumably this person, the lineman who did this splice, intended to come back soon.

0:12:14 - Leo Laporte

We don't really know anything about the story here. You know, I like, though, that he was careful to trim the tails of the zip ties. Yeah, and we've got two zip ties. Oh, there's another one, oh look.

0:12:29 - Steve Gibson

No, no, I meant there are two up there on the main splice.

0:12:32 - Leo Laporte

Oh, yeah, yeah, yeah, yeah, yeah. Well, that's double protection.

0:12:36 - Steve Gibson

Yeah, yeah, that's right, because, you know, one tie wrap's not good enough. You need to do two, yeah.

0:12:42 - Leo Laporte

Wow. So those who aren't able to see Go ahead. You describe it.

0:12:47 - Steve Gibson

Yeah, yeah, for someone who is unable to see this, we have a power line. We can tell because it's sort of in the background is a telephone pole, a power pole with power lines, a house in the background you hope that they've got their fire insurance paid up and a naked, bare splice of two cables. Where about maybe an inch and a half of each of the cables? The rubber insulation has been cut off and they're put next to each other and then held in place with a pair of white plastic zip ties. So now I actually think that this may be ground wires, and so they're less.

Oh, that wouldn't be too bad, they're less, it's less of a concern than you might otherwise think, but, boy, there's really no excuse for something that is certainly slipshod at best.

0:13:54 - Leo Laporte

Well, has anybody ever used zip ties? I mean, that could slip out easily and it's not protected from the rain.

0:14:01 - Steve Gibson

Yeah, and there's nothing to prevent either side being pulled on, as you said, it's just going to slide right out.

0:14:10 - Leo Laporte

So anyway, I got a kick out of it. The.

0:14:12 - Steve Gibson

US power grid collapses.

0:14:15 - Leo Laporte

It's all held together with spit and chewing gum. Might be Mo, wow, yeah, okay.

0:14:23 - Steve Gibson

So last week I promised to catch us up with the results from the recent Pwn2Own hacking competition, which we've been following for the entire 20 years of this podcast. They wrote: While the Pwn2Own competition started in Vancouver in 2007, we always want to ensure we are reaching the right people with our choice of venue. Over the last few years, the OffensiveCon conference in Berlin has emerged as one of the best offensive-focused events of the year, and while CanSecWest has been a great host over the years (and our longtime listeners will remember that's where we've talked of it being held in the past, CanSecWest), it became apparent that perhaps it was time to relocate our spring event to a new home. With that, we are happy to announce that the enterprise-focused Pwn2Own event will take place on May 15th through 17th, 2025, at the OffensiveCon conference in Berlin, Germany. While this event is currently sold out, we do have tickets available for competitors, and we believe the conference will also open a few more tickets for the public too. The conference sold out its first run of tickets in under six hours, so it should be a fantastic crowd of some of the best vulnerability researchers in the world. Okay, so now, that was two and a half weeks ago.

What happened? Before I run through what happened, I want to remind everyone of the context of what we're going to hear. These are the results when today's upper-echelon, most skilled penetration hackers go up against fully patched systems. What always strikes me is that the targets here are not old junk routers past their end of life that the FBI says everybody should stop using, or should have years ago. In every case, these targets, what these guys are successfully cracking open, are fully patched, modern systems, like what we're all using right now. So for me this serves as a reminder, and this is also why my model for security is, unfortunately, Swiss cheese or a sponge, that all the evidence suggests these most skilled hackers could get in if we let them at our systems. Hopefully, most of these are local attacks on systems, not remote code exploits, so thank goodness for that. So here's what happened two and a half weeks ago in Berlin.

To keep this short, I'm just going to run through the list of things that happened. There's absolutely no chance that I could pronounce any of the names of these people, so I apologize. I'm just going to talk about the teams that they're in, because the names of their organizations are pronounceable. I didn't want to mangle their names so badly, so here's what happened, in chronological order. It was a three-day event, so we've got three days of this.

First, DEVCORE's research team used an integer overflow to escalate their privileges on Red Hat Linux, earning $20,000 and two Master of Pwn points. In other words, this was somebody who sat down at today's fully patched Red Hat Linux and got root, even though, I mean, endless effort has gone into making that not be possible. Whoops. Second, although the Summoning Team successfully demonstrated an exploit of NVIDIA Triton, the bug that they used, that they discovered independently, was also known to NVIDIA, but NVIDIA had not yet patched it. So that still qualifies, because these guys independently discovered a bug that was in the public space, so anybody's fully patched NVIDIA systems would have succumbed. That earned them $15,000 and one and a half Master of Pwn points. Star Labs SG combined a use-after-free (they use the initials UAF, and use-after-free is significant; we're going to run across this a couple of times). Unfortunately, I'm going to actually be talking about it in depth at the end of the podcast, so in fact I'm using it before I describe it, as opposed to using it after freeing it. So these guys, Star Labs SG, combined a use-after-free and an integer overflow to escalate to SYSTEM level on Windows 11. That got them $30,000 and three Master of Pwn points.

Researchers from Theori were able to escalate to root on Red Hat Linux using a different hack, with an info leak and a use-after-free. One of the bugs used was an n-day, meaning that it was known to the world but not to them at the time. But they got $15,000 and one and a half Master of Pwn points. The first ever winner of the AI category. I forgot to mention that this was... I mentioned it last week.

This is the first time that artificial intelligence was considered in scope for the Pwn2Own competition. So the first ever winner in the AI category was the Summoning Team. They successfully exploited Chroma to earn $20,000 and two Master of Pwn points. In a surprise to no one, the conference holders wrote that Marcin Wiązowski's privilege escalation on Windows 11 was confirmed. He used an out-of-bounds write to obtain SYSTEM privileges, and also obtained $30,000 for himself and three Master of Pwn points. Their enthusiasm was rewarded, as Team Prison Break (Best of the Best 13th) used an integer overflow to escape Oracle's VirtualBox VM and execute code on the underlying OS, again fully patched, you know, as current as you could have it be, and they broke out of the VM. Why?

0:22:03 - Leo Laporte

Because they wanted to, because they could. Okay, fine. But you think, well, there's another reason they did it. How much did they make out of that?

0:22:13 - Steve Gibson

$40,000, oh, and four Master of Pwn points. So, yes, they had motivation, and we'll be talking about motivation here in a minute. That's a perfect lead-in, Leo. Viettel Cyber Security, targeting NVIDIA Triton Inference Server, successfully demonstrated their exploit. NVIDIA must be a little slow in getting their updates out, because again, this is NVIDIA, and it was known to the vendor, though had not yet been patched. They earned $15,000 and one and a half Master of Pwn points. A researcher from Out Of Bounds earned $15,000 for a third-round win and three Master of Pwn points by successfully using a type confusion bug to escalate privileges on Windows 11. Star Labs used a use-after-free to perform their Docker Desktop escape and execute code on the underlying OS, so broke right out of Docker's containment and earned themselves $60,000 and six Master of Pwn points.

0:23:22 - Leo Laporte

Breaking out of VMs or Dockers seems to be the big money maker, right yeah?

0:23:27 - Steve Gibson

Well, because that's the cloud attack. I mean, everything in the cloud is VMs and containment, and so if you can get to the underlying, you know, VM in a cloud environment, that's golden. And that was just day one. FuzzingLabs exploited NVIDIA's Triton. The exploit they used was also known to the vendor. Again, NVIDIA, get with the program here, get these patches out. But that still earned them $15,000.

Viettel Cyber Security combined an auth bypass and an insecure deserialization bug to exploit Microsoft SharePoint, earning $100,000 and 10 Master of Pwn points. Star Labs SG was back with a single integer overflow to exploit VMware's ESXi, the first in Pwn2Own history, earning them $150,000 and 15 Master of Pwn points. As you said, Leo, breaking out of VMs and containment, that's where the money is, and this is an enterprise-focused competition. So that's why we're seeing VirtualBox and VMware ESXi and so forth. Palo Alto Networks researchers used an out-of-bounds write to exploit Mozilla Firefox to earn $50,000 and five Master of Pwn points. The second win in the AI category goes to the team from Wiz Research, who leveraged a use-after-free to exploit Redis, earning $40,000 and four Master of Pwn points. In the first full win against NVIDIA Triton Inference Server, researchers from Qrious Secure used a four-bug chain to exploit NVIDIA's Triton. Their unique work earned them $30,000 and three Master of Pwn points.

0:25:27 - Leo Laporte

And NVIDIA said, oh, we didn't know about that one.

0:25:33 - Steve Gibson

There's one we didn't know. And if we did, we wouldn't have patched it anyway. Yeah, right. Idiots. Viettel Cyber Security used an out-of-bounds write for their guest-to-host escape on Oracle VirtualBox. That got them $40,000. Another researcher from Star Labs SG used a use-after-free bug to escalate privileges on Red Hat Enterprise Linux. That earned them $10,000. Although Angelboy from the DEVCORE Research Team successfully demonstrated their privilege escalation on Windows 11, one of the two bugs used was known to Microsoft. Nevertheless, that guy got $11,250. Although the team from FPT NightWolf successfully exploited NVIDIA's Triton, the bug they used was, once again, known to NVIDIA but had not yet been patched. Still, $15,000 richer as a result.

Former Master of Pwn winner Manfred Paul used an integer overflow to exploit Mozilla Firefox's renderer. His excellent work earned him $50,000. Wiz researchers used an external initialization of trusted variables bug to exploit the NVIDIA Container Toolkit. Star Labs researchers used a TOCTOU (that's a time-of-check to time-of-use race condition) to escape the virtual machine, and an improper validation of array index for the Windows privilege escalation. So they got out of a Windows VM and then escalated their privileges to full admin, earning them $70,000 and nine Master of Pwn points.

Reverse Tactics used a pair of bugs to exploit ESXi, but the use of the uninitialized variable bug collided with a previous entry. Nevertheless, the integer overflow was unique and earned them $112,500 and 11.5 Master of Pwn points. We have two left. Two researchers from Synacktiv used a heap-based buffer overflow to exploit VMware Workstation. That got them $80,000. And in the final attempt of Pwn2Own Berlin 2025, Miloš Ivanović used a race condition bug to escalate privileges to SYSTEM, which is to say admin, on Windows 11. His fourth-round win netted him $15,000 and three Master of Pwn points.
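For listeners who want to see what a time-of-check to time-of-use race looks like in miniature, here is a toy sketch in Python. It is not the Star Labs exploit and has nothing to do with any real hypervisor; the account dictionary, the function names and the `between` hook are all hypothetical, invented only to make the window between the check and the use visible and repeatable.

```python
import threading


def withdraw_check_then_use(account, amount, between=lambda: None):
    """Vulnerable pattern: the check and the use are separate steps."""
    if account["balance"] >= amount:       # time of check
        between()                          # window where another actor can change state
        account["balance"] -= amount       # time of use, acting on a stale check
        return True
    return False


_lock = threading.Lock()


def withdraw_atomic(account, amount, between=lambda: None):
    """Safer pattern: check and use happen together while holding a lock."""
    between()                              # any interference lands before the check
    with _lock:
        if account["balance"] >= amount:
            account["balance"] -= amount
            return True
        return False


# Hypothetical interference: someone else drains 80 during the window.
racy = {"balance": 100}
racy_ok = withdraw_check_then_use(racy, 80, between=lambda: racy.update(balance=20))

safe = {"balance": 100}
safe_ok = withdraw_atomic(safe, 80, between=lambda: safe.update(balance=20))

print(racy_ok, racy["balance"])   # the stale check lets the balance go negative
print(safe_ok, safe["balance"])   # the combined check-and-use refuses the withdrawal
```

The general idea is that a check is only trustworthy if nothing can change between the check and the action that depends on it. Real-world TOCTOU bugs involve files, shared memory or device state rather than a dictionary, but the shape of the mistake is the same.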

I would love to watch this. It would be... So it is, and that's why it sold out in six hours, Leo. Wow. They put the tickets online, bang, gone. You know, we want to sit there, because it is all done live, right on stage, right with the guys and their laptops, you know, sweating over the keyboard, hoping that their exploit's going to work. There were a total of 26 individual exploits demonstrated. While some of them were known to their respective vendors, largely NVIDIA, in every one of those cases patches for them had not yet been made public, so they still qualified as new independent discoveries.

Trend Micro summed up the event, writing: And we're finished. What an amazing three days of research. We awarded an event total of $1,078,750. They said congratulations to the Star Labs SG team for winning Master of Pwn. They took home $320,000 and 35 Master of Pwn points. During the event, they wrote, we purchased from the researchers and disclosed to their respective vendors 28 unique zero-days (wow), seven of which came from the AI category. Thanks to OffensiveCon for hosting the event, the participants for bringing their amazing research, and the vendors for acting on the bugs quickly.

0:29:53 - Leo Laporte

Except in the case of NVIDIA. Although our chat's saying that many of the things you just described have been patched most recently. Like, Ubuntu just updated a bunch of patches.

0:30:03 - Steve Gibson

No, that's exactly what happens here. Trend Micro, thanks to sponsors of the event (and there are many enterprise-level sponsors who provide the money to back this), runs this like a bug bounty, sort of like a live bug bounty event. And of course Trend Micro runs the Zero Day Initiative. ZDI is the bug bounty program, so this is sort of like that: you know, the bug bounty in real time, in a conference format. They purchase these exploits from the guys who find them and then immediately turn around and report them to the vendors and say, by the way, Microsoft, we have three new zero-days in Windows 11 that allow people just to cut through all your security. Microsoft goes, oh well, we'll get around to fixing that one.

0:31:02 - Leo Laporte

We'll fix that, but I wonder if the companies that benefit from this, like Microsoft and NVIDIA, pay into it, do they?

0:31:10 - Steve Gibson

Yeah, okay, so some of that money is coming from them.

0:31:13 - Leo Laporte

I mean, they want this to happen.

0:31:16 - Steve Gibson

Yeah, they are corporate sponsors. Yeah, and you know, it occurred to me as I was running through this. First of all, again, everyone has a taste for this. Think about that: these are, you know, the best of the best. You know, that is said, but here we're talking about, you know, Docker containers and VMware ESXi, which is state-of-the-art virtual machine containment, and these guys go, eh.

0:31:49 - Leo Laporte

Well, they're pretty good. They are good. Of course, they work all year and save these up because they want to make this money.

0:31:58 - Steve Gibson

I was listening to you guys talking about code authoring on MacBreak Weekly before the podcast.

Vibe coding, yeah. Yes, vibe coding. And one thing occurred to me, and that is that what I heard was, for example, in the case of Alex and Andy, who are not, you know, themselves code authors, they are now using AI to create apps, to interact with the AI to create apps. We've talked in the past, on the bug bounty side, about the possibility of our listeners generating some extra revenue on the side if they were to find vulnerabilities. Well, today's podcast is AI Vulnerability Hunting, and it's an interesting possibility that there may be people listening who are not at this level, and would never say that they were at the level of Pwn2Own competition winners, but who may well be able to work with various large language models and with systems for which bug bounties are offered, and use AI to help them find some bugs that they wouldn't otherwise find, and generate some revenue.

So you don't know until you look. And you want these guys working as white hats, not black hats, obviously. Yes, they're good. Yes, yes, give them a reason to. Boy.

But it just goes to show again that here are all these mainstream, actively maintained (except in the case of NVIDIA) products, and hackers sit down and say, I want to find a way in, and they can. I imagine you get more points for a more difficult task. Yes, well, and more cringeworthy. I mean, if you're breaking out of an ESXi VM, that's worth a lot of money. Yeah. And understand, too...

Was it Zerodium, the bad guys that are buying these bugs?

0:34:26 - Leo Laporte

Yeah, yeah, yeah. You could sell that to Zerodium for a ton of money, yeah yeah, yeah, they know they're taking a cut in pay to be good guys.

0:34:36 - Steve Gibson

Yeah.

0:34:37 - Leo Laporte

Yeah, what an interesting. I love this.

0:34:41 - Steve Gibson

Speaking of a cut in pay. Would you like a boost? A little something extra. Re-up my caffeine.

0:34:52 - Leo Laporte

We can all tell I'm a little low energy at the moment. Actually, I want to talk about a very interesting sponsor of ours, OutSystems, the leading AI-powered application and agent development platform. For more than 20 years, the mission of OutSystems has been to give every company the power to innovate through software. Okay, and as AI has advanced, low-code solutions have gotten smarter. This is their time. Let me tell you, IT teams, as you well know, I'm sure, have two choices when it comes to software. You can buy off-the-shelf SaaS products and you're up to speed right away, but you lose flexibility and, frankly, a lot of competitors are using the same product, so you lose differentiation. So that's the buy side. Or you could build it yourself, and trust me, as somebody who has chosen to build, it's a lot of time, a lot of money, and you may not get the best quality software. Build versus buy: for decades, this has been the conundrum. But now there's a third way, thanks to AI: the fusion of low-code and DevSecOps automation into a single beautiful development platform. That's what OutSystems does. This is incredible. It's not build versus buy anymore. You can actually build custom applications using AI agents as easily as buying generic off-the-shelf sameware, and what's nice about OutSystems is, as a base, you automatically get flexibility, security, scalability. Those come standard, right? With AI-powered low-code, teams can build custom, future-proof applications at the speed of buying, with already built-in, fully automated architecture and security.

The integrations you want are there: the data flows, all the permissions you need. That's because OutSystems is good. OutSystems is the last platform you'll ever buy, because you can use it to build anything, and customize and extend your core systems to boot. Build your future with OutSystems. Such a cool idea. Visit outsystems.com/twit to learn more. That's outsystems.com/twit. We thank you so much for supporting Security Now. And now, Mr. Fully Caffeinated Steve Gibson. Are you ever fully caffeinated, Steve, really?

0:37:28 - Steve Gibson

Yeah, there have been times when I dare not have any more. Over-caffeinated. Okay.

So the online publication Domain Name Wire posted some interesting news under the headline "PayPal wants patent for system that scans newly registered domains," with the subheading "Patent describes automated crawler and checkout simulator to spot fraud in newly registered domains," and I just think this is extremely clever. The publication explained that PayPal filed a patent application back at the end of November 2023. Okay, so a year and a half ago. It was just published last Thursday, May 29th, and describes a method to proactively detect scam websites, which have historically created a problem for PayPal, by automatically examining newly registered domains. That's just so clever. And simulating checkout processes. Oh wow. Isn't that neat?

0:38:43 - Leo Laporte

Yeah, the.

0:38:43 - Steve Gibson

US patent application 18-521-909, titled Automated Domain Crawler and Checkout Simulator for Proactive and Real-Time Scam Website Detection, describes a system designed to tackle online fraud at its earliest stages. According to the application, PayPal's system monitors newly registered domains to identify those that include checkout options. The technology then performs simulated checkout operations on these sites, mimicking a genuine user's experience. This simulation specifically looks for domain redirections during checkout processes, because this is a common tactic scammers use to conceal fraudulent activity. If a redirection occurs, PayPal's system checks the redirected domain against its database of known scam merchants and flagged accounts. Domains linked to previous fraudulent activities trigger a scam alert, allowing PayPal to promptly label and potentially block transactions from these websites.

PayPal notes that scammers often set up new, seemingly legitimate websites to mask their operations. By proactively identifying suspicious redirections and cross-referencing them against scam-related merchant accounts, the method allows it to significantly reduce that risk. Which, again, is just brilliant. It's one of those "why didn't I think of that" kind of things. But my first thought upon reading this was that, while it is a very cool and clever idea, it feels wrong to issue a patent for this. I mean, I don't know, it makes me a little nervous, since the idea really should remain freely available for any similar service that is subject to this sort of abuse to employ.

0:40:55 - Leo Laporte

I don't think they can patent it, because there's lots of prior art. We talked last week about NextDNS, which we both use as a DNS server, right? On their security page, and I have it turned on, I'll tell you how I know, they have a switch that says "Block Newly Registered Domains": domains registered less than 30 days ago, known to be favored by threat actors. This has been around forever, and the reason I know about this: my daughter created a new online store and she wanted me to check it, and I couldn't get to it. For the longest time I thought, oh, it's broken, it's broken. Then I realized, oh, wait a minute. When did you register that domain? She said last week. I said, okay, it works, it really works. But PayPal didn't invent this, I guess, is the point.


0:41:46 - Steve Gibson

Well, they're going further, though. They could patent that process, sure, yeah. What they're trying to patent is the notion of proactively examining the site, the actual content of the site, simulating a purchase event, and then watching to see what happens with that purchase event. And my concern is that this ought to be in the public for the public good. Now, it is true that not all patents are obtained for competitive advantage and used to prevent competitors from using the invention. It might be, and this would be great if it were true, that PayPal is being civic-minded and desires to obtain the patent preemptively, to prevent anyone else from patenting what I think is a very clever and useful solution and then preventing PayPal from doing the same. So let's hope that if this automated, you know, newly registered domain scrutiny concept were to become commonplace, PayPal would not prevent other commercial entities from availing themselves of similar solutions, because this is, you know, clearly a good idea. And what is really cool is that if this became pervasive, it would basically shut this down as something that scam sites could get away with doing. Registering domains is not expensive, but it's not free, and if it stopped working well enough to justify them going through all this effort, they would just, you know, give that up. Generally, as security is increasing, we're seeing things that used to work no longer working for the bad guys, and so they sort of say, okay, fine, we'll go try to, you know, make money maliciously some other way. Anyway, very cool patent and, I thought, a very clever new idea.

Okay, I ran across an important story that I wanted to share because it comes from an extremely unlikely source: a true and unabashed vulnerability exploit developer and hacker who's been fixated upon Apple and iOS for years and who has the deepest of adversarial knowledge and understanding of iOS. We learn why, as he puts it about kernel exploitation, and we'll get to his full quote a little bit later, "Those days are evidently long gone," meaning successful exploitation, "with the iOS 19 beta being merely weeks away and there being no public kernel exploit for iOS 18 or 17 whatsoever." In other words, Apple quietly changed the world.

Since this was no easy feat, I'm sure this is known and appreciated among those at Apple who made this happen, as well as those in the exploit community, whose many tricks no longer work. But this is not something that I think has ever really been made completely clear to the rest of the world, because you really need to get down in the weeds to understand it, and that is where these sorts of changes need to happen. Anyway, they did happen. Okay, now, part of the problem I have with sharing this is that, because what Apple did really is down in the weeds, that's where we have to go in order to get a really deep understanding. This hacker's name is Siguza, S-I-G-U-Z-A. He's Swiss. As I was absorbing what he wrote and explained, I was thinking, okay, by the end of this podcast our listeners are going to have enough of an understanding about what it means to double-free a kernel object to have this make more sense.

0:46:40 - Leo Laporte

I have no idea what that means. I know.

0:46:43 - Steve Gibson

But I'm actually going to be talking about it at the end of the podcast and as I was putting this together, I had already written the end, so I knew that I was going to be explaining what this stuff was, except that now I'm talking about it before I've explained it.

So, as I said, things are ordered a little upside down here, but the AI vulnerability hunting really does need to be our main topic, and I like having it at the end. Anyway, I'm going to share enough of this that everyone's going to get a good sense for what Apple has done, but at some point you're just going to have to let some of the details wash over you and not worry about them. So I'm going to settle for sharing enough of Siguza's non-technical backgrounding for everyone, as I said, to get a good sense for the environment that this hacker had historically been swimming through and for how he now observes that it has totally changed. Apple has totally changed the game, and this sort of happened without anyone really... I mean, you know, WWDC happens every year. It's, what, next Monday, right, Leo? And you guys are going to be covering it.

0:48:09 - Leo Laporte

It is. We're going to stream the keynotes.

0:48:12 - Steve Gibson

And five years ago, just five years ago, in 2020, everything was different from the way it is today. So he wrote: I'm an iOS hacker slash security researcher from Switzerland. I spend my time reverse engineering Apple's code, tearing apart security mitigations, writing exploits for vulnerabilities or building tools that help me with that. Sometimes I speak about it at conferences, sometimes I do lengthy blog posts with all the technical details, sometimes my work becomes part of a jailbreak and sometimes it never sees the light of day.

Okay, two weeks ago, he wrote a blog posting titled Tachyon the last zero-day jailbreak. It starts off. He said hey, long time no see. Huh, people have speculated over the years that someone bought my silence or asked me whether I had moved my blog post to some other place, but no, life just got in the way. This is not the blog post which I planned to return to or return with, he probably means but it's the one for which all the research is said and done. So that's what you're getting. I have plenty more that I want to do, but I'll be happy if I can even manage to put out two blogs a year. He said now Tachyon.

Tachyon is an old exploit for iOS 13.0 through 13.5, released in unc0ver. That's U-N-C, a numeric zero, V-E-R, and in fact, if you go to unc0ver.dev, what you will find there is a jailbreaking kit, because that's where a lot of this guy's work goes. He's one of the guys who was always figuring out how to jailbreak iOS. And he said it was released in unc0ver, that is, this Tachyon exploit, in version 5.0.0 on May 23rd, 2020, exactly five years ago. So this is his five-year anniversary of the Tachyon exploit. Okay, so anyway, I'm going to interrupt here to remind everyone that once upon a time, end-user jailbreaking was a thing. It was common. Mostly, it was for people wanting to make unauthorized changes or customizations to their devices, to run unsigned code or sideload apps, to get apps installed not from the App Store, or just to have the freedom of digging around in their iOS or Android devices' innards. In this case, it's all Apple and iOS with this guy. So this Swiss hacker, Siguza, was one of the unc0ver developers. In fact, he contributed to a number of other jailbreaking products, as we'll see. And unc0ver describes itself as the most advanced jailbreak tool, and on the homepage it says iOS 11.0 through 14.8. unc0ver is now at version 10.0.2. And under what's new, for version 8.0.2, it notes added exploit guidance and, quote, fix exploit reliability on iPhone XS devices running iOS 14.6 through 14.8. And then under About unc0ver, they write: unc0ver is a jailbreak, which means that you can have the freedom to do whatever you would like to do to your iOS device, allowing you to change what you want and operate within your purview. unc0ver unlocks the true power of your iDevice. Then, lower down on the homepage, they also remind us, under Jailbreak Legality, that, quote, it is also important to note that iOS jailbreaking is exempt and legal under DMCA.
Any installed jailbreak software can be uninstalled by re-jailbreaking with the Restore RootFS option to take Apple's service for an iPhone, iPad, or iPod touch that was previously jailbroken.

Okay, so now back to Siguza, as I said, one of the guys behind this unc0ver jailbreak, as well as some others, where he's explaining about Tachyon. He says it was a fairly standard kernel LPE, meaning a local privilege escalation, for the time. But one thing that made it noteworthy is that it was dropped as a zero-day affecting the latest iOS version at the time, leading Apple... so, you know, remember, the world has changed in five years. He describes Tachyon as, quote, a fairly standard kernel local privilege escalation, like that's just what we did back then. So the work that these guys were doing was the sort of thing that was causing Apple to respond immediately, and, of course, we know why our iDevices were having to update themselves and restart so often back then.

He says this is something that used to be common a decade ago but has become extremely rare, so rare, in fact, that it has never happened again after this. Another thing that made it noteworthy, he writes, is that, despite having been a zero-day on iOS 13.5, it had actually been exploited before by me and friends, but as a one-day at the time. And that's where this whole story starts. He says: In early 2020, Pwn20wnd, that's P-W-N, 2, zero, W-N-D, a jailbreak author, not to be confused with Pwn2Own, the event... so this person whose handle is Pwn20wnd contacted me, saying he'd found a zero-day reachable from the app sandbox, meaning any app running on iOS could break out of the app containment, which is very valuable, and was asking whether I'd be willing to write an exploit for it. At the time, I'd been working on checkra1n, C-H-E-C-K-R-A-1-N. And Leo, it's interesting: if you look at the checkra1n site, it's C-H-E-C-K-R-A dot I-N. The logo will immediately be familiar. We, of course, talked about this at the time. We were covering all these things back in the day, as they say.

0:56:40 - Leo Laporte

Remember that logo on the site.

0:56:42 - Steve Gibson

Yeah, chess pieces. Yep. And so he said, at the time, I'd been working on checkra1n for a couple of months, and that's, you know, another exploit. So I figured, he wrote...

0:56:53 - Leo Laporte

You'd think these guys would have gotten over the leetspeak spellings by now?

0:57:02 - Steve Gibson

It's like, oh, that's so clever, I used a one instead of an I. I know, oh my gosh. So, well, we don't know how old they are, right? Yeah, they write pretty well, but we don't know, maybe they're kids. Yeah. He said:

So I figured going back to kernel research was a welcome change of scenery, and I agreed. Meaning he agreed to accept the zero-day, the vulnerability that this Pwn20wnd jailbreak author had discovered. So Siguza said, yeah, I will create an exploit for the vulnerability. Okay, so he said, but where did this bug come from? It was extremely unlikely that someone would have just sent him this bug for free, with no strings attached, meaning because they were so valuable back then. And despite being a jailbreak author, he wasn't doing security research himself, so it was equally unlikely that he would discover such a bug. And yet he did.

The way he managed to beat a trillion-dollar corporation, meaning Apple, was through the kind of simple but tedious and boring work that Apple, this guy writes, sucks at: regression testing. Because, you see, this has happened before. On iOS 12, Sock Puppet was one of the big exploits used by jailbreaks. It was found and reported to Apple by Ned Williamson from Project Zero, patched by Apple in iOS 12.3, and subsequently unrestricted on the Project Zero bug tracker. Right, because Apple patched it, so Project Zero published it. But against all odds, it then resurfaced on iOS 12.4, as if it had never been patched.

0:59:14 - Leo Laporte

So Apple had a regression. Aha. That means they made some changes to the code that brought back a bug they had already fixed.

0:59:22 - Steve Gibson

Right, right. And he wrote: I can only speculate that this was because Apple likely forked their XNU kernel to a separate branch for that version, meaning for version 12.4, and had failed to apply the patch there. But this made it evident that they had no regression tests for this kind of stuff, a gap that was both easy and potentially very rewarding to fill. And indeed, after implementing regression testing for just a few known one-days, Pwn got a hit. Okay, so in other words, back in early 2020, this jailbreak developer, realizing that Apple sometimes inadvertently reintroduced previously repaired bugs, took it upon himself to check for anything else that Apple might have inadvertently reintroduced, and struck pay dirt. That's when Pwn20wnd asked Siguza if he'd write the exploit. From there, Siguza drops into a very detailed, instruction-level description of precisely how this exploit works. Understanding it requires developer-level knowledge of the perils and pitfalls of multi-threaded concurrent tasks and the complex management of dynamically shared and dynamically allocated memory among these tasks. And, as I mentioned, believe it or not, everyone actually will understand a great deal more about that by the time we're finished here today, because we're going to get to that, but we haven't gotten to it yet. The sense one comes away with, however, is that as recently as only five years ago, in 2020, things were still a free-for-all, with hackers really having their way with iOS, and there appeared to be little that Apple was able to do to prevent them, because Apple was constantly being reactive. They were patching zero-days that were being found and found and found. Add to that the possibility of old, previously known and fixed flaws returning, and it's clear why iPhones, as I said, were needing to be restarted so often.
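The regression-testing idea is simple enough to sketch in a few lines of Python. This is a toy model only, not how Pwn20wnd or Apple actually did it: the build names, patch names, and the `PATCHES_IN_BUILD` table are invented for illustration, and a real harness would run an actual proof-of-concept against each build rather than look up a table.

```python
# Hypothetical record of which fixes each build contains. "release-B" models
# a branch that was forked before the fix for bug-1 landed.
PATCHES_IN_BUILD = {
    "release-A": {"fix-bug-1", "fix-bug-2"},
    "release-B": {"fix-bug-2"},
}


def reproduces_if_missing(patch):
    """Build a stand-in check: the bug 'reproduces' when its patch is absent."""
    return lambda build: patch not in PATCHES_IN_BUILD[build]


# One check per previously fixed ("one-day") bug.
KNOWN_ONE_DAYS = {
    "bug-1": reproduces_if_missing("fix-bug-1"),
    "bug-2": reproduces_if_missing("fix-bug-2"),
}


def find_regressions(build, checks):
    """Run every known-bug check against a build; return bugs that came back."""
    return [name for name, reproduces in checks.items() if reproduces(build)]


regressions_a = find_regressions("release-A", KNOWN_ONE_DAYS)
regressions_b = find_regressions("release-B", KNOWN_ONE_DAYS)
```

Here `regressions_b` comes back with `bug-1`, the kind of hit described above: a bug that was fixed once and quietly returned on another branch.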
After his deep dive into the exact operation and exploitation of this zero-day vulnerability, which Pwn20wnd had given him, which allowed them to then update their unc0ver jailbreak to once again work on the latest fully patched iOS, which then forced Apple to immediately respond, Siguza continues: The scene, as he expressed it, obviously took note of a full zero-day exploit dropping for the latest signed version, meaning of iOS. He wrote: Brandon Azad, who worked for Project Zero at the time, went full throttle, figured out the vulnerability within four hours, and informed Apple of his findings. Six days after the exploit dropped, Synacktiv published a new blog post where they noted how the original fix in iOS 12 introduced a memory leak, and speculated that it was an attempt to fix this memory leak that brought back the original bug, which, he says, I think is quite likely. Then, nine days after the exploit dropped, Apple released a patch, he said, and I got some private messages from people telling me that this time they'd made sure that the bug would stay dead. And I think those were private messages from inside Apple, is what he's saying, because otherwise how would anybody know that Apple had made sure it stayed dead? They even added a regression test for it to their XNU kernel. And finally, he writes, 54 days after the exploit dropped, a reverse-engineered version dubbed tardy0n was shipped in the Odyssey jailbreak, also targeting iOS 13.0 through 13.5.

But by then the novelty of it had already worn off. WWDC 2020 had already taken place, and the world had shifted its attention to iOS 14 and the changes ahead. And he writes: and oh boy, did things change. iOS 14 represented a strategy shift from Apple.

Until then, they had been playing whack-a-mole with first-order primitives, but not much beyond. The kernel_task restriction and zone_require were feeble attempts at stopping an attacker when it was already too late. Had a heap overflow? Over-release on a C++ object? Type confusion?

Pretty much no matter the initial primitive, the next target was always Mach ports, and from there you could just grab a dozen public exploits on the net and plug their second half into your code. Obviously, this guy has had his sleeves rolled way up for quite a while, so this is just the game that all of these hackers were playing. He says iOS 14 changed this once and for all, and that is obviously something that had been in the works for some time, unrelated to unc0ver or Tachyon, and it was likely happening due to a change in corporate policy, not technical understanding. Okay, and here we're going to get a bunch of technical jargon, but don't worry about following it all, just sort of let it wash over you, as I said. Siguza writes: Perhaps the single biggest change was to the allocators, kalloc and zalloc. Many decades ago, he writes, CPU vendors started shipping a feature called Data Execution Prevention. And actually, I don't think it was decades ago, maybe, but for someone that young, everything feels like decades ago.

1:06:42 - Leo Laporte

It was 100 years ago, that's right. I remember DEP. Yeah, we actually talked about it on the show. So yeah, it wasn't decades ago, I don't think.

1:06:51 - Steve Gibson

Right. And he said, so, Data Execution Prevention, DEP, because people understood that separating data and code has security benefits. Now, right, you know, in other words, there's a huge security benefit if we're able to prevent the simple execution of data as if it were code, since bad guys can send anything they want as data. Apple did that same kind of separation, he says, but with data and pointers instead. They butchered up the zone map, and data would go into one heap, kernel objects into another. And I'll just interject that heap is terminology from computer science; it's the pool of memory from which allocations are made. Siguza writes: For kernel objects, they also implemented sequestering, which means that once a given page of the virtual address range is allocated for a given zone, it will never be used for anything else again until the system reboots. Now, that's a big architectural change, and it's brilliant. I'll explain in a second. He writes: The physical memory can be released and detached if all objects on the page are freed, but the virtual memory range will not be reused for different objects, effectively killing kernel object type confusions. Add in some random guard pages, some per-boot randomness in where different zones will start allocating, and it's effectively no longer possible to do cross-zone attacks with any reliability. Early on, some data still made it into the kernel object heap and vice versa, but this has been refined and hardened over time, to the point where Clang now has some __builtin_xnu features to carry over some compile-time type information to runtime, to help with better isolation between different data types. And here it is.
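To give a feel for what sequestering buys, here is a toy Python model of the idea. It is only a sketch under simplifying assumptions: the class name, the page and slot sizes, and the zone names are made up, and it ignores guard pages, per-boot randomization, and the release of physical memory, all of which the real allocator also deals with.

```python
class SequesteredAllocator:
    """Toy allocator: once a page belongs to a zone, it stays with that zone."""

    PAGE_SIZE = 4096  # illustrative value, not taken from XNU

    def __init__(self, slot_size=64):
        self.slot_size = slot_size
        self.next_page = 0
        self.page_owner = {}  # page index -> zone name, never reassigned
        self.free_slots = {}  # zone name -> list of free addresses

    def alloc(self, zone):
        free = self.free_slots.setdefault(zone, [])
        if not free:
            # The zone is out of slots: hand it a brand-new page of address
            # space instead of borrowing a freed page from another zone.
            page = self.next_page
            self.next_page += 1
            self.page_owner[page] = zone
            base = page * self.PAGE_SIZE
            free.extend(range(base, base + self.PAGE_SIZE, self.slot_size))
        return free.pop()

    def free(self, addr):
        # A freed slot goes back only to the zone that owns its page.
        zone = self.page_owner[addr // self.PAGE_SIZE]
        self.free_slots[zone].append(addr)


heap = SequesteredAllocator()
port = heap.alloc("ports")      # allocate a "port" object
heap.free(port)                 # free it, leaving a dangling address behind
pipe = heap.alloc("pipes")      # a different type lands on a different page
port_again = heap.alloc("ports")  # only the same type can reuse that slot
```

The point of the model is the last three lines: after the free, a new object of a different type never lands at the stale address, so a dangling pointer can only ever point at an object of the type it expected, which is why this approach undercuts type confusion.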

But the allocator wasn't the only thing that changed; it was the approach to security as a whole. Apple no longer just patches bugs, they patch strategies now. You were spraying kmsg structs as a memory corruption target as part of your exploit? Well, those are signed now, so that any tampering with them will panic the kernel. You were using pipe buffers to build a stable kernel read-write interface? Too bad, those pointers are PAC'd now. Virtually any time you used an unrelated object as a victim, Apple would go and harden that object type.

This obviously made developing exploits much more challenging, well, obviously to those kind of guys, to the point where exploitation strategies soon became more valuable than the initial memory corruption zero-days. Okay, in other words, he's saying that Apple had succeeded in raising the bar so high because, instead of patching vulnerabilities, they were patching strategies. They had cut off and killed so many of the earlier tried-and-true exploitation strategies that hackers were needing to come up with and invent entirely new approaches. Entire avenues of exploitation were finally being eliminated at the architectural level. Apple was no longer merely patching mistakes, they were redesigning for fundamental unexploitability. Siguza continues, quote:

But another aspect of this is that, with only very few exceptions, it basically stopped information sharing dead in its tracks. Before iOS 14 dropped, the public knowledge about iOS security research was almost on a par with what people knew privately, meaning it was out in the ether, everyone was talking about it, it was on forums and so forth. It was being shared and exchanged. And there wasn't much to add, he said. Hobbyist hackers had to pick exotic targets like KTRR or SecureROM in order to see something new and get a challenge. Those days are evidently long gone, and here's the quote from earlier, with the iOS 19 beta being merely weeks away and there being no public kernel exploit for iOS 18 or 17 whatsoever, even though Apple security notes will still list vulnerabilities that were exploited in the wild every now and then.

Private research was able to keep up. Public information has been left behind. I assume what Siguza means here is that iOS has finally become so significantly tightened up, meaning like big time, that it is no longer possible for casual developer hacker hobbyists to nip at its heels. It's no fun anymore. All of the low-hanging fruit has been pruned, and the fruit that may still be hanging is so high up that it's no fun to climb that high. The chances are that you'll get all the way up there and come away empty-handed. Siguza concludes by writing: It's insane to think that exploitation was so easy a mere five years ago.

He says: I think this really serves as an illustration of just how unfathomably fast this field moves. And he finishes: I can't possibly imagine where we'll be five years from now. So his webpage notes his involvement in Phoenix, a jailbreak for all 32-bit devices on iOS 9.3.5, created by tihmstar and himself. Something called Totally Not Spyware, a web-based jailbreak for all 64-bit devices on iOS 10, which can be saved to a web clip for offline use. Spice, an unfinished untether for iOS 11. unc0ver, which we talked about, an app-based jailbreak for all devices running iOS 11.0 through 14.3. And he said, I'm not an active developer there, but I wrote the kernel exploit for iOS 13.0 through 13.5.

checkra1n, a semi-tethered bootrom jailbreak for A7 through A11 devices on iOS 12.0 and up.

And he said: the biggest project I've ever been a part of, and by far the best team I've ever worked with. So now here is Siguza, who obviously has, you know, deep involvement in what was previously a hobby industry, essentially saying that this game is over, and that it ended a few years ago with iOS 14 and the changes that Apple made, some deep change in their security strategy within Apple. Apple finally made the required fundamental changes, and all public kernel exploits disappeared. He says at the end he wants to thank everyone he's learned from before these changes hit, because it's time to move on. Apple finally got very, very serious, stopped believing that they could ever get there, you know, get ahead of the bugs, using traditional system design, and bit the bullet to make the fundamental changes that were required to change the game forever. And it did. So anyway, I thought this was some really terrific perspective from someone who was, you know, once on the inside, but there is no longer any inside to be in, because Apple fixed iOS.

1:17:03 - Leo Laporte

Let's remember that it probably wasn't solely to stop these guys. Apple's biggest challenge was zero-click attacks from nation states, right? Through NSO Group and Pegasus. And I think they were really... I mean, that's what BlastDoor was all about. They were really trying to protect their phones from that kind of exploit, and it's just a nice side effect that jailbreakers couldn't get in either. I wonder, though, if you gave these Pwn2Own guys $150,000 or $250,000, do you really think there's no way in?

1:17:40 - Steve Gibson

It's a good question. I mean, we do still hear that Pegasus is around.

1:17:45 - Leo Laporte

It's still around. Cellebrite is still there, downloading the contents of people's iPhones. Nobody knows how. They don't publicize that, obviously. Oh Lord, no. I mean, Apple probably has some thought, and that's what Apple's patching, right, is these...

1:18:08 - Steve Gibson

Well, and remember, a couple of years ago we covered one of these, where there was some obscure range of hardware access in an undocumented area of a chip, and somehow somebody reverse engineered this and figured it out and was able to use it to access some weird random iPhone grid of numbers.

1:18:30 - Leo Laporte

Yeah, I like what this is about, though, which is that Apple isn't specifically trying to patch flaws. They're changing how the system works to be less vulnerable, and I think that's the right approach, right.

1:18:44 - Steve Gibson

Right. Traditional software development, traditional software architecture, never needed to be this hardened, right? And Apple adopted that technology for their device when it was created and said, okay, well, we won't have any bugs. Well, you're going to have bugs. There's always bugs. And so what they finally had to do was to go back and say, okay, we've got to stop allowing these bugs to be turned into exploits. Yeah, that's right. And so they changed the architecture.

1:19:20 - Leo Laporte

It's a better way of thinking of it. I think you're right. Yeah, I think you're exactly right. What a, what an interesting story. I wonder do you think this guy really retired, or maybe he went to high school and got busy?

1:19:31 - Steve Gibson

That's right. Let's take a break, and then we're going to talk about the unbelievable design of Scalable Vector Graphics. I mean, they're everywhere, if there's a problem. Oh, Leo, you're not going to... this is a head slapper.

1:19:47 - Leo Laporte

Get ready, stay tuned. You know, this comes back to... I'm always reminded of the lesson you have taught us time and time again: interpreters are really vulnerable. And I suspect that's what we're going to hear about, but we'll find out in just a little bit. Steve Gibson, he's getting refreshed while I'm telling you about our sponsor, a great little company with a big name, BigID. They're the next-generation AI-powered data security and compliance solution.

BigID is the first and only leading data security and compliance solution to uncover dark data through AI classification, identify and manage the risk, and then remediate the way you want. You can use it to map and monitor access controls and to scale your data security strategy, along with unmatched coverage for cloud and on-prem data sources. BigID seamlessly integrates with your existing tech stack and allows you to coordinate security and remediation workflows. You can take action on data risks to protect against breaches, and I said the way you want, which means annotate it, delete it, quarantine it and more, based on the data, and, again, with everything you do with BigID, maintaining an audit trail. BigID works with everybody. Partners include ServiceNow, Palo Alto Networks, Microsoft, Google, AWS and more, and with BigID's advanced AI models you can reduce risk, accelerate time to insight and gain visibility and control over all your data. Intuit named it the number one platform for data classification in accuracy, speed and scalability.

This is a big problem nowadays, because we want to use our data, right? I mean, that data is a treasure trove. It's hugely valuable, but it's in a lot of different places, you know, on-prem, in the cloud, in all kinds of formats. Plus, if you're going to use it for AI, maybe some of it's appropriate and some of it you don't want to use. It turns out it's now more important than ever to know what your data is, where it is and what you can do with it. If you're going to use an example client, I don't think there's anybody better than the United States Army. Imagine how much data, how diverse the data the Army has collected over years, in all sorts of ways, in all sorts of places. BigID equipped the US Army to illuminate dark data, to accelerate cloud migration, to minimize redundancy and to automate data retention. I can't imagine a bigger job than that.

US Army Training and Doctrine Command gave us the best quote. They said, quote, the first wow... this is a direct quote from US Army Training and Doctrine Command. These guys are pretty straight-laced. They don't get excited very often. "The first wow moment," they said, "with BigID came with being able to have that single interface that inventories a variety of data holdings, including structured and unstructured data, across emails, zip files, SharePoint, databases and more." The quote continues: "To see that mass and to be able to correlate across those is completely novel. I've never seen a capability that brings this together like BigID does." That's somebody at US Army Training and Doctrine Command getting pretty darn excited about BigID. CNBC did too. They recognized BigID as one of the top 25 startups for the enterprise. BigID was named to the Inc. 5000 and the Deloitte 500, not just once, but for four years running.

The publisher of Cyber Defense Magazine says, quote, "BigID embodies three major features we judges look for to become winners: understanding tomorrow's threats today, providing a cost-effective solution, and innovating in unexpected ways that can help mitigate cyber risk and get one step ahead of the next breach." Start protecting your sensitive data wherever your data lives at bigid.com/securitynow. Get a free demo to see how BigID can help your organization reduce data risk and accelerate the adoption of generative AI. Again, that's bigid.com/securitynow. Also, there's a free white paper that provides valuable insights for a new framework, AI TRiSM, T-R-I-S-M. That's AI Trust, Risk and Security Management, to help you harness the full potential of AI responsibly. You can get that for free at bigid.com/securitynow. Thank you, BigID, for sponsoring the show and for all the stuff you do. bigid.com/securitynow. Okay, Steve, I've got to find out how much trouble I am in with SVG.

1:24:42 - Steve Gibson

These are everywhere. I mean, yes, that's the cause for concern. So, to set the stage here: back on February 5th, Sophos' headline was Scalable Vector Graphics Files Pose a Novel Phishing Threat. KnowBe4 posted on March 12th: 245% Increase in SVG Files Used to Obfuscate Phishing Payloads. On March 28th, ASEC's headline: SVG Phishing Malware Being Distributed with Analysis Obstruction Feature. On March 31st, Mimecast wrote: Mimecast threat researchers have recently identified several campaigns utilizing Scalable Vector Graphics attachments in credential phishing attacks. On April 2nd, Forcepoint's headline: An Old Vector for New Attacks: How Obfuscated SVG Files Redirect Victims. On April 7th, Keep Aware's headline: SVG Phishing Email Attachment: Recent Targeted Campaign. On April 10th, Trustwave wrote: Pixel-Perfect Trap: The Surge of SVG-Borne Phishing Attacks. VIPRE Security Group's April 16th headline was SVG Phishing Attacks: The New Trick in the Cybercriminal's Playbook. On April 23rd, Intezer blogged under Emerging Phishing Techniques: New Threats and Attack Vectors. And last month, on May 6th, Cloudforce One, which is Cloudflare's security group, posted under the headline SVGs: The Hacker's Canvas. Oh, God. So, like I said, holy smokes.

Okay, all this leads to one question, and I mean this with the utmost sincerity and all due respect when I ask what idiot decided that allowing JavaScript to run inside a simple two-dimensional vector-based image format would be a good idea?

1:26:52 - Leo Laporte

Wait, what?

1:26:53 - Steve Gibson

Come on. What? You're kidding me. Believe it or not, the SVG, Scalable Vector Graphics, file format, based on XML, can host HTML, CSS and even JavaScript, and it's all by design. So you could put arbitrary JavaScript in an SVG graphics file?

1:27:19 - Leo Laporte

Yes, and how does it get?

1:27:21 - Steve Gibson

triggered. It runs by design. It runs when it's displayed.
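To make "it runs by design" concrete, here is a minimal sketch in Python. It is an illustration, not anything from the show notes: the sample SVG and the helper name `embedded_scripts` are made up for this example. It only shows that a script element is an ordinary, well-formed part of an SVG document that any XML parser will hand back. One caveat worth stating: browsers run such scripts when the SVG is opened as a document (directly, inline, or in a frame or object), and do not run them when the file is loaded through an img tag.

```python
import xml.etree.ElementTree as ET

SVG_NS = "http://www.w3.org/2000/svg"

# Hypothetical sample: a circle plus a script element, both valid SVG.
SAMPLE_SVG = f"""<svg xmlns="{SVG_NS}" width="100" height="100">
  <circle cx="50" cy="50" r="40" fill="teal"/>
  <script type="text/ecmascript">console.log("runs when rendered as a document");</script>
</svg>"""


def embedded_scripts(svg_text: str) -> list[str]:
    """Return the source text of every SVG <script> element in the string.

    Note: ElementTree is fine for a demo, but untrusted XML should go
    through a hardened parser in real use.
    """
    root = ET.fromstring(svg_text)
    # ElementTree spells namespaced tags as "{namespace-uri}localname".
    return [(el.text or "").strip() for el in root.iter(f"{{{SVG_NS}}}script")]


if __name__ == "__main__":
    for source in embedded_scripts(SAMPLE_SVG):
        print("embedded script:", source)
```

Nothing here executes the script; the point is only that the "image" carries code as a first-class element.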

1:27:31 - Leo Laporte

That's what I mean. Yeah, when it's used. Yeah, okay.

1:27:34 - Steve Gibson

Now, let's just remember I was once famously on the receiving end of some ridicule for stating my opinion that the infamous Windows Metafile vulnerability, which allowed WMF files to contain not only inherently benign interpreted drawing actions but also native Intel code, was almost certainly not a bug but a deliberate feature, added as a cool hack back then to allow images to also carry executable code. As we know, the world, I wrote in the show notes, went nuts. "It lost its shit" is the technical phrase. That was when this Windows Metafile so-called vulnerability was discovered, or rather rediscovered, and it was none other than Mark Russinovich, who also examined the native Windows Metafile interpreter as I had, who concluded it sure does appear to have been intentional. Oh, wow.

1:28:43 - Leo Laporte

But you know what? I think back to TrueType fonts, which also execute code.

1:28:49 - Steve Gibson

Not in this way. They're sandboxed. Yes, TrueType was based off of PDF. That is an interpreted...

1:29:01 - Leo Laporte

PostScript. Yeah, right, PostScript, yes.

1:29:03 - Steve Gibson

Right, PostScript. Okay, so my point was that back in the early 1990s, before the internet interconnected everything, which is what changed the landscape of security overnight, the idea of executable code in a WMF file would have been an entirely reasonable thing for Microsoft to do. Mark Russinovich and I both examined the WMF interpreter machine language, and it was clear that after the interpreter parsed an escape token, it would deliberately jump to the code immediately following that token and execute it. That's what the code was written to do. You can't make a mistake like that, which is why Mark concluded it sure looks like it was intentional. Now, I'm reminding everyone of this because, bizarrely enough, we're back here again with a widely supported image file format that explicitly enables its displaying host to execute content on its viewer's PC when the file image is displayed. The only difference this time is that, while this is still clearly a horrible idea, no one thinks it's a mistake. The SVG image file format first appeared back in 1999. The version 1.0 specification was finalized 24 years ago, in 2001. Section 18 of the SVG specification is titled Scripting and makes clear that SVG files are allowed to support ECMAScript, which is the standards-following JavaScript. ECMA, yeah. Right, ECMA.

Obviously, given the headlines we've seen over just the past few months, which I just read, bad guys have figured out (it took them a while) how to weaponize this built-in scripting facility and are now using it with abandon. As just one sample of the recent coverage and explanation of the problem, here's what Cloudflare's Cloudforce One security group wrote on May 6th under their headline SVGs: The Hacker's Canvas. They were being a bit clever here, since the canvas is the term for the virtual surface upon which SVG graphics are rendered. And in general, on the web, canvas is the term used for rendering in web browsers. They wrote: Over the past year, PhishGuard, which is a Cloudflare email security system, observed an increase in phishing campaigns leveraging Scalable Vector Graphics (SVG) files as initial delivery vectors, with attackers favoring this format due to its flexibility (yeah, it's so nice to have script) and the challenges it presents for static detection.

SVGs, they write, are an XML-based format designed for rendering two-dimensional vector graphics. Unlike raster formats like JPEGs or PNGs, which rely on pixel data, SVGs define graphics using vector paths and mathematical equations, making them infinitely scalable without loss of quality. Their markup-based structure also means they can be easily searched, indexed and compressed, making them a popular choice in modern web applications. However, the same features that make SVGs attractive to developers also make them a highly flexible and dangerous attack vector when abused. Since SVGs are essentially code, they can embed JavaScript and interact with the Document Object Model, the DOM. When rendered in a browser, they aren't just images; they become active content capable of executing scripts and other manipulative behavior. In other words, and this is Cloudflare writing this, SVGs are misclassified as innocuous image files similar to PNGs or JPEGs, a misconception that downplays the fact that they can contain scripts and active content. Many security solutions and email filters fail to deeply inspect SVG content beyond basic MIME type checks, a tool that identifies the type of file based on its contents, allowing malicious SVG attachments to bypass detection. They wrote:

We've seen a rise in the use of crafted SVG files in phishing campaigns. These attacks typically fall into three categories. First, redirectors: SVGs that embed JavaScript to automatically redirect users to credential harvesting sites when viewed. Wow, that's just wonderful. You display an image and it takes you somewhere else. What could possibly be wrong with that? Second, self-contained phishing pages: SVGs that contain full phishing pages encoded in Base64, rendering fake login portals entirely client-side. Gee, what a terrific feature to have in an image. And finally, DOM injection and script abuse. They write: SVGs embedded into trusted apps or portals that exploit poor sanitization and weak content security policies, enabling them to run malicious code, hijack inputs or exfiltrate sensitive data. Wow, that's right. How many sites allow you to upload images, after all? What harm could an image do? And why does that SVG embed the term drop tables?
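The three categories above can be approximated with a few static checks. What follows is a hedged sketch in Python, not Cloudflare's detection logic: the function name `classify_svg`, the finding labels and the two regular expressions are all assumptions chosen for illustration, and a determined attacker can evade every one of them.

```python
import re
import xml.etree.ElementTree as ET

# Illustrative patterns only; real campaigns obfuscate far more heavily.
REDIRECT_RE = re.compile(r"(window\.)?location(\.href)?\s*=|location\.(replace|assign)\s*\(")
BASE64_RE = re.compile(r"data:[\w/+.-]+;base64,|atob\s*\(")


def local(name: str) -> str:
    """Drop the '{namespace}' prefix ElementTree adds to tag and attribute names."""
    return name.rsplit("}", 1)[-1].lower()


def classify_svg(svg_text: str) -> set[str]:
    """Return a set of hypothetical finding labels for one SVG document."""
    findings: set[str] = set()
    root = ET.fromstring(svg_text)
    for el in root.iter():
        if not isinstance(el.tag, str):
            continue  # skip comments and processing instructions, if any
        name = local(el.tag)
        text = el.text or ""
        if name == "script":
            findings.add("script")
            if REDIRECT_RE.search(text):
                findings.add("redirector")        # category 1
            if BASE64_RE.search(text):
                findings.add("base64-payload")    # category 2
        if name == "foreignobject":
            findings.add("embedded-html")         # HTML hosted inside the SVG
        for attr, value in el.attrib.items():
            attr_name = local(attr)
            if attr_name.startswith("on"):
                findings.add("event-handler")     # e.g. onload, onclick
            if attr_name == "href" and value.strip().lower().startswith("javascript:"):
                findings.add("javascript-url")
            if BASE64_RE.search(value):
                findings.add("base64-payload")
    return findings


if __name__ == "__main__":
    sample = ('<svg xmlns="http://www.w3.org/2000/svg"><script>'
              'window.location.href = "https://example.invalid/login";'
              '</script></svg>')
    print(sorted(classify_svg(sample)))
```

An empty result does not mean a file is safe; it only means none of these particular patterns fired.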

1:36:09 - Leo Laporte

Hmm.

1:36:13 - Steve Gibson

Given the capabilities highlighted above, they write, attackers can now use SVGs to gain unauthorized access to accounts. Okay: SVGs, images, gain unauthorized access to accounts, create hidden mail rules, phish internal contacts, steal sensitive data, initiate fraudulent transactions and maintain long-term access. Telemetry shows that manufacturing and industrial sectors are taking the brunt of these SVG-based phishing attacks, contributing to over half of all targeting observed. Financial services follow closely behind, likely due to SVG's ability to easily facilitate the theft of banking credentials and other sensitive data. The pattern is clear: attackers are concentrating on business sectors that handle high volumes of documents or frequently interact with third parties.

The article then goes on to greater depth, but that's all I'm going to share here, since I'm sure by now everybody gets the idea and must be shaking their heads, as I am. Essentially, what this means is that SVGs provide another way of sneaking executable content into an innocent user's computer and in front of them, to display things like bogus credential harvesting logon prompts that most users would just assume were legitimate, because how would they know otherwise? Their computer just popped up, as it often does, asking them for their username and password, so they sigh and type them into the web page that JavaScript in the signature logo just retrieved from a server in Croatia, which would love to have them fill out its form, please.

As I've often observed here, most PC users really have never needed to obtain any fundamental understanding of the computers that they now have come to utterly depend upon. Many of us here, listening to this podcast, grew up with PCs. We love them for their own sake, so we know and care about things like directory structures. Most users will ask, do you mean folders? They have no underlying grasp of what's going on and they don't want to. They don't want to need to know. They just want to use their PC to get them where they want to go. They want to use it as a tool to get whatever it is done. And, of course, the industry has not helped very much with this, because there is no normal, right? You can't tell if something is abnormal because there's zero uniformity among sites and site actions. If any of us were to open an email and receive a pop-up from an email asking for authentication, we'd say, what? No. But the typical user would shrug and think, oh, okay, whatever, I guess I need to log into this for some reason. Again, how would they know? I don't have any solution to this problem. Chrome, Firefox and Safari might simply block script execution within SVG images. Yes, please. If there was a toggle that I could turn on that would turn off script running in SVGs, I would turn that on or off or something. But our browsers are less the problem than email. In their write-up about detecting and mitigating this malicious misuse of SVG scripting,

Cloudflare's Cloudforce One folks wrote: Cloudflare Email Security has deployed a targeted set of detections specifically aimed at malicious emails that leverage SVG files for credential theft and malware delivery. And remember that all of those headlines I read before were about phishing. These detections inspect embedded SVG content for signs of obfuscation, layered redirection and script-based execution chains. They analyze these behaviors in context, correlating metadata, link patterns and structural anomalies within the SVG itself. These high-confidence SVG detections are currently deployed in our email security product and are augmented by continuous threat hunting to identify emerging techniques involving SVG abuse. We also leverage machine learning models trained to evaluate visual spoofing, DOM manipulation within SVG tags and behavioral signals associated with phishing or malware staging.
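As a rough illustration of what inspecting for obfuscation and layering can mean, the Python sketch below peels Base64 layers out of an SVG's text and looks for a handful of markers in each layer. This is not Cloudflare's method. The names `decoded_layers` and `suspicious_markers`, the marker list, the 24-character minimum and the depth limit are all assumptions picked for the example.

```python
import base64
import binascii
import re

# Runs of 24+ Base64 characters, optionally padded. The threshold is arbitrary.
B64_BLOB = re.compile(r"[A-Za-z0-9+/]{24,}={0,2}")

# Hypothetical markers; a real detector would use far richer signals.
SUSPICIOUS = ("<script", "<form", "password", "location.href", "eval(")


def decoded_layers(text: str, max_depth: int = 3) -> list[str]:
    """Decode Base64 blobs found in text, then blobs inside those, up to max_depth."""
    layers: list[str] = []
    frontier = [text]
    for _ in range(max_depth):
        next_frontier: list[str] = []
        for chunk in frontier:
            for blob in B64_BLOB.findall(chunk):
                try:
                    decoded = base64.b64decode(blob, validate=True).decode("utf-8")
                except (binascii.Error, UnicodeDecodeError):
                    continue  # not valid Base64, or not text once decoded
                layers.append(decoded)
                next_frontier.append(decoded)
        frontier = next_frontier
    return layers


def suspicious_markers(svg_text: str) -> set[str]:
    """Return the markers seen in the raw SVG text or in any decoded layer."""
    haystacks = [svg_text] + decoded_layers(svg_text)
    return {m for m in SUSPICIOUS for h in haystacks if m in h.lower()}


if __name__ == "__main__":
    page = '<form action="https://example.invalid"><input type="password"></form>'
    blob = base64.b64encode(page.encode()).decode()
    svg = ('<svg xmlns="http://www.w3.org/2000/svg">'
           f'<script>document.write(atob("{blob}"))</script></svg>')
    print(sorted(suspicious_markers(svg)))
```

The design choice here is to treat each decoded layer as new input, which is why a fake login form hidden behind `atob` still surfaces. String splitting, character-code tricks and remote loading would all get past it.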

Okay, in other words, this is not easy to fix. I would just say no. I would just turn this off. Once upon a time, back in the early days, when scripting was first happening, many of us old timers simply ran the NoScript browser extension to block any scripting from running on websites. We were like, no, thank you. We also noted that, over time, as sites became increasingly dependent upon scripting, that little NoScript add-on started causing more trouble than it was probably worth, and at the same time the security of our web browsers was steadily increasing. So it was probably good for us to run a no-scripting window for a while, but it became obsolete, and as browser security got a lot better, scripting became less of a concern.

The big problem that Cloudflare and all the other security companies are seeing is from SVGs in email. Disabling scripting for SVGs in email clients would seem to be a terrific first step. Given that the SVG spec included JavaScript on purpose, in the spec from the start back in 2000, and given that it's apparently being used for some legitimate purposes, I'm sure it's here to stay. But it might be nice to be able to turn it off, and I hope that the industry responds to this quickly and just starts saying no to running scripting in our SVG images. If things stopped running scripting, then designers would stop being able to rely on scripting in SVG. You really just have to decide that it's a bad idea to have it.
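For anyone who accepts SVG uploads or attachments, "just turn it off" can be roughed out in code. The Python sketch below removes script and foreignObject elements, on-event attributes and javascript: links before an SVG is stored or displayed. The function name `strip_scripting` and the block list are assumptions made for this example, and it is deliberately incomplete: it ignores CSS, external references and animation elements that can rewrite links, so a maintained allow-list sanitizer is the safer choice in practice.

```python
import xml.etree.ElementTree as ET

SVG_NS = "http://www.w3.org/2000/svg"
XLINK_NS = "http://www.w3.org/1999/xlink"

# Hypothetical block list for illustration; not a complete policy.
BLOCKED_ELEMENTS = {"script", "foreignobject"}


def local(name: str) -> str:
    """Drop the '{namespace}' prefix ElementTree adds to tag and attribute names."""
    return name.rsplit("}", 1)[-1].lower()


def strip_scripting(svg_text: str) -> str:
    """Return the SVG with scripting-related elements and attributes removed."""
    # Keep the usual prefixes on output. This mutates ElementTree's global registry.
    ET.register_namespace("", SVG_NS)
    ET.register_namespace("xlink", XLINK_NS)
    root = ET.fromstring(svg_text)

    # Pass 1: remove blocked child elements from every parent.
    for parent in list(root.iter()):
        for child in list(parent):
            if isinstance(child.tag, str) and local(child.tag) in BLOCKED_ELEMENTS:
                parent.remove(child)

    # Pass 2: remove event-handler attributes and javascript: links.
    for el in root.iter():
        for attr in list(el.attrib):
            value = el.attrib[attr].strip().lower()
            is_handler = local(attr).startswith("on")
            is_js_link = local(attr) == "href" and value.startswith("javascript:")
            if is_handler or is_js_link:
                del el.attrib[attr]

    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    dirty = ('<svg xmlns="http://www.w3.org/2000/svg" onload="alert(1)">'
             '<script>alert(2)</script>'
             '<a href="javascript:alert(3)"><circle r="5"/></a></svg>')
    print(strip_scripting(dirty))
```

On the serving side, the same intent can be expressed by only ever displaying user-supplied SVGs through an img tag, where browsers do not run their scripts.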

1:44:05 - Leo Laporte