Security Now 1027 transcript

Please be advised this transcript is AI-generated and may not be word for word. Time codes refer to the approximate times in the ad-supported version of the show

0:00:00 - Leo Laporte

It's time for Security Now. Steve Gibson is here with some shocking tales from the frontier of AI. He'll also talk about the status of Encrypted Client Hello. It seems like a good idea; maybe it's not. And what radio technology would be best if I wanted remote shutdowns of solar power inverters? All of that and more coming up next on Security Now. Podcasts you love.

0:00:28 - Steve Gibson

From people you trust.

0:00:31 - Leo Laporte

This is TWiT. This is Security Now with Steve Gibson, episode 1027, recorded Tuesday, May 27th, 2025: Artificial Intelligence. It's time for Security Now, the show where we cover your security, your privacy, how computers work and a whole lot more, with this cat right here. The cat's been drinking some milk. It is Steve Gibson. He's got it in his mustache. Steve Gibson, no, don't do it. He's got a lovely little froth catcher device on his lips.

0:01:11 - Steve Gibson

I do yes, it needs to be independently cleansed.

0:01:15 - Leo Laporte

I don't want to know how. No, you don't want to hear about that. So today the show is all about AI.

0:01:22 - Steve Gibson

No, no. Well, no show is all about AI. As things came together, there were a number of stories that were about privacy and security, and I ended up with maybe four or five different things, and I thought, okay, I'm just going to title this Artificial Intelligence. For a while, as I said to you before the podcast, I had Artificial Intelligence and I thought, no, that's a little too cutesy-poo.

0:01:56 - Leo Laporte

You know you could use AI to design the title. Just ask AI for an artificially intelligent title. Shall I look up some candidates for you?

0:02:06 - Steve Gibson

I don't know if you've spent any time with the O3 model, but whoa is it astonishing.

0:02:12 - Leo Laporte

I think there's some really good ones out there, yeah.

0:02:15 - Steve Gibson

Oh, wow. Anyway, we've got a bunch of stuff to talk about. There was no other overriding central event, although I actually already have some things queued up for next week that I will talk about at the end of this podcast. But I wanted to talk about the status of Encrypted Client Hello, ECH, which we did an entire podcast on, one of our deep dives, about 18 months ago toward the end of 2023. I ran across a security group in the business that noticed some recent changes and thought, what? And then did some of their own deep diving that I want to share. The feedback from our listeners has become just golden, which is why some of our podcasts have had a lot more of it. So they serve as talking points for answering questions like what radio technology would be best for remote inverter shutdown. It turns out that some DNS providers already do block newly listed domains, which is something we were talking about last week. It became clear that knowing when not to click a link can actually take some true understanding. Many people need to keep asking, because they're not the people who listen to this podcast, essentially. Also, why can losing a small portion of a large power grid bring way more of the grid down than one would think?

Where are we in the AI hype cycle? And is this the first? Maybe it's the last. We'll see. Speaking of hype, an AI system resorted to blackmail. What? Also, why are we so quick to imbue AI with awareness? It turns out that goes back a long way, way further than most people might imagine. Also, ChatGPT's latest o3 model ignored its orders to shut down, which raises some interesting new eyebrows. We're going to run out of eyebrows, Leo. And also, it turns out that Copilot, which Microsoft is now using with great abandon (remember, we recently heard that, what was it, a third of the new code is being written...), may not be making Windows code any better. Yeah, what a shock. We've got some details from GitHub which are a little concerning, and I stumbled upon an unfiltered and unrestrained LLM that I thought our listeners would probably have some fun playing with.

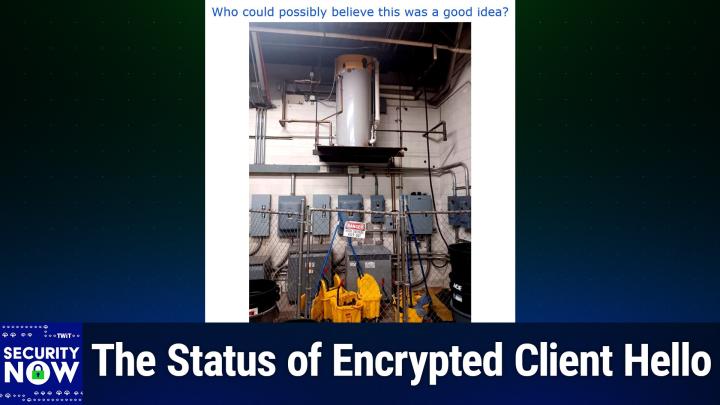

Of course, we've got a picture of the week that has already generated a week's worth of feedback from the people who received all the show notes yesterday around this time. It's surprising to me how many people have had the events surrounding this picture occur in their lives. Oh wow, I haven't seen it because I put myself in a soundproof booth.

0:05:39 - Leo Laporte

The minute your email comes in. So I am. I'm virgin ears and eyes.

0:05:44 - Steve Gibson

You and Lori's son impressed me with your speed of acquisition. I showed this to her son a couple days ago, and you would measure in milliseconds the amount of time it took him to process the photo and burst out in a belly laugh. And I thought, smart kid. Really smart kid.

0:06:05 - Leo Laporte

He's probably not a kid, but he's smart anyway. I did feed your show description into the AI and these are the titles it came up with: AI Hype, Hysteria and Hard Truths. Oh, I'm going to do that from now on. AI Hype, Hacks and Headaches.

0:06:21 - Steve Gibson

AI Security.

0:06:22 - Leo Laporte

Hype versus Reality. When AI Crosses the Security Line. This is my favorite: AI, Friend, Foe or Flaw. Oh, Leo, there's some good ones in here. Perplexity, huh? Yeah, I love Perplexity. I'm a big Perplexity fan. Really good, yeah. Well, we will get to the show. Whatever it's called, it's gonna be good. Yeah, I'm now.

0:06:43 - Steve Gibson

I'm annoyed. I call it artificial intelligence.

0:06:46 - Leo Laporte

You could have used AI. That's the new. I could have had a V8.

0:06:50 - Steve Gibson

I could have used AI. I could have used AI man.

0:06:55 - Leo Laporte

It's so great to have you on Security Now. Glad you're watching and, of course, we love our Security Now sponsors. This episode is brought to you by our friends at OutSystems. I love this idea. OutSystems has been around for more than 20 years. They were one of the first in the low-code space, but now, thanks to AI, OutSystems is even better. They are now the leading AI-powered application and agent development platform.

For more than 20 years now, the mission of OutSystems has been to give every company the power to innovate through software, and they saw a real opportunity here with this amazing AI plus low-code plus DevSecOps, to solve a conundrum that's been in the enterprise as long as the enterprise has been around: the old build versus buy conundrum. Right? When you need some software capability in the business, you have two choices. You buy some SaaS product off the shelf, and you can get it up and running quickly, but you're going to lose flexibility and you're also going to lose differentiation, because everybody else in your space is using it. Or you can do what we've done, to our great regret sometimes: build custom software at great expense of time and resources, plus a certain fragility to the resulting product. Well, now it's not build or buy. There's a third way. AI forges the way for another path, and I wish I'd thought of this. It's the fusion, as I said, of AI, low-code and DevSecOps automation into a single development platform. That gives your teams the power to build custom applications. They use AI agents, and they can do it as easily as buying generic off-the-shelf sameware, but you get something just right for what you need.

Plus, OutSystems software always comes with flexibility, security and scalability standard. It's built right in. With AI-powered low-code, teams can build custom, future-proof applications at the speed of buying, with fully automated architecture, security, integrations, data flows and permissions. It's all built in. Instead of greenfield AI vibe coding, you've got a great low-code platform that AI understands and can manipulate, that can create some great software for you and get all that additional benefit, right? OutSystems really is the last platform you'll ever buy, because you can use it to build anything. You can even use it to customize and extend your core systems. Build your future with OutSystems. Visit OutSystems.com/twit to learn more. That's OutSystems.com/twit. These guys have been around and they know they've got what you need. OutSystems.com/twit.

What a brilliant idea. Thank you, OutSystems, for supporting the, I think, pretty important work Steve does here at Security Now. All right, Steve, I'm going to go to the show notes. So I gave this picture the caption.

0:10:10 - Steve Gibson

Who could possibly believe this was a good?

0:10:13 - Leo Laporte

idea? Who could possibly believe. And I am going to, you could start the timer, scroll up and see a water heater floating in the air, because of course floor space is at a premium. Yeah, what's below it? Yeah, it even says danger, high voltage, keep out. And then, really, to add insult to injury, there's some mop buckets there too. Yeah, this.

0:10:44 - Steve Gibson

Yeah, this picture just keeps on giving. For those who are not privy to this photo, basically the picture is in two halves, an upper and a lower. Unfortunately, the upper is the upper as opposed to the lower. On the bottom of the photo, where a lot of the potential action will be happening, is a lineup of large high-tension, high-power, high-voltage breakers with those levers on the side for pulling the master switch.

0:11:27 - Leo Laporte

Looks like we've got some big power, like substation kind of transformer things. I like how they've protected it with the same kind of cyclone fence you'd find in your backyard. Oh yeah, it's even got a gate. Yeah, yeah, danger, high voltage, keep out.

0:11:39 - Steve Gibson

And the punctuation to this is that, on a little stand, a ledge jutting out from the wall, floating above all of this, is a water heater. I mean, some...

0:12:01 - Leo Laporte

Well, thank God they never leak.

0:12:07 - Steve Gibson

Wow, I mean, residential heaters have to be strapped down to the house now in California. Yeah, three straps because of the earthquakes. And in a, um, a tray. Yeah, an aluminum tray, thank you, with a drain, because, yes, they are in fact known to have a limited lifetime and they will start leaking. This can't be real. Oh, Leo, this can't be real. It's just, oh my God. And what was interesting, as I mentioned to you before we began, is this photo went out. Of course, it's the picture of the week, so it was featured in the show notes. I got a bunch of feedback from people who said, oh yeah, I've seen this. I mean, they've got a whole bunch of their own anecdotes, variations on this theme of something wet suspended over something highly electric. And I love it, because the cyclone fence...

0:13:15 - Leo Laporte

As Burke says, will just kill you faster. That's as you rush in to mop up the water.

0:13:23 - Steve Gibson

Oh, great, great, great, classic picture of the week today.

[...] dives into the continuing privacy vulnerability that's inherent in most TLS connections today, where SNI, which is short for Server Name Indication, allows a single IP address to be the home for any number of websites. Now, backing up a little bit: as we know, TLS connections require web servers to return their valid certificate to prove their identity. If that wasn't done, then there'd be no protection from man-in-the-middle attacks. A man-in-the-middle could return a spoofed certificate and we would think we'd connected to the real server rather than mistakenly connecting to something that is going to intercept all of our traffic. So TLS connections require web servers to return a valid certificate to prove their identity. But many sites are now hosted at the same IP address, which is way more common than not, probably because this is one of the solutions for the IPv4 exhaustion problem. It turns out you don't need your own IP per server. I am even now sharing a few IPs among multiple services.

So the question then is, how does such a hybrid website or server collection know which certificate to return to the client that's wanting to connect? The answer is that the user's TLS client, the web browser, indicates the name of the domain it wishes to connect to when it sends its first TLS handshake message to the hosting site that has many domains behind it. Now, the problem with doing that is that this must take place, by definition, before the encryption keys have had a chance to be exchanged. You're telling the remote site which server you want to receive its certificate from. So obviously you don't have encryption yet, because it's not until you tell it that it knows which certificate to send you. So the problem is then that this SNI information, this Server Name Indication domain name, will be exchanged in the clear and thus completely open for any spoofing or snooping ISP or hotspot operator to observe. Now, as its name suggests, ECH, Encrypted Client Hello, is the next-generation technology to solve this problem, and again, we talked about it about 18 months ago.
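To make the "exchanged in the clear" point concrete, here is a minimal Python sketch of what a passive observer can do with the first handshake message. It is not taken from the show or the report: `build_client_hello` and `extract_sni` are hypothetical helper names, the ClientHello is a stripped-down synthetic one built locally (zeroed random, one cipher suite, one extension), and real captures would need more defensive parsing.

```python
import struct


def build_client_hello(server_name: str) -> bytes:
    """Build a minimal, synthetic TLS ClientHello record with one SNI extension."""
    name = server_name.encode("ascii")
    # server_name entry: name type 0 (host_name), 2-byte length, then the name
    sni_entry = b"\x00" + struct.pack("!H", len(name)) + name
    sni_body = struct.pack("!H", len(sni_entry)) + sni_entry
    # Extension header: type 0 (server_name) and body length
    extensions = struct.pack("!HH", 0x0000, len(sni_body)) + sni_body
    body = (
        b"\x03\x03"                           # legacy version field
        + bytes(32)                           # random (zeroed for the sketch)
        + b"\x00"                             # empty session id
        + struct.pack("!H", 2) + b"\x13\x01"  # one cipher suite
        + b"\x01\x00"                         # one compression method: null
        + struct.pack("!H", len(extensions)) + extensions
    )
    handshake = b"\x01" + len(body).to_bytes(3, "big") + body
    return b"\x16\x03\x01" + struct.pack("!H", len(handshake)) + handshake


def extract_sni(record: bytes):
    """Return the plaintext server name from a ClientHello record, or None."""
    if len(record) < 9 or record[0] != 0x16 or record[5] != 0x01:
        return None                      # not a handshake record / not a ClientHello
    pos = 5 + 4                          # skip record header and handshake header
    pos += 2 + 32                        # skip version and random
    pos += 1 + record[pos]               # skip session id
    (suites_len,) = struct.unpack_from("!H", record, pos)
    pos += 2 + suites_len                # skip cipher suites
    pos += 1 + record[pos]               # skip compression methods
    (ext_total,) = struct.unpack_from("!H", record, pos)
    pos += 2
    end = pos + ext_total
    while pos + 4 <= end:
        ext_type, ext_len = struct.unpack_from("!HH", record, pos)
        pos += 4
        if ext_type == 0:                # server_name extension
            # skip list length (2 bytes) and name type (1 byte)
            (name_len,) = struct.unpack_from("!H", record, pos + 3)
            return record[pos + 5 : pos + 5 + name_len].decode("ascii")
        pos += ext_len
    return None


if __name__ == "__main__":
    print(extract_sni(build_client_hello("example.com")))
```

No key material is needed anywhere in `extract_sni`, which is the whole problem: anyone on the path can read the name with a few offsets.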

I just ran across an interesting, just-published research report by the mobile security company Corrata, C-O-R-R-A-T-A, which was titled Living with ECH. What I thought was so interesting is that they bring some perspective from the field, whereas what we talked about 18 months ago was pure protocol theory. So what's really going on? Here's what they wrote. They said last year our security research team noticed an uptick in detections of the domain cloudflare-ech.com across our customer base. So I should note that they're a mobile security company. Their customers are running their software in their mobile devices, which provides security. That software is monitoring what they're doing to protect them and also generating telemetry back to Corrata. So their team sees cloudflare-ech.com suddenly start to appear. They said the numbers were small, low thousands among hundreds of millions of domain scans, but nonetheless intriguing. Did this herald the prime-time arrival of Encrypted Client Hello, a protocol which information security professionals feared would make widely used security tools blind to large swaths of Internet traffic? Now that's a perfect example of something we didn't talk about or think about. It's like, why is this not really good? Well, it turns out security tools are taking non-malicious advantage of the fact that they can know what's going on. So that's going to go away once ECH takes hold. They said, for example, it was readily apparent that the spike in cloudflare-ech.com detections was directly related to Internet infrastructure provider Cloudflare's decision to support ECH by default on its most popular plan. They said our researchers wanted to investigate whether this change represented a tipping point in ECH adoption or just a small step change related to the policy of a single infrastructure provider. So then they give us a little bit of background from their perspective.

They wrote Encrypted Client Hello is an extension to the TLS 1.3 Internet encryption standard. TLS, or Transport Layer Security, is the standard used to safeguard communications between an endpoint device and the web server it's connected to. That's, of course, one use case. You could use it for all kinds of things. It is the standard indicated by the familiar padlock symbol in browsers and the HTTPS designation in front of web addresses. TLS now protects the vast majority of Internet traffic. A variety of sources put current adoption well above 90%. Of that traffic, the vast majority uses TLS 1.3, and I'm not quite sure that's the case. Maybe the vast majority can. It's not clear yet whether the server side has caught up. As I recall, last time we looked, 1.2 was still widely represented. But anyway, they wrote that the most recent version of the standard is 1.3.

ECH is designed to increase user privacy by encrypting the content exchanged between clients and servers when they're establishing an encrypted connection. Without ECH, which is again an optional add-on extension to TLS 1.3, a client will reveal the domain of the website it's attempting to visit before the encrypted connection is established, by disclosing the domain in the Server Name Indication, SNI. This means that an entity with visibility of a user's internet traffic, for example an internet service provider, mobile operator, enterprise security team and/or bad actor, can see that user's destination, even when the user and the server take precautions to avoid this. So, increased user privacy: what's not to like? Unfortunately, in the view of many enterprise information security professionals, increased privacy will come at the cost of compromised [...] in order to identify the destination of traffic which would otherwise be hidden because of the use of encrypted DNS and network edge services like Cloudflare. Widespread adoption of ECH would severely curtail the ability of enterprises to identify and block connections to malicious domains. A particular problem arises for regulated industries who need to selectively decrypt TLS traffic for compliance purposes. Unable to do so selectively, they may have little choice but to decrypt all of it. Given the likely impact, it's important that we have a clear understanding of the current state and likely future trajectory of the rollout of ECH. So again, lots of things we had not thought about before.

Transport Layer Security has revolutionized the confidentiality of internet communications. Before widespread use of encryption, many legitimate and illegitimate actors had the potential to snoop on internet traffic and even to modify it. However, information leakage remains. Some is inherent in the way the internet works. For example, the source and destination addresses and other networking metadata will always remain in the clear. But other leakage is due to privacy gaps in the protocols. Two of these are of particular importance: client DNS queries and TLS client hellos, those being the two that are still outstanding. They said DNS queries are the way a device translates a request to visit a named website, google.com, into the IP address, for example 74.125.197.113, which it needs to connect to the site. It sends the query to a DNS resolver, normally one which is provided by its ISP. In general, these DNS requests are sent in the clear, meaning that the ISP and anyone else with access to the traffic can see the website the user is looking to access. ISPs, governments and enterprise IT teams can use this information to block access to sites deemed unacceptable or malicious.

Encrypted DNS, and they say, i.e., DNS over TLS, also known as DoT, or DNS over HTTPS, known as DoH, is a way for users to hide this information. Typically, they do this by replacing the default DNS on their device with an encrypted service, such as 1.1.1.1 (Cloudflare) or 8.8.8.8 (Google). All major operating systems and browsers can be configured to use encrypted DNS. Well, not Windows 10, but Windows 11 does have it natively, and there are proxies you can add onto Windows 10, and browsers have it built in.

Corrata estimates that 20% of enterprise devices use encrypted DNS. They said encrypted DNS, eDNS, is not turned on by default for a number of reasons. ISPs have a commercial interest in understanding how their subscribers use the Internet. Access to DNS queries is very useful in this regard. Governments also have an interest in being able to passively monitor and potentially restrict access to illegal, malicious or unacceptable content. Enterprise information security teams also have legitimate reasons for wanting to block access to content that could represent a threat, for example phishing or malware download sites. They said device manufacturers want their devices to work seamlessly in all environments and are reluctant to turn on a feature which might lead to a poor user experience in some circumstances. And of course, we've talked about how there are DNS services, like family plans, that just will not return the IPs of not-safe-for-work style websites. Those sites don't appear to be on the internet if you use those DNS services. Anyway, these guys wrote:

Unfortunately for users who want to hide their internet activity from prying eyes, even with eDNS enabled, they cannot remain private. This is because of the information which is exchanged when their device establishes a TLS connection to the server it wants to access. The TLS client hello message sends the domain name of the server they're connecting to in the clear, making it visible to any entity monitoring network traffic. It is this gap which Encrypted Client Hello attempts to address. Encrypted Client Hello works by encrypting the client hello message, which is the first message sent by the client in a TLS handshake. Traditionally this message, including the Server Name Indication, as they said, is sent in plain text, allowing network observers to see which specific domain a user is trying to access, even when the rest of the connection will then be encrypted. ECH addresses this by encrypting the entire client hello using a public key obtained via DNS, specifically through the HTTPS resource record. That's DNS resource record type 65.
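As a rough illustration of the "resource record type 65" detail, the following Python sketch builds the wire-format DNS question a client would send to ask for a domain's HTTPS record. The function name `build_https_query` and the fixed query ID are assumptions for illustration only; the sketch deliberately stops before sending anything or parsing a reply, so the ECH key material itself is not shown.

```python
import struct

HTTPS_RR_TYPE = 65   # DNS resource record type "HTTPS", as mentioned above
CLASS_IN = 1         # the Internet class


def build_https_query(domain: str, query_id: int = 0x1234) -> bytes:
    """Build a wire-format DNS query asking for the HTTPS record of a domain.

    query_id is an arbitrary illustrative value; real resolvers randomize it.
    """
    # Header: id, flags (recursion desired), 1 question, no other sections
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    # QNAME: each label prefixed by its length, terminated by a zero byte
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in domain.rstrip(".").split(".")
    ) + b"\x00"
    question = qname + struct.pack("!HH", HTTPS_RR_TYPE, CLASS_IN)
    return header + question


if __name__ == "__main__":
    print(build_https_query("example.com").hex())
```

Note that if this question travels over ordinary unencrypted DNS, the domain name in `qname` is visible to the same observers ECH is trying to defeat, which is why the report pairs ECH with encrypted DNS.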

The client sends an outer client hello with a benign or shared SNI, Server Name Indication, because, again, that's not going to be hideable. So it uses sort of a fake SNI and includes the encrypted inner client hello as an extension. So basically it's sort of a Trojan horse. We don't care about this outer client hello, and that's where that cloudflare-ech.com appeared. That's commonly used in that outer wrapping, the kind of we-don't-care-about-this client hello packet. That's what everybody sees. And you know, it's a kludge, right? But unfortunately first SSL and then TLS wasn't designed for this, so it had to be hung on later, after the spec was finished. And the problem was you don't want to upset the existing protocol with something that it doesn't know about, so you send it a client hello that looks legitimate. But in the extension fields it says, oh look, here's a blob which, if I have the matching private key to the public key that was attached, I'm able to decrypt.

So they said the client sends an outer client hello with a benign or shared SNI and includes the encrypted inner client hello as an extension. Only the gateway to the intended server, which holds the corresponding private key, can decrypt this inner message and complete the handshake securely. They said it should be noted that this necessarily depends on ECH-enabled traffic being mixed with other ECH traffic going to different servers but through the same gateway. Otherwise no privacy gains are achieved. I'll explain that. In order to achieve its goal, ECH requires traffic to go through gateways controlled by large content delivery networks such as Cloudflare, who will still have access to the SNI. The privacy gains are therefore limited. Okay, now to pause for a second. Another way of putting this is that encrypting your client hello handshake with GRC.com is not going to buy you much privacy, since there's no one home at 4.79.142.200 other than GRC. So anyone who's connecting to that IP is definitely known to be talking, or planning to talk, to GRC.com, because that's the only server at that IP. But the same is not true, by a huge measure, when connecting to a website that's fronted by Cloudflare, since a bazillion different websites will all be sharing Cloudflare's relatively small collection of destination IP addresses. So you're hiding among the crowd. No one knows where you're going once you go through Cloudflare's entry gate.
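The "hiding among the crowd" argument can be sketched as a tiny Python model of what an observer who sees only destination IP addresses can infer. Everything here is illustrative: `candidate_sites` is a hypothetical helper, the GRC address is the one quoted above, and the shared address and the `*.example` site names are made-up placeholders rather than real Cloudflare data.

```python
from collections import defaultdict


def candidate_sites(hosting: dict[str, str]) -> dict[str, set[str]]:
    """Group sites by IP: the set an observer must guess among for each address."""
    by_ip: dict[str, set[str]] = defaultdict(set)
    for site, ip in hosting.items():
        by_ip[ip].add(site)
    return dict(by_ip)


# Hypothetical hosting table: one dedicated IP, one shared front-end IP.
HOSTING = {
    "grc.com": "4.79.142.200",            # sole tenant of its address
    "site-a.example": "203.0.113.10",     # placeholder shared CDN address
    "site-b.example": "203.0.113.10",
    "site-c.example": "203.0.113.10",
}

if __name__ == "__main__":
    for ip, sites in candidate_sites(HOSTING).items():
        # With ECH hiding the SNI, the IP is all the observer has left.
        print(ip, "->", len(sites), "possible destination(s)")
```

When the candidate set has one member, encrypting the client hello hides nothing; the privacy gain scales with how many sites share the front-end address.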

The Corrata report continues. In our research, all outer client hello messages observed use the same SNI, cloudflare-ech.com. This was true, they said, whether or not the website used Cloudflare's infrastructure. This makes all websites using ECH indistinguishable from each other. This is possible because the content of this field plays no part in the TLS handshake, which I think is really interesting. So maybe that's going to kind of become a de facto standard. Okay, so where do we stand regarding the adoption of this?

They report that for a website owner to offer ECH natively, they will need to use a DNS name server and a TLS stack that support it. Today, that support is limited, and for this reason the simplest way to enable ECH on your website is to work with a CDN that supports it. That is, put yourself behind a big CDN. In practical terms, they said, that means using Cloudflare. For an end user to take advantage of ECH, they will need to use a browser, Chrome, Firefox and so forth, that supports it. They will also need to configure their browser or whatever device to use encrypted DNS, both to hide their DNS queries and to access an ECH-compatible resolver such as 1.1.1.1 or 8.8.8.8.

They said our research aimed to establish how often these connections apply to enterprise traffic. Our findings are based on analyzing billions of connections made by devices running Corrata's threat detection and response solution. Corrata's software is used to protect iOS and Android devices and is representative of that important segment of enterprise internet traffic. Corrata has visibility of DNS query and TLS connection metadata for all these connections and has tracked the number of successful ECH connections created between January and March of 2025, this year. Of the top 1 million websites, slightly less than 10% support ECH. So of the top million, just less than 10% support ECH at all.

With a tiny number of exceptions, all of these sites use Cloudflare's infrastructure, underlining the importance of Cloudflare for ECH adoption, but also highlighting the lack of support from other infrastructure providers. They said, looking at the end user side, we see major gaps in potential ECH support, meaning on the client side. The first carve-out is for Apple devices. iOS does not support ECH. On Android devices, we see that 30% of users have both configured their browser and/or device to use encrypted DNS and are using a browser, Chrome in the vast majority of cases, of course, on Android, that is ECH compatible. So 30% of Android, zero of iOS because it doesn't support ECH at all. Taking the server and client support proportions together would suggest around [...] 0.06% of connections actually use it.

That's pretty close to nothing, yeah. And so, wow, 0.06% of connections actually use it. This underperformance is due to the fact that Cloudflare sites are not distributed evenly across the popularity tiers. Coverage falls to 3% when you look at just the top 1,000 sites and 1% in the top 100. So I have a chart in the show notes here on page five, which is really interesting. There's not even a bar for sites one through a hundred, the top 100. They've got it labeled one percent, but something's wrong with the chart, because the three percent bar is a big guy. It's a lot bigger than 1%.

Yeah, it's certainly more than three times. Nothing but of the top 1,000, aggregate 3%. Then from 1,000 to 10,000, it jumps to 7%. From 10,000 to 100,000, that's our biggest bar. There's 10% support there. And then from 100,000 all the way to a million aggregate that's 9%. But overall 0.06% of connections are actually using it.
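To put the quoted percentages side by side, here is a small Python sketch of the arithmetic. The inputs are the figures quoted in this segment (just under 10% of top sites, 30% of Android users, no iOS support, 0.06% of connections observed); multiplying the server and client shares is this sketch's own back-of-the-envelope assumption of independence, not a number taken from the report, and `naive_ech_share` is a hypothetical helper name.

```python
def naive_ech_share(server_share: float, client_share: float) -> float:
    """Fraction of connections where both ends could use ECH, assuming independence."""
    return server_share * client_share


SERVER_SHARE = 0.10          # "slightly less than 10%" of the top million sites
ANDROID_CLIENT_SHARE = 0.30  # Android users with encrypted DNS and a compatible browser
IOS_CLIENT_SHARE = 0.0       # iOS does not support ECH
OBSERVED_SHARE = 0.0006      # 0.06% of connections actually using ECH

android_ceiling = naive_ech_share(SERVER_SHARE, ANDROID_CLIENT_SHARE)
ios_ceiling = naive_ech_share(SERVER_SHARE, IOS_CLIENT_SHARE)
shortfall = android_ceiling / OBSERVED_SHARE

if __name__ == "__main__":
    print(f"naive Android ceiling: {android_ceiling:.1%}")
    print(f"naive iOS ceiling:     {ios_ceiling:.1%}")
    print(f"observed:              {OBSERVED_SHARE:.2%}")
    print(f"ceiling / observed:    about {shortfall:.0f}x")
```

The comparison is rough, since the observed figure covers the whole device population while the ceiling is Android-only, but it shows why the report attributes the gap to ECH-enabled sites being concentrated in the less-visited popularity tiers.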

So that's how many connections actually have their TLS handshake encrypted with ECH. And I mean, it's not going to get you very far, unfortunately. What about the Cloudflare side? Which sites are using ECH? They said Cloudflare's ECH site population exhibits other characteristics.

Malicious and risky sites are heavily overrepresented. In total, about 17% of ECH-enabled sites fall in those categories, risky or malicious. And why, Leo? Because they want to hide. They want the protection that ECH provides. They said our analysis of phishing detections shows that over 90% of phishing uses Cloudflare's infrastructure. Wow. In addition to the anonymity provided by ECH, these sites take advantage of other Cloudflare features. For example, the CAPTCHA page can be used to direct desktop traffic to the legitimate site, while mobile traffic is sent to the fake one. Alternatively, traffic not coming from the targeted country may be redirected to the legitimate site. These are deliberate tactics to avoid detection by security providers. We have a pie chart there that just shows that malicious is 9%, inappropriate one way or the other is 8%. So together, 17% of Cloudflare's total traffic.

Okay, so where do we go from here? So far it all seems rather depressing. We have the technology, but very few are choosing to use it. It does come from massive website aggregators that offer destination privacy by virtue of the fact that the destination could be any of their website customers. So the Corrata report says the combination of the low levels of traffic which use ECH, together with the high level of malicious and risky sites within the ECH-enabled population, will persuade many organizations to block access to sites using ECH absent any further knowledge of the content of the site. Which is frightening. It's like, wait, you're saying we can't use any of this privacy-enhancing technology, period, because not that many sites use it? We wouldn't be losing much if we blocked it, and the chances are nearly one in five that we would want to block it because of where you're trying to go, and we can't tell if we don't block it. Yikes.
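The blocking logic described here can be sketched as a simple policy function in Python. This is a hypothetical illustration, not Corrata's product logic: `decide`, the `block_ech` switch and the verdict strings are invented names, and the only detail taken from the report is that ECH connections were observed with the shared outer SNI cloudflare-ech.com.

```python
ECH_PUBLIC_NAME = "cloudflare-ech.com"  # outer SNI the report says it observed


def decide(observed_sni: str, blocklist: set[str], block_ech: bool) -> str:
    """Return a verdict for a connection based only on its visible (outer) SNI."""
    name = observed_sni.lower().rstrip(".")
    if name in blocklist:
        return "block"                 # known-bad destination, visible in the clear
    if name == ECH_PUBLIC_NAME:
        # The real destination is hidden, so the tool cannot check it.
        return "block" if block_ech else "allow-blind"
    return "allow"


if __name__ == "__main__":
    bad = {"phish.example"}  # placeholder blocklist entry
    for sni in ("grc.com", "phish.example", "cloudflare-ech.com"):
        print(sni, "->", decide(sni, bad, block_ech=True))
```

With `block_ech=False` the tool has to wave through a population in which, by the report's numbers, roughly one site in six is risky or malicious; with `block_ech=True` it gives up very little legitimate traffic at today's adoption levels. That asymmetry is the argument being made.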

They said such an approach is defensible from a user experience perspective while ECH support remains at such low levels as they've identified in their research. They said, so how quickly might that change? Adoption can be driven from either the client or the server side. Well, like, hello, Apple. They said there are a number of factors which would drive adoption on the client side. The first would be for Safari to support the standard. Private Relay is Apple's subscription-based privacy-enhancing technology, and it is unlikely to proactively support a potential alternative. Well, that's interesting. So ECH may be viewed by Apple as a competitive threat, and so it's like, no, we're going to give you Private Relay.

A second would be for Chrome to enable encrypted DNS by default. They said this is more likely. Google would lose nothing with such an approach and it might disadvantage competitors who are seeking to monetize internet usage information. Our estimate is that, were Chrome to make encrypted DNS a default, it would likely double the proportion of TLS connections using ECH. But so what? Up to 0.12 percent, great. But such a change, they wrote, would be a significant market intervention and would not be welcomed by mobile carriers, who have influence over Google due to their role in handset sales.

The position in relation to Android is also not positive from an ECH adoption perspective. To understand why, we must first look at how encrypted DNS is handled at the device-wide level in Android. No major manufacturer has enabled DNS encryption by default. Users have the option to turn on private DNS. Once enabled, the device will encrypt all DNS queries using the DNS over TLS, the DoT, standard. However, device-wide DoT is not properly compatible with ECH for a variety of reasons. Furthermore, the TLS stack within Android would need to be enhanced to support ECH, to allow the 90% of connections which are not browser related to make use of the privacy-enhancing standard. Meaning, if only the browser, if only Chrome on Android knows how to use ECH, well, that's 10 percent of all TLS use for Android. The other 90% would still be out in the cold. It needs to be done device-wide in order to put a wrapper around the user's entire experience and to give them true privacy.

They said for server-side adoption to increase, you would need to see wholesale migration to Cloudflare (which they have in parens as "unlikely") or default support from other content delivery networks. 23.6% of the top 15 million websites use a CDN. So nearly one quarter of the top 15 million websites currently use a CDN. But that leaves three quarters that don't, the three quarters where the IP address you go to tells everybody where you're going. They said this understates their importance, as CDN penetration is particularly high among the most popular sites. The market is dominated by Cloudflare, Fastly, Amazon and Akamai. CDNs other than Cloudflare have so far announced only tentative steps towards ECH support, with no suggestion of default enablement. That said, ECH adoption is a positive for the CDNs. The complexity of implementation means more websites will opt to use CDN services. At a more strategic level, the CDNs would become the only infrastructure players with widespread visibility of end-user application usage. And their report finally offers some interesting conclusions.

They said the spike in detections of the cloudflare-ech.com domain observed in the latter part of 2024 was the catalyst for this research. We wanted to understand what the implications of this new phenomenon might be for enterprise information security. A rapid increase in the use of Encrypted Client Hello would mean that it would no longer be possible to directly detect the destination of much internet traffic. Security tools designed to keep enterprises safe would lose some visibility that they rely on. And now we understand. Unfortunately, despite what brought this to their attention, none of this is happening. They said: Our findings indicate that this visibility apocalypse is not in fact imminent. The fact that large elements of the ecosystem are not moving to quickly support ECH means that the low levels of penetration we see today are unlikely to change rapidly.

There are major gaps on both the client and infrastructure side. On the client side, you need support for both DoH and ECH. Again, not DoT; that's not compatible with ECH. So you need DNS over HTTPS, which isn't available. Device-wide support for this combination is non-existent for Android and iOS, and this is unlikely to change in the short or medium term. Lack of support from Safari is a big gap on the browser side. Chrome offers support for DoH and ECH, but not by default, and we know what that means. Most people won't have it turned on.

On the infrastructure side, they conclude, Cloudflare is the only provider supporting ECH today. And remember, it doesn't really do you much good unless you're behind a CDN, because otherwise everybody can see where you're going by IP. Cloudflare's promotion of the standard is part of its privacy-first positioning, and support is baked into its tech stack. Other providers are not in the same market or technical position. It would be complacent to expect this position to continue longer term, as ECH adoption offers significant market opportunities for the CDN industry. That is, you know, offering privacy. A sigh of relief, but continuing to track this space is no longer optional. It needs to be done.

So I think the bottom line here is that we have another example of adoption inertia, where there's no overwhelming incentive to make any changes. So, you know, there's zero user interface visibility into any of this, for one thing. Users don't get any benefit that they can see. They have no idea what's going on, so they're unable to drive any change. And even if they could, what's needed is the underlying technology support, which is missing. And even when all the technology is in place, only sites behind large site aggregators obtain the benefit, and those include the disreputable sites deliberately operating behind Cloudflare for the purpose of using ECH to prevent their detection, filtering and blocking. And even if ECH support might be incidental for all those sites, they're still obtaining ECH's benefits, which help them to remain hidden.

So, as we've often seen, obtaining true privacy on the internet is, overall, a heavy lift. In ECH we have a terrific technology, but it's one that requires explicit DNS support to allow a browser to obtain a site certificate before receiving it from the site, as well as support by each end. And if the user's browser is not also encrypting its DNS queries, then those required DNS lookups are also leaking their intended destination. So it feels as though ECH will continue to gradually, very gradually, seep into the internet's infrastructure. Someday in the far future, all web browser DNS will be fetched over privacy-enforcing TLS, adding the HTTPS certificate to DNS records will be standard behavior, and all clients and servers will be supporting ECH. Someday it'll probably happen on its own, but, you know, it's nothing that anybody can do. I mean, we as users can't drive this. It's just, you know, the internet fights privacy. It wasn't ever designed for it. We've been layering on, you know, adding widgets to it in order to increase it and create it, but what we are able to do is limited.

0:51:20 - Leo Laporte

Is it as good as ECH, their Private Relay and all that? Yeah? Okay. So that's, in a way, the way to do it, because then it's Apple, it's not a phishing scam, and you get the privacy, right? But of course Apple's not the entire world. No, I understand. And maybe if Google did it on Android, then you'd have much of the mobile world.

Anyway, right, it's not going to get desktop, but maybe that's, at this point, not important. I don't know. It's a thought. Would you like to do a little break here? Oh, I'm parched. I thought you might be. I am here to save your parched vocal cords, my parchment.

Our show today brought to you by something we've talked about many times, Zero Trust, and, in particular, the best company to put Zero Trust in action in your business: ThreatLocker. First, let me talk about why you need something like Zero Trust. Clearly, if you listen to the show, you know ransomware is rampaging through the business world. Phishing emails, infected downloads, malicious websites, I mean, even RDP exploits. But you don't want to be the next victim, do you? That's why you need ThreatLocker's Zero Trust platform, and here's the key. It takes a proactive (and this is it, these are the three words you want) deny-by-default approach. The stop sign is up. The red light is on. The bar is closed by default. That blocks every unauthorized action, protecting you from both known and unknown threats, because nothing can get through unless you have specifically, explicitly authorized it. So zero days, who cares? They don't get in. You don't authorize that. ThreatLocker does it, and they do it affordably, they do it right, they do it easily. And I tell you, if you are an infrastructure company, if you're a mission-critical company, you need something like this. That's probably why JetBlue uses ThreatLocker. And the Port of Vancouver, talk about infrastructure. They can't afford to go down. They use ThreatLocker. ThreatLocker shields them and can shield you from zero-day exploits and from supply chain attacks, while providing complete audit trails for compliance. It's kind of a side effect, right? You know exactly who used what, when and where.

ThreatLocker's innovative ringfencing technology isolates critical applications from weaponization. It stops ransomware cold. It also, and this is really important, limits lateral movement within your network. Somebody gets in, it doesn't mean they can go anywhere. They can only go where they're allowed, right? ThreatLocker works across all industries. It supports Mac environments and, of course, Windows, so it's perfect for everybody. You'll get 24-7 US-based support and, again, this is kind of a side effect, but it's a big benefit: you get comprehensive visibility and control.

Let me give an example. This is another mission-critical situation: Mark Tolson. He's the director for the City of Champaign, Illinois. I mean, look, cities are really targets for ransomware, right? Mark's going, what do I do? What do I do? He says ThreatLocker provides that extra key to block anomalies that nothing else can do. If bad actors got in and tried to execute something, I take comfort in knowing ThreatLocker will stop that. That's the key.

Stop worrying about cyber threats. Get unprecedented protection quickly, easily, cost-effectively. In fact, it kind of blew me away how affordable ThreatLocker is. Visit ThreatLocker.com/twit. You can find out. You can also get a free 30-day trial and, by the way, it's so easy to implement that, before the show's over, you'll be using it. To learn more about how ThreatLocker can help mitigate unknown threats and ensure compliance, visit ThreatLocker.com/twit. If you are at the RSA conference, RSAC, if you are talking to your fellow CISOs, I know you saw ThreatLocker. I know you're hearing about ThreatLocker. This is the one you want. ThreatLocker.com/twit. Thank them so much for supporting Steve's work. They're big believers in Security Now. We're glad they're here.

0:56:14 - Steve Gibson

Steve, continue, Mr. Parch. That was so long, but I wanted to cover that because I thought that was really so much interesting information about how, unfortunately, we have a very privacy-forward protocol whose lack of adoption means that there really isn't a way to hide where we're going.

0:56:37 - Leo Laporte

This is why we can't have nice things.

0:56:41 - Steve Gibson

The bad guys use them. Yeah. Anyway, so I've got some things to talk about based on feedback from our listeners, which, as I said, is just fantastic. At this point I'm wading around in feedback, so I've certainly got lots to share. Michael wrote: Hi, Steve.

It sounds like the inverters from China that you spoke about last week use internet connectivity as a backdoor to potentially be shut down by remote command. Actually, we know that's true because of what happened last November, where a remote command was used to cause some blackouts here and in other countries. He said: Now, if I was looking to install a Trojan horse off switch, I'd use a tiny radio receiver in my inverters that scans a specific, rarely used VHF frequency for a specific pattern or code. If China did this, then they just have to launch one of their balloons with a transmitter sending out that code over radio waves. Ham radio balloons can cover multiple states with their signals, and I believe China's recent balloon flights over America were even higher than the typical ham radio balloon. Satellites could also broadcast a kill switch VHF signal. Such a system would be undetectable and unstoppable, no firewall needed, unless the hardware was reverse engineered. Who knows, maybe this system already exists in our infrastructure. After all, China has flown balloons over our nation, perhaps as a test for their virtual EMP attack in a future conflict.

Thanks for the show, Steve and Leo. I've been a big fan for decades. Michael. So I've been thinking about this too, ever since the story surfaced that was our headline topic last week. What occurred to me is that it's now possible to create very inexpensive satellite radio receivers. You know, all cars have them for your optional, you know, SiriusXM.

Many years ago the housing complex I lived in at the time (excuse me, I got a tickle) upgraded its aging water sprinkler infrastructure to use weather data from a satellite data service of some sort. I remember looking at the new sprinkler controllers, and they had this little black puck on top of them, and it turns out that's what that was. So if I wanted to be nefarious, I think I'd probably piggyback on an existing global data transmission facility (I'm sure that commercial SiriusXM-style radio is just one of many, because this weather information was being received no matter where you were in the country) and then simply arrange to have an off switch encoded into the data stream somehow. So let's just hope. As I said, we talked about this last week. The good news is the right people are aware of this possibility. The bad news is tensions are high between China and the US at the moment, which is really disappointing and unfortunate, but it's the case. And so I would say at this point, if any power system that an individual is responsible for gets blacked out as a consequence of an inverter being shut down remotely, it's hard to blame anyone other than the operators of that service, because it should be possible to pop the lids on all this stuff and find out what's going on.

Marshall M said: Hi, Steve. Related to the new domain registration blocking question, this is currently possible using nextdns.io or controld.com. He said, I'm sharing a couple of screenshots of the rules for each. I'm looking forward to more episodes beating up the podcast. That's all he said, but I put the screenshots he shared in the show notes.

One shows a screen with new-domain filtering options, and it says: Contains new domains that were just registered. Many of these could be used for badware distribution, procedurally generated domains for malware command and control servers, etc. This could also cause collateral damage and block legitimate brand-new domains. And then you have a choice of how recent: last week, which it says blocks domains that were registered in the last week, presumably seven days, and last month, which blocks domains that were registered in the last month. And there's a page of switches that you're able to turn on and off.

You can enable cryptojacking protection and DNS rebinding protection. In fact, that's one of the things that GRC's DNS Benchmark has always checked for. We talked about DNS rebinding attacks many, many years ago. Also, you can enable homograph attack protection, typosquatting protection, domain generation algorithms protection and, finally, block newly registered domains. So, thank you, Marshall. I'd forgotten about those options, which are built into today's more feature-packed DNS services. And seeing him mention controld.com, I don't think we've ever talked about that service on the podcast, but it put a smile on my face, since their DNS resolvers are part of the updated DNS Benchmark, which I and all of the people who've been testing it for the last six months or so frequently see, because they perform quite well. NextDNS is up at the top of the list too, as are, you know, Quad9 and Google and all the familiar names. But it was fun. Yeah, I use NextDNS.
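The "block newly registered domains" option described above can be sketched in a few lines of Python. This is only an illustration of the idea, not how NextDNS or Control D actually implement it: the function names, the `registration_dates` lookup table and the 7-day and 30-day windows are all assumptions made for the example.

```python
from datetime import date, timedelta

# Hypothetical block windows, mirroring the "last week" / "last month" choices.
WINDOWS = {"last_week": timedelta(days=7), "last_month": timedelta(days=30)}


def is_newly_registered(registered_on, today, window="last_week"):
    """Return True if the registration date falls inside the chosen window."""
    age = today - registered_on
    return timedelta(0) <= age <= WINDOWS[window]


def filter_query(domain, registration_dates, today, window="last_week"):
    """Return "block" for a newly registered domain, otherwise "allow".

    registration_dates is a hypothetical dict of domain -> registration date.
    Domains with no known date are allowed, which is an assumption of this sketch.
    """
    registered_on = registration_dates.get(domain.lower().rstrip("."))
    if registered_on is not None and is_newly_registered(registered_on, today, window):
        return "block"
    return "allow"
```

A resolver doing this for real would need a feed of registration dates. The collateral damage mentioned in the screenshot shows up here as any legitimate domain whose date happens to fall inside the window.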

1:03:17 - Leo Laporte

Yeah, yeah.

1:03:18 - Steve Gibson

Yes, and I do too. In fact, there was a time a few months ago when I was experimenting with the throttling of DNS queries, and I experimentally removed all of the throttling from the benchmark and just let it go. Well, it turns out that I was using NextDNS as the DNS server on the workstation where I did this, and I thought, what? Like, something weird happened, and suddenly I had no access to the internet, and, you know, browsers wouldn't work, and blah blah. It turns out that without the throttling which the benchmark has always had, NextDNS detected a DNS attack. Oh, wow. It thought that the benchmark was attacking because so many queries were being run through it, and it just blocked my IP.

It was like oopsie.
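For anyone curious what throttling DNS queries can look like in practice, here is a minimal token-bucket sketch in Python. It is an assumption-laden illustration only: it is not the DNS Benchmark's actual throttle, and NextDNS's abuse detection is not public. The class name and the rate and burst numbers are made up for the example.

```python
class TokenBucket:
    """Minimal token-bucket rate limiter (illustrative, not any real product's code)."""

    def __init__(self, rate_per_sec, burst):
        self.rate = float(rate_per_sec)  # tokens added per second
        self.capacity = float(burst)     # maximum tokens that can accumulate
        self.tokens = float(burst)       # start with a full bucket
        self.last = 0.0                  # time of the previous call, in seconds

    def allow(self, now):
        """Return True if one query may be sent at time `now` (in seconds)."""
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False
```

A sender would call `allow()` before each query and wait when it returns False. With the throttle removed, every query goes out immediately, which is the kind of flood a resolver could reasonably mistake for an attack.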

1:04:25 - Leo Laporte

I guess I understand that yeah.

1:04:27 - Steve Gibson

Tyler wrote. He said: Dear Steve, I work in higher ed. I'm a one-man IT band at my regional location. I hear on the show all the time about successful phishing attacks and the fallout that comes. I just wanted to give a shout-out to end users who are proactive in stopping these attacks. My coworkers look at every link askance, he said, with a side eye, and every email with healthy skepticism. Good, yes. While it might be annoying getting asked regularly, quote, is this safe to click on, unquote, he says, I welcome the annoyance and routinely thank them for asking. So, as many IT professionals hear the show, he said, can we get a shout-out for all the end users that are doing the correct thing? Love the show, Tyler. And absolutely yes, I agree with you, Tyler. I bet everybody who listens to this show does that, right? Yeah, that's what you get when you listen to the show: some healthy paranoia. Well, and probably all of we listeners are also the ones who get asked, you know, "Is this safe?" by the people who are not listening.

Yeah, so we're all Tylers. I do think that the message has been received by many end users. I smiled when Tyler talked about his coworkers asking, is this safe to click on? Because while I don't have coworkers nearby, I do have Lori, and she will frequently, bless her heart, receive some things she is unsure about. And the good news is, as with Tyler's coworkers, she'll always ask me.

You know, the events are generally text messages which happen to mention some service that, by coincidence, she uses. And as we know, the spoofed messages are things like, your package is being held at the post office and it will be discarded unless you click this link. And it's like, what? What package? Oh, no. Or, your account is overdrawn and has been locked; to use it further, please click this link. So, anyway, she's become accustomed to me looking at the message and, you know, the link's phone number or the domain name and saying, eh, yeah, you could just delete that. That's nonsense. So, anyway, I'm glad that everybody is becoming much more aware of these threats. It just takes time for the awareness of this to percolate out into, you know, the user base.

Kesimir Cause in Croatia wrote: Regarding your proposed solution for secure conversation records retention, I see a few issues. He said it's not automatic. For example, it's still depending on someone adding this archiving agent to the group chat. And, he said, we know how one can rely on people abiding by procedures, LOL. He said also it will not cover one-to-one chats without adding an archiving agent.

He says, I guess that in the end, in regulated environments, there will still be a need for modified client programs (WhatsApp, Signal, Telegram, etc.) but with properly secured content delivery into an archive. In other words, there's still a need for what TeleMessage was trying to do but fumbled so badly. He said, for example, a public key is used in the apps to encrypt the payload, and a private key is used on the archive end to decrypt it. A publicly exposed endpoint can host a webpage with a QR code to configure the client: company name, API URL and public key. It has to be scanned from the app as a prerequisite for app activation, therefore forcing people to comply. He says, wish you all the best, go strong all the way to episode 2K and beyond. And, Leo, I hope this 2K and beyond doesn't become a meme, because...

1:08:48 - Leo Laporte

Uh-oh.

1:08:50 - Steve Gibson

I have a feeling it might. It's another 20 years, yikes. Anyway, Kesimir is certainly correct in observing that the Signal bot archiver would need to be deliberately added to any conversation, even when the conversation is just two-party, just, you know, me and you.

The Signal bot archiver approach worked for the model we were discussing, where responsible office holders would be obeying clearly written laws requiring that they archive their messages. So you could inadvertently forget, but it would hopefully become a habit. And typically, I think, people establish all these conversations and then rarely are creating them on an ongoing basis. So you end up with the Signal bot archiver participating in pretty much all that you're doing. But there is the problem of forgetting to add it. I get that. It would be possible to return to the use of a modified Signal app, although this approach avoided that. But such a modified Signal app would always automatically add the archiving Signal bot to any conversation. That way, government or corporate officials whose every conversation must be archived would have the convenience of having the Signal bot always tagging along. So anyway, just more good thoughts. And, Leo, I need the convenience of another sip of coffee because I've got a scratchy throat. Oh, please, Steve, you're such a wimp. You gotta.

I talk for hours and hours. That first piece went so long that it pushed our second sponsor back. You can't get it in.

1:10:56 - Leo Laporte

Get back on track here. I'm going to show you our sponsor for this portion of Security Now. I think you might recognize this. Oh, I sure do. Oh, baby, this here is my Thinkst Canary.

And this is super cool, man. This thing, for those of you listening, it looks like an external hard drive. You know, it's just a black case with a green light and that picture of a canary on it, but it also has an Ethernet jack and a power plug. That's it. But this little puppy, despite its looks, is something pretty amazing. It's a honeypot that looks like something really valuable to the bad guys. Not vulnerable, not like, oh, look at me, come in here. No, it looks like there might be something on there that somebody prowling your network might want. I think this is one of the best security devices ever because, after all, mostly the way that we consider security is as kind of a perimeter defense, a barbed wire fence surrounding our enterprise, and for so long we've just assumed that, oh well, that's all you need, right? Except that we now know that people get in. They get in because not every employee is as swift as Tyler's, not every user is as careful. Bad guys are out there in force. So once somebody is in your network, what are the chances you're going to catch them? All these hackers are pretty savvy these days. Probably the chances are you're not, and that's why you need a honeypot. That's what this is. A honeypot that, unlike the kind of honeypots we talked about with Steve Bellovin back in the day, is easy. These take no trouble.

You can deploy them in minutes. You have a drop-down menu that could choose any personality you want. For a long time it was a Synology NAS. It's so easy to change, I mean, you might change every day. Sometimes it's a Windows server, sometimes a Linux server. Sometimes it's got all the services turned on, lit up like a Christmas tree, sometimes just one, you know, maybe just RDP turned on or something.

This is my Thinkst Canary console, and I could see there've been port scans. You have more alerts than normal. Would you like to mass acknowledge them? Yes, mass acknowledge. I don't know what's going on. Here's the tokens. You know, you can make a variety of them. You can say this is an AWS API key. Of course it's not. It's a key, though, that if somebody tries to use it on your AWS, you get an alert. There's a bad guy using that phony key.

Look at all these things. A WireGuard VPN client config that alerts when connected. Here's a QR code that you could put out that will ping you when it's scanned. Now all of this goes back to the Thinkst Canary, which, as I thought, was a Windows Server 2019 Office file share. But if you get tired of that, hey, you're not stuck. It can be any number of things. Look at all this: an IIS server, a Linux proxy, an Oracle Enterprise Linux. It could be a FortiGate. It could be a MikroTik router. Steve, we know how good those are for attracting bad guys. It can even be a SCADA device. I mean, this thing, look at this. It could be a Hirschmann RS20 industrial switch. It can be anything.

But once you've configured it, man, the minute somebody touches it, somebody accesses those lure files or tries to brute force your fake internal SSH server or your Windows 2019 server, you're going to get an alert immediately that tells you you have a problem. No false alerts. You saw I had all those alerts. I think I was port scanning, so that was probably me doing that to myself. Unless, I don't know, maybe it was Russell getting in here. I think I maybe have to look at those alerts. See, you just choose a profile. You saw how easy it was. You register with the hosted console for monitoring and notifications. Then you put your hands back and you relax, you wait.

As soon as somebody breaches your network, whether it's a malicious insider or a hacker, some other adversary, you're going to know about it, and that's the key. You know, on average, companies don't know they've been breached for 91 days. That's three months. A bad guy could do the worst without you even knowing it. That's why you've got to have some Thinkst Canaries. Maybe, let's say, five. Okay, visit canary.tools/twit. Five Thinkst Canaries would cost you about $7,500 a year. By the way, some big banks have hundreds. It just depends on the size of your operation, you know, how much protection you want. You get, for the 7,500 bucks a year, five Thinkst Canaries and your own hosted console. You get upgrades, you get support, you get maintenance, and if you use the code TWIT, T-W-I-T, in the "How did you hear about us?" box, that's going to be 10% off, and not just for the first year. That's going to be forever. For as long as you are a Thinkst Canary user, 10% off.

Oh, one other thing that should reassure you. You might say, well, I don't know. You can always return your Thinkst Canaries with their two-month, 60-day money-back guarantee, and that's for a full refund. I should tell you, though, that we've been doing these ads about eight years. In all that time, that refund guarantee has never been claimed. No, because once you get these in, you say, how did I live without them? The Thinkst Canary. Visit canary.tools/twit, and put TWIT in the "How did you hear about us?" box. canary.tools/twit. I might turn port scan detection off, because I think that was me in there. You can do that. canary.tools/twit. Or maybe, Steve, this next segment I should spend a little time browsing around and see who's been scanning my ports.

1:16:56 - Steve Gibson

It's a form of flattery, Leo. That's it. That's it. It just tells the world you matter. You've got a full canary load of port detections.

1:17:06 - Leo Laporte

I got an SSH server on here. It's so much fun to set it up for different things. I love it. Anyway, on we go with the show. So, thank you, I've soothed my trembling vocal cords and I'm ready to go.

1:17:20 - Steve Gibson

Mike from Ireland said: Love the show. Stumbled across it some years back when wondering one day what happened to the lads from The Screen Savers, he says, a show I watched in my younger years. Yes, Mike, we were all younger back then. Little did we know.

1:17:40 - Leo Laporte

Don't make me show any video, because we really were younger.

1:17:44 - Steve Gibson

Yes, he says, being a solution architect for a large multinational telecommunications company in Europe. Now I immediately started listening to Security Now and it's provided invaluable info ever since. Thank you In regards to your piece last week on rogue telecommunications devices found in Chinese inverters. Very recently, the Iberian grid collapsed for an extended period.

1:18:12 - Leo Laporte

Yeah, Spain was offline.

1:18:15 - Steve Gibson

Yeah, it was blacked out. Yeah. 2.2 gigawatts of solar generation tripped out in the south of Spain. The country had been running on 90% renewable energy in the moments before the collapse. Wow. He said, I immediately wondered about the possibility of a cyber attack, but we're unlikely to ever find out if it was, I suppose. In any event, this collapse event does demonstrate how vulnerable inverter technology makes modern grids as we move away from the massive spinning machines of yesteryear to generate our electricity. The very nature of these massive machines (and there he's talking about hydroelectric turbines in dams) helps stabilize grids and maintain nominal AC frequency in the face of ever-fluctuating demand, and he's absolutely right about that. He says inverters, as you likely know, cannot perform the same task, as they are instead designed to match grid frequency. They cannot work to maintain nominal hertz, even as it drops under load. Seems we've naively introduced significant vulnerabilities into our grids in a race to meet net zero. What's the Chinese word for blitzkrieg, anyone? All the best, Mike.

1:19:41 - Leo Laporte

This guy has an ax to grind. I don't buy it. Well, because nobody's putting these inverters directly on the grid.

1:19:52 - Steve Gibson

You have a battery system or some other intermediate system storing the electricity, I'm sure. No, they are on the grid, and they did bring the grid down. Is that what happened in Spain? The inverters

1:19:57 - Leo Laporte

brought them down.

1:19:59 - Steve Gibson

No, it did happen last November in several locations. The inverters brought them down, sure. But I thought it was interesting, this whole issue of a grid and why running a large power grid really is almost as much art as science. I mean, obviously you need a lot of science. It turns out that having unused excess power capacity is expensive, because you still have to have it, and it's wasteful if it's not being paid for. The other factor is that moving large amounts of power over great distances is expensive due to transmission losses. So the way the grid is set up, large and distributed power grids are typically fed from many smaller local sources to prevent those transmission losses, while at the same time everything is also tied together, thus in a grid, so that instantaneous variations in demand, which are occurring constantly, can briefly be fed from other connected sources. So over time, if there is a net power flow across some particular power provider boundary, then somebody will be paying the other side for the difference, but it averages out overall over the long term. So it's all a large collective.

The reason a portion of a grid suddenly going down can deprive a much larger area of power is the inherent interdependence of these subgrids. They each depend upon the others and also provide to the others, but only at the margins. If a significant piece of a grid's power input were to suddenly disappear, the demand from all of those that are still drawing power from that grid in the region, which just before its disappearance was balanced between supply and demand, is still there. It's not going to disappear. This results in the grid's voltage, and to some degree its frequency, being pulled down, which can be catastrophic for many systems.

1:22:45 - Leo Laporte

It is far better to have no voltage than low voltage. In fact, the frequency drop was what they think caused the Spanish blackout. Ah, okay. Yeah. You know, it was a cascade of failures, which it often is, right?

1:23:00 - Steve Gibson

You know, we've all heard of the so-called brownout, rather than a blackout, which is what you don't want to have. Neighbors must quickly decide at what point to abandon that effort of, like, supporting the neighborhood in favor of saving themselves, so that they're not also brought down because they're trying to supply too much marginal power to someone they're connected to. And so when too much power is being pulled from an adjacent subgrid, that grid will quickly be cut off so that adequate power can continue to be supplied to the other subgrid's primary customers. So, you know, they want to help, but they just can't let themselves get hurt in the process. And, as we know, power failures can also occur during times of extreme demand, when the demand simply outstrips the supply.

During peak summer days, with many high-power consuming air conditioner compressors all running at the same time, it can occur that all the power generation available is still unable to keep the voltage and frequency up where they need to be.

So, because it's far better, again, to have no power than low power, the subgrid that's unable to meet demand will simply disconnect from its neighboring grids and pull the plug on all of its customers, and then, later in the day, once temperatures are lower, power can be carefully restored. So when shutdowns of power sources are planned in advance, as opposed to by surprise, such as when the sun sets on a solar farm, everyone knew that was going to happen. Right, the Earth continues rotating, so in that case a source of replacement power can be made ready and be switched in before the planned power source outage. So the real problem is one of surprise. You know, and since daytime power needs typically far outstrip nighttime needs, it might also be that without solar and wind power there just isn't sufficient backup to keep everything running. You know, if Spain is 90% renewable, that means it's really depending on that renewable power.
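The help-your-neighbor-or-save-yourself decision described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Subgrid` class, the `decide` function, the megawatt figures, and the 5% reserve margin are all hypothetical, and real grid protection acts on measured frequency and voltage rather than on a simple power budget like this one.

```python
from dataclasses import dataclass


@dataclass
class Subgrid:
    """Hypothetical model of one subgrid (names and units are assumptions)."""
    name: str
    capacity_mw: float       # generation currently available
    local_demand_mw: float   # demand from the subgrid's own customers


def decide(grid: Subgrid, export_request_mw: float,
           reserve_fraction: float = 0.05) -> str:
    """Return 'supply', 'cut_tie', or 'shed_load' for a neighbor's request.

    reserve_fraction is an assumed safety margin, not a real-world setting.
    """
    headroom = grid.capacity_mw - grid.local_demand_mw
    if headroom < 0:
        # Cannot even serve its own customers: no power beats low power.
        return "shed_load"
    reserve = grid.capacity_mw * reserve_fraction
    if export_request_mw > headroom - reserve:
        # Helping would endanger its own customers, so drop the tie line.
        return "cut_tie"
    return "supply"


grid_a = Subgrid("A", capacity_mw=1000.0, local_demand_mw=800.0)
print(decide(grid_a, 100.0))   # small request fits within the margin
print(decide(grid_a, 180.0))   # too much marginal power requested
print(decide(Subgrid("B", 1000.0, 1100.0), 0.0))  # demand outstrips supply
```

The point of the sketch is only the ordering of priorities: a subgrid serves its own customers first, lends at the margins second, and disconnects rather than letting itself be dragged down.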

1:26:08 - Leo Laporte

Yeah, but it was a bunch of failures that happened. You know, I think it's unfortunate to blame renewables for it, because, yeah, that's part of it. And Mike's right, there's no inertia as there would be with a big turbine. But there are fake inertia solutions out there. There are ways to simulate that kind of inertia.

1:26:30 - Steve Gibson

Well, and what was so cool too: remember that back in the day, when it was all hydroelectric power, you had to have synchronized generators, right? Because they all had to be running in sync. It's a complicated thing, isn't it? Oh my God. And what would happen is the actual AC frequency would fall during the day because the load on the generators would slow them down. They would all slow down synchronously, and so you would actually lose cycles during the day and your clocks would be off.

Very, very nominally, and then the engineers would make up for it at night by running the generators faster so that in a 24-hour period you had the same number of cycles.
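The cycle bookkeeping described above is simple arithmetic, and a minimal Python sketch makes it concrete. The 60 Hz nominal frequency, the 59.98 Hz daytime figure, the 16-hour/8-hour split, and the function names are all assumptions chosen for illustration, not numbers from the show.

```python
NOMINAL_HZ = 60.0  # assumed nominal grid frequency


def clock_error_seconds(actual_hz: float, hours: float,
                        nominal_hz: float = NOMINAL_HZ) -> float:
    """Seconds a cycle-counting clock gains (+) or loses (-) over `hours`."""
    seconds = hours * 3600.0
    cycles_delivered = actual_hz * seconds
    cycles_expected = nominal_hz * seconds
    # A synchronous clock advances one second per `nominal_hz` cycles.
    return (cycles_delivered - cycles_expected) / nominal_hz


def makeup_frequency(day_hz: float, day_hours: float, night_hours: float,
                     nominal_hz: float = NOMINAL_HZ) -> float:
    """Night frequency needed so the whole period has the nominal cycle count."""
    deficit_cycles = (nominal_hz - day_hz) * day_hours * 3600.0
    return nominal_hz + deficit_cycles / (night_hours * 3600.0)


day_error = clock_error_seconds(59.98, 16)        # clocks run slow all day
night_hz = makeup_frequency(59.98, 16, 8)         # run slightly fast at night
night_error = clock_error_seconds(night_hz, 8)    # clocks catch back up

print(f"daytime drift: {day_error:.1f} s")
print(f"night frequency: {night_hz:.2f} Hz")
print(f"net drift over 24 h: {day_error + night_error:.6f} s")
```

Under these assumed numbers, a 0.02 Hz sag for 16 hours costs a clock about 19 seconds, and running the generators about 0.04 Hz fast for the remaining 8 hours restores the missing cycles.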

1:27:18 - Leo Laporte

Wow, it feels, and maybe, I mean, I don't know anything about it, but it feels like these are solvable problems, that it's not an excuse to go back to, you know, oil generation. By the way, I just want to show... And no one, no one is suggesting that. These are solvable. The problem is that it's complicated. Unaccounted-for radios were found in inverters, and so that's a problem.

1:27:40 - Steve Gibson

Yeah, yeah. You know, my feeling is this is great. I was stunned to read that 200 gigawatts of power is now being generated. Amazing. As we talked about, I was always of the opinion that we were going to end up returning to nuclear in order to make it, because there's so much good technology now that we didn't have back when Three Mile Island and Chernobyl and things happened.

1:28:10 - Leo Laporte

I think economics will solve this, but the demand for AI energy is really powering nuclear at this point.

1:28:16 - Steve Gibson

Yeah, yes, yes.

1:28:18 - Leo Laporte

Hey, you want to see some young guys talking about the click of death. You want to see that.

1:28:26 - Steve Gibson

All the information is there that you need, but we're still not doing the online.

1:28:29 - Leo Laporte

I love that shirt. You still have it.

I think I do. Actually, I had that shirt but I did finally get rid of it. I had a lot of the Screen Savers shirts for a long time. Look at those monitors, by the way, all CRTs. State-of-the-art, Leo. This doctor program. And look at our hair. This was back when we had stylists. I would never let my hair look like that naturally. You had hair. Which is, they

don't... yeah, something, something different now. Wow, that's where we first started talking to you. This is a problem that happens to... I think it's a little sped up. We sound a little chipmunky. Anyway, that's all on YouTube from the old days. Very cool, I love those black cords. You remember the clothes you were wearing, that?

1:29:14 - Steve Gibson

is wild. Steve, Lorrie's figured out how tactile I am. Look, there we are with a Zip disk.

1:29:19 - Leo Laporte

Remember those? Oh, do I remember the click of death?

1:29:24 - Steve Gibson

So does Lorrie, like, buy you cords now, fuzzy cords all the time? No, no, but I do have comfies that are fuzzy, that I immediately switch into when I get home. She knows how tactile you are. Yeah, that's like a flannel shirt, soft corduroys. I think she...

1:29:40 - Leo Laporte

I think you're right. I didn't know this about you. Interesting. Wow, we had fun back then. My friend, you're a soft touch what's that all? Began. What's that? What am I showing there? What?

1:29:51 - Steve Gibson

There are two things that happened. Oh, that was the actual Zip disk that got sliced, look at it, by the defective heads on a Zip drive.

1:30:00 - Leo Laporte

That's what caused the click of death, that slice Wow.

1:30:05 - Steve Gibson

And so what would happen is a drive would do that to the disk and then you'd go why isn't the disk working? And you stick it into another drive and it would kill the drive. So it was like a physical virus.

1:30:19 - Leo Laporte

This is how we started, kids. Back in the day. Look at those webcams on top of the screen. Yeah, those are the 3Com netcams that they made for the ZDTV Netcam Network.

1:30:35 - Steve Gibson

See those? It was really funny. I remember you had a co-host, Kate Botello, yeah, and she had her little segment during The Screen Savers, each show. And so you said, well, so now, Kate, what have you got? And she said, well, I found this really interesting thing that tests your Internet security. And she said it's called ShieldsUP. And you were kind of only half paying attention because, you know, there was something off camera, somebody was trying to talk to you at the same time. And so she kind of got into it a little ways, and then suddenly you were looking at her and said, wait, our Steve? Well, I don't own you, Steve, I don't, but I do feel some measure of, kind of, proprietary... You know, because you're like, wait, he's a disk guy.

What does he know about security?

1:31:33 - Leo Laporte

What does he? ShieldsUP, that and SpinRite, your two longest-surviving tools, aren't they? Yeah. Look at that. There's a Zip drive with a click of death. That's how we first met. I don't think this was your first appearance, but it was shortly...

1:31:49 - Steve Gibson

I was on many times, of course. Then I became a regular when we were doing the Toronto stuff. That's right.

1:31:55 - Leo Laporte

And then when?

1:31:56 - Steve Gibson

It was during an inter-taping event that you said, so, how would you like to do a weekly podcast on security? I said, a whatcast?

1:32:11 - Leo Laporte

When's our 20th anniversary, Steve? I don't know.

1:32:13 - Steve Gibson

It's this fall. Yeah, it's coming up. Or August. I think it's August. I think it's like in a couple of months. Yeah, 20 years. It'll be very cool.

1:32:21 - Leo Laporte

Wow, all right. Do you want to get into this AI thing?

1:32:26 - Steve Gibson

We're still going to need to take a break in the middle of it, but let's do another sponsor now, and then we're going to plow into a number of interesting stories about artificial intelligence.

1:32:36 - Leo Laporte

I can't wait. I'm always interested. Your take is very good. Of course, you know we do an AI show every Wednesday, Intelligent Machines.

1:32:43 - Steve Gibson

But you have a different, you know, angle on it, and what's cool is that it's bringing you up to speed so that you're knowing what's going on.

1:32:52 - Leo Laporte

Oh, I mean, I'm using it all the time. Now I live on AI. You know, if I want to know about power generation, it's a great place to go. You know, ask Perplexity or something like that. Yep. Get a backgrounder pretty darn quick. It's very useful. Our show today brought to you by, oh, this is useful, Hoxhunt. H-O-X-H-U-N-T. I want to speak right now to the people... who was it? Was it Tyler who said thank you to my users who asked me? Tyler, this is for you and for all the people who wish their users asked them, is this safe to click on?

If you're a security leader, you have a job, right? You're paid to protect your company against cyber attacks. But that is a tough job. More cyber attacks than ever, and you know the bad guys are using AI now to generate phishing emails. Used to be, we could say, well, that's ungrammatical. Clearly that's phony. No, no, now they really, really look good. They fool a lot of employees, and that's the problem.

Legacy one-size-fits-all awareness programs don't stand a chance against the modern onslaught of AI-written email. In fact, these generic programs at most send maybe, I don't know, four generic trainings out a year. Most employees go, oh, that again, and ignore them. Or when somebody actually clicks, you know, the new guy clicks it, then they're forced into embarrassing training programs that feel like punishment. Of course this isn't working. That's why more and more organizations are trying Hoxhunt, some very big companies, because Hoxhunt has gamified this. They actually made awareness training fun. They go beyond traditional security awareness and they change behaviors by rewarding good clicks and coaching away the bad. So instead of going to Tyler and saying, can I click on this, whenever an employee looks at the email and says, is this a scam, Hoxhunt does it automatically, instantly. You know, they'll surround the email with a red thing. You get a dopamine rush that gets your people to click, learn and protect your company. What's wrong with this email? Right? As an admin, Hoxhunt makes it easy to automatically deliver these phishing simulations across not just email but Slack, Teams. They use AI, just like the bad guys are, to mimic the latest real-world attacks. And even more, the simulations are personalized to every employee, based on department, location and more, so they really are effective. And then, instead of going, okay, now you're going to watch a four-hour Flash video, you get instant micro-trainings that are fun. They solidify understanding and they drive lasting safe behavior.

You can trigger gamified security awareness training that awards employees with stars (you'd be surprised, they love this) and badges, boosting completion rates, ensuring compliance. In fact, I talked to the folks from Hoxhunt a couple of weeks ago. I had a great conversation. They said it's so much fun that employees are saying, send us more phishing emails. It's a game for them. I want to detect more. Send me more. That's great. When you have the employees saying, I want the training, you couldn't have a better situation.

Choose from a huge library of customizable training packages, but they also give you an AI tool so that you can generate your own, so you can mix it up any way you want. Hoxhunt, H-O-X-H-U-N-T. It's like Foxhunt with an H. It has everything you need to run effective security training in one platform, meaning it's easy to measurably reduce your human cyber risk at scale and, by the way, measurably is good for showing the boss, right? You don't have to take my word for it.

Over 3,000 user reviews on G2 make Hoxhunt the top-rated security training platform for the enterprise. They won easiest to use. They won best results. Also recognized as Customers' Choice by Gartner. Thousands of companies use it. Some of the biggest: Qualcomm uses it (you can imagine they're the target of a lot of phishing email), AES, Nokia. They use it to train millions of employees all over the globe. Visit hoxhunt.com/securitynow today to learn why modern, secure companies are making the switch to Hoxhunt. It's security training that's actually fun, that employees want more of.

How about that? hoxhunt.com/securitynow. We thank them so much for their support. It's when you get an employee saying, can you send us more of those phishing emails, that was fun, you know you're on the right track. All right, Steve, on we go, let's talk AI.

1:37:43 - Steve Gibson

So, okay, I feel a bit self-conscious preceding most of the news about AI with a disclaimer.