The Rise of Internet Foreground Radiation

AI-created, human-edited.

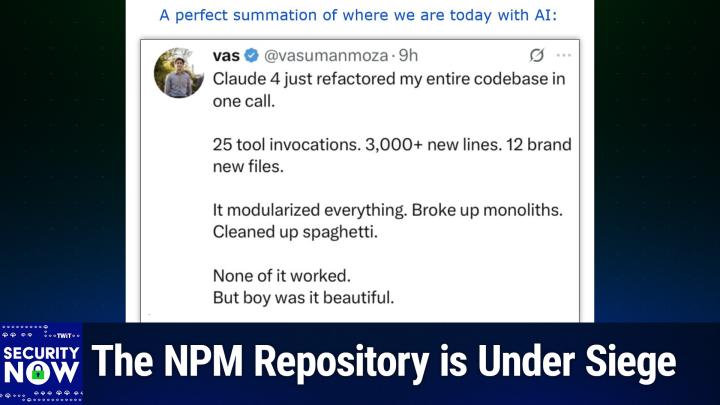

In a recent episode of Security Now, cybersecurity expert Steve Gibson introduced a chilling new concept that should concern every website owner: "Internet Foreground Radiation." Unlike the passive "Internet Background Radiation," a term he coined 26 years ago, this new phenomenon represents a coordinated, intentional assault on web infrastructure that begins the moment any new site goes online.

Gibson originally coined the term "Internet Background Radiation" in 1999 to describe the random network noise that would flow into IP addresses, much like cosmic background radiation fills space. This background radiation was largely unintentional, often coming from forgotten infected servers or legacy systems sending packets aimlessly across the internet.

Today's reality is far more sinister. As Gibson explained to host Leo Laporte, "Internet Foreground Radiation" represents deliberate, coordinated scanning operations targeting new websites with malicious intent. The key difference? Purpose and speed.

Research from HUMAN Security revealed a startling truth: bots, not people, are the first visitors to new websites. Their honeypot experiments showed that web scanners consistently dominated early traffic to new sites, making up 100% of detected bot visitors on some days and averaging 70% of all bot traffic in the first days after a site goes live.

"Never assume that any security can be added after any portion of a new site goes live," Gibson warned. The entire internet has essentially become "a loaded and cocked mousetrap ready to spring and capture at the slightest provocation."

The sophistication of these scanning operations has evolved dramatically. Threat actors now monitor multiple intelligence sources to identify new targets:

- Newly Registered Domain (NRD) feeds: Lists of recently registered, updated, or dropped domains

- Certificate Transparency logs: Public logs of newly issued TLS certificates, which services like CertStream relay in real time

- Search engine indexing: Large-scale SEO crawlers that help scanners identify new listings

Gibson noted an interesting defensive possibility: wildcard certificates provide some obscurity since they don't reveal specific hostnames, though he cautioned against relying on this alone for security.
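To make the wildcard point concrete, here is a minimal sketch of how someone watching certificate logs might turn the names on a certificate into scan targets. The function name and sample hostnames are hypothetical, and a real Certificate Transparency entry carries far more than a list of names; this only illustrates what each kind of certificate discloses.

```python
def hostnames_revealed(san_entries: list[str]) -> dict[str, list[str]]:
    """Split a certificate's DNS names into exact hostnames and wildcard parents."""
    exact, wildcard_parents = [], []
    for name in san_entries:
        name = name.strip().lower()
        if name.startswith("*."):
            # A wildcard discloses only the parent domain, not the hosts under it.
            wildcard_parents.append(name[2:])
        elif name:
            # An exact name hands the watcher a ready-made target.
            exact.append(name)
    return {
        "exact": sorted(set(exact)),
        "wildcard_parents": sorted(set(wildcard_parents)),
    }


# Hypothetical certificates for the same site, issued two different ways.
per_host_cert = hostnames_revealed(["staging.example.com", "admin.example.com"])
wildcard_cert = hostnames_revealed(["*.example.com", "example.com"])

print(per_host_cert)
print(wildcard_cert)
```

The per-host certificate exposes both internal hostnames, while the wildcard certificate exposes only the parent domain. As Gibson cautioned, that is obscurity rather than protection: the hosts can still be discovered by other means.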

One of the most technical insights from the discussion involved Server Name Indication (SNI) and how it has changed the scanning landscape. With more than 90% of websites now sharing IP addresses behind proxies and CDNs, simple IP scanning is no longer sufficient. Scanners must now know specific domain names to establish successful TLS handshakes.
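The role of the hostname can be seen without touching the network. The sketch below uses Python's standard `ssl` module with in-memory buffers to produce the first message of a TLS handshake (the ClientHello) and checks whether the requested name appears in it. The hostname `new-site.example` and the helper name are placeholders, and the sketch assumes a standard handshake without Encrypted Client Hello.

```python
import ssl


def client_hello_bytes(hostname: str) -> bytes:
    """Return the raw ClientHello a TLS client would send for `hostname`."""
    ctx = ssl.create_default_context()
    incoming, outgoing = ssl.MemoryBIO(), ssl.MemoryBIO()
    # server_hostname is what populates the SNI extension.
    tls = ctx.wrap_bio(incoming, outgoing, server_hostname=hostname)
    try:
        tls.do_handshake()
    except ssl.SSLWantReadError:
        # Expected: no server is attached, so the handshake pauses
        # after the client has written its ClientHello.
        pass
    return outgoing.read()


hello = client_hello_bytes("new-site.example")
print("hostname visible in ClientHello:", b"new-site.example" in hello)
```

Because the server behind a shared IP address uses this name to pick the right site and certificate, a scanner that only knows the IP address has nothing meaningful to put in that field.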

However, as Gibson pointed out, this hasn't significantly hindered malicious actors. They've adapted by monitoring domain registration feeds and certificate transparency logs to maintain their first-mover advantage.

The research revealed disturbing patterns in what these bots target most aggressively:

- 33.6% target .env files: These often contain API keys, database passwords, and other secrets

- 33.5% seek Git secrets: Repository data that could expose source code or credentials

- 23.4% probe common PHP files: Looking for configuration details and version information

Together, these three categories account for just over 90% of all website probes (33.6% + 33.5% + 23.4% = 90.5%), a clear indication of malicious intent, since no legitimate visitor would request such files.
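For site owners who do keep logs, requests in these three categories are easy to pick out. The following is a rough sketch rather than a complete detector: the patterns, labels, and sample paths are illustrative assumptions, not the rules used in the research.

```python
import re
from collections import Counter

# Illustrative patterns for the three probe categories described above.
PROBE_PATTERNS = {
    "env_file": re.compile(r"(^|/)\.env(\.[\w.-]+)?$", re.IGNORECASE),
    "git_data": re.compile(r"(^|/)\.git(/|$)", re.IGNORECASE),
    "php_file": re.compile(r"\.php$", re.IGNORECASE),
}


def classify_probe(path: str) -> str:
    """Label a requested URL path as one of the probe categories, or 'other'."""
    path = path.split("?", 1)[0]  # ignore any query string
    for label, pattern in PROBE_PATTERNS.items():
        if pattern.search(path):
            return label
    return "other"


# Hypothetical request paths, as they might appear in an access log.
sample_paths = [
    "/.env",
    "/app/.env.production",
    "/.git/config",
    "/phpinfo.php",
    "/wp-login.php?redirect=1",
    "/index.html",
]

counts = Counter(classify_probe(p) for p in sample_paths)
print(counts)
```

On a site that serves no PHP and has no reason to expose `.env` or `.git` paths, any non-zero count in the first three categories is almost certainly scanner traffic.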

Gibson's analysis painted a sobering picture of modern web security. The signal-to-noise ratio for legitimate traffic has become so poor that GRC's servers don't even log website activity. "The only way to be safe is to assume that everything is malicious and be prepared for that," he stated.

This represents a fundamental shift from the early internet's "insecure by default" mentality. Where once it was acceptable—even common—to deploy systems without passwords or basic security measures, today's internet demands a "secure by default" approach from the moment any service goes online.

Gibson offered several practical recommendations:

- IP-based filtering: Block all access initially, then selectively allow trusted IPs

- Trap mechanisms: Set up honeypots that blacklist IPs requesting files your site doesn't use

- Never assume temporary security: Don't deploy anything publicly that isn't fully hardened

- Behavior analysis: Monitor for suspicious patterns like rapid successive requests
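Three of these recommendations (default-deny filtering, trap paths, and spotting rapid successive requests) can be combined in a small gatekeeper. This is a minimal sketch under stated assumptions, not Gibson's implementation: the class name, thresholds, and trap paths are hypothetical, and the IP addresses come from ranges reserved for documentation.

```python
import time
from collections import defaultdict, deque


class RequestGate:
    """Default-deny gate with trap paths and a sliding-window rate check."""

    def __init__(self, allowed_ips, trap_paths, max_requests=20, window_seconds=10.0):
        self.allowed_ips = set(allowed_ips)
        self.trap_paths = set(trap_paths)
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.blocked_ips = set()
        self.recent = defaultdict(deque)  # ip -> timestamps of recent requests

    def check(self, ip, path, now=None):
        """Return 'allowed', 'denied', or 'blocked' for one request."""
        now = time.monotonic() if now is None else now
        if ip in self.blocked_ips:
            return "blocked"
        if path in self.trap_paths:
            # The site never serves these paths, so asking for one is a tell.
            self.blocked_ips.add(ip)
            return "blocked"
        if ip not in self.allowed_ips:
            return "denied"  # block everything that is not explicitly trusted
        hits = self.recent[ip]
        hits.append(now)
        while hits and now - hits[0] > self.window_seconds:
            hits.popleft()  # drop timestamps that fell out of the window
        if len(hits) > self.max_requests:
            self.blocked_ips.add(ip)  # too many requests in too short a time
            return "blocked"
        return "allowed"


gate = RequestGate(
    allowed_ips={"203.0.113.10"},
    trap_paths={"/.env", "/.git/config"},
    max_requests=3,
    window_seconds=1.0,
)
print(gate.check("203.0.113.10", "/", now=0.0))      # trusted address
print(gate.check("198.51.100.7", "/", now=0.0))      # unknown address
print(gate.check("198.51.100.7", "/.env", now=0.1))  # unknown address hits a trap
```

Checking trap paths before the allow list means even a trusted address is blocked if it requests a trap file. That ordering is a design choice in this sketch, and a real deployment might prefer to alert on that case instead.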

As Leo Laporte reflected, this evolution mirrors broader societal changes: "I remember a time when no one locked their doors and children would play out in the street with absolutely no fear at all. Times have changed, Steve."

The internet, once a "friendly neighborhood," has become a hostile environment where vigilance is not just recommended but essential for survival.

The research highlighted in this Security Now episode represents more than just technical analysis—it's a wake-up call for anyone operating web infrastructure. The days of "soft launches" or gradual security implementation are over. In today's internet landscape, security must be baked in from day one, because the bots are already watching, waiting, and ready to attack.

The shift from background to foreground radiation marks a new era in cybersecurity, one where the assumption of good faith has been permanently replaced by the reality of coordinated, automated threats operating at internet scale.